TidyModels

Read PostIn this post we will follow the book Tidy Modeling with R.

Contents:

- Data Analysis Process

- Ames Housing Data

- Splitting Data

- Fitting Model

- Model Workflow

- Recipes

- Metrics

- Comparing Models

- Resampling

- Model Tuning

- Screening Multiple Models

- Dimensionality Reduction

- Encoding Categorical Data

- Explaining Models

- Prediction Quality

- Ensembles of Models

- Inferential Analysis

- See Also

- References

Data Analysis Process

The general phases of data analysis are:

- Exploratory Data Analysis (EDA)

- Feature Engineering

- Model Tuning and Selection

- Model Evaluation

As an example, Kuhn and Johnson use data to model the daily ridership of Chicago’s public train system. These are the hypothetical inner monologues in chronological order:

1) EDA

- The daily ridership values between stations seemed extremely correlated

- Weekday and weekend ridership look very different

- One day in the summer of 2010 has an abnormally large number of riders

- Which stations had the lowest daily ridership values?

2) Feature Engineering

- Dates should at least be encoded as day-of-the-week and year

- Maybe PCA could be used on the correlated predictors to make it easier for the models to use them

- Hourly weather records should probably be averaged into daily measurements

3) Model Fitting

- Let’s start with simple linear regression, K-nearest neighbors, and a boosted decision tree

4) Model Tuning

- How many neighbors should be used?

- Should we run a lot of boosting iterations or just a few?

- How many neighbors seemed to be optimal for these data?

5) Model Evaluation

- Which models have the lowest root mean squared errors?

6) EDA

- Which days were poorly predicted?

7) Model Evaluation

- Variable importance scores indicate that the weather information is not predictive. We’ll drop them from the next set of models.

- It seems like we should add a lot of boosting iterations for that model

8) Feature Engineering

- We need to encode holiday features to improve predictions

9) Model Evaluation

- Let’s drop KNN from the model list

Ames Housing Data

In this section, we’ll look into the Ames housing data set and do exploratory data analysis. The data set contains information on 2,930 properties in Ames.

library(tidymodels)

dim(ames)

> [1] 2930 74Selecting the first 5 out of 74 columns:

> ames |>

select(1:5)

# A tibble: 2,930 × 5

MS_SubClass MS_Zoning Lot_Frontage Lot_Area Street

<fct> <fct> <dbl> <int> <fct>

1 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 141 31770 Pave

2 One_Story_1946_and_Newer_All_Styles Residential_High_Density 80 11622 Pave

3 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 81 14267 Pave

4 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 93 11160 Pave

5 Two_Story_1946_and_Newer Residential_Low_Density 74 13830 Pave

6 Two_Story_1946_and_Newer Residential_Low_Density 78 9978 Pave

7 One_Story_PUD_1946_and_Newer Residential_Low_Density 41 4920 Pave

8 One_Story_PUD_1946_and_Newer Residential_Low_Density 43 5005 Pave

9 One_Story_PUD_1946_and_Newer Residential_Low_Density 39 5389 Pave

10 Two_Story_1946_and_Newer Residential_Low_Density 60 7500 Pave

# ℹ 2,920 more rows

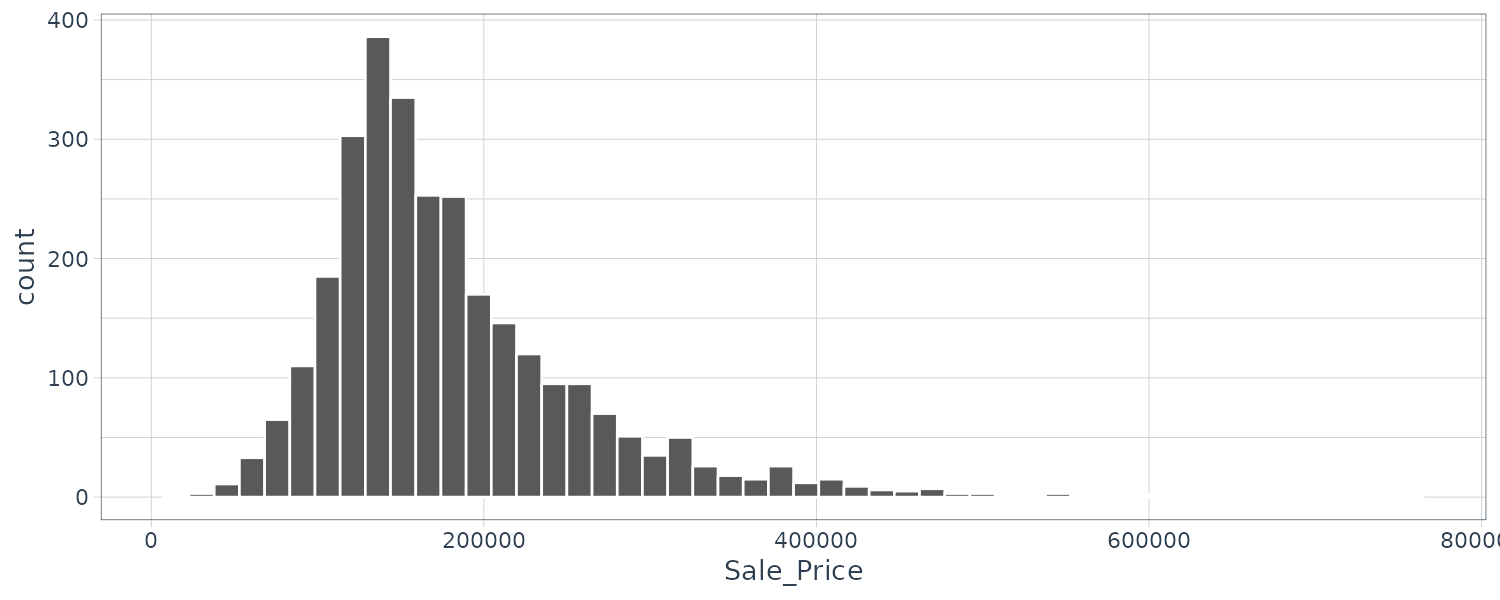

# ℹ Use `print(n = ...)` to see more rowsggplot(

ames,

aes(x = Sale_Price)

) +

geom_histogram(

bins = 50,

col = "white"

)

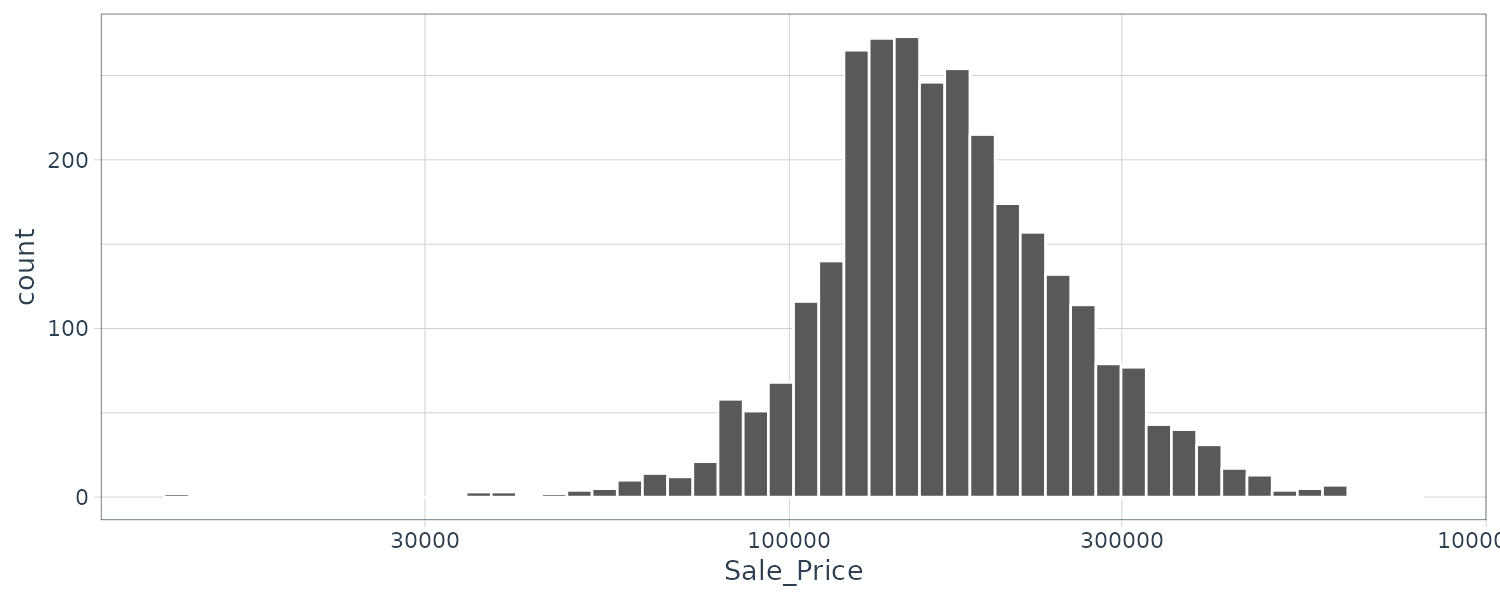

As we can see the data is skew to the right (there are more inexpensive house than super expensive ones). Let’s do a log transformation:

ggplot(

ames,

aes(x = Sale_Price)

) +

geom_histogram(

bins = 50,

col = "white"

) |>

scale_x_log10()

We will add the log transformation as a new outcome column:

ames <- ames |>

mutate(

Sale_Price = log10(Sale_Price)

)Splitting Data

Splitting the data into 80% training set and 20% test set using random sampling via the rsample package:

set.seed(501)

ames_split <- initial_split(

ames,

prop = 0.80

)

> ames_split

<Training/Testing/Total>

<2344/586/2930>When there is a large class imbalance in classification, it is better to use stratified sampling. The training/test split is conducted separately within each class. For regression problems, the outcome variable can be binned into quartiles, and then stratified sampling can be conducted 4 separate times.

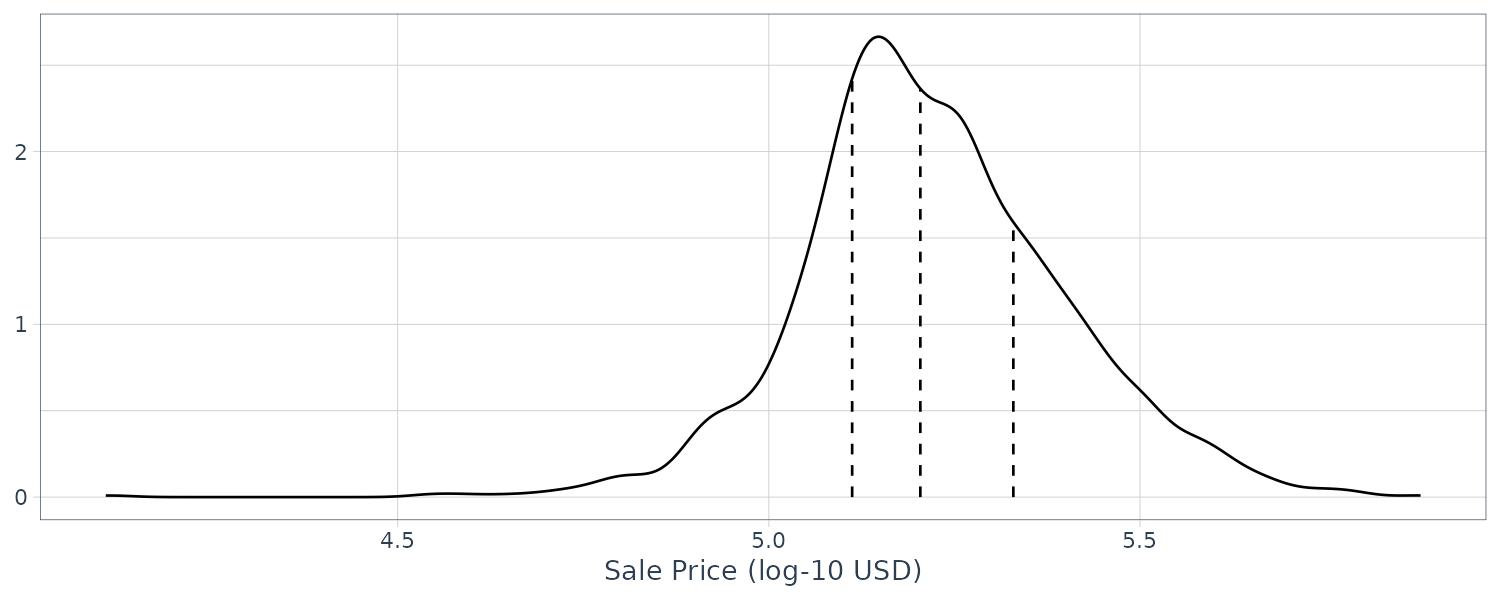

Let’s create the density distribution and quartiles:

sale_dens <- density(

ames$Sale_Price,

n = 2^10

) |>

tidy()

> sale_dens

# A tibble: 1,024 × 2

x y

<dbl> <dbl>

1 4.02 0.0000688

2 4.02 0.0000836

3 4.02 0.000101

4 4.02 0.000122

5 4.03 0.000147

6 4.03 0.000176

7 4.03 0.000210

8 4.03 0.000250

9 4.03 0.000296

10 4.04 0.000348

# ℹ 1,014 more rows

# ℹ Use `print(n = ...)` to see more rows

quartiles <- quantile(

ames$Sale_Price,

probs = c(0.25, 0.50, 0.75)

)

quartiles <- tibble(

prob = c(0.25, 0.50, 0.75),

value = unname(quartiles)

)

# linearly interpolate given datapoints

quartiles$y <- approx(

sale_dens$x,

sale_dens$y,

xout = quartiles$value

)$y

> quartiles

# A tibble: 3 × 3

prob value y

<dbl> <dbl> <dbl>

1 0.25 5.11 2.42

2 0.5 5.20 2.36

3 0.75 5.33 1.59We can graph the quartiles using the following code:

ggplot(

ames,

aes(x = Sale_Price)

) +

geom_line(stat = "density") +

geom_segment(

data = quartiles,

aes(

x = value, xend = value,

y = 0, yend = y

),

lty = 2

) +

labs(

x = "Sale Price (log-10 USD)",

y = NULL

) +

theme_tq()

In our Ames case, expensive houses are rarer, so we can use the following stratfied sampling instead:

ames_split <- initial_split(

ames,

prop = 0.80,

strata = Sale_Price

)Extracting the training and test sets:

ames_train <- training(ames_split)

ames_test <- testing(ames_split)For time series data, we would use:

initial_time_split(

ames,

prop = 0.80

)It assumes that the data have been presorted in choronological order.

Fitting Model

We are going to fit models using parsnip:

lm_model <- linear_reg() |>

set_engine("lm")

lm_form_fit <- lm_model |>

fit(

Sale_Price ~ Longitude + Latitude,

data = ames_train

)

> lm_form_fit

parsnip model object

Call:

stats::lm(formula = Sale_Price ~ Longitude + Latitude, data = data)

Coefficients:

(Intercept) Longitude Latitude

-308.415 -2.074 2.842

lm_xy_fit <- lm_model |>

fit_xy(

x = ames_train |>

select(Longitude, Latitude),

y = ames_train |>

pull(Sale_Price)

)

> lm_xy_fit

parsnip model object

Call:

stats::lm(formula = ..y ~ ., data = data)

Coefficients:

(Intercept) Longitude Latitude

-294.660 -1.984 2.714 Parsnip enables a consistent model interface for different packages. For example, using the ranger package:

rand_fit <- rand_forest(

trees = 1000,

min_n = 5

) |>

set_engine("ranger") |>

set_mode("regression")

> rand_fit |>

translate()

Random Forest Model Specification (regression)

Main Arguments:

trees = 1000

min_n = 5

Computational engine: ranger

Model fit template:

ranger::ranger(x = missing_arg(), y = missing_arg(), weights = missing_arg(),

num.trees = 1000, min.node.size = min_rows(~5, x), num.threads = 1,

verbose = FALSE, seed = sample.int(10^5, 1))Using Model Results

fit <- lm_form_fit |>

extract_fit_engine()

> fit

Call:

stats::lm(formula = ..y ~ ., data = data)

Coefficients:

(Intercept) Longitude Latitude

-294.660 -1.984 2.714

> summary(fit)

Call:

stats::lm(formula = ..y ~ ., data = data)

Residuals:

Min 1Q Median 3Q Max

-1.03203 -0.09707 -0.01845 0.09851 0.51067

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -294.6600 14.5168 -20.30 <0.0000000000000002 ***

Longitude -1.9839 0.1309 -15.16 <0.0000000000000002 ***

Latitude 2.7145 0.1792 15.15 <0.0000000000000002 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.1613 on 2339 degrees of freedom

Multiple R-squared: 0.1613, Adjusted R-squared: 0.1606

F-statistic: 225 on 2 and 2339 DF, p-value: < 0.00000000000000022The broom::tidy() function converts the result into tibble:

> tidy(fit)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) -295. 14.5 -20.3 1.68e-84

2 Longitude -1.98 0.131 -15.2 1.37e-49

3 Latitude 2.71 0.179 15.1 1.59e-49Predictions

ames_test_small <- ames_test |>

slice(1:5)

> predict(

lm_form_fit,

new_data = ames_test_small

)

# A tibble: 5 × 1

.pred

<dbl>

1 5.22

2 5.27

3 5.27

4 5.24

5 5.24Merging the results from multiple call to predict:

> ames_test_small |>

select(Sale_Price) |>

bind_cols(

predict(

lm_form_fit,

ames_test_small

)

) |>

bind_cols(

predict(

lm_form_fit,

ames_test_small,

type = "pred_int"

)

)

# A tibble: 5 × 4

Sale_Price .pred .pred_lower .pred_upper

<dbl> <dbl> <dbl> <dbl>

1 5.39 5.22 4.90 5.53

2 5.28 5.27 4.96 5.59

3 5.27 5.27 4.95 5.59

4 5.06 5.24 4.92 5.56

5 5.26 5.24 4.92 5.56Let’s fit the same data using random forest. The steps are the same:

tree_model <- decision_tree(min_n = 2) |>

set_engine("rpart") |>

set_mode("regression")

tree_fit <- tree_model |>

fit(

Sale_Price ~ Longitude + Latitude,

data = ames_train

)

ames_test_small |>

select(Sale_Price) |>

bind_cols(

predict(

tree_fit,

ames_test_small

)

)Model Workflow

Workflow is similar to pipelines in mlr3.

lm_wflow <- workflow() |>

add_model(

linear_reg() |>

set_engine("lm")

) |>

add_formula(Sale_Price ~ Longitude + Latitude)

> lm_wflow

══ Workflow ══════════════════════════════════════

Preprocessor: Formula

Model: linear_reg()

── Preprocessor ──────────────────────────────────

Sale_Price ~ Longitude + Latitude

── Model ─────────────────────────────────────────

Linear Regression Model Specification (regression)

Computational engine: lm

lm_fit <- fit(lm_wflow, ames_train)

> predict(

lm_fit,

ames_test |> slice(1:3)

)

# A tibble: 3 × 1

.pred

<dbl>

1 5.22

2 5.21

3 5.28Workflow can be updated as well:

lm_fit |>

update_formula(Sale_Price ~ Longitude) Or adding formulas:

lm_wflow <- lm_wflow |>

remove_formula() |>

add_variables(

outcome = Sale_Price,

predictors = c(

Longitude,

Latitude

)

)And using general selectors:

predictors = c(ends_with("tude"))

predictors = everything()

lm_wflow <- lm_wflow |>

remove_formula() |>

add_variables(

outcome = Sale_Price,

predictors = predictors

)Special Formulas and Inline Functions

Some models use extended formulas that base R functions cannot parse or execute. To get around this problem is to first add the variables and the supplementary model formula afterwards:

library(lme4)

library(multilevelmod)

data(Orthodont, package = "nlme")

multilevel_spec <- linear_reg() |>

set_engine("lmer")

multilevel_workflow <- workflow() |>

add_variables(

outcome = distance,

predictors = c(

Sex, age,

Subject

)

) |>

add_model(

multilevel_spec,

formula = distance ~ Sex + (age | Subject)

)

multilevel_fit <- fit(

multilevel_workflow,

data = Orthodont

)

> multilevel_fit

══ Workflow [trained] ══════════════════════════

Preprocessor: Variables

Model: linear_reg()

── Preprocessor ────────────────────────────────

Outcomes: distance

Predictors: c(Sex, age, Subject)

── Model ───────────────────────────────────────

Linear mixed model fit by REML ['lmerMod']

Formula: distance ~ Sex + (age | Subject)

Data: data

REML criterion at convergence: 471.1635

Random effects:

Groups Name Std.Dev. Corr

Subject (Intercept) 7.3912

age 0.6943 -0.97

Residual 1.3100

Number of obs: 108, groups: Subject, 27

Fixed Effects:

(Intercept) SexFemale

24.517 -2.145 And using strata() function from the survival package for survival analysis:

library(censored)

parametric_spec <- survival_reg()

parametric_workflow <- workflow() |>

add_variables(

outcome = c(fustat, futime),

predictors = c(

age,

rx

)

) |>

add_model(

parametric_spec,

formula = Surv(futime, fustat) ~ age + strata(rx)

)

parametric_fit <- fit(

parametric_workflow,

data = ovarian

)

> parametric_fit

══ Workflow [trained] ═══════════════════════════════════════════════

Preprocessor: Variables

Model: survival_reg()

── Preprocessor ─────────────────────────────────────────────────────

Outcomes: c(fustat, futime)

Predictors: c(age, rx)

── Model ────────────────────────────────────────────────────────────

Call:

survival::survreg(formula = Surv(futime, fustat) ~ age + strata(rx),

data = data, model = TRUE)

Coefficients:

(Intercept) age

12.8734120 -0.1033569

Scale:

rx=1 rx=2

0.7695509 0.4703602

Loglik(model)= -89.4 Loglik(intercept only)= -97.1

Chisq= 15.36 on 1 degrees of freedom, p= 0.0000888

n= 26 Creating Multiple Workflows at Once

We can create a set of formulas:

location <- list(

longitude = Sale_Price ~ Longitude,

latitude = Sale_Price ~ Latitude,

coords = Sale_Price ~ Longitude + Latitude,

neighborhood = Sale_Price ~ Neighborhood

)These representations can be crossed with one or more models (in this case lm and glm):

library(workflowsets)

location_models <- workflow_set(

preproc = location,

models = list(

lm = lm_model,

glm = glm_model

)

)

> location_models

# A workflow set/tibble: 8 × 4

wflow_id info option result

<chr> <list> <list> <list>

1 longitude_lm <tibble [1 × 4]> <opts[0]> <list [0]>

2 longitude_glm <tibble [1 × 4]> <opts[0]> <list [0]>

3 latitude_lm <tibble [1 × 4]> <opts[0]> <list [0]>

4 latitude_glm <tibble [1 × 4]> <opts[0]> <list [0]>

5 coords_lm <tibble [1 × 4]> <opts[0]> <list [0]>

6 coords_glm <tibble [1 × 4]> <opts[0]> <list [0]>

7 neighborhood_lm <tibble [1 × 4]> <opts[0]> <list [0]>

8 neighborhood_glm <tibble [1 × 4]> <opts[0]> <list [0]> And extract a particular model based on id:

extract_workflow(

location_models,

id = "coords_lm"

)The columns option and result are populated with specific type of objects that result from resampling.

For now we fit each models by using map():

location_models <- location_models |>

mutate(

fit = map(

info,

~ fit(.x$workflow[[1]], ames_train)

)

)

> location_models

# A workflow set/tibble: 8 × 5

wflow_id info option result fit

<chr> <list> <list> <list> <list>

1 longitude_lm <tibble [1 × 4]> <opts[0]> <list [0]> <workflow>

2 longitude_glm <tibble [1 × 4]> <opts[0]> <list [0]> <workflow>

3 latitude_lm <tibble [1 × 4]> <opts[0]> <list [0]> <workflow>

4 latitude_glm <tibble [1 × 4]> <opts[0]> <list [0]> <workflow>

5 coords_lm <tibble [1 × 4]> <opts[0]> <list [0]> <workflow>

6 coords_glm <tibble [1 × 4]> <opts[0]> <list [0]> <workflow>

7 neighborhood_lm <tibble [1 × 4]> <opts[0]> <list [0]> <workflow>

8 neighborhood_glm <tibble [1 × 4]> <opts[0]> <list [0]> <workflow> Evaluating the Test Set

To test on the test set, we could invoke the convenience function last_fit() to fit on the training set and evaluate it on the test set:

final_lm_res <- last_fit(

lm_wflow,

ames_split

)

> final_lm_res

# Resampling results

# Manual resampling

# A tibble: 1 × 6

splits id .metrics .notes .predictions .workflow

<list> <chr> <list> <list> <list> <list>

1 <split [2342/588]> train/test split <tibble [2 × 4]> <tibble [0 × 3]> <tibble> <workflow> The .workflow column contains the fitted workflow and can be extracted:

fitted_lm_wflow <- extract_workflow(final_lm_res)

> fitted_lm_wflow

══ Workflow [trained] ═══════════════════

Preprocessor: Formula

Model: linear_reg()

── Preprocessor ─────────────────────────

Sale_Price ~ Longitude + Latitude

── Model ────────────────────────────────

Call:

stats::lm(formula = ..y ~ ., data = data)

Coefficients:

(Intercept) Longitude Latitude

-308.618 -2.083 2.826 And the performance metrics and predictions:

> collect_metrics(final_lm_res)

# A tibble: 2 × 4

.metric .estimator .estimate .config

<chr> <chr> <dbl> <chr>

1 rmse standard 0.160 Preprocessor1_Model1

2 rsq standard 0.176 Preprocessor1_Model1

> collect_predictions(final_lm_res)

# A tibble: 588 × 5

id .pred .row Sale_Price .config

<chr> <dbl> <int> <dbl> <chr>

1 train/test split 5.29 5 5.28 Preprocessor1_Model1

2 train/test split 5.24 19 5.15 Preprocessor1_Model1

3 train/test split 5.26 23 5.33 Preprocessor1_Model1

4 train/test split 5.24 30 4.98 Preprocessor1_Model1

5 train/test split 5.32 38 5.49 Preprocessor1_Model1

6 train/test split 5.32 41 5.34 Preprocessor1_Model1

7 train/test split 5.30 53 5.20 Preprocessor1_Model1

8 train/test split 5.30 60 5.52 Preprocessor1_Model1

9 train/test split 5.29 61 5.55 Preprocessor1_Model1

10 train/test split 5.29 65 5.34 Preprocessor1_Model1

# ℹ 578 more rows

# ℹ Use `print(n = ...)` to see more rowsRecipes

Feature engineering includes transformations and encoding of data. The recipes package can be used to combine different feature engineering and preprocessing tasks into a single object and then apply transformation to it.

Encoding Qualitative Data in a Numeric Format

step_unknown() can be used to change missing values to a dedicated factor level.

step_novel() can allocate a new level that are not encountered in training data.

step_other() can be used to analyze the frequencies of the factor levels and convert infrequently occurring values to a level of “other”, with a specified threshold.

step_dummy() creates dummy variables and has a recipe naming convention.

There are other formats such as Feature Hashing and Effects/Likelihood encodings that we shall cover later.

For interaction, in the ames example, step_interact(~ Gr_Liv_Area:startswith(“Bldg_Type_”)) make dummy variables and form the interactions. In this case, an example a interaction column is Gr_Liv_Area_x_Bldg_Type_Duplex.

Let’s create a recipe that:

- log transformed “Gr_Liv_Area”

- create dummy variables for all nominal predictors

- lumping the bottom 1% of neighborhoods in terms of counts into “other” level

- interaction between Gr_Liv_Area x Bldg_Type

- spline for Latitutde

simple_ames <- recipe(

Sale_Price ~ Neighborhood +

Gr_Liv_Area +

Year_Built +

Bldg_Type +

Latitude,

data = ames_train

) |>

step_log(

Gr_Liv_Area,

base = 10

) |>

step_other(

Neighborhood,

threshold = 0.01

) |>

step_dummy(

all_nominal_predictors()

) |>

step_interact(

~ Gr_Liv_Area:starts_with("Bldg_Type_")

) |>

step_ns(

Latitude,

deg_free = 20

)

> simple_ames

── Recipe ─────────────────────────────────────────────────

── Inputs

Number of variables by role

outcome: 1

predictor: 5

── Operations

• Log transformation on: Gr_Liv_Area

• Collapsing factor levels for: Neighborhood

• Dummy variables from: all_nominal_predictors()

• Interactions with: Gr_Liv_Area:starts_with("Bldg_Type_")

• Natural splines on: LatitudeAdding reciple to workflow:

lm_wflow <- workflow() |>

add_model(

linear_reg() |>

set_engine("lm")

) |>

add_recipe(simple_ames)

> lm_wflow

══ Workflow ══════════════════════════════════════════════

Preprocessor: Recipe

Model: linear_reg()

── Preprocessor ──────────────────────────────────────────

5 Recipe Steps

• step_log()

• step_dummy()

• step_dummy()

• step_interact()

• step_ns()

── Model ─────────────────────────────────────────────────

Linear Regression Model Specification (regression)

Computational engine: lm

lm_fit <- fit(lm_wflow, ames_train)

> predict(lm_fit, ames_test)

# A tibble: 588 × 1

.pred

<dbl>

1 5.26

2 5.09

3 5.40

4 5.26

5 5.21

6 5.49

7 5.35

8 5.14

9 5.11

10 5.14

# ℹ 578 more rows

# ℹ Use `print(n = ...)` to see more rowsExtracting fitted parsnip object:

lm_fit |>

extract_fit_parsnip() |>

tidy() |>

print(n = 53)

# A tibble: 53 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) -0.466 0.275 -1.69 9.09e- 2

2 Gr_Liv_Area 0.630 0.0152 41.4 6.75e-280

3 Year_Built 0.00193 0.000131 14.7 4.70e- 47

4 Neighborhood_College_Creek 0.106 0.0183 5.83 6.37e- 9

5 Neighborhood_Old_Town -0.0461 0.0117 -3.93 8.62e- 5

6 Neighborhood_Edwards 0.0175 0.0169 1.03 3.01e- 1

7 Neighborhood_Somerset 0.0517 0.0113 4.57 5.14e- 6

8 Neighborhood_Northridge_Heights 0.162 0.0289 5.60 2.40e- 8

9 Neighborhood_Gilbert 0.00494 0.0288 0.172 8.64e- 1

10 Neighborhood_Sawyer -0.00145 0.0116 -0.124 9.01e- 1

11 Neighborhood_Northwest_Ames 0.00464 0.00990 0.468 6.40e- 1

12 Neighborhood_Sawyer_West -0.00872 0.0120 -0.727 4.67e- 1

13 Neighborhood_Mitchell -0.137 0.0673 -2.04 4.18e- 2

14 Neighborhood_Brookside -0.0201 0.0134 -1.50 1.33e- 1

15 Neighborhood_Crawford 0.181 0.0186 9.71 7.04e- 22

16 Neighborhood_Iowa_DOT_and_Rail_Road 0.00723 0.0192 0.377 7.06e- 1

17 Neighborhood_Timberland -0.0873 0.0643 -1.36 1.75e- 1

18 Neighborhood_Northridge 0.0899 0.0141 6.39 2.00e- 10

19 Neighborhood_Stone_Brook 0.177 0.0332 5.34 1.03e- 7

20 Neighborhood_South_and_West_of_Iowa_State_University 0.0563 0.0205 2.74 6.13e- 3

21 Neighborhood_Clear_Creek 0.0559 0.0180 3.11 1.88e- 3

22 Neighborhood_Meadow_Village -0.285 0.0716 -3.98 7.01e- 5

23 Neighborhood_Briardale -0.0955 0.0222 -4.31 1.70e- 5

24 Neighborhood_Bloomington_Heights 0.0381 0.0352 1.08 2.79e- 1

25 Neighborhood_other 0.0732 0.0134 5.46 5.27e- 8

26 Bldg_Type_TwoFmCon 0.463 0.250 1.85 6.38e- 2

27 Bldg_Type_Duplex 0.521 0.212 2.46 1.41e- 2

28 Bldg_Type_Twnhs 1.18 0.277 4.27 2.08e- 5

29 Bldg_Type_TwnhsE 0.00198 0.208 0.00949 9.92e- 1

30 Gr_Liv_Area_x_Bldg_Type_TwoFmCon -0.156 0.0788 -1.98 4.83e- 2

31 Gr_Liv_Area_x_Bldg_Type_Duplex -0.194 0.0662 -2.92 3.48e- 3

32 Gr_Liv_Area_x_Bldg_Type_Twnhs -0.406 0.0886 -4.58 4.89e- 6

33 Gr_Liv_Area_x_Bldg_Type_TwnhsE -0.0110 0.0668 -0.164 8.70e- 1

34 Latitude_ns_01 -0.238 0.0841 -2.82 4.77e- 3

35 Latitude_ns_02 -0.205 0.0667 -3.08 2.10e- 3

36 Latitude_ns_03 -0.185 0.0688 -2.68 7.34e- 3

37 Latitude_ns_04 -0.208 0.0697 -2.99 2.80e- 3

38 Latitude_ns_05 -0.195 0.0692 -2.82 4.82e- 3

39 Latitude_ns_06 -0.0298 0.0695 -0.429 6.68e- 1

40 Latitude_ns_07 -0.0989 0.0680 -1.45 1.46e- 1

41 Latitude_ns_08 -0.106 0.0684 -1.54 1.23e- 1

42 Latitude_ns_09 -0.114 0.0675 -1.69 9.10e- 2

43 Latitude_ns_10 -0.129 0.0672 -1.92 5.54e- 2

44 Latitude_ns_11 -0.0804 0.0690 -1.17 2.44e- 1

45 Latitude_ns_12 -0.0999 0.0677 -1.47 1.40e- 1

46 Latitude_ns_13 -0.107 0.0679 -1.58 1.15e- 1

47 Latitude_ns_14 -0.141 0.0670 -2.11 3.53e- 2

48 Latitude_ns_15 -0.0798 0.0682 -1.17 2.42e- 1

49 Latitude_ns_16 -0.0863 0.0680 -1.27 2.04e- 1

50 Latitude_ns_17 -0.180 0.0779 -2.31 2.10e- 2

51 Latitude_ns_18 -0.167 0.0750 -2.23 2.58e- 2

52 Latitude_ns_19 0.00372 0.0744 0.0501 9.60e- 1

53 Latitude_ns_20 -0.178 0.0818 -2.18 2.94e- 2It is possible to call the tidy() function on a specific step based on the number identifier:

estimated_recipe <- lm_fit |>

extract_recipe(estimated = TRUE)

> tidy(estimated_recipe, number = 1)

# A tibble: 1 × 3

terms base id

<chr> <dbl> <chr>

1 Gr_Liv_Area 10 log_kpCTV

> tidy(estimated_recipe, number = 2)

# A tibble: 22 × 3

terms retained id

<chr> <chr> <chr>

1 Neighborhood North_Ames other_GmUzK

2 Neighborhood College_Creek other_GmUzK

3 Neighborhood Old_Town other_GmUzK

4 Neighborhood Edwards other_GmUzK

5 Neighborhood Somerset other_GmUzK

6 Neighborhood Northridge_Heights other_GmUzK

7 Neighborhood Gilbert other_GmUzK

8 Neighborhood Sawyer other_GmUzK

9 Neighborhood Northwest_Ames other_GmUzK

10 Neighborhood Sawyer_West other_GmUzK

# ℹ 12 more rows

# ℹ Use `print(n = ...)` to see more rows

> tidy(estimated_recipe, number = 3)

# A tibble: 26 × 3

terms columns id

<chr> <chr> <chr>

1 Neighborhood College_Creek dummy_cPTAb

2 Neighborhood Old_Town dummy_cPTAb

3 Neighborhood Edwards dummy_cPTAb

4 Neighborhood Somerset dummy_cPTAb

5 Neighborhood Northridge_Heights dummy_cPTAb

6 Neighborhood Gilbert dummy_cPTAb

7 Neighborhood Sawyer dummy_cPTAb

8 Neighborhood Northwest_Ames dummy_cPTAb

9 Neighborhood Sawyer_West dummy_cPTAb

10 Neighborhood Mitchell dummy_cPTAb

# ℹ 16 more rows

# ℹ Use `print(n = ...)` to see more rows

> tidy(estimated_recipe, number = 4)

# A tibble: 4 × 2

terms id

<chr> <chr>

1 Gr_Liv_Area:Bldg_Type_TwoFmCon interact_9BGw4

2 Gr_Liv_Area:Bldg_Type_Duplex interact_9BGw4

3 Gr_Liv_Area:Bldg_Type_Twnhs interact_9BGw4

4 Gr_Liv_Area:Bldg_Type_TwnhsE interact_9BGw4

> tidy(estimated_recipe, number = 5)

# A tibble: 1 × 2

terms id

<chr> <chr>

1 Latitude ns_23xDAInteraction

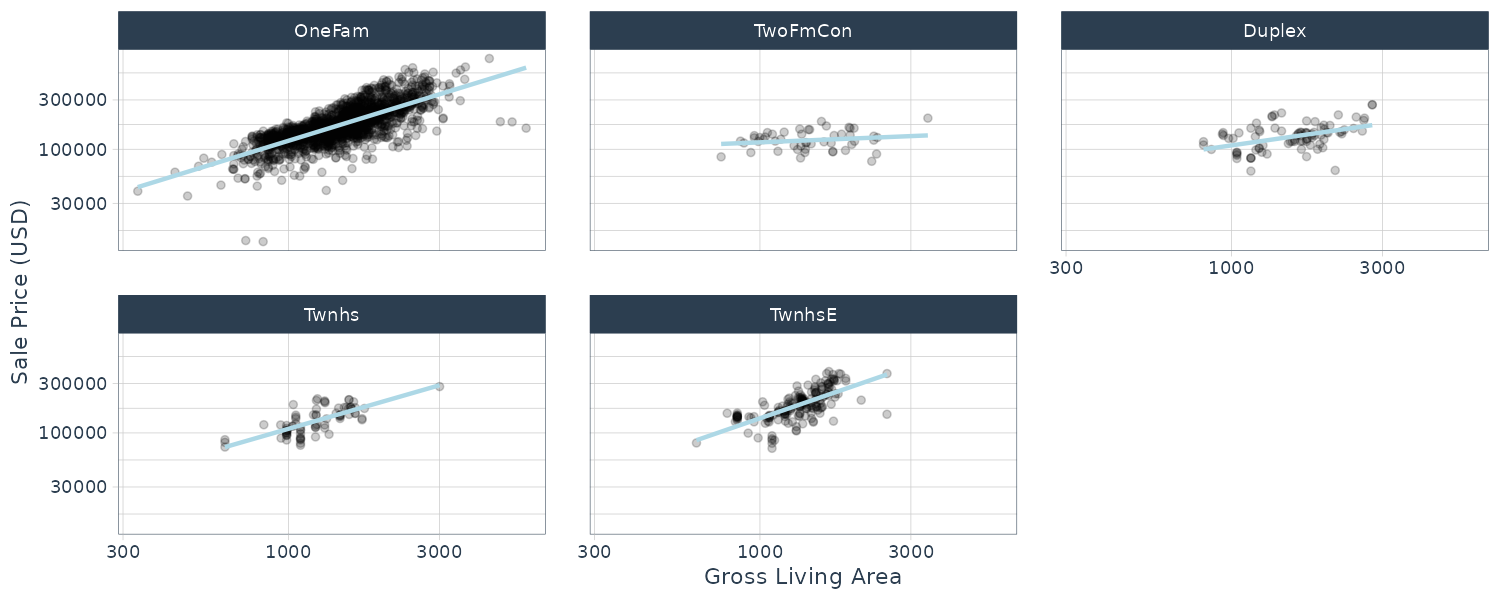

Let us investigate deeper the interaction between Gr_Liv_area x Bldg_Type. By plotting Sale_Price vs Gr_Liv_Area and separate out by Bldg_Type, we can see a difference in the slopes:

ggplot(

ames_train,

aes(

x = Gr_Liv_Area,

y = 10^Sale_Price

)

) +

geom_point(alpha = .2) +

facet_wrap(~Bldg_Type) +

geom_smooth(

method = lm,

formula = y ~ x,

se = FALSE,

color = "lightblue"

) +

scale_x_log10() +

scale_y_log10() +

labs(

x = "Gross Living Area",

y = "Sale Price (USD)"

)

Spline

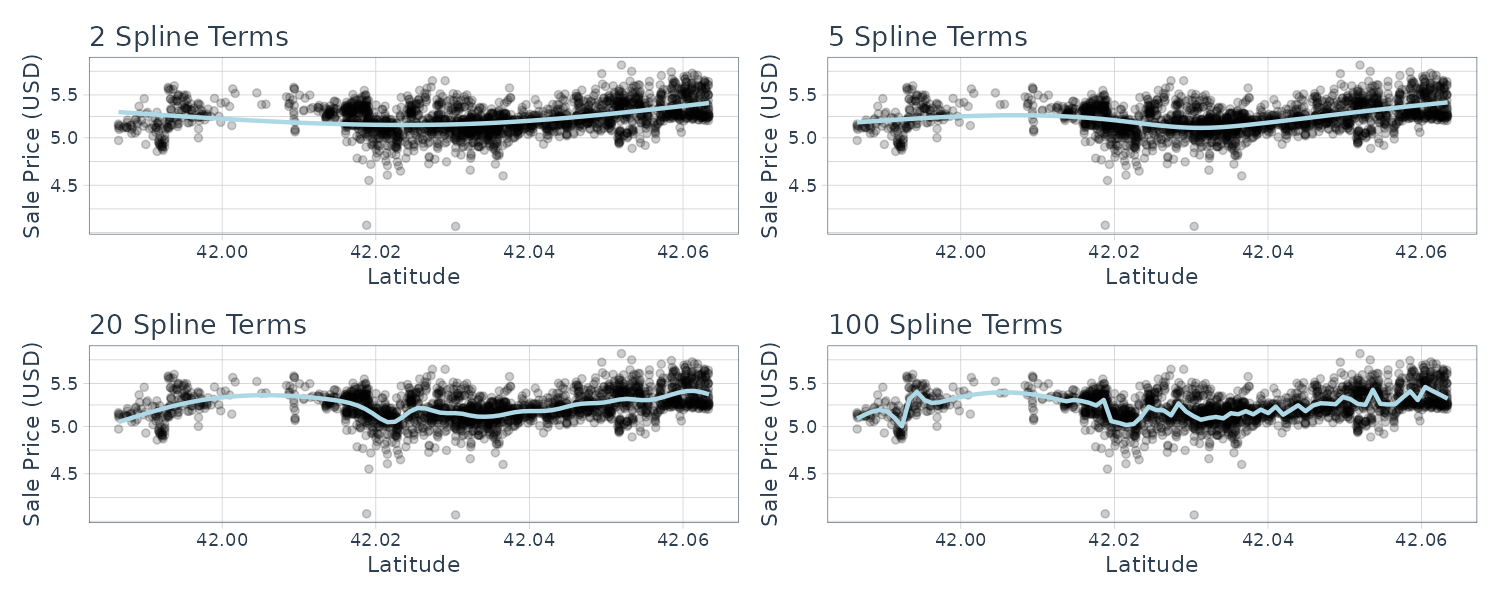

The relationship between SalePrice and Latitude is essentially nonlinear. Latitude is similar to Neighborhood in the sense that both variables are trying to capture the variation in price from the variation of location but in different ways.

The following code graphs different degrees of freedom (# of knots across latitude) and df of 20 (we will tune this parameter later) looks reasonable:

library(splines)

library(patchwork)

plot_smoother <- function(deg_free) {

ggplot(

ames_train,

aes(x = Latitude, y = Sale_Price)

) +

geom_point(alpha = .2) +

scale_y_log10() +

geom_smooth(

method = lm,

formula = y ~ ns(x, df = deg_free),

color = "lightblue",

se = FALSE

) +

labs(

title = paste(deg_free, "Spline Terms"),

y = "Sale Price (USD)"

)

}

(plot_smoother(2) + plot_smoother(5)) /

(plot_smoother(20) + plot_smoother(100))

PCA

In the Ames data, several predictors measure size of the property such as total basement size (Total_Bsmt_SF), size of the first floor (First_Flr_SF), gross living area (Gr_Liv_Area), etc. We can use the following recipe step:

step_pca(matches("(SF$)|(Gr_Liv)")) This will convert the columns matching the regex and turn them into PC1, …, PC5 (default number of components to keep is 5).

Row Sampling

Downsampling the data keeps the minority class and takes a random sample of the majority class so class frequencies are balanced.

Upsampling replicates samples from the minority class.

step_upsample(outcome_column_name)

step_downsample(outcome_column_name) General Transformations

step_mutate() can be used to conduct straightforward transformations:

step_mutate(

Bedroom_Bath_Ratio = Bedroom_AbvGr / Full_Bath

)skip = TRUE

Certain step recipes should not be applied to the predict() function. For example downsampling should not be applied during predict() but applied when using fit(). By default, skip = TRUE for step_downsample().

Getting Info from tidy()

We can get a summary of the recipe steps via tidy():

> tidy(simple_ames)

# A tibble: 5 × 6

number operation type trained skip id

<int> <chr> <chr> <lgl> <lgl> <chr>

1 1 step log FALSE FALSE log_8fJH6

2 2 step other FALSE FALSE other_1UZrP

3 3 step dummy FALSE FALSE dummy_73XYf

4 4 step interact FALSE FALSE interact_RwEJr

5 5 step pca FALSE FALSE pca_asXOF

estimated_recipe <- lm_fit |>

extract_recipe(estimated = TRUE)

> tidy(estimated_recipe)

# A tibble: 5 × 6

number operation type trained skip id

<int> <chr> <chr> <lgl> <lgl> <chr>

1 1 step log TRUE FALSE log_8fJH6

2 2 step other TRUE FALSE other_1UZrP

3 3 step dummy TRUE FALSE dummy_73XYf

4 4 step interact TRUE FALSE interact_RwEJr

5 5 step pca TRUE FALSE pca_asXOF We can also get more info via the id parameter:

> tidy(estimated_recipe, id = "other_1UZrP")

# A tibble: 20 × 3

terms retained id

<chr> <chr> <chr>

1 Neighborhood North_Ames other_1UZrP

2 Neighborhood College_Creek other_1UZrP

3 Neighborhood Old_Town other_1UZrP

4 Neighborhood Edwards other_1UZrP

5 Neighborhood Somerset other_1UZrP

6 Neighborhood Northridge_Heights other_1UZrP

7 Neighborhood Gilbert other_1UZrP

8 Neighborhood Sawyer other_1UZrP

9 Neighborhood Northwest_Ames other_1UZrP

10 Neighborhood Sawyer_West other_1UZrP

11 Neighborhood Mitchell other_1UZrP

12 Neighborhood Brookside other_1UZrP

13 Neighborhood Crawford other_1UZrP

14 Neighborhood Iowa_DOT_and_Rail_Road other_1UZrP

15 Neighborhood Timberland other_1UZrP

16 Neighborhood Northridge other_1UZrP

17 Neighborhood Stone_Brook other_1UZrP

18 Neighborhood South_and_West_of_Iowa_State_University other_1UZrP

19 Neighborhood Clear_Creek other_1UZrP

20 Neighborhood Meadow_Village other_1UZrP

> tidy(estimated_recipe, id = "pca_asXOF")

# A tibble: 25 × 4

terms value component id

<chr> <dbl> <chr> <chr>

1 Gr_Liv_Area -0.995 PC1 pca_asXOF

2 Gr_Liv_Area_x_Bldg_Type_TwoFmCon -0.0194 PC1 pca_asXOF

3 Gr_Liv_Area_x_Bldg_Type_Duplex -0.0355 PC1 pca_asXOF

4 Gr_Liv_Area_x_Bldg_Type_Twnhs -0.0333 PC1 pca_asXOF

5 Gr_Liv_Area_x_Bldg_Type_TwnhsE -0.0842 PC1 pca_asXOF

6 Gr_Liv_Area 0.0786 PC2 pca_asXOF

7 Gr_Liv_Area_x_Bldg_Type_TwoFmCon 0.0285 PC2 pca_asXOF

8 Gr_Liv_Area_x_Bldg_Type_Duplex 0.0725 PC2 pca_asXOF

9 Gr_Liv_Area_x_Bldg_Type_Twnhs 0.0646 PC2 pca_asXOF

10 Gr_Liv_Area_x_Bldg_Type_TwnhsE -0.992 PC2 pca_asXOF

# ℹ 15 more rows

# ℹ Use `print(n = ...)` to see more rowsColumn Roles

Suppose ames have a column of address that you want to leave in dataset but not use in modelling. This could be helpful when data are resampled and tracking which is which. This can be done with:

simple_ames |>

update_role(address, new_role = "street address") Metrics

Regression Metrics

Using the yardstick package, we can produce performance metrics. For example, taking the regression model we fitted earlier, we evaluate the performance on the test set:

ames_test_res <- predict(

lm_fit,

new_data = ames_test |>

select(-Sale_Price)

) |>

bind_cols(

ames_test |> select(Sale_Price)

)

> ames_test_res

# A tibble: 588 × 2

.pred Sale_Price

<dbl> <dbl>

1 5.22 5.02

2 5.21 5.39

3 5.28 5.28

4 5.27 5.28

5 5.28 5.28

6 5.28 5.26

7 5.26 5.73

8 5.26 5.60

9 5.26 5.32

10 5.24 4.98

# ℹ 578 more rows

# ℹ Use `print(n = ...)` to see more rows

> rmse(

ames_test_res,

truth = Sale_Price,

estimate = .pred

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 rmse standard 0.168To compute multiple metrics at once, we can use a metric set:

> metric_set(rmse, rsq, mae)(

ames_test_res,

truth = Sale_Price,

estimate = .pred

)

# A tibble: 3 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 rmse standard 0.168

2 rsq standard 0.200

3 mae standard 0.125Binary Classification Metrics

We will use two_class_example dataset in modeldata package to demonstrate classification metrics:

tibble(two_class_example)

# A tibble: 500 × 4

truth Class1 Class2 predicted

<fct> <dbl> <dbl> <fct>

1 Class2 0.00359 0.996 Class2

2 Class1 0.679 0.321 Class1

3 Class2 0.111 0.889 Class2

4 Class1 0.735 0.265 Class1

5 Class2 0.0162 0.984 Class2

6 Class1 0.999 0.000725 Class1

7 Class1 0.999 0.000799 Class1

8 Class1 0.812 0.188 Class1

9 Class2 0.457 0.543 Class2

10 Class2 0.0976 0.902 Class2

# ℹ 490 more rows

# ℹ Use `print(n = ...)` to see more rows A variety of yardstick metrics:

# Confusion Matrix

> conf_mat(

two_class_example,

truth = truth,

estimate = predicted

)

Truth

Prediction Class1 Class2

Class1 227 50

Class2 31 192

# Accuracy

> accuracy(

two_class_example,

truth = truth,

estimate = predicted

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 accuracy binary 0.838

# Matthews Correlation Coefficient

> mcc(

two_class_example,

truth,

predicted

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 mcc binary 0.677

# F1 Metric

> f_meas(

two_class_example,

truth,

predicted

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 f_meas binary 0.849Or combining all 3:

classification_metrics <- metric_set(

accuracy,

mcc,

f_meas

)

> classification_metrics(

two_class_example,

truth = truth,

estimate = predicted

)

# A tibble: 3 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 accuracy binary 0.838

2 mcc binary 0.677

3 f_meas binary 0.849The f_meas() metric emphasizes the positive class (event of interest). By default, the first level of the outcome factor is the event of interest. We can use the event_level argument to indicate which is the positive class:

f_meas(

two_class_example,

truth,

predicted,

event_level = "second"

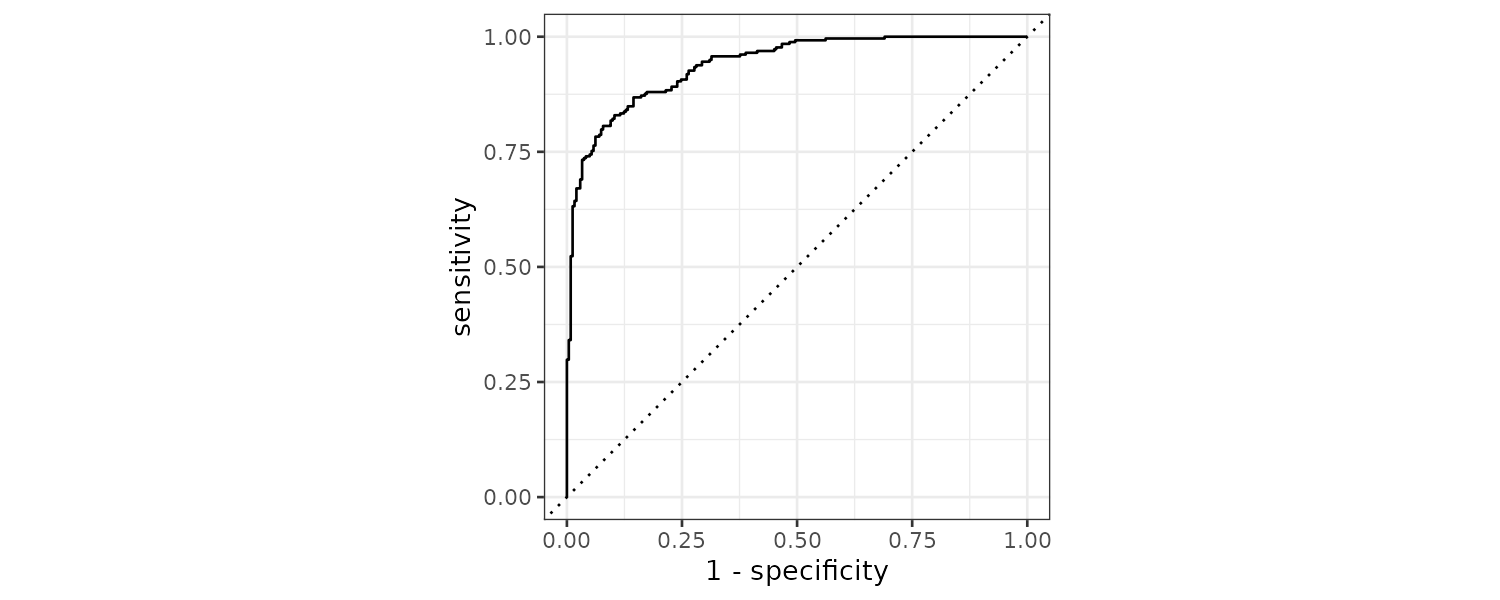

)There are other classification metrics that use the predicted probabilities as inputs rather than hard class predictions. For example, the Receiver Operating Characteristic (ROC) curve computes the sensitivy and specificity over a continuum of different event thresholds.

> roc_curve(

two_class_example,

truth,

Class1

)

# A tibble: 502 × 3

.threshold specificity sensitivity

<dbl> <dbl> <dbl>

1 -Inf 0 1

2 1.79e-7 0 1

3 4.50e-6 0.00413 1

4 5.81e-6 0.00826 1

5 5.92e-6 0.0124 1

6 1.22e-5 0.0165 1

7 1.40e-5 0.0207 1

8 1.43e-5 0.0248 1

9 2.38e-5 0.0289 1

10 3.30e-5 0.0331 1

# ℹ 492 more rows

# ℹ Use `print(n = ...)` to see more rows

> roc_auc(

two_class_example,

truth,

Class1

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 roc_auc binary 0.939 Plotting the ROC curve:

roc_curve(

two_class_example,

truth,

Class1

) |>

autoplot()

If the curve is close to the diagonal line, the model’s predictions would be no matter than random guessing.

Multiclass Classification Metrics

Using the hpc_cv dataset:

> tibble(hpc_cv)

# A tibble: 3,467 × 7

obs pred VF F M L Resample

<fct> <fct> <dbl> <dbl> <dbl> <dbl> <chr>

1 VF VF 0.914 0.0779 0.00848 0.0000199 Fold01

2 VF VF 0.938 0.0571 0.00482 0.0000101 Fold01

3 VF VF 0.947 0.0495 0.00316 0.00000500 Fold01

4 VF VF 0.929 0.0653 0.00579 0.0000156 Fold01

5 VF VF 0.942 0.0543 0.00381 0.00000729 Fold01

6 VF VF 0.951 0.0462 0.00272 0.00000384 Fold01

7 VF VF 0.914 0.0782 0.00767 0.0000354 Fold01

8 VF VF 0.918 0.0744 0.00726 0.0000157 Fold01

9 VF VF 0.843 0.128 0.0296 0.000192 Fold01

10 VF VF 0.920 0.0728 0.00703 0.0000147 Fold01

# ℹ 3,457 more rows

# ℹ Use `print(n = ...)` to see more rowsThe hpc dataset contains the predicted classes and class probabilities for a linear discriminant analysis model fit to the HPC data set from Kuhn and Johnson (2013). These data are the assessment sets from a 10-fold cross-validation scheme. The data column columns for the true class (‘obs’), the class prediction(‘pred’) and columns for each class probability (columns ‘VF’, ‘F’, ‘M’, and ‘L’). Additionally, a column for the resample indicator is included.

> conf_mat(

hpc_cv,

truth = obs,

estimate = pred

)

Truth

Prediction VF F M L

VF 1620 371 64 9

F 141 647 219 60

M 6 24 79 28

L 2 36 50 111

> accuracy(

hpc_cv,

obs,

pred

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 accuracy multiclass 0.709

> mcc(

hpc_cv,

obs,

pred

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 mcc multiclass 0.515Note that the estimator is “multiclass” vs the previous “binary”.

Sensitivity

There are a few ways to calculate sensitivity to multiclass classification. Below we set up some code that can be used to calculate sensitivity:

class_totals <- count(

hpc_cv,

obs,

name = "totals"

) |>

mutate(class_wts = totals / sum(totals))

> class_totals

obs totals class_wts

1 VF 1769 0.51023940

2 F 1078 0.31093164

3 M 412 0.11883473

4 L 208 0.05999423

cell_counts <- hpc_cv |>

group_by(obs, pred) |>

count() |>

ungroup()

> cell_counts

# A tibble: 16 × 3

obs pred n

<fct> <fct> <int>

1 VF VF 1620

2 VF F 141

3 VF M 6

4 VF L 2

5 F VF 371

6 F F 647

7 F M 24

8 F L 36

9 M VF 64

10 M F 219

11 M M 79

12 M L 50

13 L VF 9

14 L F 60

15 L M 28

16 L L 111

one_versus_all <- cell_counts |>

filter(obs == pred) |>

full_join(class_totals, by = "obs") |>

mutate(sens = n / totals)

> one_versus_all

# A tibble: 4 × 6

obs pred n totals class_wts sens

<fct> <fct> <int> <int> <dbl> <dbl>

1 VF VF 1620 1769 0.510 0.916

2 F F 647 1078 0.311 0.600

3 M M 79 412 0.119 0.192

4 L L 111 208 0.0600 0.534 Macro-Averaging

Computes a set of on-versus-all metrics and averaging it:

> one_versus_all |>

summarize(

macro = mean(sens)

)

# A tibble: 1 × 1

macro

<dbl>

1 0.560

> sensitivity(

hpc_cv,

obs,

pred,

estimator = "macro"

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 sensitivity macro 0.560Macro-Weighted Averaging

The average is weighted by the number of samples in each class:

> one_versus_all |>

summarize(

macro_wts = weighted.mean(sens, class_wts)

)

# A tibble: 1 × 1

macro_wts

<dbl>

1 0.709

> sensitivity(

hpc_cv,

obs,

pred,

estimator = "macro_weighted"

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 sensitivity macro_weighted 0.709Micro-Averaging

Aggregating the totals for each class, and then computes a single metric from the aggregates:

> one_versus_all |>

summarize(

micro = sum(n) / sum(totals)

)

# A tibble: 1 × 1

macro_wts

<dbl>

1 0.709

> sensitivity(

hpc_cv,

obs,

pred,

estimator = "micro"

)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 sensitivity micro 0.709 Multiclass ROC:

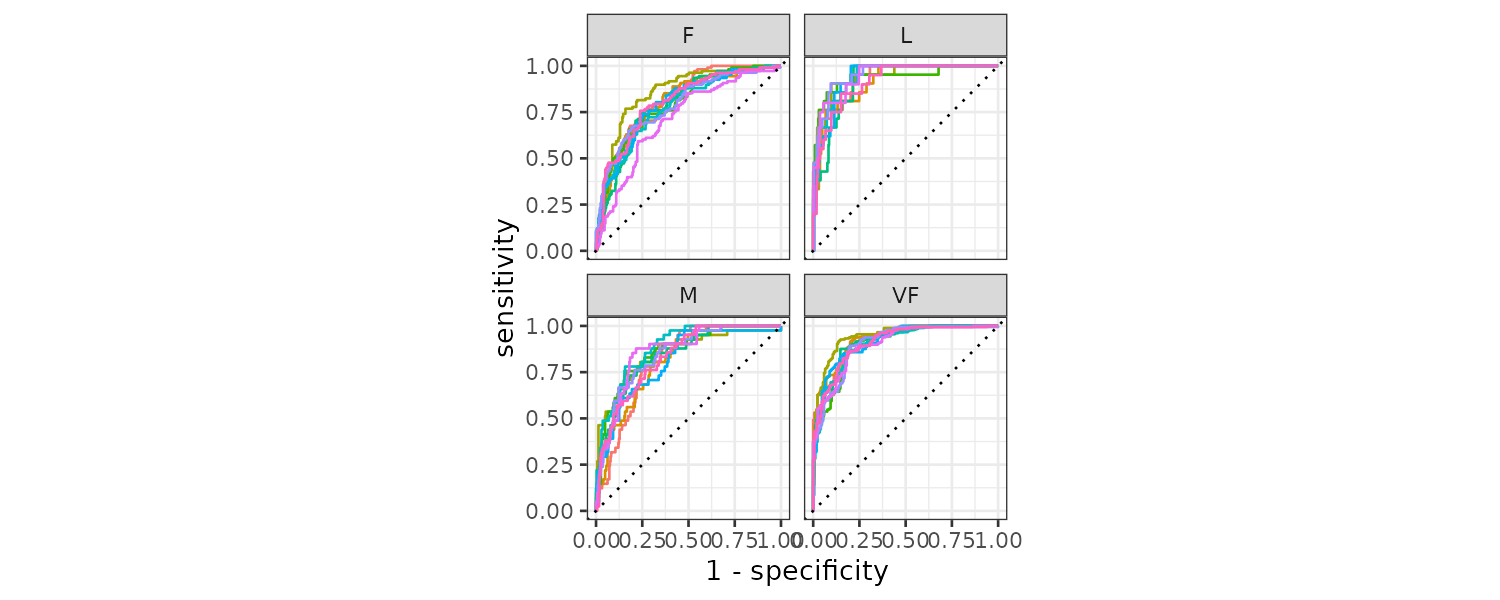

There are multiclass analog for ROC as well:

> roc_auc(hpc_cv, obs, VF, F, M, L)

# A tibble: 1 × 3

.metric .estimator .estimate

<chr> <chr> <dbl>

1 roc_auc hand_till 0.829

roc_curve(hpc_cv, obs, VF, F, M, L) |>

autoplot()We can plot the ROC curve for each group by the folds:

hpc_cv |>

group_by(Resample) |>

roc_curve(obs, VF, F, M, L) |>

autoplot() +

theme(legend.position = "none")

Grouping the accuracy by resampling groups:

> hpc_cv |>

group_by(Resample) |>

accuracy(obs, pred)

# A tibble: 10 × 4

Resample .metric .estimator .estimate

<chr> <chr> <chr> <dbl>

1 Fold01 accuracy multiclass 0.726

2 Fold02 accuracy multiclass 0.712

3 Fold03 accuracy multiclass 0.758

4 Fold04 accuracy multiclass 0.712

5 Fold05 accuracy multiclass 0.712

6 Fold06 accuracy multiclass 0.697

7 Fold07 accuracy multiclass 0.675

8 Fold08 accuracy multiclass 0.721

9 Fold09 accuracy multiclass 0.673

10 Fold10 accuracy multiclass 0.699 Resampling

When we measure performance on the same data that we used for training, the data is said to be resubstituted.

Predictive models that are capable of learning complex trends are known as low bias models. Many black-box ML models have low bias, while models such as linear regression and discriminant analysis are less adaptable and are considered high bias models.

Resampling is only conducted on the training set. For each iteration of resampling, the data are partitioned into an analysis set and an assessment set. Model is fit on the analysis set and evaluated on the assessment set.

Cross-Validation

Generally we prefer a 10-fold cross-validation as a default. Larger values result in resampling estimates with small bias but large variance, while smaller values have large bias but low variance.

ames_folds <- vfold_cv(ames_train, v = 10)

> ames_folds

# 10-fold cross-validation

# A tibble: 10 × 2

splits id

<list> <chr>

1 <split [2107/235]> Fold01

2 <split [2107/235]> Fold02

3 <split [2108/234]> Fold03

4 <split [2108/234]> Fold04

5 <split [2108/234]> Fold05

6 <split [2108/234]> Fold06

7 <split [2108/234]> Fold07

8 <split [2108/234]> Fold08

9 <split [2108/234]> Fold09

10 <split [2108/234]> Fold10The column splits contains the information on how to split the data (training/test). Note that data is not replicated in memory for the folds. We can show this by noticing the object size of ames_folds are not 10 times the size of ames_train:

> lobstr::obj_size(ames_train)

849.83 kB

> lobstr::obj_size(ames_folds)

944.36 kBTo retrieve the first fold training data:

> ames_folds$splits[[1]] |>

analysis() |>

select(1:5)

# A tibble: 2,107 × 5

MS_SubClass MS_Zoning Lot_Frontage Lot_Area Street

<fct> <fct> <dbl> <int> <fct>

1 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 70 8400 Pave

2 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 70 10500 Pave

3 Two_Story_PUD_1946_and_Newer Residential_Medium_Density 21 1680 Pave

4 Two_Story_PUD_1946_and_Newer Residential_Medium_Density 21 1680 Pave

5 One_Story_PUD_1946_and_Newer Residential_Low_Density 53 4043 Pave

6 One_Story_PUD_1946_and_Newer Residential_Low_Density 24 2280 Pave

7 One_Story_PUD_1946_and_Newer Residential_Low_Density 55 7892 Pave

8 One_Story_1945_and_Older Residential_High_Density 70 9800 Pave

9 Duplex_All_Styles_and_Ages Residential_Medium_Density 68 8930 Pave

10 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 0 9819 Pave

# ℹ 2,097 more rows

# ℹ Use `print(n = ...)` to see more rowsAnd test set:

> ames_folds$splits[[1]] |>

assessment() |>

select(1:5)

# A tibble: 235 × 5

MS_SubClass MS_Zoning Lot_Frontage Lot_Area Street

<fct> <fct> <dbl> <int> <fct>

1 One_and_Half_Story_Finished_All_Ages Residential_Low_Density 60 8382 Pave

2 One_Story_1945_and_Older Residential_Medium_Density 60 7200 Pave

3 One_and_Half_Story_Finished_All_Ages Residential_Medium_Density 0 8239 Pave

4 Two_Family_conversion_All_Styles_and_Ages Residential_Medium_Density 100 9045 Pave

5 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 0 11200 Pave

6 One_and_Half_Story_Finished_All_Ages C_all 66 8712 Pave

7 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 70 9100 Pave

8 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 120 13560 Pave

9 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 60 10434 Pave

10 One_Story_1946_and_Newer_All_Styles Residential_Low_Density 75 9533 Pave

# ℹ 225 more rows

# ℹ Use `print(n = ...)` to see more rowsRepeated CV

Depending on the size of the data, the V-fold CV can be noisy. One way to reduce the noise is to repeat V-fold multiple times (R times). The standard error would then be \(\frac{\sigma}{\sqrt{V\times R}}\).

As long as we have a lot of data relative to VxR, the summary statistics will tend toward a normal distribution by CLT.

> vfold_cv(ames_train, v = 10, repeats = 5)

# 10-fold cross-validation repeated 5 times

# A tibble: 50 × 3

splits id id2

<list> <chr> <chr>

1 <split [2107/235]> Repeat1 Fold01

2 <split [2107/235]> Repeat1 Fold02

3 <split [2108/234]> Repeat1 Fold03

4 <split [2108/234]> Repeat1 Fold04

5 <split [2108/234]> Repeat1 Fold05

6 <split [2108/234]> Repeat1 Fold06

7 <split [2108/234]> Repeat1 Fold07

8 <split [2108/234]> Repeat1 Fold08

9 <split [2108/234]> Repeat1 Fold09

10 <split [2108/234]> Repeat1 Fold10

# ℹ 40 more rows

# ℹ Use `print(n = ...)` to see more rowsMonte Carlo Cross-Validation (MCCV)

The difference between MCCV and CV is that the data in each fold is randomly selected:

> mc_cv(

ames_train,

prop = 9 / 10,

times = 20

)

# Monte Carlo cross-validation (0.9/0.1) with 20 resamples

# A tibble: 20 × 2

splits id

<list> <chr>

1 <split [2107/235]> Resample01

2 <split [2107/235]> Resample02

3 <split [2107/235]> Resample03

4 <split [2107/235]> Resample04

5 <split [2107/235]> Resample05

6 <split [2107/235]> Resample06

7 <split [2107/235]> Resample07

8 <split [2107/235]> Resample08

9 <split [2107/235]> Resample09

10 <split [2107/235]> Resample10

11 <split [2107/235]> Resample11

12 <split [2107/235]> Resample12

13 <split [2107/235]> Resample13

14 <split [2107/235]> Resample14

15 <split [2107/235]> Resample15

16 <split [2107/235]> Resample16

17 <split [2107/235]> Resample17

18 <split [2107/235]> Resample18

19 <split [2107/235]> Resample19

20 <split [2107/235]> Resample20This results in assessment sets that are not mutually exclusive.

Validation Sets

If the dataset is very large, it might be adequate to reserve a validation set and only do the validation once rather than CV.

val_set <- validation_split(

ames_train,

prop = 3 / 4

)

> val_set

# Validation Set Split (0.75/0.25)

# A tibble: 1 × 2

splits id

<list> <chr>

1 <split [1756/586]> validationBootstrapping

A bootstrap sample is a sample that is drawn with replacement. This mean that some data points might be selected more than once. Furthermore, each data point has a 63.2% chance of inclusion in the training set at least once. The assessment set contains all of the training set samples that were not selected for the analysis set. The assessment set is often called the out-of-bag sample.

> bootstraps(ames_train, times = 5)

# Bootstrap sampling

# A tibble: 5 × 2

splits id

<list> <chr>

1 <split [2342/854]> Bootstrap1

2 <split [2342/846]> Bootstrap2

3 <split [2342/857]> Bootstrap3

4 <split [2342/861]> Bootstrap4

5 <split [2342/871]> Bootstrap5Compared to CV, bootstrapping has low variance but high bias. For example, if the true accuracy of the model is 90%, bootstrapping will tend to estimate value less than 90%.

Rolling Forecasts

Time series forecast require a rolling resampling. In this example, a training set of 6 samples is sampled with an assessment (validation) size of 30 days, with a 29 day skip:

time_slices <- tibble(x = 1:365) |>

rolling_origin(

initial = 6 * 30,

assess = 30,

skip = 29,

cumulative = FALSE

)

> time_slices

# Rolling origin forecast resampling

# A tibble: 6 × 2

splits id

<list> <chr>

1 <split [180/30]> Slice1

2 <split [180/30]> Slice2

3 <split [180/30]> Slice3

4 <split [180/30]> Slice4

5 <split [180/30]> Slice5

6 <split [180/30]> Slice6The analysis() function shows the indices of training set and assessment() function shows the indices of validation set:

data_range <- function(x) {

summarize(

x,

first = min(x),

last = max(x)

)

}

> map_dfr(

time_slices$splits,

~ analysis(.x) |>

data_range()

)

# A tibble: 6 × 2

first last

<int> <int>

1 1 180

2 31 210

3 61 240

4 91 270

5 121 300

6 151 330

> map_dfr(

time_slices$splits,

~ assessment(.x) |>

data_range()

)

# A tibble: 6 × 2

first last

<int> <int>

1 181 210

2 211 240

3 241 270

4 271 300

5 301 330

6 331 360Estimating Performance

Using the earlier CV folds, we can run a resampling:

lm_res <- lm_wflow |>

fit_resamples(

resamples = ames_folds,

control = control_resamples(

save_pred = TRUE,

save_workflow = TRUE

)

)

> lm_res

# Resampling results

# 10-fold cross-validation

# A tibble: 10 × 5

splits id .metrics .notes .predictions

<list> <chr> <list> <list> <list>

1 <split [2107/235]> Fold01 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [235 × 4]>

2 <split [2107/235]> Fold02 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [235 × 4]>

3 <split [2108/234]> Fold03 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [234 × 4]>

4 <split [2108/234]> Fold04 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [234 × 4]>

5 <split [2108/234]> Fold05 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [234 × 4]>

6 <split [2108/234]> Fold06 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [234 × 4]>

7 <split [2108/234]> Fold07 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [234 × 4]>

8 <split [2108/234]> Fold08 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [234 × 4]>

9 <split [2108/234]> Fold09 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [234 × 4]>

10 <split [2108/234]> Fold10 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [234 × 4]> .metrics contains the assessment set performance statistics.

.notes contains any warnings or errors generated during resampling.

.predictions contain out-of-sample predictions when save_pred = TRUE.

To obtain the performance metrics:

> collect_metrics(lm_res)

# A tibble: 2 × 6

.metric .estimator mean n std_err .config

<chr> <chr> <dbl> <int> <dbl> <chr>

1 rmse standard 0.160 10 0.00284 Preprocessor1_Model1

2 rsq standard 0.178 10 0.0198 Preprocessor1_Model1To obtain the assessment set predictions:

assess_res <- collect_predictions(lm_res)

> assess_res

# A tibble: 2,342 × 5

id .pred .row Sale_Price .config

<chr> <dbl> <int> <dbl> <chr>

1 Fold01 5.29 9 5.09 Preprocessor1_Model1

2 Fold01 5.28 48 5.10 Preprocessor1_Model1

3 Fold01 5.25 50 5.09 Preprocessor1_Model1

4 Fold01 5.26 58 5 Preprocessor1_Model1

5 Fold01 5.25 78 5.11 Preprocessor1_Model1

6 Fold01 5.24 80 5.08 Preprocessor1_Model1

7 Fold01 5.23 90 5 Preprocessor1_Model1

8 Fold01 5.32 91 5.08 Preprocessor1_Model1

9 Fold01 5.19 106 5.04 Preprocessor1_Model1

10 Fold01 5.16 117 5.04 Preprocessor1_Model1

# ℹ 2,332 more rows

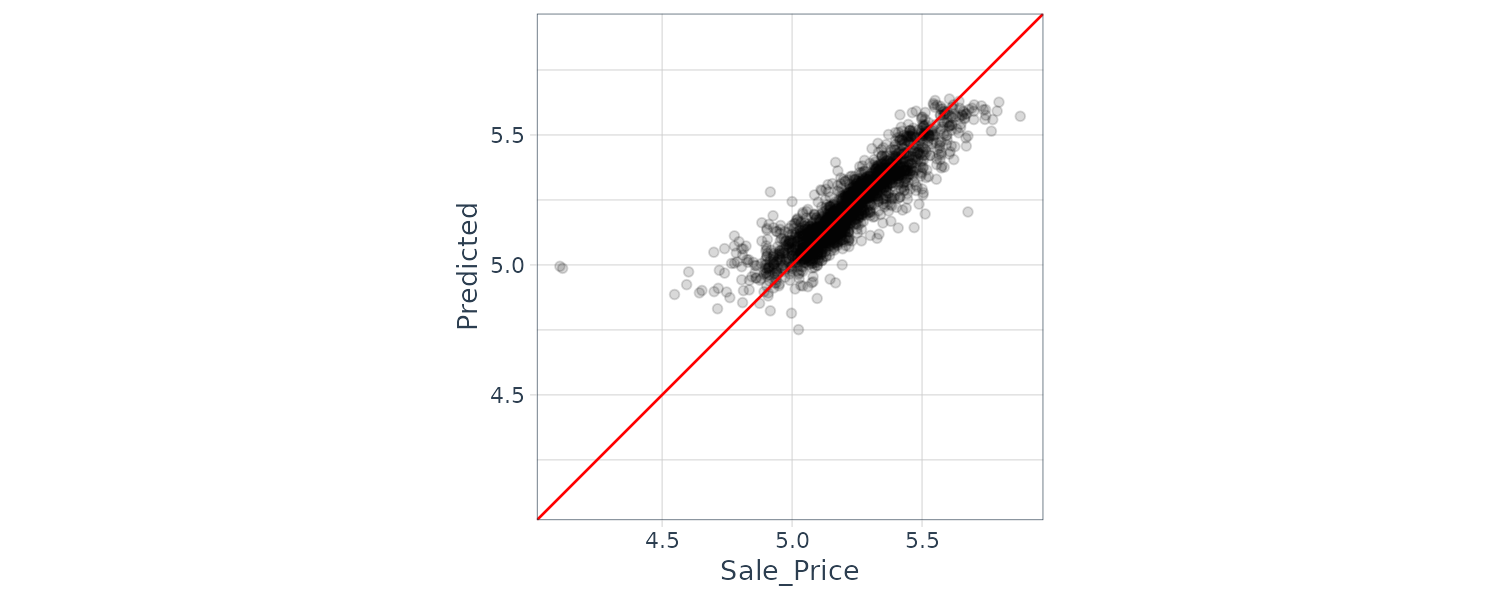

# ℹ Use `print(n = ...)` to see more rowsWe can check for predictions that are way off by first plotting the prediction vs actual test set:

assess_res |>

ggplot(aes(x = Sale_Price, y = .pred)) +

geom_point(alpha = .15) +

geom_abline(color = "red") +

coord_obs_pred() +

ylab("Predicted")

There are two houses in the test set that are overpredicted by the model:

over_predicted <- assess_res |>

mutate(residual = Sale_Price - .pred) |>

arrange(desc(abs(residual))) |>

slice(1:2)

> over_predicted

# A tibble: 2 × 6

id .pred .row Sale_Price .config

<chr> <dbl> <int> <dbl> <chr>

1 Fold05 4.98 329 4.12 Prepro…

2 Fold03 4.95 26 4.11 Prepro…

# ℹ 1 more variable: residual <dbl>We then search for the data rows in the training set:

> ames_train |>

slice(over_predicted$.row) |>

select(

Gr_Liv_Area,

Neighborhood,

Year_Built,

Bedroom_AbvGr,

Full_Bath

)

# A tibble: 2 × 5

Gr_Liv_Area Neighborhood Year_Built

<int> <fct> <int>

1 733 Iowa_DOT_and_… 1952

2 832 Old_Town 1923

# ℹ 2 more variables:

# Bedroom_AbvGr <int>,

# Full_Bath <int>To use a validation set:

val_res <- lm_wflow |>

fit_resamples(

resamples = val_set

)

> val_res

# Resampling results

# Validation Set Split (0.75/0.25)

# A tibble: 1 × 4

splits id .metrics

<list> <chr> <list>

1 <split [1756/586]> validati… <tibble>

# ℹ 1 more variable: .notes <list>

> collect_metrics(val_res)

# A tibble: 2 × 6

.metric .estimator mean n

<chr> <chr> <dbl> <int>

1 rmse standard 0.0820 1

2 rsq standard 0.790 1

# ℹ 2 more variables: std_err <dbl>,

# .config <chr>Parallel Processing

The number of possible worker possible worker processes is determined by the parallel package:

# number of physical cores

> parallel::detectCores(logical = FALSE)

[1] 6

# number of independent simultaneous processes that can be used

> parallel::detectCores(logical = TRUE)

[1] 6For fit_resamples() and other functions in tune, the user need to register a parallel backend package. On Unix we can load the doMC package:

# Unix only

library(doMC)

registerDoMC(cores = 2)

# Now run fit_resamples()...

# To reset the computations to sequential processing

registerDoSEQ()For all operating systems, we can use the doParallel package:

library(doParallel)

# Create a cluster object and then register:

cl <- makePSOCKcluster(2)

registerDoParallel(cl)

# Now run fit_resamples()`...

stopCluster(cl)Depending on the data and model type, the linear speed-up deteriorates after four to five cores. Note also that memory use will be increased as the data set is replicated across multiple core processes.

Saving the Resampled Objects

The models created during resampling are not retained. However, we can extract information of the models.

Let us review what we currently have for recipe:

ames_rec <- recipe(

Sale_Price ~ Neighborhood +

Gr_Liv_Area +

Year_Built +

Bldg_Type +

Latitude +

Longitude,

data = ames_train

) |>

step_other(Neighborhood, threshold = 0.01) |>

step_dummy(all_nominal_predictors()) |>

step_interact( ~ Gr_Liv_Area:starts_with("Bldg_Type_") ) |>

step_ns(Latitude, Longitude, deg_free = 20)

lm_wflow <- workflow() |>

add_recipe(ames_rec) |>

add_model(

linear_reg() |>

set_engine("lm")

)

lm_fit <- lm_wflow |>

fit(data = ames_train)

> extract_recipe(

lm_fit,

estimated = TRUE

)

── Recipe ───────────────────────────────────────────────────────────────

── Inputs

Number of variables by role

outcome: 1

predictor: 6

── Training information

Training data contained 2342 data points and no incomplete rows.

── Operations

• Collapsing factor levels for: Neighborhood | Trained

• Dummy variables from: Neighborhood, Bldg_Type | Trained

• Interactions with: Gr_Liv_Area:(Bldg_Type_TwoFmCon + Bldg_Type_Duplex +

Bldg_Type_Twnhs + Bldg_Type_TwnhsE) | Trained

• Natural splines on: Latitude, Longitude | TrainedTo save and extract the linear model coefficients from a workflow:

ames_folds <- vfold_cv(ames_train, v = 10)

lm_res <- lm_wflow |>

fit_resamples(

resamples = ames_folds,

control = control_resamples(

extract = function(x) {

extract_fit_parsnip(x) |>

tidy()

}

)

)

> lm_res

# Resampling results

# 10-fold cross-validation

# A tibble: 10 × 5

splits id .metrics .notes .extracts

<list> <chr> <list> <list> <list>

1 <split [2107/235]> Fold01 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

2 <split [2107/235]> Fold02 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

3 <split [2108/234]> Fold03 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

4 <split [2108/234]> Fold04 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

5 <split [2108/234]> Fold05 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

6 <split [2108/234]> Fold06 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

7 <split [2108/234]> Fold07 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

8 <split [2108/234]> Fold08 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

9 <split [2108/234]> Fold09 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]>

10 <split [2108/234]> Fold10 <tibble [2 × 4]> <tibble [0 × 3]> <tibble [1 × 2]> An example to extract 2 folds coefficients:

> lm_res$.extracts[[1]][[1]]

[[1]]

# A tibble: 71 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 1.08 0.329 3.28 1.05e- 3

2 Gr_Liv_Area 0.000176 0.00000485 36.3 5.01e-223

3 Year_Built 0.00210 0.000152 13.8 2.75e- 41

4 Neighborhood_College_Creek -0.0628 0.0366 -1.71 8.65e- 2

5 Neighborhood_Old_Town -0.0572 0.0139 -4.12 4.02e- 5

6 Neighborhood_Edwards -0.129 0.0303 -4.25 2.28e- 5

7 Neighborhood_Somerset 0.0582 0.0209 2.79 5.37e- 3

8 Neighborhood_Northridge_Heights 0.0845 0.0309 2.74 6.24e- 3

9 Neighborhood_Gilbert -0.00883 0.0249 -0.355 7.23e- 1

10 Neighborhood_Sawyer -0.130 0.0289 -4.50 7.29e- 6

# ℹ 61 more rows

# ℹ Use `print(n = ...)` to see more rows

> lm_res$.extracts[[10]][[1]]

[[1]]

# A tibble: 71 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 1.17 0.330 3.56 3.82e- 4

2 Gr_Liv_Area 0.000173 0.00000469 36.9 2.37e-228

3 Year_Built 0.00198 0.000154 12.8 2.99e- 36

4 Neighborhood_College_Creek 0.0127 0.0369 0.345 7.30e- 1

5 Neighborhood_Old_Town -0.0467 0.0139 -3.35 8.21e- 4

6 Neighborhood_Edwards -0.109 0.0294 -3.70 2.18e- 4

7 Neighborhood_Somerset 0.0358 0.0209 1.71 8.70e- 2

8 Neighborhood_Northridge_Heights 0.0636 0.0313 2.04 4.18e- 2

9 Neighborhood_Gilbert -0.0165 0.0252 -0.656 5.12e- 1

10 Neighborhood_Sawyer -0.118 0.0282 -4.18 3.06e- 5

# ℹ 61 more rows

# ℹ Use `print(n = ...)` to see more rowsAll the results can be flattened into a tibble:

all_coef <- map_dfr(

lm_res$.extracts,

~ .x[[1]][[1]]

)

> all_coef

# A tibble: 718 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 (Intercept) 1.12 0.313 3.58 3.48e- 4

2 Gr_Liv_Area 0.000166 0.00000469 35.3 1.87e-213

3 Year_Built 0.00202 0.000148 13.6 1.19e- 40

4 Neighborhood_College_Creek -0.0795 0.0365 -2.18 2.96e- 2

5 Neighborhood_Old_Town -0.0642 0.0132 -4.87 1.18e- 6

6 Neighborhood_Edwards -0.151 0.0290 -5.20 2.25e- 7

7 Neighborhood_Somerset 0.0554 0.0202 2.75 6.03e- 3

8 Neighborhood_Northridge_Heights 0.0948 0.0286 3.32 9.32e- 4

9 Neighborhood_Gilbert -0.00743 0.0225 -0.331 7.41e- 1

10 Neighborhood_Sawyer -0.163 0.0276 -5.91 4.12e- 9

# ℹ 708 more rows

# ℹ Use `print(n = ...)` to see more rows

> filter(all_coef, term == "Year_Built")

# A tibble: 10 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 Year_Built 0.00202 0.000148 13.6 1.19e-40

2 Year_Built 0.00192 0.000146 13.1 7.02e-38

3 Year_Built 0.00193 0.000145 13.3 6.73e-39

4 Year_Built 0.00200 0.000142 14.1 5.68e-43

5 Year_Built 0.00201 0.000142 14.2 1.68e-43

6 Year_Built 0.00188 0.000142 13.2 2.88e-38

7 Year_Built 0.00193 0.000143 13.5 6.99e-40

8 Year_Built 0.00201 0.000144 13.9 2.69e-42

9 Year_Built 0.00192 0.000141 13.7 7.41e-41

10 Year_Built 0.00190 0.000144 13.2 1.79e-38 Comparing Models

Let us create 3 different linear models that add interaction and spline incrementally:

basic_rec <- recipe(

Sale_Price ~ Neighborhood +

Gr_Liv_Area +

Year_Built +

Bldg_Type +

Latitude +

Longitude,

data = ames_train) |>

step_log(Gr_Liv_Area, base = 10) |>

step_other(Neighborhood, threshold = 0.01) |>

step_dummy(all_nominal_predictors())

interaction_rec <- basic_rec |>

step_interact(~ Gr_Liv_Area:starts_with("Bldg_Type_"))

spline_rec <- interaction_rec |>

step_ns(Latitude, Longitude, deg_free = 50)

preproc <- list(

basic = basic_rec,

interact = interaction_rec,

splines = spline_rec

)

lm_models <- workflow_set(

preproc,

list(lm = linear_reg()),

cross = FALSE

)

> lm_models

# A workflow set/tibble: 3 × 4

wflow_id info option result

<chr> <list> <list> <list>

1 basic_lm <tibble [1 × 4]> <opts[0]> <list [0]>

2 interact_lm <tibble [1 × 4]> <opts[0]> <list [0]>

3 splines_lm <tibble [1 × 4]> <opts[0]> <list [0]>Using workflow_map(), we will run resampling for each model:

lm_models <- lm_models |>

workflow_map(

"fit_resamples",

seed = 1101,

verbose = TRUE,

resamples = ames_folds,

control = control_resamples(

save_pred = TRUE,

save_workflow = TRUE

)

)

> lm_models

# A workflow set/tibble: 3 × 4

wflow_id info option result

<chr> <list> <list> <list>

1 basic_lm <tibble [1 × 4]> <opts[2]> <rsmp[+]>

2 interact_lm <tibble [1 × 4]> <opts[2]> <rsmp[+]>

3 splines_lm <tibble [1 × 4]> <opts[2]> <rsmp[+]>Using helper functions to report performance statistics:

> collect_metrics(lm_models) |>

filter(.metric == "rmse")

# A tibble: 3 × 9

wflow_id .config preproc model .metric .estimator mean n std_err

<chr> <chr> <chr> <chr> <chr> <chr> <dbl> <int> <dbl>

1 basic_lm Preprocessor1_Model1 recipe linear_reg rmse standard 0.0802 10 0.00231

2 interact_lm Preprocessor1_Model1 recipe linear_reg rmse standard 0.0797 10 0.00226

3 splines_lm Preprocessor1_Model1 recipe linear_reg rmse standard 0.0792 10 0.00223 Now let’s add another random forest model to the workflow set:

rf_wflow <- workflow() |>

add_recipe(ames_rec) |>

add_model(

rand_forest(trees = 1000, min_n = 5) |>

set_engine("ranger") |>

set_mode("regression")

)

rf_res <- rf_wflow |>

fit_resamples(

resamples = ames_folds,

control = control_resamples(

save_pred = TRUE,

save_workflow = TRUE

)

)

four_models <- as_workflow_set(

random_forest = rf_res

) |>

bind_rows(lm_models)

> four_models

# A workflow set/tibble: 4 × 4

wflow_id info option result

<chr> <list> <list> <list>

1 random_forest <tibble [1 × 4]> <opts[0]> <rsmp[+]>

2 basic_lm <tibble [1 × 4]> <opts[2]> <rsmp[+]>

3 interact_lm <tibble [1 × 4]> <opts[2]> <rsmp[+]>

4 splines_lm <tibble [1 × 4]> <opts[2]> <rsmp[+]> To plot the \(R^{2}\) confidence intervals for easy comparison:

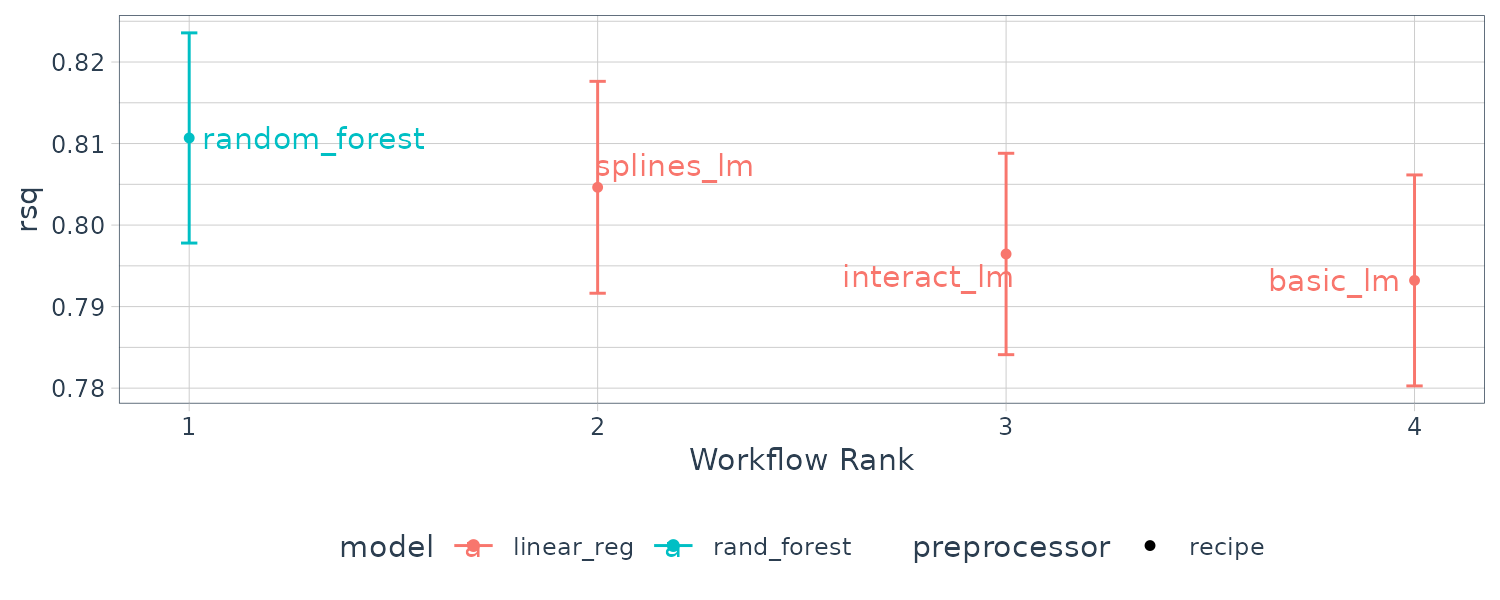

library(ggrepel)

autoplot(four_models, metric = "rsq") +

geom_text_repel(aes(label = wflow_id)) +

theme(legend.position = "none")

Let us gather and summarize the correlation of the resamnpling statistics across each models:

rsq_indiv_estimates <- collect_metrics(

four_models,

summarize = FALSE

) |>

filter(.metric == "rsq")

> rsq_indiv_estimates

# A tibble: 40 × 8

wflow_id .config preproc model id .metric .estimator .estimate

<chr> <chr> <chr> <chr> <chr> <chr> <chr> <dbl>

1 random_forest Preprocessor1_Model1 recipe rand_forest Fold01 rsq standard 0.826

2 random_forest Preprocessor1_Model1 recipe rand_forest Fold02 rsq standard 0.808

3 random_forest Preprocessor1_Model1 recipe rand_forest Fold03 rsq standard 0.812

4 random_forest Preprocessor1_Model1 recipe rand_forest Fold04 rsq standard 0.855

5 random_forest Preprocessor1_Model1 recipe rand_forest Fold05 rsq standard 0.853

6 random_forest Preprocessor1_Model1 recipe rand_forest Fold06 rsq standard 0.853

7 random_forest Preprocessor1_Model1 recipe rand_forest Fold07 rsq standard 0.696

8 random_forest Preprocessor1_Model1 recipe rand_forest Fold08 rsq standard 0.855

9 random_forest Preprocessor1_Model1 recipe rand_forest Fold09 rsq standard 0.805

10 random_forest Preprocessor1_Model1 recipe rand_forest Fold10 rsq standard 0.841

# ℹ 30 more rows

# ℹ Use `print(n = ...)` to see more rowsrsq_wider <- rsq_indiv_estimates |>

select(wflow_id, .estimate, id) |>

pivot_wider(

id_cols = "id",

names_from = "wflow_id",

values_from = ".estimate"

)

> rsq_wider

# A tibble: 10 × 5

id random_forest basic_lm interact_lm splines_lm

<chr> <dbl> <dbl> <dbl> <dbl>

1 Fold01 0.807 0.781 0.791 0.808

2 Fold02 0.840 0.815 0.816 0.821

3 Fold03 0.835 0.804 0.811 0.820

4 Fold04 0.769 0.743 0.750 0.757

5 Fold05 0.828 0.794 0.789 0.783

6 Fold06 0.799 0.801 0.802 0.820

7 Fold07 0.842 0.821 0.822 0.831

8 Fold08 0.808 0.762 0.765 0.775

9 Fold09 0.790 0.794 0.798 0.804

10 Fold10 0.789 0.816 0.820 0.828

> corrr::correlate(

rsq_wider |>

select(-id),

quiet = TRUE

)

# A tibble: 4 × 5

term random_forest basic_lm interact_lm splines_lm

<chr> <dbl> <dbl> <dbl> <dbl>

1 random_forest NA 0.618 0.574 0.480

2 basic_lm 0.618 NA 0.987 0.922

3 interact_lm 0.574 0.987 NA 0.963

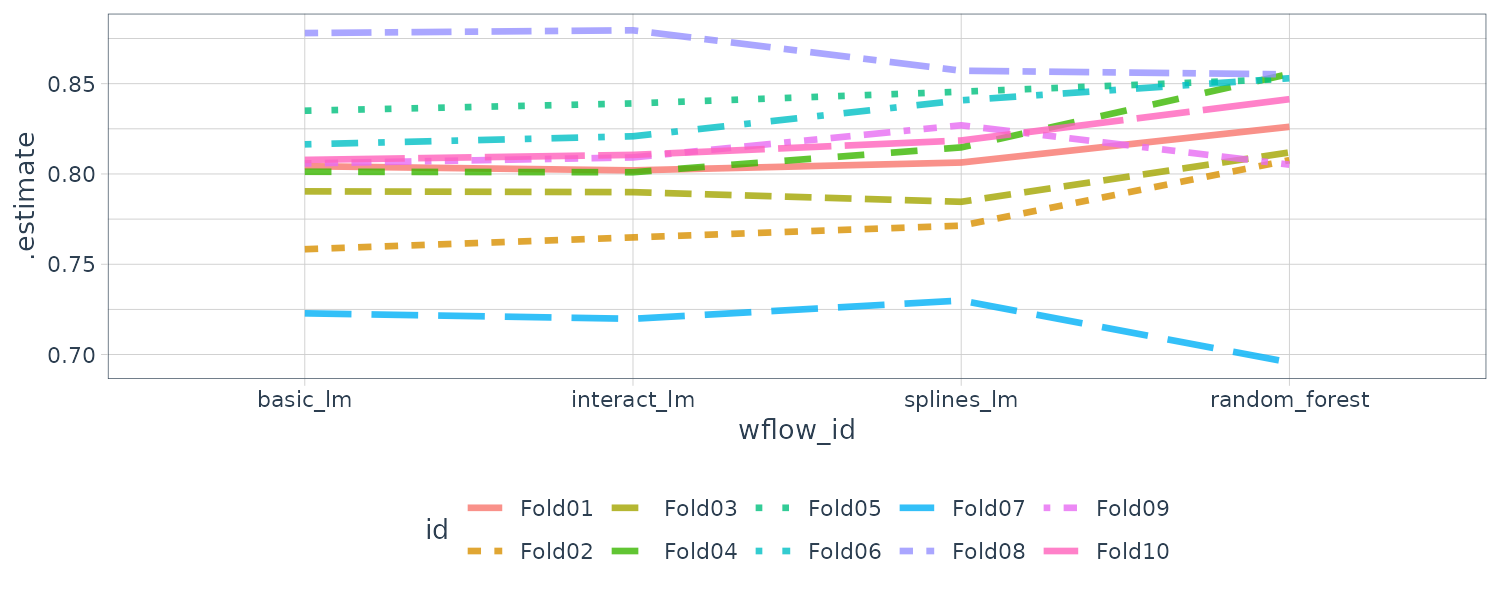

4 splines_lm 0.480 0.922 0.963 NAThere are large correlations across models implying a large within-resample correlations. Another way to view this is to plot each model with lines connecting the resamples:

rsq_indiv_estimates |>

mutate(

wflow_id = reorder(

wflow_id,

.estimate

)

) |>

ggplot(

aes(

x = wflow_id,

y = .estimate,

group = id,

color = id,

lty = id

)

) +

geom_line(

alpha = .8,

lwd = 1.25

) +

theme(legend.position = "none") +

theme_tq()

If there are no correlations within resamples, there would not be any parallel lines. Testing whether the correlations are significant:

> rsq_wider |>

with(

cor.test(

basic_lm,

splines_lm

)

) |>

tidy() |>

select(

estimate,

starts_with("conf")

)

# A tibble: 1 × 3

estimate conf.low conf.high

<dbl> <dbl> <dbl>

1 0.948 0.790 0.988 So why is this important? Consider the variance of a difference of two variables:

\[\begin{aligned} \text{var}(X - Y) &= \text{var}(X) + \text{var}(Y) - 2\text{cov}(X, Y) \end{aligned}\]Hence, if there is a significant positive covariance, then any statistical test of the difference would be reduced.

Simple Hypothesis Testing Methods

With the ANOVA model, the predictors are binary dummy variables for different groups. The \(\beta\) parameters estimate whether two or more groups are different from one another:

Supposed the outcome \(y_{ij}\) are the individual resample \(R^{2}\) statistics and X1, X2, and X3 columns are indicators for the models:

\[\begin{aligned} y_{ij} &= \beta_{0} + \beta_{1}x_{i1} + \beta_{2}x_{i2} + \beta_{p}x_{i3} + e_{ij} \end{aligned}\]- \(\beta_{0}\) is the mean \(R^{2}\) statistic for the basic linear models

- \(\beta_{1}\) is the change in the mean \(R^{2}\) statistic when interactions are added to the basic linear model

- \(\beta_{1}\) is the change in the mean \(R^{2}\) statistic between basic linear model and random forest model

- \(\beta_{1}\) is the change in the mean \(R^{2}\) statistic between basic linear model and model with interactions and splines

However, we still need to contend with how to handle the re-sample-to-resample effect. However, when considering two models at a time, the outcomes are matched by resample and the differences do not contain the resample-to-resample effect:

compare_lm <- rsq_wider |>

mutate(

difference = splines_lm - basic_lm

)

> compare_lm

# A tibble: 10 × 6

id random_forest basic_lm interact_lm splines_lm difference

<chr> <dbl> <dbl> <dbl> <dbl> <dbl>

1 Fold01 0.859 0.848 0.847 0.850 0.00186

2 Fold02 0.837 0.802 0.808 0.822 0.0195

3 Fold03 0.869 0.842 0.846 0.848 0.00591

4 Fold04 0.819 0.791 0.789 0.795 0.00319

5 Fold05 0.817 0.821 0.825 0.832 0.0106

6 Fold06 0.825 0.807 0.811 0.830 0.0232

7 Fold07 0.825 0.795 0.795 0.792 -0.00363

8 Fold08 0.767 0.737 0.737 0.745 0.00760

9 Fold09 0.835 0.780 0.778 0.791 0.0108

10 Fold10 0.807 0.786 0.791 0.802 0.0153

> lm(

difference ~ 1,

data = compare_lm

) |>

tidy(conf.int = TRUE) |>

select(

estimate,

p.value,

starts_with("conf")

)

# A tibble: 1 × 4

estimate p.value conf.low conf.high

<dbl> <dbl> <dbl> <dbl>

1 0.00944 0.00554 0.00355 0.0153A Random Intercept Model (Bayesian Method)

Historically, the resample groups are considered block effect and an appropriate term is added to the model. Alternatively, the resample effect could be considered a random effect where the resamples were drawn at random from a larger population of possible models. Methods for fitting an ANOVA model with this type of random effect are Linear Mixed Model or a Bayesian Hierarchical Model.

The ANOVA model assumes the errors \(e_{ij}\) to be independent and follow a Gaussian distribution \(N(0, \sigma)\). A Bayesian linear model makes additional assumptions, We specify a prior distribution for the model parameters. For example a fairly uninformative priors:

\[\begin{aligned} e_{ij} &\sim N(0, \sigma)\\ \beta_{j} &\sim N(0, 10)\\ \sigma &\sim \text{Exp}(1) \end{aligned}\]To adapt the ANOVA model so that the resamples are adequately modeled, we consider a random intercept model. We assume that the resamples impact the only through the intercept and that the regression parameters \(\beta_{j}\) have the same relationship across resamples.

\[\begin{aligned} y_{ij} &= (\beta_{0} + b_{i}) + \beta_{1}x_{i1} + \beta_{2}x_{i2} + \beta_{p}x_{i3} + e_{ij} \end{aligned}\]And we assume the following prior for \(b_{i}\):

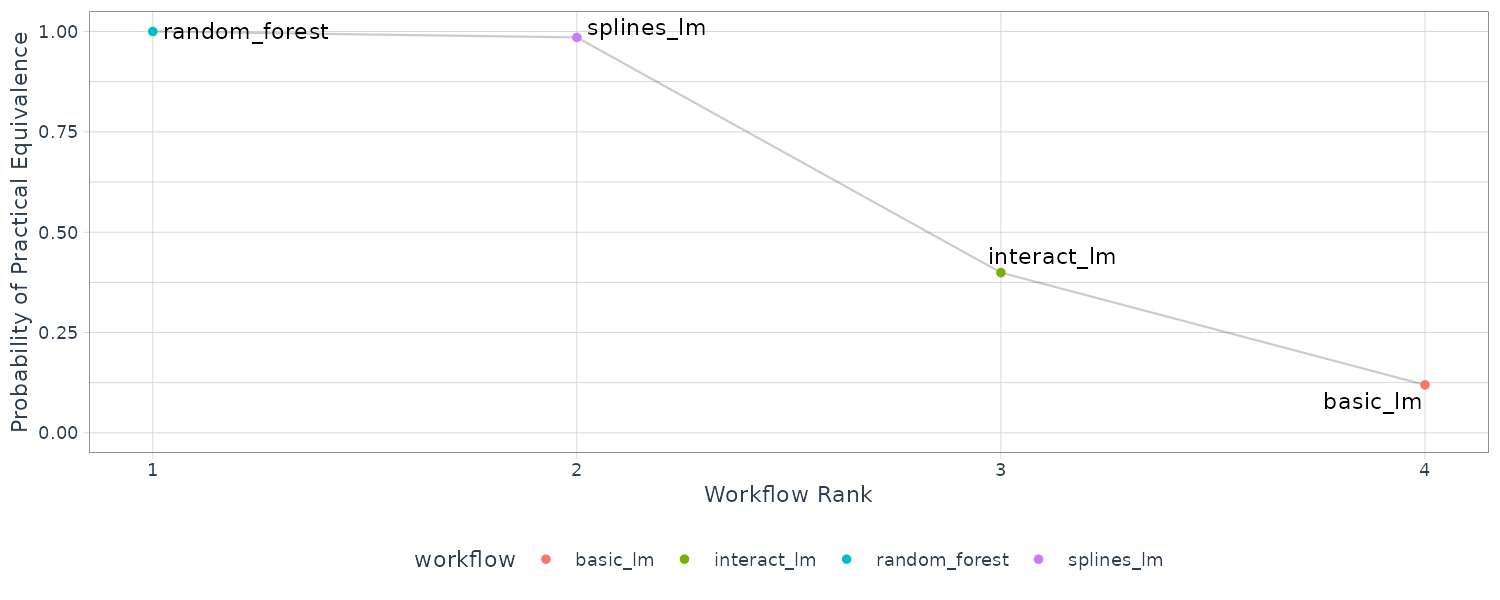

\[\begin{aligned} b_{i} &\sim t(1) \end{aligned}\]The perf_mod() in tidyposterior package can be used for comparing resampled models. For workflow sets, it creates an ANOVA model where the groups correspond to the workflows. If one of the workflows in the set had tunng parameters, the best tuning parameters set for each workflow will be used in the Bayesian analysis. In this case, perf_mode() focuses on between-workflow comparisons.

For objects that contain a single model and tuned using resampling, perf_mod() makes within-model comparisons. In this case, the Bayesian ANOVA model groups the submodels defined by the tuning parameters.

perf_mod() uses default priors for all parameters except for the random intercepts which we would need to specify.

rsq_anova <- perf_mod(

four_models,

metric = "rsq",

prior_intercept = rstanarm::student_t(df = 1),

chains = 4,

iter = 5000,

seed = 1102

)

> rsq_anova

Bayesian Analysis of Resampling Results

Original data: 10-fold cross-validationNext we take random samples from the posterior distribution:

model_post <- rsq_anova |>

tidy(seed = 1103)

> model_post

# Posterior samples of performance

# A tibble: 40,000 × 2

model posterior

<chr> <dbl>

1 random_forest 0.837

2 basic_lm 0.806

3 interact_lm 0.808

4 splines_lm 0.817

5 random_forest 0.830

6 basic_lm 0.806

7 interact_lm 0.811

8 splines_lm 0.818

9 random_forest 0.826

10 basic_lm 0.796

# ℹ 39,990 more rows

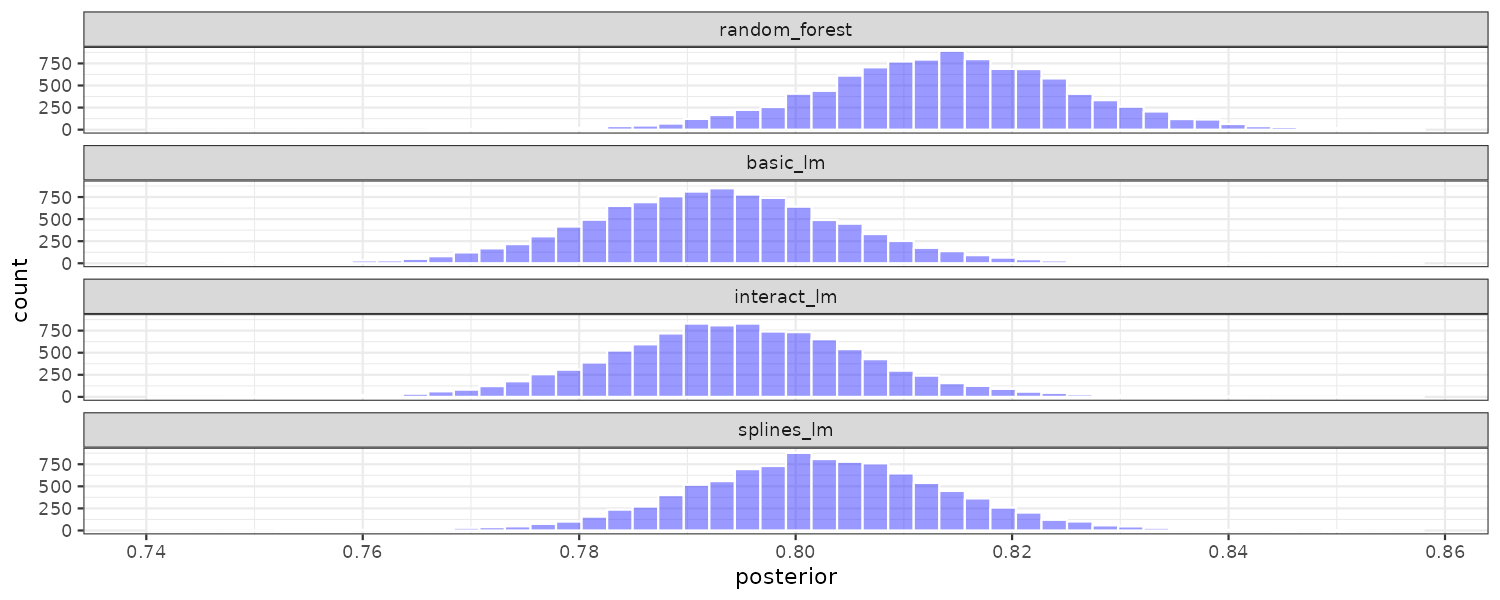

# ℹ Use `print(n = ...)` to see more rows Plotting the 3 posterior distributions from the random samples:

model_post |>

mutate(

model = forcats::fct_inorder(model)

) |>

ggplot(aes(x = posterior)) +

geom_histogram(

bins = 50,

color = "white",

fill = "blue",

alpha = 0.4

) +

facet_wrap(

~model,

ncol = 1

) +

theme_tq()

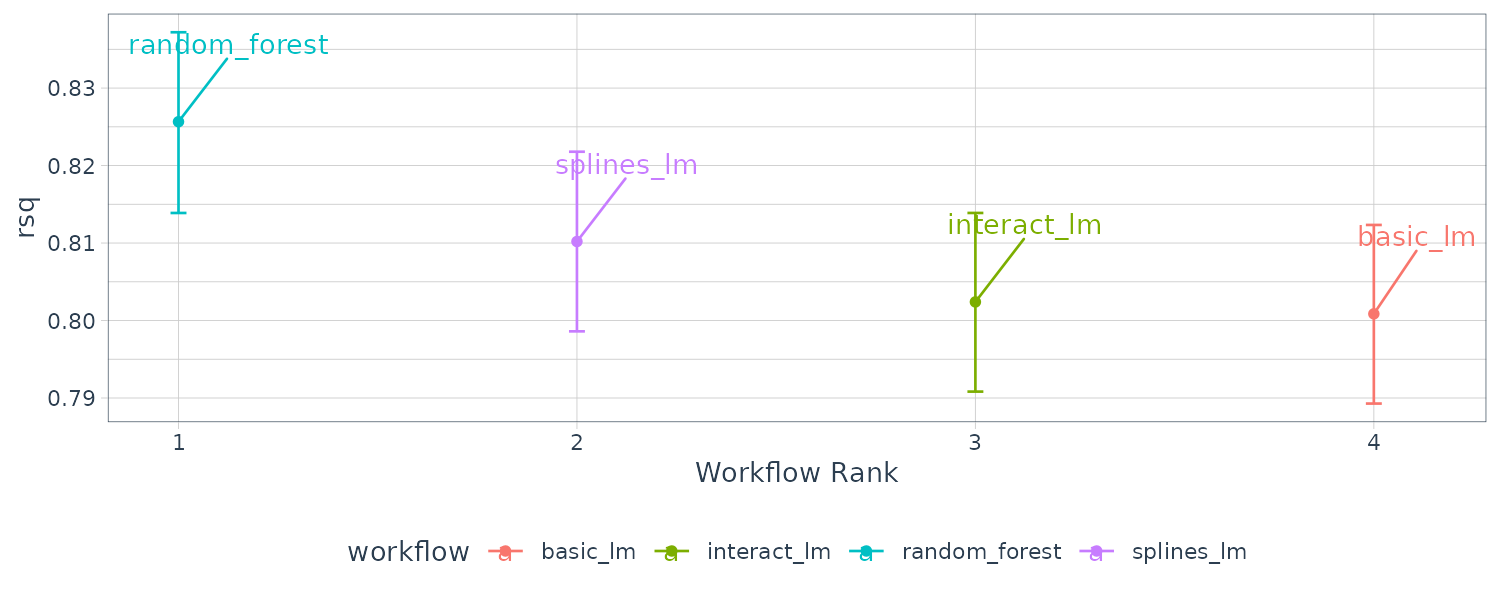

Or we could call autoplot() directly on the perf_mod() object:

autoplot(rsq_anova) +

geom_text_repel(

aes(label = workflow),

nudge_x = 1 / 8,

nudge_y = 1 / 100

) +

theme(legend.position = "none") +

theme_tq()

Once we have the posterior distribution for the parameters, it is trivial to get the posterior distributions for combinations of the parameters. For example, the contrast_models() can compare the differences between models:

rqs_diff <- contrast_models(

rsq_anova,

list_1 = "splines_lm",

list_2 = "basic_lm",

seed = 1104

)

> rqs_diff

# Posterior samples of performance differences

# A tibble: 10,000 × 4

difference model_1 model_2 contrast

<dbl> <chr> <chr> <chr>

1 0.0111 splines_lm basic_lm splines_lm vs. basic_lm

2 0.0119 splines_lm basic_lm splines_lm vs. basic_lm

3 0.0136 splines_lm basic_lm splines_lm vs. basic_lm

4 0.00412 splines_lm basic_lm splines_lm vs. basic_lm

5 0.00402 splines_lm basic_lm splines_lm vs. basic_lm

6 0.0116 splines_lm basic_lm splines_lm vs. basic_lm

7 0.00482 splines_lm basic_lm splines_lm vs. basic_lm

8 0.0136 splines_lm basic_lm splines_lm vs. basic_lm

9 0.0124 splines_lm basic_lm splines_lm vs. basic_lm

10 0.0118 splines_lm basic_lm splines_lm vs. basic_lm

# ℹ 9,990 more rows

# ℹ Use `print(n = ...)` to see more rowsAnd plotting the difference histogram:

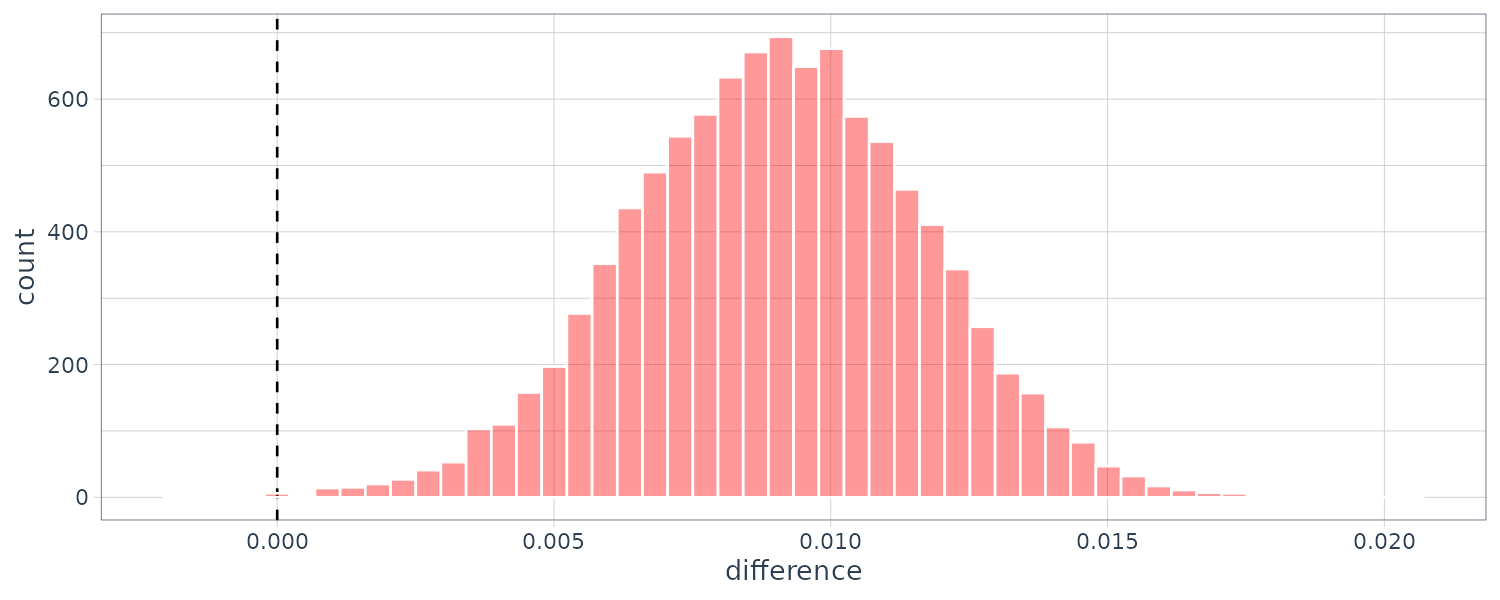

rqs_diff |>

as_tibble() |>

ggplot(aes(x = difference)) +

geom_vline(

xintercept = 0,

lty = 2

) +

geom_histogram(

bins = 50,

color = "white",

fill = "red",

alpha = 0.4

) +

theme_tq()

The summary() method computes the credible intervals as well as a probability column that the proportion of the posterior is greater than 0.

> summary(rqs_diff) |>

select(-starts_with("pract"))

# A tibble: 1 × 6

contrast probability mean lower upper size

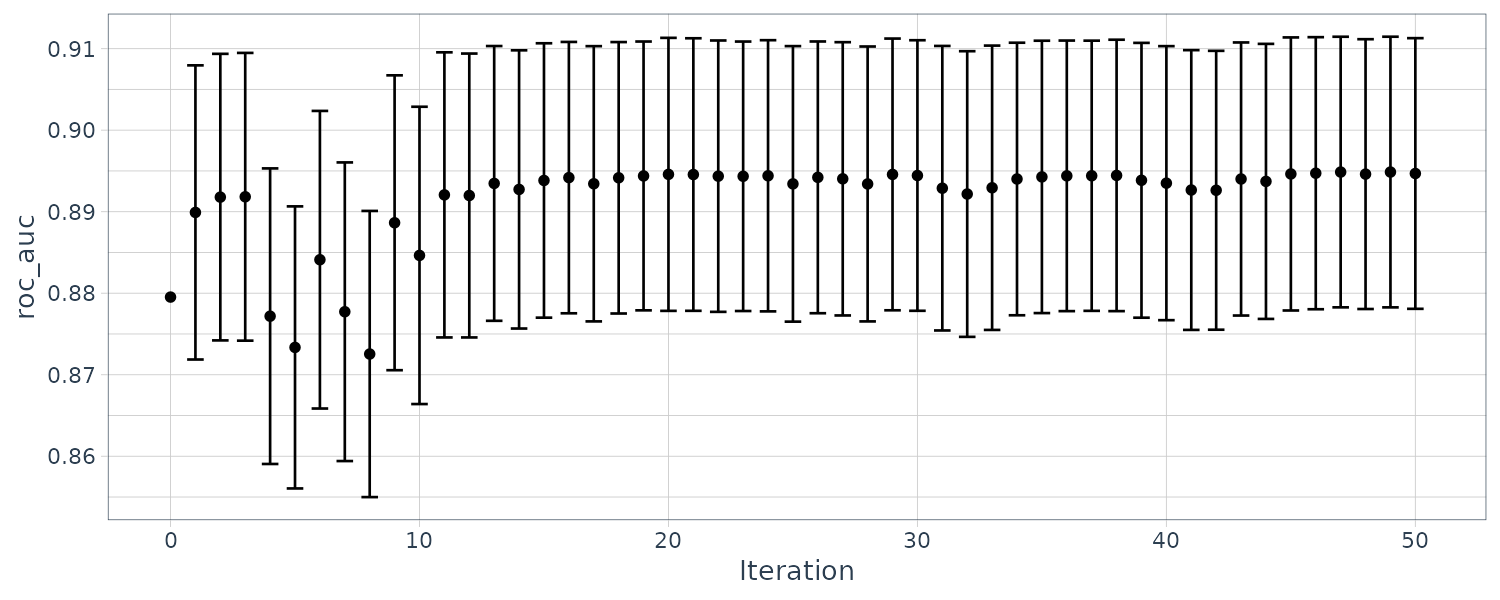

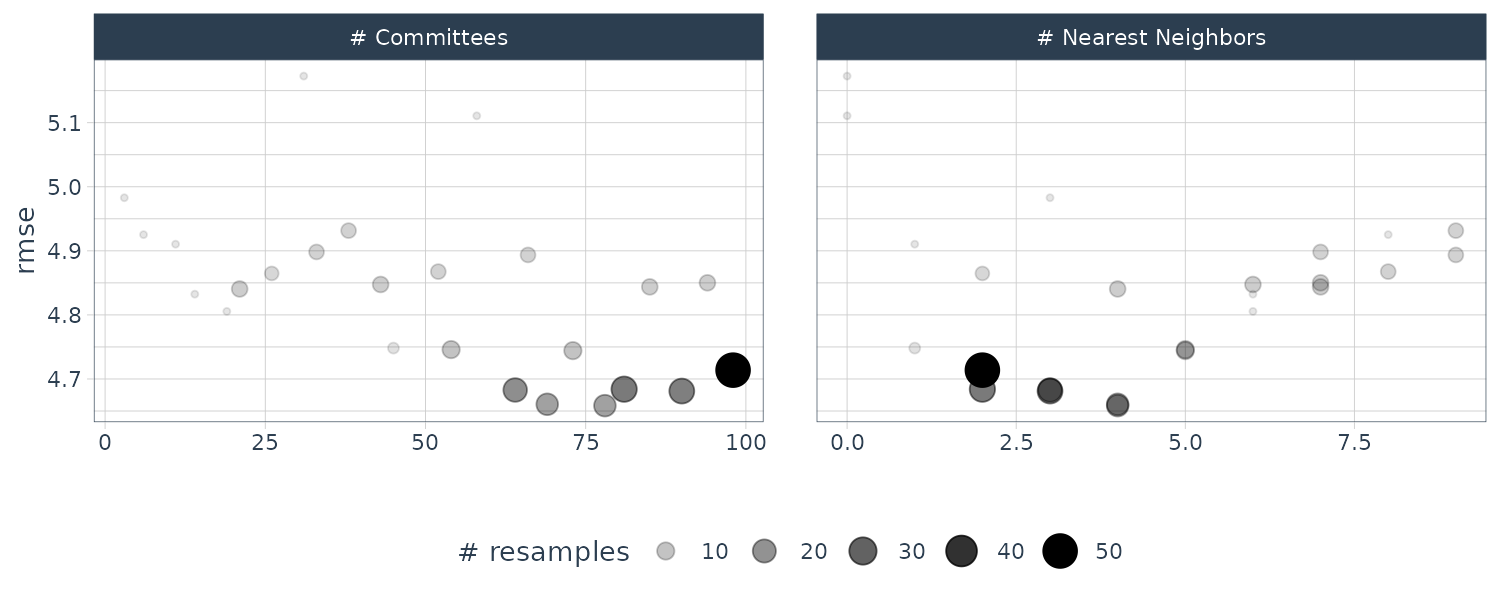

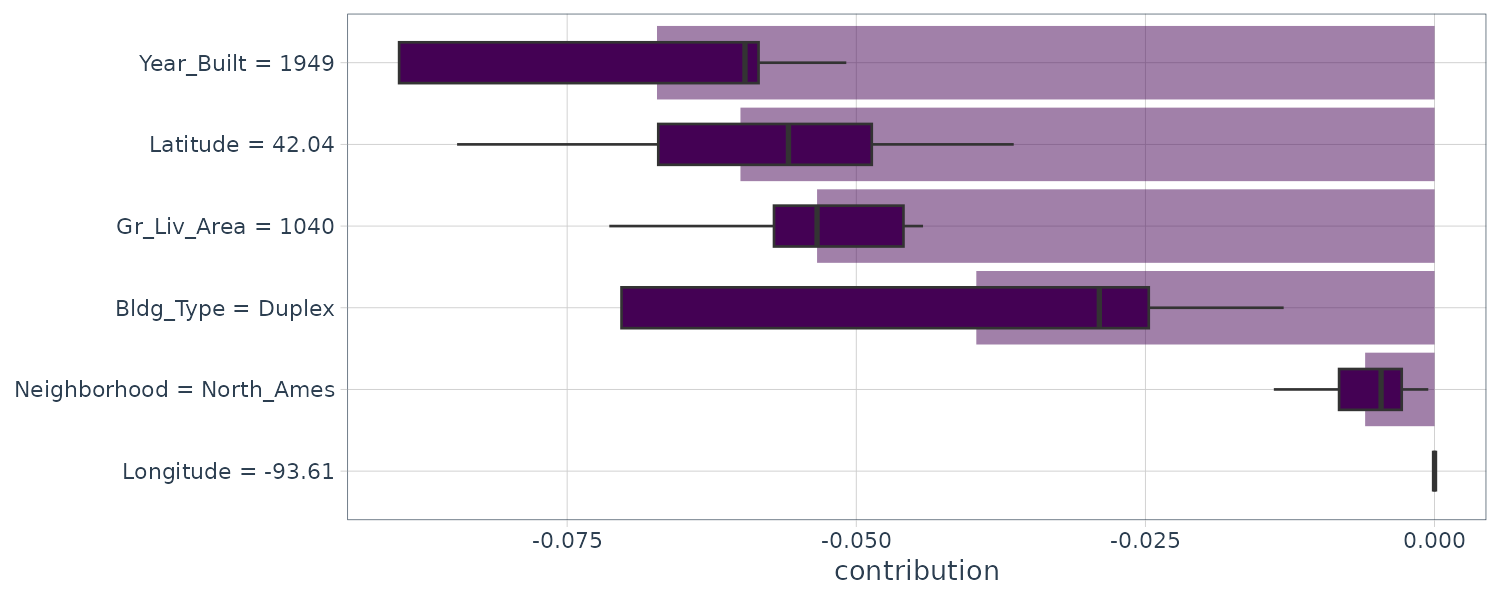

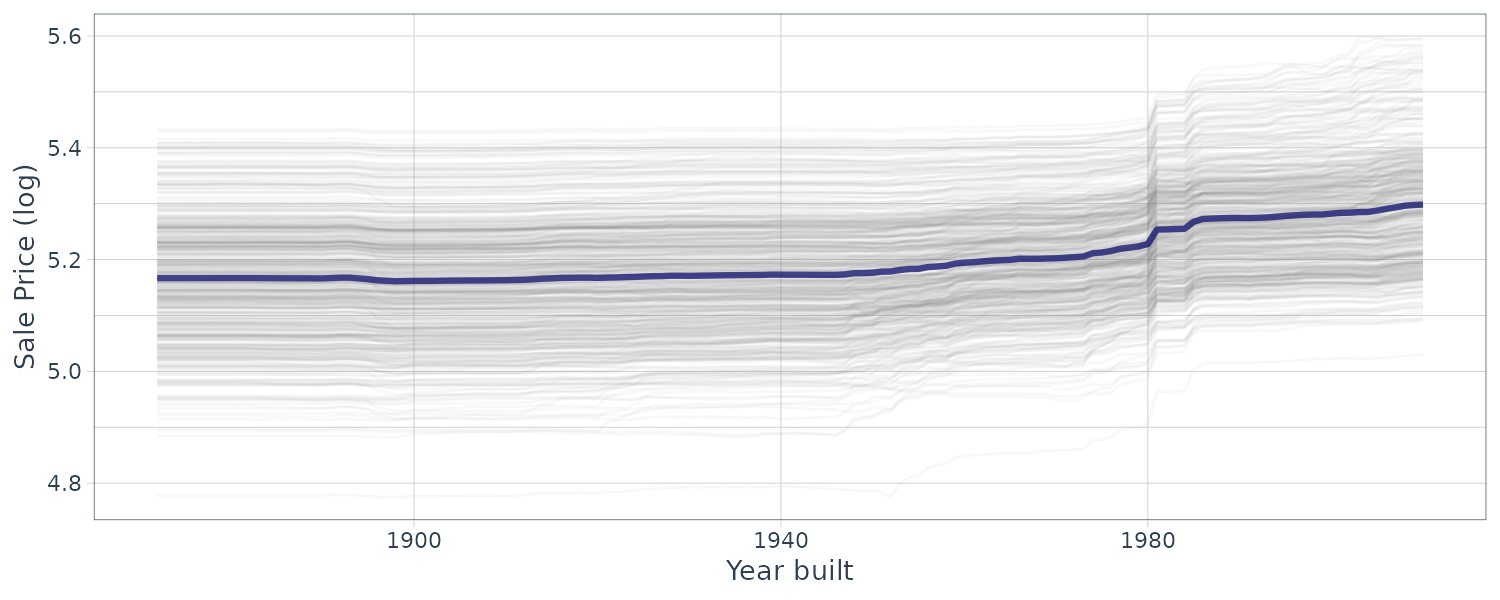

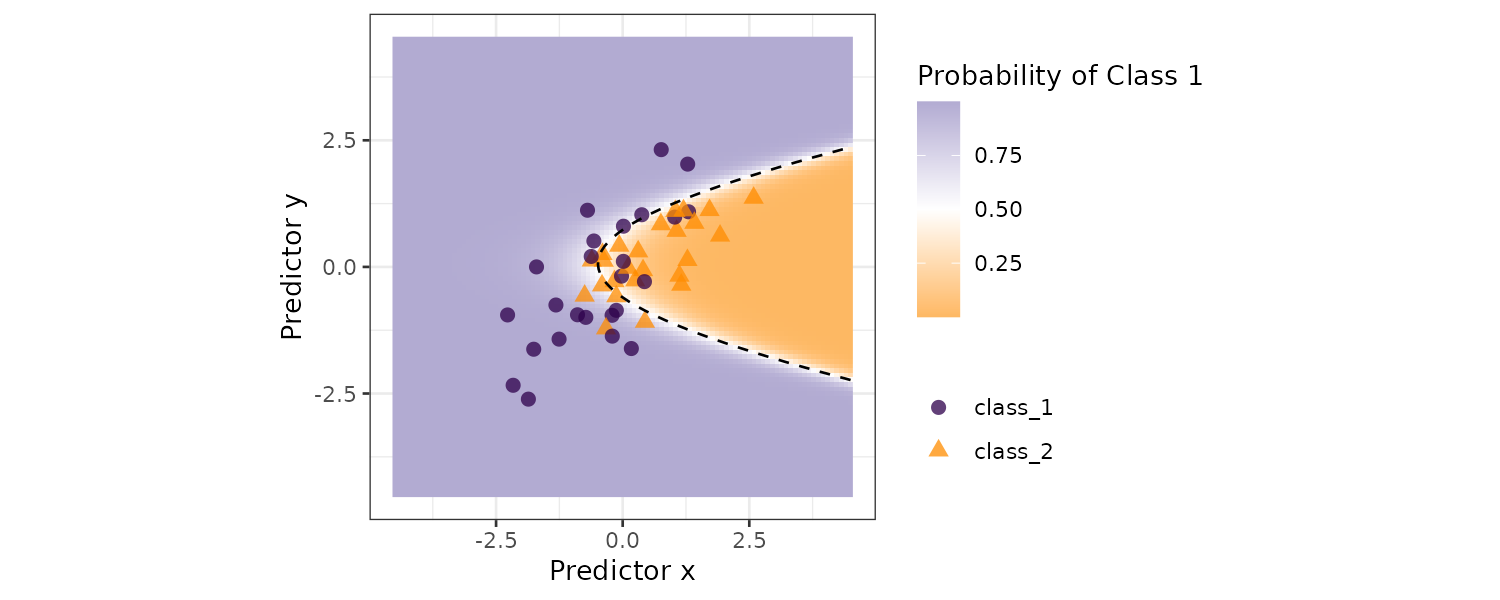

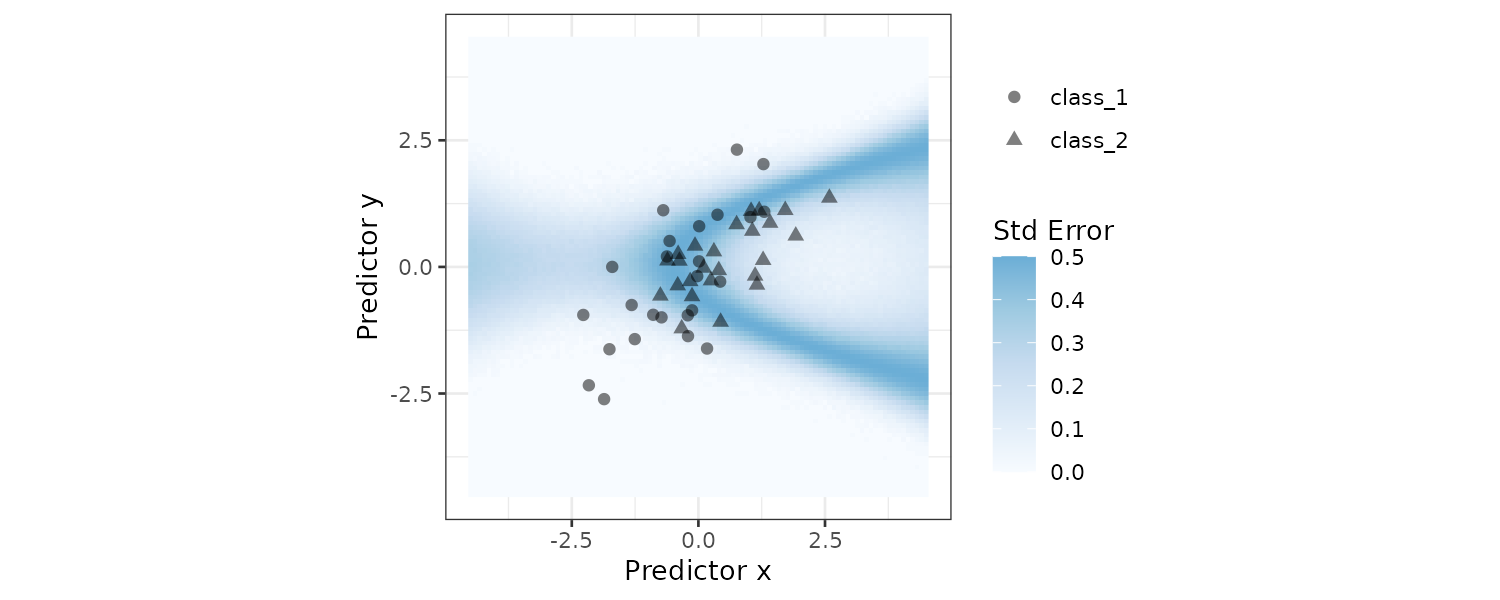

<chr> <dbl> <dbl> <dbl> <dbl> <dbl>