Time Series Analysis - ARIMA Models

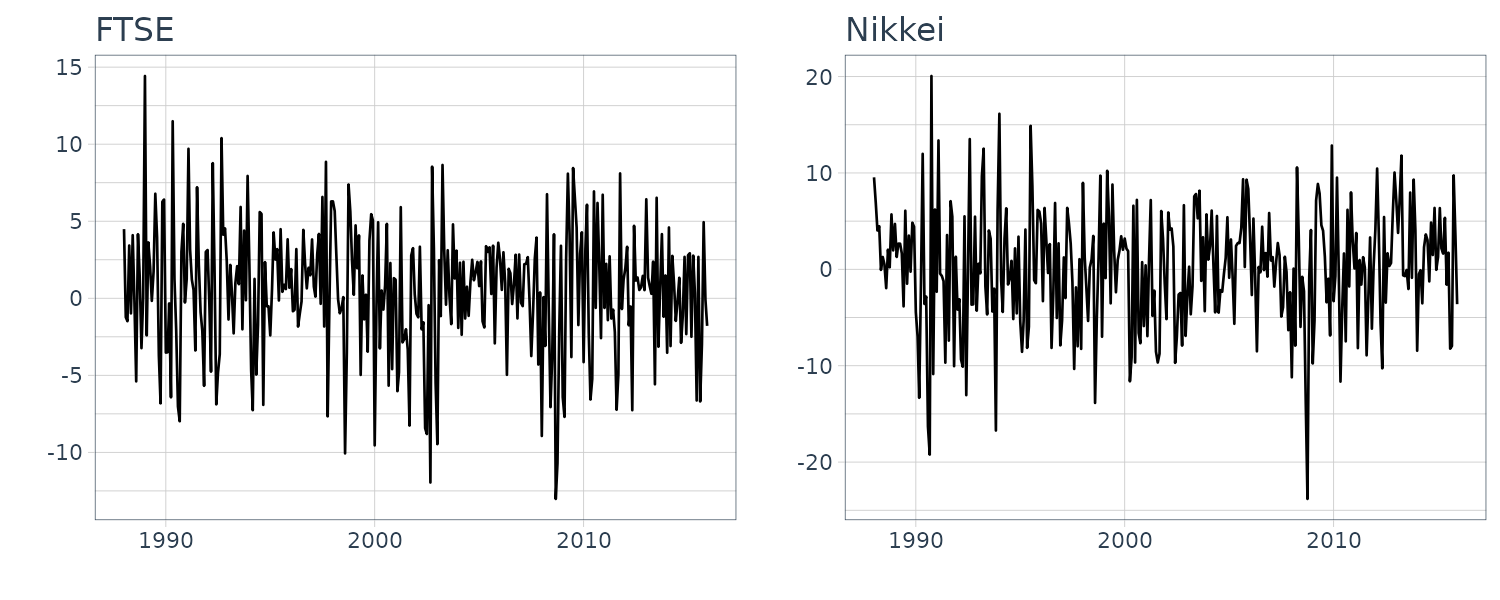

Read PostIn this post, we will continue with Shumway H. (2017) Time Series Analysis, focusing on ARIMA models.

Contents:

- Autoregressive Models (AR)

- Moving Average Models (MA)

- Autoregressive Moving Average Models (ARMA)

- Difference Equations

- Autocorrelation and Partial Autocorrelation

- Forecasting

- Estimation

- Integrated Models for Nonstationary Data

- Building ARIMA Models

- Regression with Autocorrelated Errors

- Multiplicative Seasonal ARIMA Models

- Long Memory ARMA and Fractional Differencing

- See Also

- References

Autoregressive Models (AR)

In time series, sometimes it is desirable to allow the dependent variable to be influenced by the past values of the independent variables of own past values.

An AR(p) is of the form:

\[\begin{aligned} x_{t} &= \phi_{1}x_{t-1} + \phi_{2}x_{t-2} + \cdots + \phi_{p}x_{t-p} + w_{t} \end{aligned}\] \[\begin{aligned} w_{t} &\sim \text{wn}(0, \sigma_{w}^{2}) \end{aligned}\]Where \(x_{t}\) is stationary.

If the mean of \(x_{t}\) is not zero:

\[\begin{aligned} x_{t} - \mu &= \phi_{1}(x_{t-1} - \mu) + (\phi_{2}x_{t-2} - \mu) + \cdots + (\phi_{p}x_{t-p} - \mu) + w_{t} \end{aligned}\] \[\begin{aligned} x_{t} &= \alpha + \phi_{1}x_{t-1} + \phi_{2}x_{t-2} + \cdots + \phi_{p}x_{t-p} + w_{t} \end{aligned}\] \[\begin{aligned} \alpha &= \mu(1 - \phi_{1} - \cdots - \phi_{p}) \end{aligned}\]Note that unlike regular regression, the regressors \(x_{1}, \cdots, x_{t-p}\) are random variables and not fixed.

We can simplify the notation using the backshift operator:

\[\begin{aligned} (1 - \phi_{1}B - \phi_{2}B^{2} - \cdots - \phi_{p}B^{p})x_{t} &= w_{t} \end{aligned}\] \[\begin{aligned} \phi(B)x_{t} &= w_{t} \end{aligned}\]\(\phi(B)\) is known as the autoregressive operator.

Example: AR(1) model

Given \(x_{t} = \phi x_{t-1} + w_{t}\) and iterating backwards k times:

\[\begin{aligned} x_{t} &= \phi x_{t-1} + w_{t}\\ &= \phi(\phi x_{t-2} + w_{t-1}) + w_{t}\\ &\ \ \vdots\\ &= \phi^{k}x_{t - k} + \sum_{j=0}^{k-1}\phi^{j}w_{t-j} \end{aligned}\]Given that:

\[\begin{aligned} |\phi| &< 1\\ \end{aligned}\] \[\begin{aligned} \text{sup}_{t} \text{ var}(x_{t}) &< \infty \end{aligned}\]We can represent an AR(1) model as a linear process:

\[\begin{aligned} x_{t} &= \sum_{j= 0}^{\infty}\phi^{j}w_{t-j} \end{aligned}\]And the process above is stationary with mean:

\[\begin{aligned} E[x_{t}] &= \sum_{j=0}^{\infty}\phi^{j}E[w_{t-j}]\\ &= 0 \end{aligned}\]And the autocovariance function (\(h \geq 0\)):

\[\begin{aligned} \gamma(h) &= \text{cov}(x_{t+h}, x_{t})\\ &= E[x_{t+h}x_{t}]\\ &= E\Big[\sum_{j=0}\phi^{j}w_{t+h-j}\sum_{j=0}\phi^{j}w_{t-j}\Big]\\ &= E[(w_{t+h} + \cdots + \phi^{h}w_{t} + \phi^{h+1}w_{t-1} + \cdots) (w_{t} + \phi w_{t-1} + \cdots)]\\ &= E[w_{t+h}w_{t} + \cdots + \phi^{h}w_{t}w_{t} + \phi^{h+1}w_{t-1}\phi w_{t-1} + \cdots]\\ &= \sigma_{w}^{2}\sum_{j=0}^{\infty}\phi^{h+j}\phi^{j}\\ &= \sigma_{w}^{2}\phi^{h}\sum_{j=0}^{\infty}\phi^{2j}\\ &= \frac{\sigma_{w}^{2}\phi^{h}}{1 - \phi^{2}}\\ \end{aligned}\]The ACF of an AR(1) is then:

\[\begin{aligned} \rho(h) &= \frac{\gamma(h)}{\gamma(0)}\\ &= \phi^{h} \end{aligned}\]And satisfies the recursion:

\[\begin{aligned} \rho(h) &= \phi \rho(h-1)\\ h &= 1, 2, \cdots \end{aligned}\]Example: The Sample Path of AR(1) Process

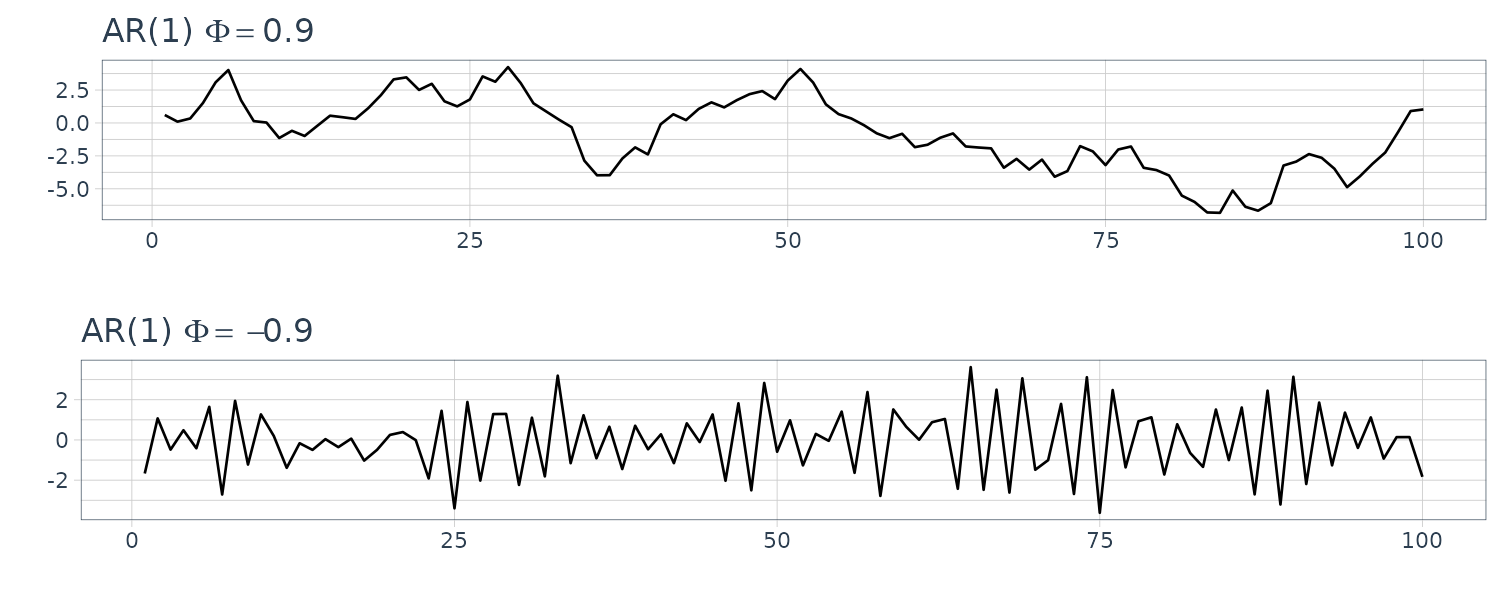

Let’s simulate two time series with \(\phi = 0.9\) and \(\phi = -0.9\):

dat1 <- as_tsibble(

arima.sim(

list(order = c(1, 0, 0), ar = 0.9),

n = 100

)

)

dat2 <- as_tsibble(

arima.sim(

list(order = c(1, 0, 0), ar = -0.9),

n = 100

)

)

plot_grid(

dat1 |>

autoplot() +

ggtitle(latex2exp::TeX("AR(1) $\\Phi\\ = 0.9$")) +

xlab("") +

ylab("") +

theme_tq(),

dat2 |>

autoplot() +

ggtitle(latex2exp::TeX("AR(1) $\\Phi\\ = -0.9$")) +

xlab("") +

ylab("") +

theme_tq(),

ncol = 1

)

\(\phi = -0.9\) appears choppy because the points are negatively correlated. When one point is positive, the next point is likely to be negative.

Example: Explosive AR Models and Causality

When \(\phi > 1\), \(\mid \phi \mid^{j}\) increases without bound as \(\lim_{k \rightarrow \infty}\sum_{j=0}^{k-1}\phi^{j}w_{t-j}\) will not converge. However, we could obtain a stationary model by writing:

\[\begin{aligned} x_{t+1}&= \phi x_{t} + w_{t+1}\\ \phi x_{t} &= x_{t+1} - w_{t+1}\\ x_{t} &= \phi^{-1}x_{t+1} - \phi^{-1}w_{t+1}\\ &= \phi^{-1}(\phi^{-1}x_{t+2} - \phi^{-1}w_{t+2}) - \phi^{-1}w_{t-1}\\ &\ \ \vdots\\ &= \phi^{-k}x_{t+k} - \sum_{j=1}^{k-1}\phi^{-j}w_{t+j} \end{aligned}\]Because \(\mid \phi \mid^{-1} <1\), this suggests that the future dependent AR(1) model is stationary:

\[\begin{aligned} x_{t} &= -\sum_{j=1}^{\infty}\phi^{-j}w_{t+j} \end{aligned}\]When a process does not depend on the future, such as AR(1) model with \(\mid \phi \mid < 1\), we say the process is causal.

Example: Every Explosion Has a Cause

Consider the explosive model:

\[\begin{aligned} x_{t} &= \phi x_{t-1} + w_{t} \end{aligned}\] \[\begin{aligned} |\phi| &> 1 \end{aligned}\] \[\begin{aligned} w_{t} &\sim N(0, \sigma_{w}^{2}) \end{aligned}\] \[\begin{aligned} E[x_{t}] &= 0 \end{aligned}\]The autocovariance function:

\[\begin{aligned} \gamma_{x}(h) &= \text{cov}(x_{t+h}, x_{t})\\ &= \text{cov}\Big(-\sum_{j=1}^{\infty}\phi^{-j}w_{t+h+j}, -\sum_{j=1}^{\infty}\phi^{-j}w_{t_j}\Big)\\ &= \frac{\sigma_{w}^{2}\phi^{-2}\phi^{-h}}{1 - \phi^{-2}} \end{aligned}\]Compared with the AR(1) model autocovariance with \(\phi < 1\):

\[\begin{aligned} \gamma_{x}(h) &= \frac{\sigma_{w}^{2}\phi^{h}}{1-\phi^{2}} \end{aligned}\]The causal process is then defined as:

\[\begin{aligned} y_{t} &= \phi^{-1}y_{t-1} + \nu_{t}\\[5pt] \nu_{t} &\sim N(0, \sigma_{w}^{2}\phi^{-2}) \end{aligned}\]For example, the below explosive process as an equivalent future dependent causal process:

\[\begin{aligned} x_{t} &= 2x_{t-1} + w_{t}\\ y_{t} &= \frac{1}{2}y_{t-1} + \nu_{t}\\ \end{aligned}\] \[\begin{aligned} \sigma_{w}^{2} &= 1\\ \sigma_{\nu}^{2} &= \frac{1}{4} \end{aligned}\]AR(p) Models

The technique of iterating backward works well for AR models when \(p=1\), but not for larger orders. A general technique is that of matching coefficients.

Consider a AR(1) model:

\[\begin{aligned} \phi(B)x_{t} &= w_{t}\\ 1 - \phi(B) &= 1 - \phi B\\ |\phi| &< 1 \end{aligned}\] \[\begin{aligned} x_{t} &= \sum_{j=0}^{\infty}\psi_{j}w_{t-j}\\ &= \psi(B)w_{t} \end{aligned}\]Therefore,

\[\begin{aligned} \phi(B)x_{t} &= w_{t} \end{aligned}\] \[\begin{aligned} x_{t} &= \psi(B)w_{t}\\ \phi(B)x_{t} &= \phi(B)\psi(B)w_{t}\\ &= \psi(B)w_{t} \end{aligned}\]LHS must equal RHS so:

\[\begin{aligned} \phi(B)\psi(B) &= 1\\ \end{aligned}\] \[\begin{aligned} (1 - \phi B)(1 + \psi_{1}B + \psi_{2}B^{2} + \cdots + \psi_{j}B^{j} + \cdots) &= 1\\ 1 + (\psi_{1} - \phi)B + (\psi_{2} - \psi_{1}\phi)B^{2} + \cdots + (\psi_{j}- \psi_{j-1}\phi)B^{j} + \cdots &=1 \end{aligned}\] \[\begin{aligned} \psi_{j} - \psi_{j-1}\phi &= 0 \end{aligned}\] \[\begin{aligned} \psi_{j} &= \psi_{j-1}\phi \end{aligned}\]Given \(\psi_{0} = 1\) because:

\[\begin{aligned} \psi_{0}B^{0} &= 1 \end{aligned}\]Then:

\[\begin{aligned} \psi_{1} &= \psi_{0}\phi\\ &= \phi\\[5pt] \psi_{2} &= \psi_{1}\phi\\ &= \phi^{2}\\ &\ \ \vdots\\ \psi_{j} &= \phi^{j} \end{aligned}\]Another way to think about this is:

\[\begin{aligned} \phi(B)x_{t} &= w_{t}\\ \phi^{-1}(B)\phi(B)x_{t} &= \phi^{-1}(B)w_{t}\\ \end{aligned}\] \[\begin{aligned} x_{t} &= \phi^{-1}(B)w_{t} \end{aligned}\] \[\begin{aligned} \phi^{-1}(B) &= \psi(B) \end{aligned}\]If we treat \(B\) as a complex number \(z\):

\[\begin{aligned} \phi(z) &= 1 - \phi z\\[5pt] \phi^{-1}(z) &= \frac{1}{1 - \phi z}\\ &= 1 + \phi z + \phi^{2}z^{2} + \cdots + \phi^{j}z^{j} + \cdots \end{aligned}\] \[\begin{aligned} |z| &\leq 1 \end{aligned}\]We see that the coefficientso of \(B^{j}\) is the same as \(z^{j}\).

Moving Average Models (MA)

The MA(q) model of order q is defined as:

\[\begin{aligned} x_{t} &= w_{t} + \theta_{1}w_{t-1} + \theta_{2}w_{t-2} + \cdots + \theta_{q}w_{t-q} \end{aligned}\] \[\begin{aligned} w_{t} &\sim \text{wn}(0, \sigma_{w}^{2}) \end{aligned}\]Or in operator form:

\[\begin{aligned} x_{t} &= \theta(B)w_{t} \end{aligned}\] \[\begin{aligned} \theta(B) &= 1 + \theta_{1}B + \theta_{2}B^{2} + \cdots + \theta_{q}B^{q} \end{aligned}\]Unlike AR models, the MA models is stationary for any values of parameters \(\theta_{1}, \cdots, \theta_{q}\).

Example: MA(1) Process

Consider the MA(1) model:

\[\begin{aligned} x_{t} &= w_{t} + \theta w_{t-1} \end{aligned}\]The autocovariance function:

\[\begin{aligned} \gamma(h) &= \begin{cases} (1 + \theta^{2})\sigma_{w}^{2} & h = 0\\ \theta \sigma_{w}^{2} & h = 1\\ 0 & h > 1 \end{cases} \end{aligned}\]ACF:

\[\begin{aligned} \rho(h) &= \begin{cases} \frac{\theta}{1 + \theta^{2}} & h = 1\\ 0 & h > 1 \end{cases} \end{aligned}\]In other words, \(x_{t}\) is only correlated with \(x_{t-1}\). This is unlike AR(1) model where the autocorrelation is never 0.

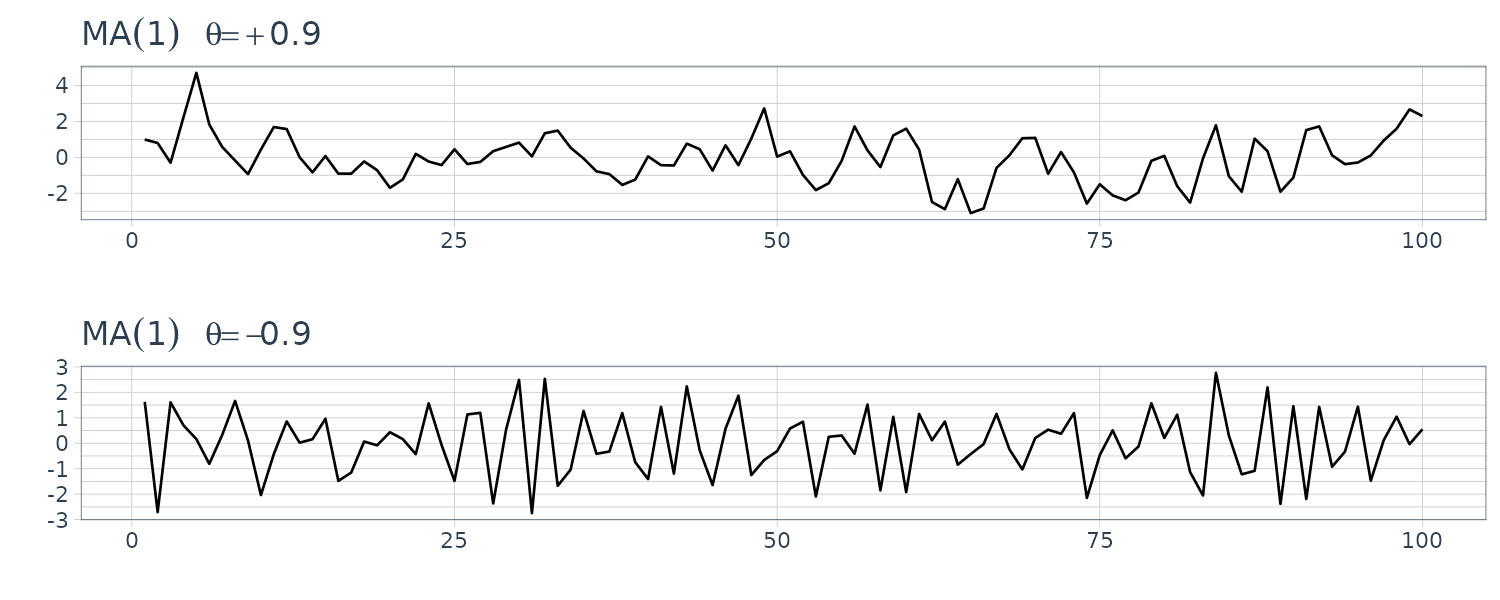

Let us simulate 2 MA(1) models with \(\theta = \pm 0.9\) and \(\sigma_{w}^{2} = 1\):

dat1 <- as_tsibble(

arima.sim(

list(order = c(0, 0, 1), ma = 0.9),

n = 100

)

)

dat2 <- as_tsibble(

arima.sim(

list(order = c(0, 0, 1), ma = -0.9),

n = 100

)

)

Non-uniqueness of MA Models

\(\rho(h)\) is the same for \(\theta\) and \(\frac{1}{\theta}\). For example the following parameters yield the same autocovariance function:

\[\begin{aligned} \sigma_{1w}^{2} &= 1\\ \theta_{1} &= 5\\[5pt] \sigma_{2w}^{2} &= \frac{1}{5}\\ \theta_{2} &= 25 \end{aligned}\] \[\begin{aligned} \gamma(h) &= \begin{cases} 26 & h = 0\\ 5 & h = 1\\ 0 & h > 1 \end{cases} \end{aligned}\]Because we cannot observe the noise and only observe the series, we cannot distinguish between the 2 models. However, only one of the model has an infinite AR representation, and such a process is invertible.

If we rewrite the MA(1) model as:

\[\begin{aligned} x_{t} &= w_{t} + \theta w_{t-1}\\ w_{t} &= -\theta w_{t-1} + x_{t}\\ &= -\theta (-\theta w_{t-2} + x_{t-1}) + x_{t}\\ &\ \ \vdots\\ &= -\theta^{k}w_{t-k} + \sum_{j=0}^{k-1}(-\theta)^{j}x_{t-j}\\ &\ \ \vdots\\ &= \sum_{j=0}^{\infty}(-\theta)^{j}x_{t-j} \end{aligned}\] \[\begin{aligned} |\theta| &< 1 \end{aligned}\]Hence, we would choose:

\[\begin{aligned} |\theta| &= \frac{1}{5}\\ &< 1\\ \sigma_{w}^{2} &= 25 \end{aligned}\]In operator form:

\[\begin{aligned} x_{t} &= \theta(B)w_{t} \end{aligned}\] \[\begin{aligned} \pi(B)x_{t} &= w_{t} \end{aligned}\] \[\begin{aligned} \pi(B) &= \theta^{-1}(B) \end{aligned}\]For example the MA(1) model:

\[\begin{aligned} \theta(z) &= 1 + \theta z\\[5pt] \pi(z) &= \theta^{-1}(z)\\ &= \frac{1}{1 + \theta z}\\ &= \sum_{j=0}^{\infty}(-\theta)^{j}z^{j} \end{aligned}\]Autoregressive Moving Average Models (ARMA)

A time series \(x_{t}\) is ARMA(p, q) if it is stationary and:

\[\begin{aligned} x_{t} &= \phi_{1}x_{t-1} + \cdots + \phi_{p}x_{t-p} + w_{t} + \theta_{1}w_{t-1} + \cdots + \theta_{q}w_{t-q} \end{aligned}\] \[\begin{aligned} \phi_{p} \neq 0\\ \theta_{q} \neq 0 \end{aligned}\]If \(x_{t}\) has a nonzero mean:

\[\begin{aligned} x_{t} - \mu &= \phi_{1}(x_{t-1} - \mu)+ \cdots + \phi_{p}(x_{t-p} - \mu) + w_{t} + \theta_{1}w_{t-1} + \cdots + \theta_{q}w_{t-q} \end{aligned}\] \[\begin{aligned} x_{t}&= \mu + \phi_{1}(x_{t-1} - \mu)+ \cdots + \phi_{p}(x_{t-p} - \mu) + w_{t} + \theta_{1}w_{t-1} + \cdots + \theta_{q}w_{t-q} \end{aligned}\] \[\begin{aligned} x_{t} &= \alpha + \phi_{1}x_{t-1} + \cdots + \phi_{p}x_{t-p} + w_{t} + \theta_{1}w_{t-1} + \cdots + \theta_{q}w_{t-q} \end{aligned}\] \[\begin{aligned} \alpha &= \mu(1 - \phi_{1} - \cdots - \phi_{p}) \end{aligned}\]The model is called an AR model of order p when \(q = 0\) and a MA model of order q when \(p = 0\).

ARMA(p, q) can be written in a more concise form:

\[\begin{aligned} \phi(B)x_{t} &= \theta(B)w_{t} \end{aligned}\]Parameter Redundancy

Consider a white noise process:

\[\begin{aligned} x_{t} &= w_{t} \end{aligned}\]We could turn the process into what seem like an ARMA(1, 1) model by mutiplying both sides by \(1 - 0.5B\):

\[\begin{aligned} (1 - 0.5B)x_{t} &= (1 - 0.5B)w_{t}\\ x_{t} &= 0.5 x_{t-1} - 0.5 w_{t-1} + w_{t} \end{aligned}\]If we fit an ARMA(1, 1) model to white noise, the parameter estimates will be significant:

set.seed(8675309)

dat <- tsibble(

x = rnorm(150, mean = 5),

t = 1:150,

index = t

)

fit <- dat |>

model(ARIMA(x ~ pdq(1, 0, 1) + PDQ(0, 0, 0)))

> tidy(fit) |>

select(-.model)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 ar1 -0.960 0.169 -5.68 6.63e- 8

2 ma1 0.953 0.175 5.44 2.08e- 7

3 constant 9.89 0.142 69.4 6.79e-116 The fitted model would look like:

\[\begin{aligned} (1 + 0.96B)x_{t} &= (1 + 0.95B)w_{t} \end{aligned}\]There are 3 main problems when it comes to ARMA(p, q) models.

1) Parameter Redundant Models

To address this problem, we require \(\phi(z)\) and \(\theta(z)\) to have no common factors.

2) Stationary AR models that depend on the future

To address this problem, we require the model to be causal:

\[\begin{aligned} x_{t} &= \sum_{j=0}^{\infty}\psi_{j}w_{t-j}\\ &= \psi(B)w_{t} \end{aligned}\] \[\begin{aligned} \psi(B) &= \sum_{j=0}^{\infty}\psi_{j}B^{j} \end{aligned}\] \[\begin{aligned} \sum_{j=0}^{\infty}|\psi_{j}| &< \infty\\ \psi_{0} &= 1 \end{aligned}\]For an ARMA(p, q) model:

\[\begin{aligned} \psi(z) &= \sum_{j=0}^{\infty}\psi_{j}z^{j}\\ &= \frac{\theta(z)}{\phi(z)} \end{aligned}\]In order to be causal:

\[\begin{aligned} \phi(z) &\neq 0\\ |z| &\leq 1 \end{aligned}\]Another way to phrase the above is for the roots of \(\phi(z)\) to lie outside the unit circle:

\[\begin{aligned} \phi(z) &= 0\\ |z| &> 1 \end{aligned}\]In other words, having \(\phi(z) = 0\) only when \(\mid z\mid > 1\).

3) MA models that are not unique

An ARMA(p, q) model is said to be invertible if:

\[\begin{aligned} \pi(B)x_{t} &= \sum_{j = 0}^{\infty}\pi_{j}x_{t-j}\\ &= w_{t}\\[5pt] \pi(B) &= \sum_{j=0}^{\infty}\pi_{j}B^{j} \end{aligned}\] \[\begin{aligned} \sum_{j=0}^{\infty}|\pi_{j}| &< \infty \end{aligned}\]An ARMA(p, q) model is invertible if and only if:

\[\begin{aligned} \pi(z) &= \sum_{j= 0}^{\infty}\pi_{j}z^{j}\\ &= \frac{\phi(z)}{\theta(z)} \end{aligned}\] \[\begin{aligned} \theta(z) &\neq 0\\ |z| &\leq 1 \end{aligned}\]In other words, an ARMA process is invertible only when the roots of \(\theta(z)\) lie outside the unit circle.

Example: Parameter Redundancy, Causality, Invertibility

Consider the process:

\[\begin{aligned} x_{t} &= 0.4 x_{t-1} + 0.45x_{t-2} + w_{t} + w_{t-1} + 0.25w_{t-2}\\ \end{aligned}\]It seem like the process is an ARMA(2, 2) process:

\[\begin{aligned} x_{t} - 0.4 x_{t-1} - 0.45x_{t-2} &= w_{t} + w_{t-1} + 0.25w_{t-2}\\ (1 - 0.4B - 0.45B^{2})x_{t} &= (1 + B + 0.25B^{2})w_{t} \end{aligned}\]But if we factorize the process:

\[\begin{aligned} (1 - 0.4B - 0.45B^{2})x_{t} &= (1 + B + 0.25B^{2})w_{t}\\ (1 + 0.5B)(1 - 0.9B)x_{t} &= (1 + 0.5B)^{2}w_{t} \end{aligned}\]The common factor can be canceled out so that we get an ARMA(1, 1) model:

\[\begin{aligned} (1 + 0.5B)(1 - 0.9B)x_{t} &= (1 + 0.5B)^{2}w_{t}\\ (1 - 0.9B)x_{t} &= (1 + 0.5B)w_{t}\\ \end{aligned}\]Back to the model in polynomial form:

\[\begin{aligned} x_{t} &= 0.9 x_{t-1} + 0.5 w_{t-1} + w_{t} \end{aligned}\] \[\begin{aligned} \phi(z) &= (1 - 0.9z)\\ \theta(z) &= (1 + 0.5z)\\ x_{t} &= 0.9x_{t-1} + 0.5w_{t-1} + w_{t} \end{aligned}\]The model is causal because:

\[\begin{aligned} \phi(z) &= (1 - 0.9z)\\ &= 0\\ z &= \frac{10}{9} > 1 \end{aligned}\]And the model is invertible because:

\[\begin{aligned} \theta(z) &= (1 + 0.5z)\\ &= 0\\ z &= -1 \times 2\\ &= -2 \end{aligned}\] \[\begin{aligned} |z| &> 1 \end{aligned}\]To obtain the \(\psi\)-weights:

\[\begin{aligned} \phi(z)\psi(z) &= \theta(z) \end{aligned}\] \[\begin{aligned} (1 - 0.9z)(1 + \psi_{1}z + \psi_{2}z^{2} + \cdots + \psi_{j}z^{j} + \cdots) &= 1 + 0.5z\\ 1 + (\psi_{1} - 0.9)z + (\psi_{2} - 0.9\psi_{1})z^{2} + \cdots + (\psi_{j} - 0.9\psi_{j-1})z^{j} + \cdots &= 1+0.5z \end{aligned}\]For \(j = 1\):

\[\begin{aligned} \psi_{1} &= 0.5 + 0.9\\ &= 1.4\\ \end{aligned}\]For \(j > 1\):

\[\begin{aligned} \psi_{j} - 0.9\psi_{j-1} &= 0 \end{aligned}\] \[\begin{aligned} \psi_{j} &= 0.9\psi_{j-1} \end{aligned}\]Thus:

\[\begin{aligned} \psi_{j} &= 1.4(0.9)^{j-1} \end{aligned}\] \[\begin{aligned} j &\geq 1\\ \psi_{0} &= 1\\ \end{aligned}\] \[\begin{aligned} x_{t} &= \sum_{j = 0}^{\infty}\psi_{j}w_{t-j}\\ &= w_{t} + 1.4\sum_{j=1}^{\infty}0.9^{j-1}w_{t-j} \end{aligned}\]We can get the values of \(\psi_{j}\) in R:

> ARMAtoMA(ar = 0.9, ma = 0.5, 10)

[1] 1.4000000 1.2600000 1.1340000 1.0206000 0.9185400

[6 ]0.8266860 0.7440174 0.6696157 0.6026541 0.5423887The invertible representation can be obtained by matching coefficients of:

\[\begin{aligned} \theta(z)\pi(z) &= \phi(z) \end{aligned}\] \[\begin{aligned} (1 + 0.5z)(1 + \pi_{1}z + \pi_{2}z^{2} + \cdots) &= 1 - 0.9z\\ 1 + (\pi_{1} + 0.5)z + (\pi_{2} + 0.5\pi_{1})z^{2} + \cdots + (\pi_{j} + 0.5\pi_{j-1})z^{j} + \cdots &= 1 - 0.9z \end{aligned}\] \[\begin{aligned} \pi_{1} + 0.5 &= -0.9\\ \pi_{1} &= -1.4 \end{aligned}\] \[\begin{aligned} \pi_{2} + 0.5\pi_{1} &= 0\\ \pi_{2} &= -0.5 \pi_{1}\\ \pi_{2} &= -0.5 \times -1.4\\ \end{aligned}\] \[\begin{aligned} \pi_{j} &= (-1)^{j}\sum_{j=1}^{\infty}(-0.5)^{j-1}x_{t-j} + w_{t} \end{aligned}\]The values of \(\pi_{j}\) can be calculated in R by reversing the roles of \(w_{t}\) and \(x_{t}\):

\[\begin{aligned} w_{t} &= -0.5w_{t-1} + x_{t} - 0.9x_{t-1} \end{aligned}\]> ARMAtoMA(ar = -0.5, ma = -0.9, 10)

[1] -1.400000000 0.700000000 -0.350000000 0.175000000 -0.087500000

[6] 0.043750000 -0.021875000 0.010937500 -0.005468750 0.002734375Example: Causal Conditions for AR(2) Process

For an AR(2) model to be causal, the two roots of \(\phi(z)\) have to lie outside of the unit circle:

\[\begin{aligned} (1 - \phi_{1}B - \phi_{2}B^{2})x_{t} &= w_{t} \end{aligned}\] \[\begin{aligned} \phi(z) &= 1 - \phi_{1}z - \phi_{2}z^{2} \end{aligned}\]The conditions must satisfied:

\[\begin{aligned} |z|_{i} &= \Big|\frac{\phi_{1} \pm \sqrt{\phi_{1}^{2} + 4\phi_{2}}}{-2\phi_{2}} \Big| > 1\\ i &= \{1, 2\} \end{aligned}\]The roots could be real, equal, or complex conjugate pair. We can show that the following equivalent condition for causality:

\[\begin{aligned} \phi_{1} + \phi_{2} &< 1\\ \phi_{2} - \phi_{1} &< 1\\ |\phi_{2}| &< 1 \end{aligned}\]This specifies a triangular region in the 2D parameter space.

Difference Equations

Suppose we have the following sequence of numbers:

\[\begin{aligned} u_{n} - \alpha u_{n-1} &= 0 \end{aligned}\]Note that this is similar to the ACF of an AR(1) process:

\[\begin{aligned} \rho(h) - \phi\rho(h - 1) &= 0 \end{aligned}\]Homogeneous Difference Equation of Order 1

This sort of equation is known as the homogeneous difference equation of order 1.

To solve this equation, we can write:

\[\begin{aligned} u_{1} &= \alpha u_{0}\\ u_{2} &= \alpha u_{1}\\ &= \alpha^{2}u_{0}\\ \vdots\\ u_{n} &= \alpha u_{n-1}\\ &= \alpha^{n}u_{0} \end{aligned}\]Given initial condition:

\[\begin{aligned} u_{0} &= c\\ u_{n} &= \alpha^{n}c \end{aligned}\]In operator notation:

\[\begin{aligned} (1 - \alpha B)u_{n} &= 0 \end{aligned}\]And associated polynomial:

\[\begin{aligned} \alpha(z) &= 1 - \alpha z\\ \alpha(z_{0}) &= 1 - \alpha z_{0}\\ &= 0\\ \alpha &= \frac{1}{z_{0}} \end{aligned}\]A solution involves the root of the polynomial \(z_{0}\):

\[\begin{aligned} u_{n} &= \alpha^{n}c\\ &= \Big(\frac{1}{z_{0}}\Big)^{n}c \end{aligned}\]Homogeneous Difference Equation of Order 2

Consider the sequence:

\[\begin{aligned} u_{n}- \alpha_{1}u_{n-1} - \alpha_{2}u_{n-1} &= 0 \end{aligned}\]The corresponding polynomial is:

\[\begin{aligned} \alpha(z) &= 1 - \alpha_{1}z - \alpha_{2}z^{2} \end{aligned}\]There are 2 roots \(z_{1}, z_{2}\).

Distinct Roots

Suppose \(z_{1} \neq z_{2}\). The general solution is:

\[\begin{aligned} u_{n} &= c_{1}z_{1}^{-n} + c_{2}z_{2}^{-n} \end{aligned}\]We can verify this by direction substitution:

\[\begin{aligned} (c_{1}&z_{1}^{-n} + c_{2}z_{2}^{-n}) - \alpha_{1}(c_{1}z_{1}^{-(n-1)} + c_{2}z_{2}^{-(n-1)}) - \alpha_{2}(c_{1}z_{1}^{-(n-2)} + c_{2}z_{2}^{-(n-2)})\\ &= c_{1}z_{1}^{-n}(1 - \alpha_{1}z_{1} - \alpha_{2}z_{1}^{2}) + c_{2}z_{2}^{-n}(1 - \alpha_{1}z_{2} - \alpha_{2}z_{2}^{2})\\ &= c_{1}z_{1}^{-n}\alpha(z_{1}) + c_{2}z_{2}^{-n}\alpha(z_{2})\\ &= c_{1}z_{1}^{-n}\times 0 + c_{2}z_{2}^{-n}\times 0\\ &= 0 \end{aligned}\]Given 2 initial conditions \(u_{0}\) and \(u_{1}\), we can solve for \(c_{1}\) and \(c_{2}\):

\[\begin{aligned} u_{0} &= c_{1} + c_{2}\\ u_{1} &= c_{1}z_{1}^{-1} + c_{2}z_{2}^{-1} \end{aligned}\]\(\alpha_{1}, \alpha_{2}\) can be solved using the quadratic formula, and \(z_{1}\) and \(z_{2}\) can be solved in terms of \(\alpha_{1}, \alpha_{2}\).

Repeated Roots

When the roots are equal, the general solution is:

\[\begin{aligned} z_{0} &= z_{1} = z_{2}\\ u_{n} &= z_{0}^{-n}(c_{1} + c_{2}n) \end{aligned}\]Given initial conditions \(u_{0}\) and \(u_{1}\), , we can solve for \(c_{1}\) and \(c_{2}\):

\[\begin{aligned} u_{0} &= c_{1}\\ u_{1} &= (c_{1} + c_{2})z_{0}^{-1} \end{aligned}\]We can verify this by direction substitution:

\[\begin{aligned} z_{0}^{-n}&(c_{1} + c_{2}n) - \alpha_{1}(z_{0}^{-(n-1)}(c_{1} + c_{2}(n-1))) - \alpha_{2}(z_{0}^{-(n-2)}(c_{1} + c_{2}(n-2)))\\ &= z_{0}^{-n}(c_{1} + c_{2}n)(1 - \alpha_{1}z_{0} - \alpha_{2}z_{0}^{2}) + c_{2}z_{0}^{-n+1}(\alpha_{1} + 2\alpha_{2}z_{0})\\ &= z_{0}^{-n}(c_{1} + c_{2}n) \times 0 + c_{2}z_{0}^{-n+1}(\alpha_{1} + 2\alpha_{2}z_{0})\\ &= c_{2}z_{0}^{-n+1}(\alpha_{1} + 2\alpha_{2}z_{0}) \end{aligned}\]To show that \((\alpha_{1} + 2\alpha_{2}z_{0}) = 0\), we first note that:

\[\begin{aligned} \alpha_{1} &= 2z_{0}^{-1}\\ \alpha_{2} &= z_{0}^{-2} \end{aligned}\]We can show this by verifying:

\[\begin{aligned} \alpha(z_{0}) &= 1 - 2z_{0}^{-1}z_{0} +z_{0}^{-2}z_{0}^{2}\\ &= 1 - 2 + 1\\ &= 0 \end{aligned}\]Hence:

\[\begin{aligned} 1 - \alpha_{1}z - \alpha_{2}z^{2} &= (1 - z_{0}^{-1}z)^{2}\\ \frac{d}{dz}(1 - \alpha_{1}z - \alpha_{2}z^{2}) &= \frac{d}{dz}((1 - z_{0}^{-1}z)^{2})\\ \alpha_{1} + 2\alpha_{2}z &= 2z_{0}^{-1}(1 - z_{0}^{-1}z)\\ \alpha_{1} + 2\alpha_{2}z_{0} &= 2z_{0}^{-1}(1 - z_{0}^{-1}z_{0})\\ &= 0 \end{aligned}\]To summarize, in the cast of distinct roots, the solution to the homogeneous difference equation of degree 2:

\[\begin{aligned} u_{n} =&\ z_{1}^{-n} \times (\text{a polynomial of degree } m_{1} - 1) +\\ &\ z_{2}^{-n} \times (\text{a polynomial of degree } m_{2} - 1) \end{aligned}\]Where \(m_{1}, m_{2}\) are multiplicity of the root \(z_{1}, z_{2}\). In this case, \(m_{1} = m_{2} = 1\).

To summarize, in the case of repeated roots, the solution to the homogeneous difference equation of degree 2:

\[\begin{aligned} u_{n} =&\ z_{0}^{-n} \times (\text{a polynomial of degree } m_{0} - 1) \end{aligned}\]Where \(m_{0}\) is the multiplicity of the root \(z_{0}\). In this case, \(m_{0} = 2\), so we have the polynomial of degree 1 as \(c_{1} + c_{2}n\).

The general homogeneous difference equation of order p:

\[\begin{aligned} u_{n} - \alpha_{1}u_{n-1} - \cdots - \alpha_{p}u_{n-p} &= 0 \end{aligned}\] \[\begin{aligned} \alpha_{p} &\neq 0 \end{aligned}\] \[\begin{aligned} n &= p, p+1, \cdots \end{aligned}\]The associated polynomial is:

\[\begin{aligned} \alpha(z) &= 1 - \alpha_{1}z - \cdots - \alpha_{p}z^{p} \end{aligned}\]Now suppose \(\alpha(z)\) has \(r\) distinct roots, \(z_{1}\) with multiplicity \(m_{1}\), …, and \(z_{r}\) with multiplicity \(m_{r}\) such that:

\[\begin{aligned} m_{1} + m_{2} + \cdots + m_{r} &= p \end{aligned}\]Then the general solution to the difference equation is:

\[\begin{aligned} u_{n} &= z_{1}^{-n}P_{1}(n) + z_{2}^{-n}P_{2}(n) + \cdots + z_{r}^{-n}P_{r}(n) \end{aligned}\]Where \(P_{j}(n)\) is a polynomial in \(n\), of degree \(m_{j} - 1\).

Given \(p\) initial conditions, \(u_{0}, \cdots, u_{p-1}\), we can solve for \(P_{j}(n)\).

Example: ACF of an AR(2) Process

Suppose a causal AR(2) process:

\[\begin{aligned} x_{t} = \phi_{1}x_{t-1} + \phi_{2}x_{t-2} + w_{t} \end{aligned}\]Multiply each side by \(x_{t-h}\) and take expectation:

\[\begin{aligned} E[x_{t}x_{t-h}] &= \phi_{1}E[x_{t-1}x_{t-h}] + \phi_{2}E[x_{t-2}x_{t-h}] + E[w_{t}x_{t-h}] \end{aligned}\]Note that:

\[\begin{aligned} E[w_{t}x_{t-h}] &= E\Big[w_{t}\sum_{j=0}^{\infty}\psi_{j}w_{t-h-j}\Big]\\ &= 0 \end{aligned}\]Divide through by \(\gamma(0)\):

\[\begin{aligned} \gamma(h) &= \phi_{1}\gamma(h - 1) + \phi_{2}\gamma(h - 2) + 0\\[5pt] \rho(h) &= \phi_{1}\rho(h-1) + \phi_{2}\rho(h - 2) \end{aligned}\] \[\begin{aligned} \rho(h) - \phi_{1}\rho(h-1) - \phi_{2}\rho(h - 2) &= 0 \end{aligned}\]The initial conditions are:

\[\begin{aligned} \rho(0) &= 1\\ \end{aligned}\]And to get the initial conditions for \(\rho(1), \rho(-1)\), taking \(h = 1\):

\[\begin{aligned} \rho(1) &= \phi_{1}\rho(1-1) + \phi_{2}\rho(1 - 2)\\ &= \phi_{1}\rho(0) + \phi_{2}\rho(-1)\\ \phi_{1}\rho(0) &= \rho(1) - \phi_{2}\rho(-1) \end{aligned}\] \[\begin{aligned} \rho(0) &= 1\\ \rho(1) &= \rho(-1) \end{aligned}\] \[\begin{aligned} \phi_{1}\rho(0) &= \rho(-1) - \phi_{2}\rho(-1)\\ \phi_{1} &= \rho(-1)(1 - \phi_{2})\\[5pt] \rho(-1) &= \frac{\phi_{1}}{1 - \phi_{2}} \end{aligned}\]The associated polynomial:

\[\begin{aligned} \phi(z) &= 1 - \phi_{1}z - \phi_{2}z^{2} \end{aligned}\]We know the process is causal and hence both roots are outside the unit circle:

\[\begin{aligned} |z_{1}| &> 1\\ |z_{2}| & > 1 \end{aligned}\]Consider 3 cases:

1) \(z_{1}, z_{2}\) are real and distinct

\[\begin{aligned} \rho(h) &= c_{1}z_{1}^{-h} + c_{2}z_{2}^{-h} \end{aligned}\] \[\begin{aligned} \lim_{h \rightarrow}\rho(h) &\rightarrow 0 \end{aligned}\]2) \(z_{1} = z_{2} = z_{0}\) are real and repeated

\[\begin{aligned} \rho(h) &= z_{0}^{-h}(c_{1} + c_{2}h) \end{aligned}\] \[\begin{aligned} \lim_{h \rightarrow}\rho(h) &\rightarrow 0 \end{aligned}\]3) \(z_{1} = \bar{z_{2}}\) are complex conjugates

If \(z_{1}, z_{2}\) are complex conjugate, then \(c_{1}, c_{2}\) are complex conjugates too because \(\rho(h)\) is real:

\[\begin{aligned} z_{1} &= \bar{z_{2}}\\ c_{2} &= \bar{c_{1}} \end{aligned}\] \[\begin{aligned} \rho(h) &= c_{1}z_{1}^{-h} + \bar{c}_{1}\bar{z}_{1}^{-h}\\ \end{aligned}\]Rewriting in exponential form:

\[\begin{aligned} z_{1} &= |z_{1}|e^{i\theta} \end{aligned}\]And given the identity:

\[\begin{aligned} e^{i\alpha} + e^{-i\alpha} &= 2\mathrm{cos}(\alpha) \end{aligned}\]Then the solution has the form:

\[\begin{aligned} \rho(xh) &= a|z_{1}|^{-h}\mathrm{cos}(h\theta + b) \end{aligned}\]Where a and b are determined by initial conditions and:

\[\begin{aligned} \lim_{h \rightarrow}\rho(h) &\rightarrow 0 \end{aligned}\]Example: AR(2) with Complex Roots

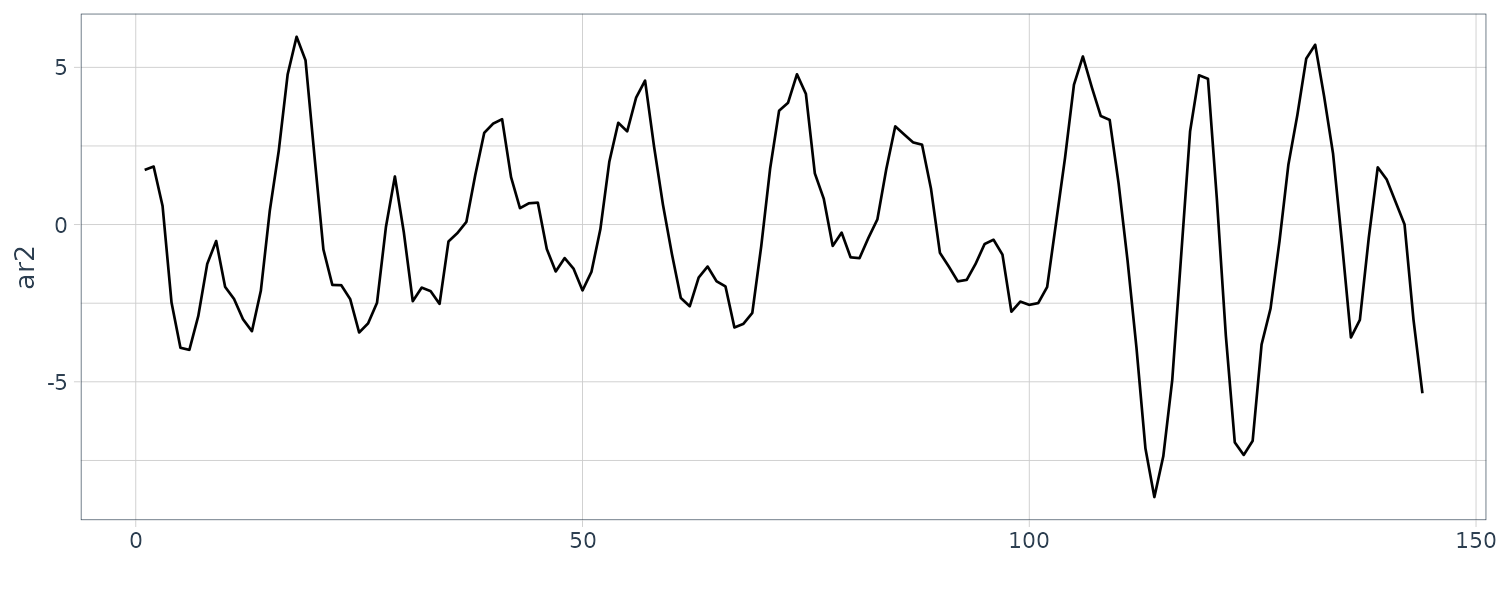

Suppose we generate \(n = 144\) observations from an AR(2) model:

\[\begin{aligned} x_{t} &= 1.5 x_{t-1} - 0.75 x_{t-2} + w_{t} \end{aligned}\] \[\begin{aligned} \sigma_{w}^{2} &= 1 \end{aligned}\]The AR polynomial is:

\[\begin{aligned} \phi(z) &= 1 - 1.5z + 0.75z^{2}\\[5pt] \phi(z) &= 1 \pm \frac{i}{\sqrt{3}}\\[5pt] \theta &= \mathrm{tan}^{-1}\Big(\frac{1}{\sqrt{3}}\Big)\\ &= \frac{2\pi}{12} \end{aligned}\]To convert \(\frac{2\pi}{12}\) rad per unit time to cycles per unit time:

\[\begin{aligned} \frac{2\pi}{12} &= \frac{2\pi}{12} \times \frac{1}{2\pi}\\ &= \frac{1}{12} \end{aligned}\]To simulate AR(2) with complex roots:

set.seed(8675309)

dat <- tsibble(

t = 1:144,

ar2 = arima.sim(

list(

order = c(2, 0, 0),

ar = c(1.5, -.75)

),

n = 144

),

index = t

)

Using R to compute the roots:

z <- polyroot(c(1, -1.5, .75))

> z

[1] 1+0.57735i 1-0.57735i

> Mod(z)

[1] 1.154701 1.154701

> Arg(z)

[1] 0.5235988 -0.5235988

arg <- Arg(z) / (2 * pi)

> arg

[1] 0.08333333 -0.08333333

> 1 / arg

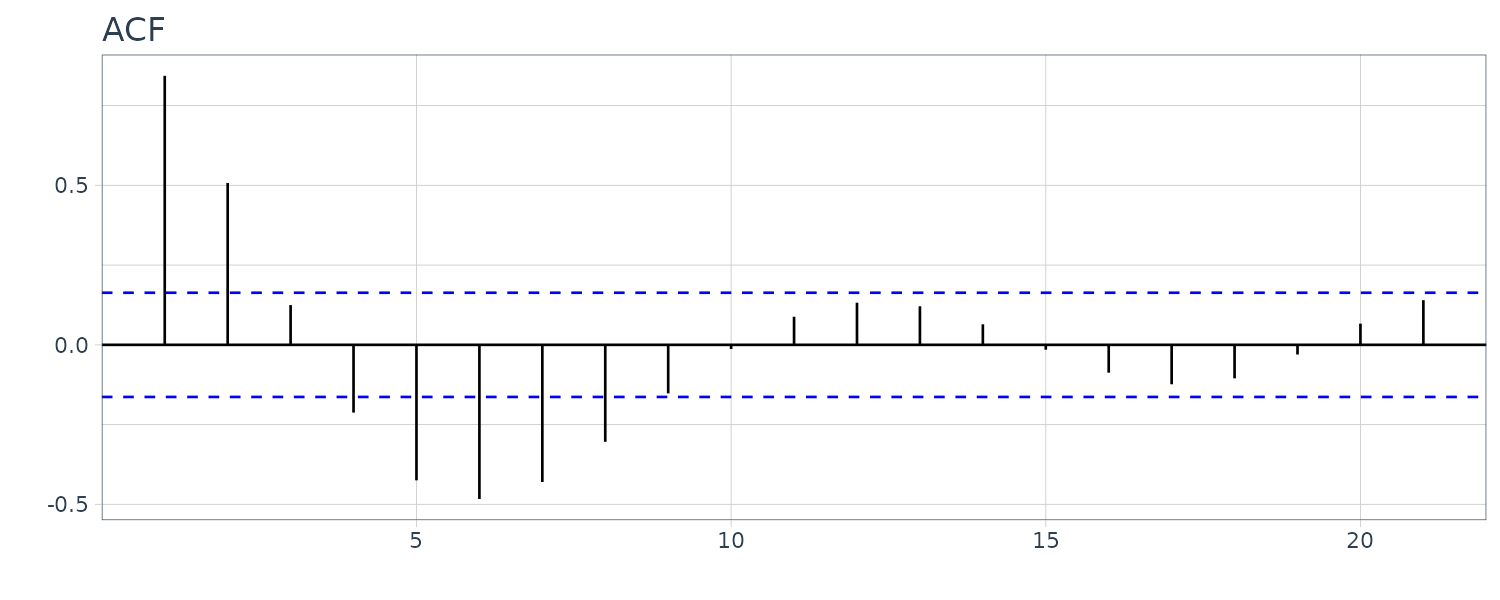

[1] 12 -12And plotting the ACF. Note that the ACF decreases exponentially and in a sinosoidal manner:

dat |>

ACF(ar2) |>

autoplot() +

xlab("") +

ylab("") +

ggtitle("ACF") +

theme_tq()

Example: \(\psi\)-weights for an ARMA(p, q) Process

For a causal ARMA(p, q) model, where the zeros of \(\phi(z)\) are outside the unit circle:

\[\begin{aligned} \phi(B)x_{t} &= \theta(B)w_{t}\\ x_{t} &= \frac{\theta(B)}{\phi(B)}w_{t}\\ x_{t} &= \sum_{j = 0}^{\infty}\psi_{j}w_{t-j} \end{aligned}\]To solve for the weights in general, we match the coefficients:

\[\begin{aligned} (1 - \phi_{1}z - \phi_{2}z^{2} - \cdots -\phi_{p}z^{p})(\psi_{0} + \psi_{z} + \psi_{2}z^{2} + \cdots) &= (1 + \theta_{1}z + \theta_{2}z^{2} + \cdots) \end{aligned}\]The first few values are:

\[\begin{aligned} \psi_{0} &= 1\\ \psi_{1} - \phi_{1}\psi_{0} &= \theta_{1}\\ \psi_{2} - \phi_{1}\psi_{1} - \phi_{2}\psi_{0} &= \theta_{2}\\ \psi_{3} - \phi_{1}\psi_{2} - \phi_{2}\psi_{1} - \phi_{3}\psi_{0} &= \theta_{3}\\ \ \ \vdots \end{aligned}\] \[\begin{aligned} \phi_{j} &= 0\\ j &> p \end{aligned}\] \[\begin{aligned} \theta_{j} &= 0\\ j &> q \end{aligned}\]The \(\psi\)-weights satisfy the following homogeneous difference equation:

\[\begin{aligned} \psi_{j} - \sum_{k=1}^{p}\phi_{k}\psi_{j-k} &= 0 \end{aligned}\] \[\begin{aligned} j &\geq \text{max}(p, q + 1) \end{aligned}\]With initial conditions:

\[\begin{aligned} \psi_{j} - \sum_{k=1}^{p}\phi_{k}\psi_{j-k} &= \theta_{j} \end{aligned}\] \[\begin{aligned} 0 \leq j < \text{max}(p, q + 1) \end{aligned}\]Consider the ARMA(1, 1) process:

\[\begin{aligned} x_{t} &= 0.9x_{t-1} + 0.5w_{t-1} + w_{t}\\ \end{aligned}\]With \(\text{max}(p, q + 1) = 2\):

\[\begin{aligned} \psi_{0} &= 1\\ \end{aligned}\] \[\begin{aligned} \psi_{1} - \phi_{1}\psi_{0} &= 0.5\\ \end{aligned}\] \[\begin{aligned} \psi_{1} &= 0.5 + \phi_{1}\psi_{0}\\ \psi_{1} &= 0.5 + 0.9 \times 1\\ &= 1.4 \end{aligned}\]The remaining \(\psi\)-weights:

\[\begin{aligned} \psi_{j} - 0.9\psi_{j-1} &= 0\\ \end{aligned}\] \[\begin{aligned} \psi_{j} &= 0.9\psi_{j-1}\\ \psi_{2} &= 0.9 \times 1.4\\ \psi_{3} &= 1.4(0.9^{2})\\ \ \ \vdots\\ \psi_{j} &= 1.4(0.9^{j-1})\\ \end{aligned}\]> ARMAtoMA(ar = 0.9, ma = 0.5, 50) |>

head()

[1] 1.400000 1.260000 1.134000 1.020600 0.918540 0.826686

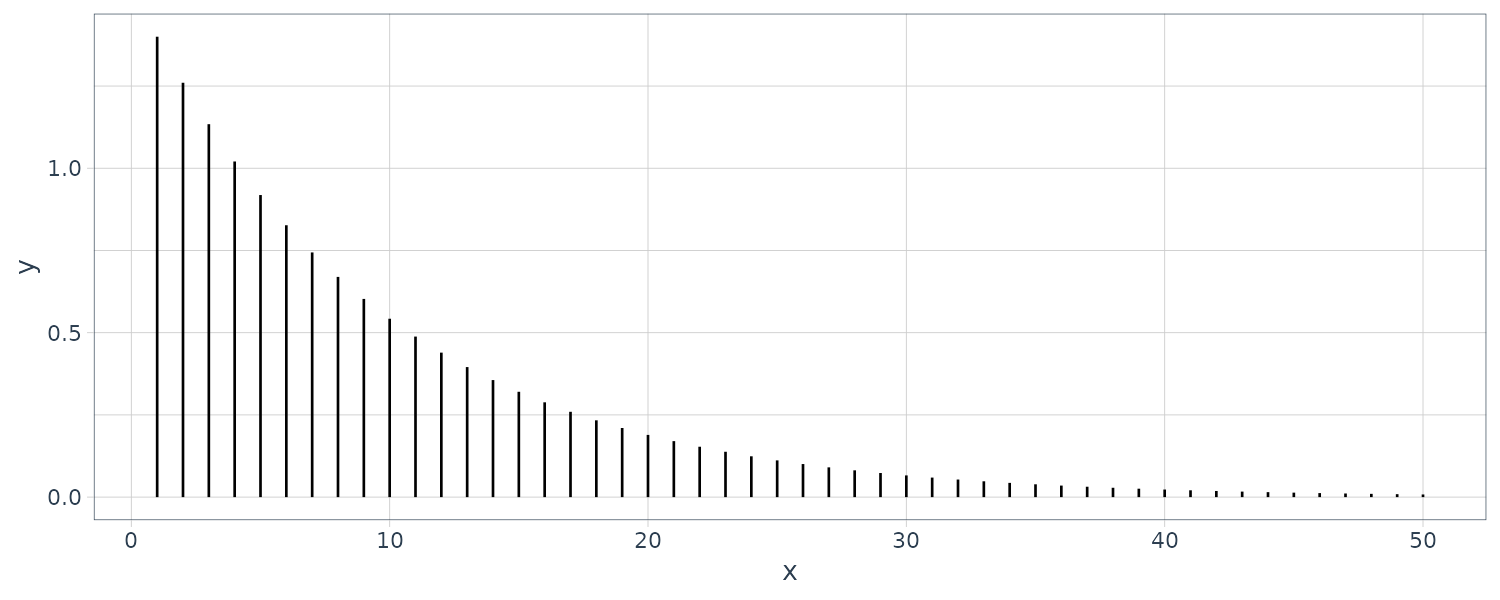

tibble(

x = 1:50,

acf = ARMAtoMA(ar = 0.9, ma = 0.5, 50)

) |>

ggplot(aes(x = x, y = y)) +

geom_segment(

aes(x = x, xend = x, y = 0, yend = acf)

) +

theme_tq()

Autocorrelation and Partial Autocorrelation

MA(q) Model

Consider the MA(q) process \(x_{t}\). The process is stationary with mean 0:

\[\begin{aligned} E[x_{t}] &= \sum_{j=0}^{q}\theta_{j}E[w_{t-j}]\\ &= 0\\ \end{aligned}\] \[\begin{aligned} \theta_{0}&= 1 \end{aligned}\]With autocovariance function:

\[\begin{aligned} \gamma(h) &= \textrm{cov}(x_{t+h}, x_{t})\\ &= E[x_{t+h}x_{t}]\\ &= E\Big[\sum_{j=0}^{q}\theta_{j}w_{t+h-j}\sum_{j=0}^{q}\theta_{j}w_{t-j}\Big]\\ &= \begin{cases} \sigma_{w}^{2}\sum_{j=0}^{q-h}\theta_{j}\theta_{j+h} & 0 \leq h \leq q\\ 0 & h > q \end{cases} \end{aligned}\]And variance:

\[\begin{aligned} \gamma(0) &= \sigma_{w}^{2}\sum_{j=0}^{q}\theta_{j}\theta_{j}\\ &= \sigma_{w}^{2}(1 + \theta_{1}^{2} + \cdots + \theta_{q}^{2}) \end{aligned}\]The cutting off of \(\gamma(h)\) after \(q\) lags is the signature of the MA(q) model.

The ACF:

\[\begin{aligned} \rho(h)&= \begin{cases} \frac{\sum_{j=0}^{q-h}\theta_{j}\theta_{j+h}}{1 + \theta_{1}^{2} + \cdots + \theta_{q}^{2}} & 1 \leq h \leq q\\ 0 & h > q \end{cases} \end{aligned}\]ARMA(p, q) Model

Consider the causal ARMA(p, q) process \(x_{t}\). The process is stationary with mean 0:

\[\begin{aligned} x_{t} &= \sum_{j=0}^{\infty}\psi_{j}w_{t-j} \end{aligned}\] \[\begin{aligned} E[x_{t}] &= 0 \end{aligned}\]The autocovariance function:

\[\begin{aligned} \gamma(h) &= \textrm{cov}(x_{t+h}, x_{t})\\ &= E\Big[\sum_{j=0}^{\infty}\psi_{j}w_{t+h-j}\sum_{j=0}^{\infty}\psi_{j}w_{t-j}\Big]\\ &= \sigma_{w}^{2}\sum_{j=0}^{\infty}\psi_{j}\psi_{j+h} \end{aligned}\] \[\begin{aligned} h &\geq 0 \end{aligned}\]We could obtain a homogeneous difference equation directly in terms of \(\gamma(h)\):

\[\begin{aligned} \gamma(h) &= \textrm{cov}(x_{t+h}, x_{t})\\ &= E\Big[\Big(\sum_{j=1}^{p}\phi_{j}x_{t+h-j} + \sum_{j=1}^{q}\theta_{j}w_{t+h-j}\Big)x_{t}\Big]\\ &= E\Big[\sum_{j=1}^{p}\phi_{j}x_{t+h-j}x_{t}\Big] + E\Big[\sum_{j=1}^{q}\theta_{j}w_{t+h-j}x_{t}\Big]\\ &= \sum_{j= 1}^{p}\phi_{j}\gamma(h-j) + \sigma_{w}^{2}\sum_{j=h}^{q}\theta_{j}\psi_{j-h} \end{aligned}\]Using the fact that:

\[\begin{aligned} \textrm{cov}(w_{t+h-j}, x_{t}) &= \textrm{cov}\Big(w_{t+h-j}, \sum_{k=0}^{\infty}\psi_{k}w_{t-k}\Big)\\ &= E\Big[w_{t+h-j}\sum_{k=0}^{\infty}\psi_{k}w_{t-k}\Big]\\ &= \psi_{j-h}\sigma_{w}^{2} \end{aligned}\]We can write a general homogeneous equation for the autocovariance function of a causal ARMA process:

\[\begin{aligned} \gamma(h) - \sum_{j=1}^{p}\phi_{j}\gamma(h-j) &= \sigma_{w}^{2}\sum_{j=h}^{q}\theta_{j}\psi_{j-h} \end{aligned}\] \[\begin{aligned} 0 \leq h < \text{max}(p, q + 1) \end{aligned}\] \[\begin{aligned} \gamma(h) - \phi_{1}\gamma(h-1) - \cdots - \phi_{p}\gamma(h-p) &= 0 \end{aligned}\] \[\begin{aligned} h \geq \text{max}(p, q+1) \end{aligned}\]Example: ACF of AR(p)

For the general case:

\[\begin{aligned} x_{t} = \phi_{1}x_{t-1} + \cdots + \phi_{p}x_{t-p} + w_{t} \end{aligned}\]It follows that:

\[\begin{aligned} \rho(h) - \phi_{1}\rho(h - 1) - \cdots - \phi(p)\rho(h-p) &= 0 \end{aligned}\] \[\begin{aligned} h &\geq p \end{aligned}\]The general solution to the above difference solution (\(z_{j}\) are roots of \(\phi(x)\)):

\[\begin{aligned} \rho(h) &= z_{1}^{-h}P_{1}(h) + \cdots + z_{r}^{-h}P_{r}(h) \end{aligned}\] \[\begin{aligned} m_{1} + \cdots + m_{r} &= p \end{aligned}\]Where \(P_{j}(h)\) is a polynomial in \(h\) of degree \(m_{j}-1\).

If the roots are real, then \(\rho(h)\) dampens exponentially fast to zero. If some of the roots are complex, then \(\rho(h)\) will dampen in a sinusoidal fashion.

Example: ACF of ARMA(1, 1)

Consider the ARMA(1, 1) process:

\[\begin{aligned} x_{t} &= \phi x_{t-1} + \theta w_{t-1} + w_{t} \end{aligned}\] \[\begin{aligned} |\phi| &< 1 \end{aligned}\]The autocovariance function satisfies:

\[\begin{aligned} \gamma(h) - \phi \gamma(h-1) &= 0\\ h = 2, 3, \cdots \end{aligned}\]It follows from the general homogeneous equation for the ACF of a causal ARMA process for \(h \geq \text{max}(p, q+1)\):

\[\begin{aligned} \gamma(h) &= c\phi^{h}\\ h &= 1, 2, \cdots \end{aligned}\]Recall the \(\psi\)-weights for a ARMA(1, 1) process:

\[\begin{aligned} \psi_{0} &= 1\\ \psi_{1} - \phi\psi_{0} &= \theta\\ \psi_{1} &= \theta + \phi\psi_{0}\\ &= \theta + \phi\\ \psi_{2} &= \phi \psi_{1}\\ &= \phi(\theta + \phi)\\ &= \theta\phi + \phi^{2} \end{aligned}\]The initial conditions, we use the equations for the generic ARMA(p, q):

\[\begin{aligned} \gamma(0) &= \phi\gamma(-1) + \sigma_{w}^{2}\sum_{j=0}^{1}\theta_{j}\psi_{j}\\ &= \phi\gamma(1) + \sigma_{w}^{2}(1 + \theta(\theta + \phi))\\ &= \phi \gamma(1) + \sigma_{w}^{2}(1 + \theta \phi + \theta^{2})\\[5pt] \gamma(1) &= \phi \gamma(0) + \sigma_{w}^{2}\theta \end{aligned}\]Solving for \(\gamma(0)\) and \(\gamma(1)\):

\[\begin{aligned} \gamma(0) &= \sigma_{w}^{2}\frac{1 + 2\theta\phi + \theta^{2}}{1 - \phi^{2}}\\[5pt] \gamma(1) &= \sigma_{w}^{2}\frac{(1 + \theta \phi)(\phi + \theta)}{1 - \phi^{2}} \end{aligned}\]To solve for c:

\[\begin{aligned} \gamma(1) &= c\phi\\ c &= \frac{\gamma(1)}{\phi} \end{aligned}\]The specific solution for \(h \geq 1\):

\[\begin{aligned} \gamma(h) &= \frac{\gamma(1)}{\phi}\phi^{h}\\ &= \sigma_{w}^{2}\frac{(1 + \theta \phi)(\phi + \theta)}{1 - \phi^{2}}\phi^{h-1} \end{aligned}\]Dividing by \(\gamma(0)\):

\[\begin{aligned} \rho(h) &= \frac{\gamma(h)}{\gamma(0)}\\[5pt] &= \frac{\sigma_{w}^{2}\frac{(1 + \theta \phi)(\phi + \theta)}{1 - \phi^{2}}\phi^{h-1}}{\sigma_{w}^{2}\frac{1 + 2\theta\phi + \theta^{2}}{1 - \phi^{2}}}\\[5pt] &= \frac{(1 + \theta \phi)(\phi + \theta)}{1 + 2\theta\phi + \theta^{2}}\phi^{h-1} \end{aligned}\]Note that the general pattern of ACF for ARMA(1, 1) is the same as AR(1).

Partial Autocorrelation Function (PACF)

Recall that if \(X, Y, Z\) are random variables, the the partial correlation between \(X, Y\) given \(Z\) is obtained by regressing \(X\) on \(Z\) to obtain \(\hat{X}\), and similarly \(\hat{Y}\) and calculating the partial correlation:

\[\begin{aligned} \rho_{XY|Z} &= \text{corr}(X - \hat{X}, Y - \hat{Y}) \end{aligned}\]Consider a causal AR(1) model:

\[\begin{aligned} x_{t} &= \phi x_{t-1} + w_{t} \end{aligned}\]The autocovariance function:

\[\begin{aligned} \gamma_{x}(2) &= \textrm{cov}(x_{t}, x_{t-2})\\ &= \textrm{cov}(\phi x_{t-1} + w_{t}, x_{t-2})\\ &= \textrm{cov}(\phi^{2}x_{t-2} + \phi w_{t-1} + w_{t}, x_{t-2})\\ &= E[(\phi^{2}x_{t-2} + \phi w_{t-1} + w_{t}) x_{t-2}]\\ &= E[(\phi^{2}x_{t-2}x_{t-2}] + 0\\ &= \phi^{2}\gamma_{x}(0) \end{aligned}\]The correlation between \(x_{t}, x_{t-2}\) is not zero, because \(x_{t}\) is dependent on \(x_{t-2}\) through \(x_{t-1}\). We can break the dependence by:

\[\begin{aligned} \textrm{cov}(x_{t} - \phi x_{t-1}, x_{t-2} - \phi x_{t-1}) &= \textrm{cov}(\phi x_{t-1} + w_{t} - \phi{x}_{t-1}, x_{t-2} - \phi x_{t-1})\\ &= \textrm{cov}(w_{t}, x_{t-2} - \phi x_{t-1})\\ &= 0 \end{aligned}\]We define \(\hat{x}_{t}\) as the regression of \(x_{t+h}\) on \(\{x_{t+1}, \cdots, x_{t+h-1}\}\):

\[\begin{aligned} \hat{x}_{t+h} &= \beta_{1}x_{t+h-1} + \beta_{2}x_{t+h-2} + \cdots + \beta_{h-1}x_{t+1} \end{aligned}\]Note if the mean of \(x_{t}\) is not 0, we can replace \(x_{t}\) by \(x_{t} - \mu\).

We define \(\hat{x}_{t}\) to be the regression of \(x_{t}\) on \(\{x_{t+1}, \cdots, x_{t+h-1}\}\):

\[\begin{aligned} \hat{x}_{t} &= \beta_{1}x_{t+1} + \beta_{2}x_{t+2} + \cdots + \beta_{h-1}x_{t+h-1} \end{aligned}\]In addition, note that because of stationarity, we have the same set of coefficients \(\beta_{1}, \cdots, \beta_{h-1}\) for \(\hat{x}_{t}\). This will be evident in the examples later.

The PACF of a stationary process \(x_{t}\):

\[\begin{aligned} \phi_{11} &= \rho(x_{t+1}, x_{t})\\ &= \rho(1) \end{aligned}\] \[\begin{aligned} \phi_{hh} &= \rho(x_{t+h} - \hat{x}_{t+h}, x_{t} - \hat{x}_{t}) \end{aligned}\] \[\begin{aligned} h &\geq 2 \end{aligned}\]The reason why we are using a double subscript \(\phi_{hh}\) will be evident when we cover the forecast section. It is the coefficient for \(x_{1}\) when predicting \(x_{p+1}^{p}\).

The PACF is the correlation between \(x_{t+h}, x_{t}\) with the linear dependence of each of \(\{x_{t+1}, \cdots, x_{t+h-1}\}\) removed.

If the process \(x_{t}\) is Gaussian, then \(\phi_{hh}\) is the correlation coefficient between \(x_{t+h}, x_{t}\) in the bivariate distribution of \((x_{t+h}, x_{t})\) conditional on \(\{x_{t+1}, \cdots, x_{t+h-1}\}\):

\[\begin{aligned} \phi_{hh} &= \text{corr}(x_{t+h}, x_{t}|x_{t+1}, \cdots, x_{t+h-1}) \end{aligned}\]Example: The PACF of AR(1)

Consider:

\[\begin{aligned} x_{t} &= \phi x_{t-1} + w_{t} \end{aligned}\] \[\begin{aligned} |\phi| &<1 \end{aligned}\]By definition:

\[\begin{aligned} \phi_{11}&= \rho(1) \\ &= \phi \end{aligned}\]We choose \(\beta\) to minimize:

\[\begin{aligned} \hat{x}_{t+2} &= \beta x_{t+2-1}\\ &= \beta x_{t+1} \end{aligned}\] \[\begin{aligned} E[(x_{t+2} - \hat{x}_{t+2})^{2}] &= E[(x_{t+2} - \beta x_{t+1})^{2}]\\ &= E[x_{t+2}^{2}] - 2\beta E[x_{t+2}x_{t+1}] + \beta^{2}E[x_{t+1}^{2}]\\ &= \gamma(0) - 2\beta \gamma(1) + \beta^{2}\gamma(0) \end{aligned}\]Taking derivatives w.r.t \(\beta\):

\[\begin{aligned} \frac{d}{d\beta}(\gamma(0) - 2\beta \gamma(1) + \beta^{2}\gamma(0)) &=0\\ 0 - 2\gamma(1) + 2\beta\gamma(0) &= 0 \end{aligned}\] \[\begin{aligned} \beta &= \frac{\gamma(1)}{\gamma(0)}\\ &= \rho(1)\\ &= \phi \end{aligned}\]Next we consider:

\[\begin{aligned} \hat{x}_{t} &= \beta x_{t+1} \end{aligned}\] \[\begin{aligned} E[(x_{t} - \hat{x}_{t})^{2}] &= E[(x_{t} - \beta x_{t+1})^{2}]\\ &= E[x_{t}^{2}] - 2\beta E[x_{t}x_{t+1}] + \beta^{2}E[x_{t+1}^{2}]\\ &= \gamma(0) - 2\beta \gamma(1) + \beta^{2}\gamma(0) \end{aligned}\]This is the same equation as above, so \(\beta = \phi\).

Hence by causality:

\[\begin{aligned} \phi_{22} &= \text{corr}(x_{t+2} - \hat{x}_{t+2}, x_{t} - \hat{x}_{t})\\ &= \text{corr}(x_{t+2} - \phi x_{t+1}, x_{t} - \phi x_{t+1})\\ &= \text{corr}(w_{t+2}, x_{t} - \phi x_{t+1})\\ &= 0 \end{aligned}\]We will see in the next example that in fact \(\phi_{hh} = 0\) for all \(h>1\).

Example: PACF of AR(p)

Consider the AR(p) model:

\[\begin{aligned} x_{t+h} &= \sum_{j=1}^{p}\phi_{j}x_{t+h-j} + w_{t+h} \end{aligned}\]The regression of \(x_{t+h}\) on \(\{x_{t+1, \cdots, x_{t+h-1}}\}\) when \(h > p\) is (we will prove this later):

\[\begin{aligned} \hat{x}_{t+h} &= \sum_{j=1}^{p}\phi_{j}x_{t+h-j} \end{aligned}\]And when \(h > p\):

\[\begin{aligned} \hat{x}_{t+h} &= \beta_{1}x_{t+h-1} + \beta_{2}x_{t+h-2} + \cdots + \beta_{h-1}x_{t+h-p} \end{aligned}\] \[\begin{aligned} \hat{x}_{t} &= \beta_{1}x_{t+1} + \beta_{2}x_{t+2} + \cdots + \beta_{h-1}x_{t+h-1} \end{aligned}\] \[\begin{aligned} \phi_{hh} &= \text{corr}(x_{t+h} - \hat{x_{t+h}}, x_{t}-\hat{x}_{t})\\ &= \text{corr}(w_{t+h}, x_{t} - \hat{x}_{t})\\ &= \text{corr}(w_{t+h}, x_{t} - \sum_{j}^{h-1}\beta_{j}x_{t+j})\\ &= 0 \end{aligned}\]We will see later than in fact:

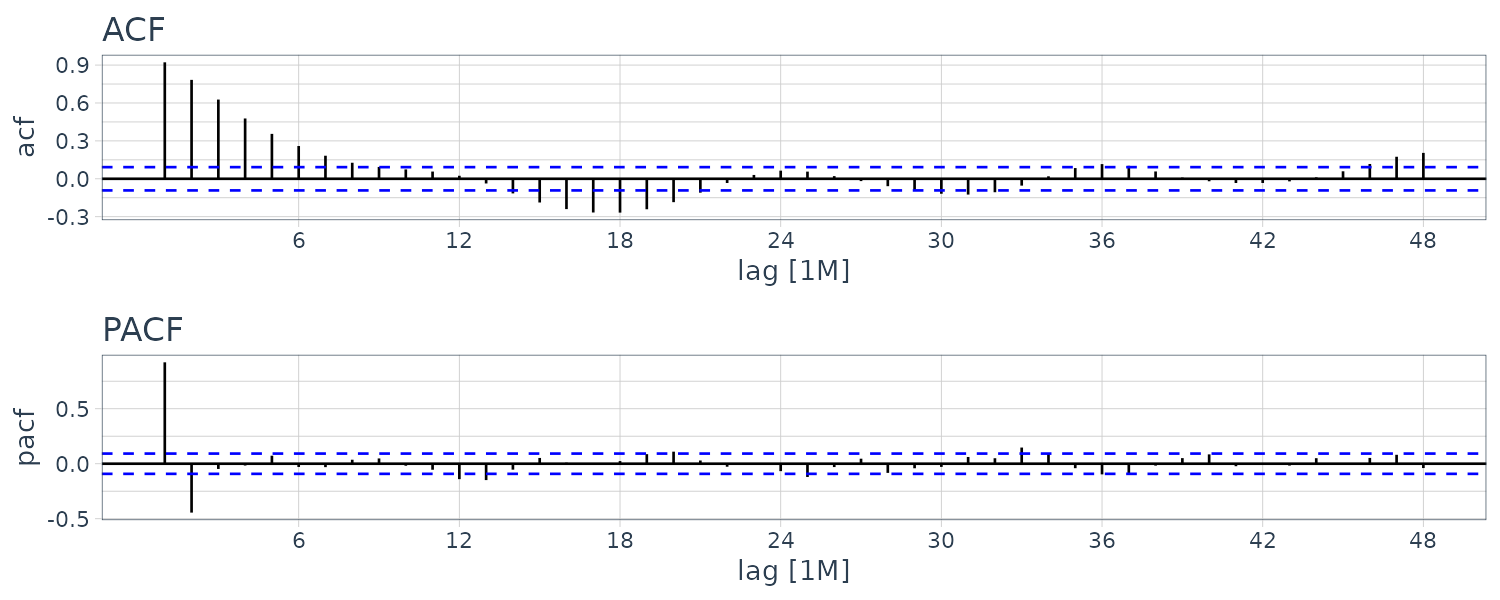

\[\begin{aligned} \phi_{pp} &= \phi_{p} \end{aligned}\]Let’s plot the ACF and PACF for \(\phi_{1} = 1.5\) and \(\phi_{2} = -0.75\):

dat <- tibble(

acf = ARMAacf(

ar = c(1.5, -.75),

ma = 0,

24

)[-1],

pacf = ARMAacf(

ar = c(1.5, -.75),

ma = 0,

24,

pacf = TRUE

),

lag = 1:24

)

Example: PACF of an Invertible MA(q)

For an invertible MA(q) model, we can write as an infinite AR model:

\[\begin{aligned} x_{t} &= -\sum_{j=1}^{\infty}\pi_{j}x_{t-j} + w_{t} \end{aligned}\]Similar to the case of AR(p), the PACF will never cut off.

We can show that for MA(1) model in general (using similar calculation for AR(1) model), the PACF:

\[\begin{aligned} \phi_{hh} &= -\frac{(-\theta)^{h}(1 - \theta^{2})}{1 - \theta^{2(h + 1)}} \end{aligned}\] \[\begin{aligned} h &\geq 1 \end{aligned}\]The PACF for MA models behave like ACF for AR models. Also, the PACF for AR models behave like ACF for MA models.

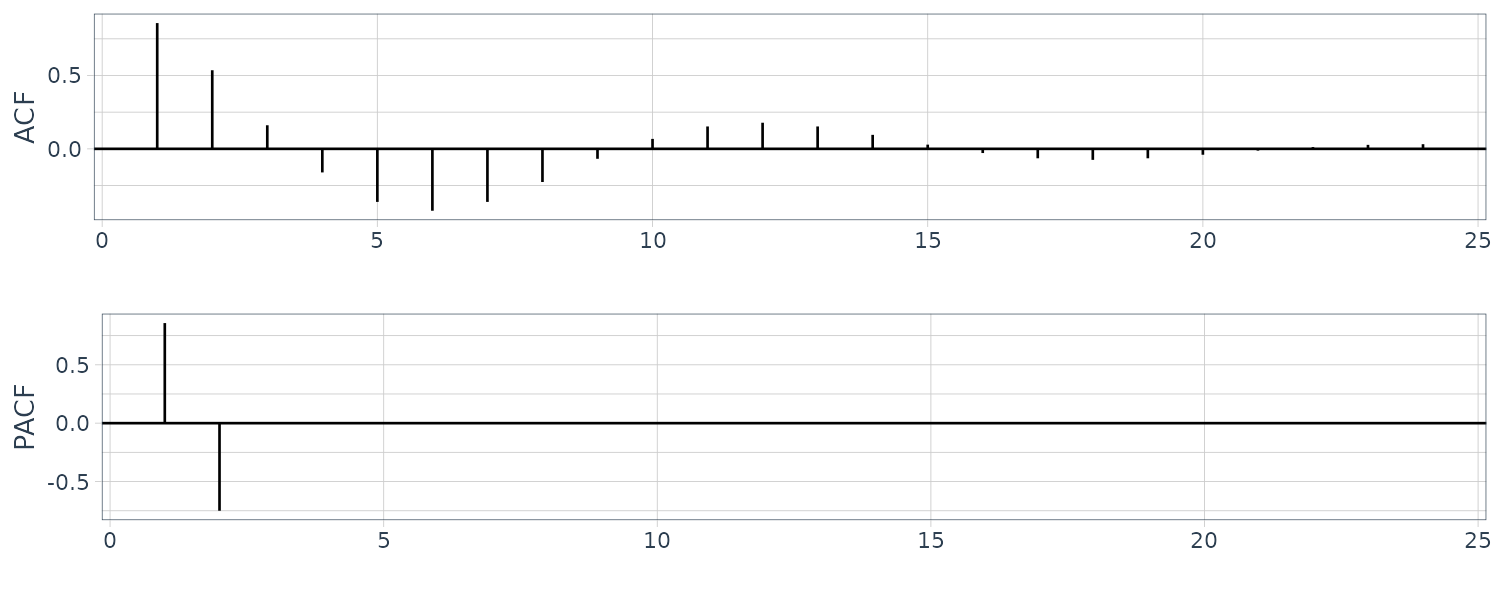

Example: Preliminary Analysis of the Recruitment Series

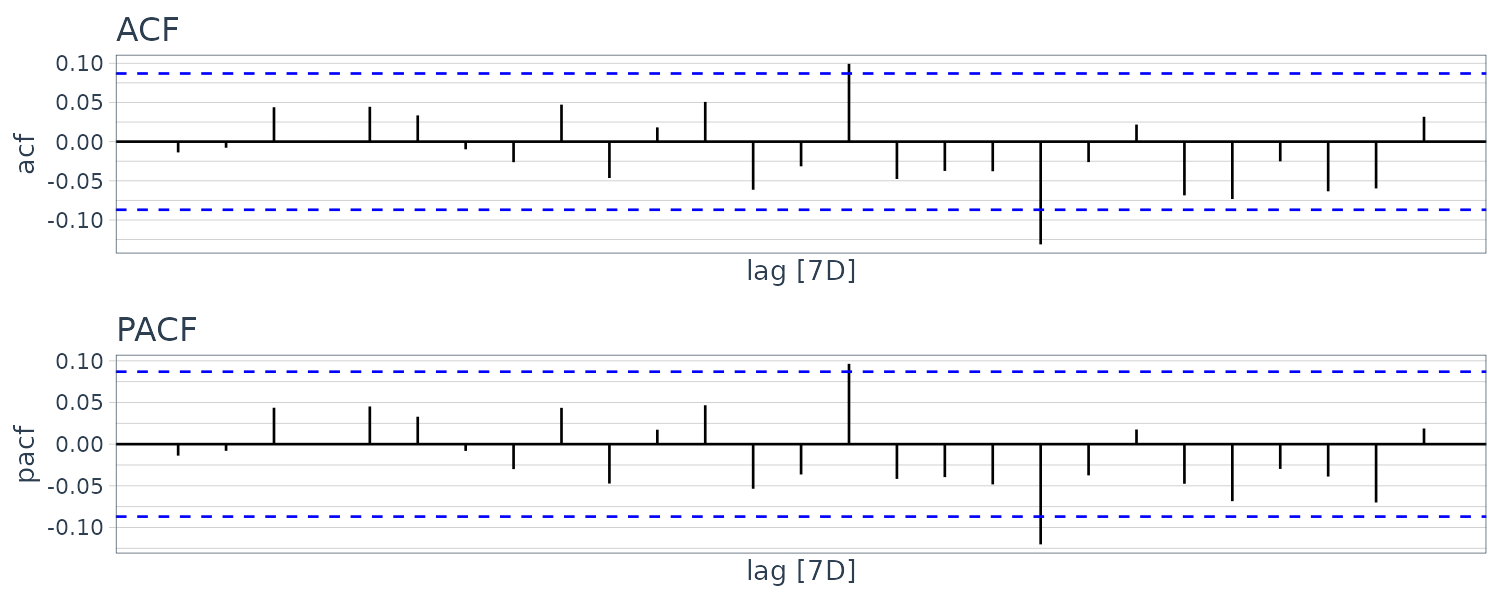

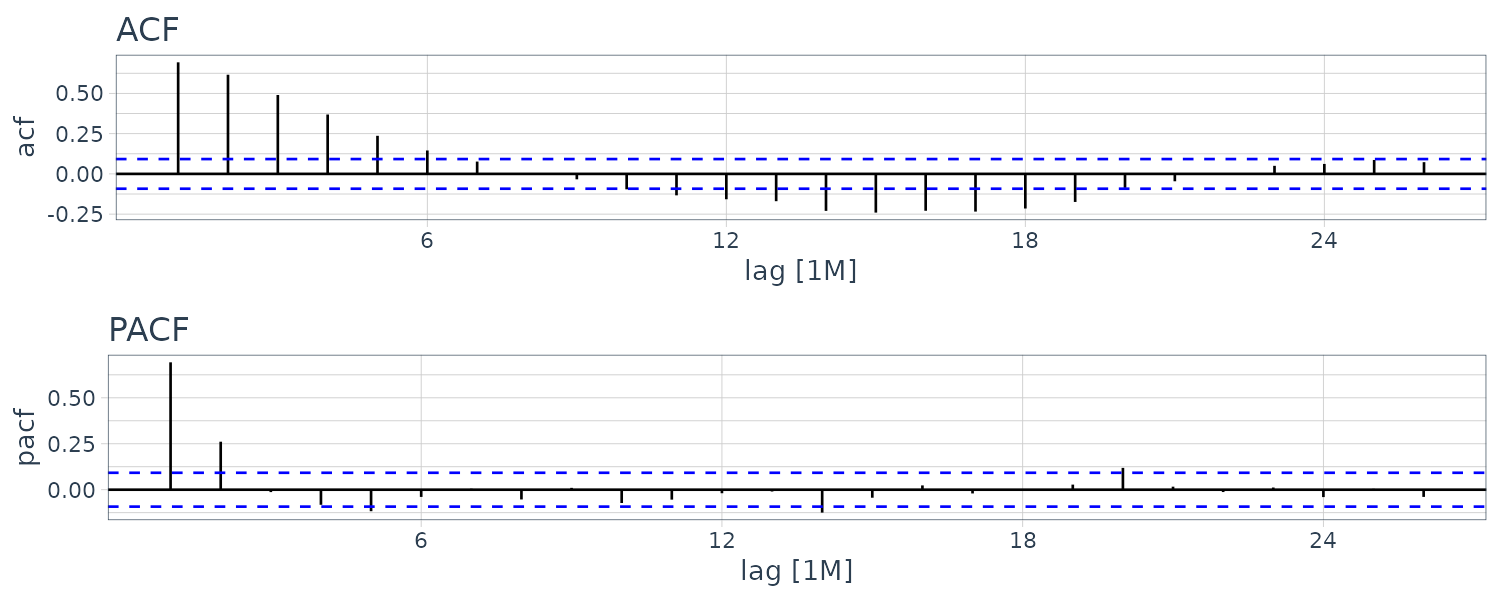

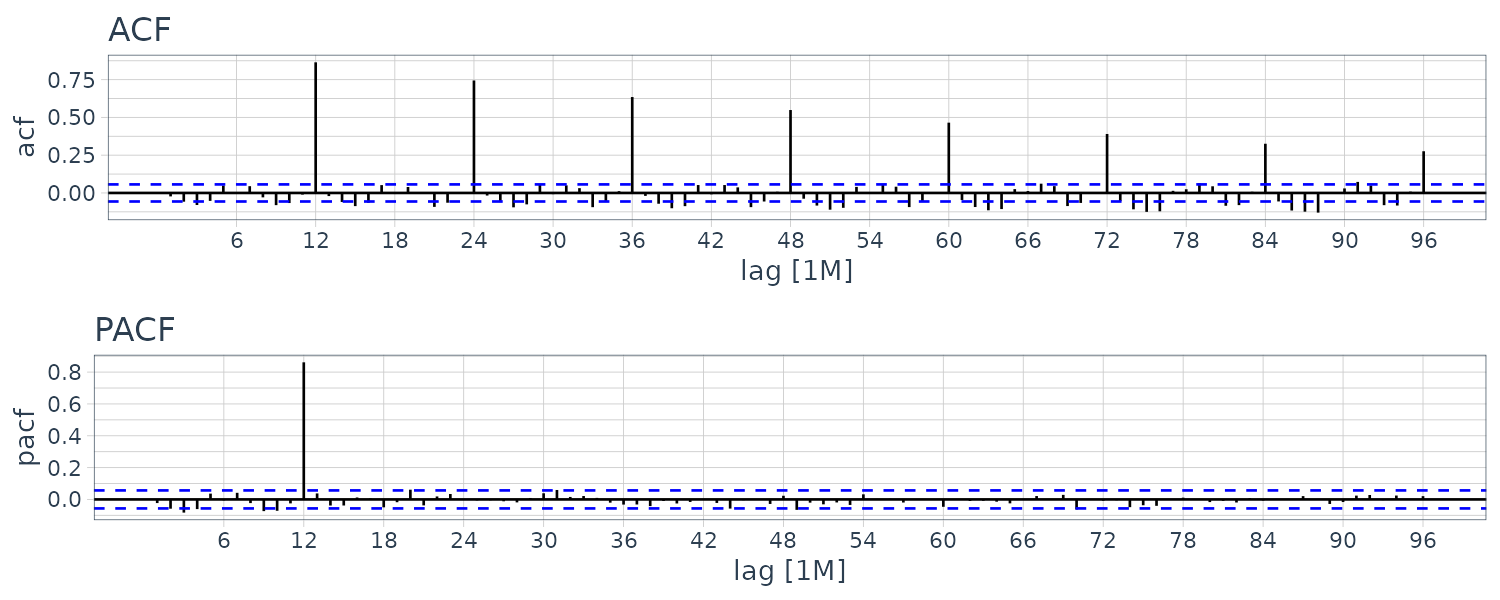

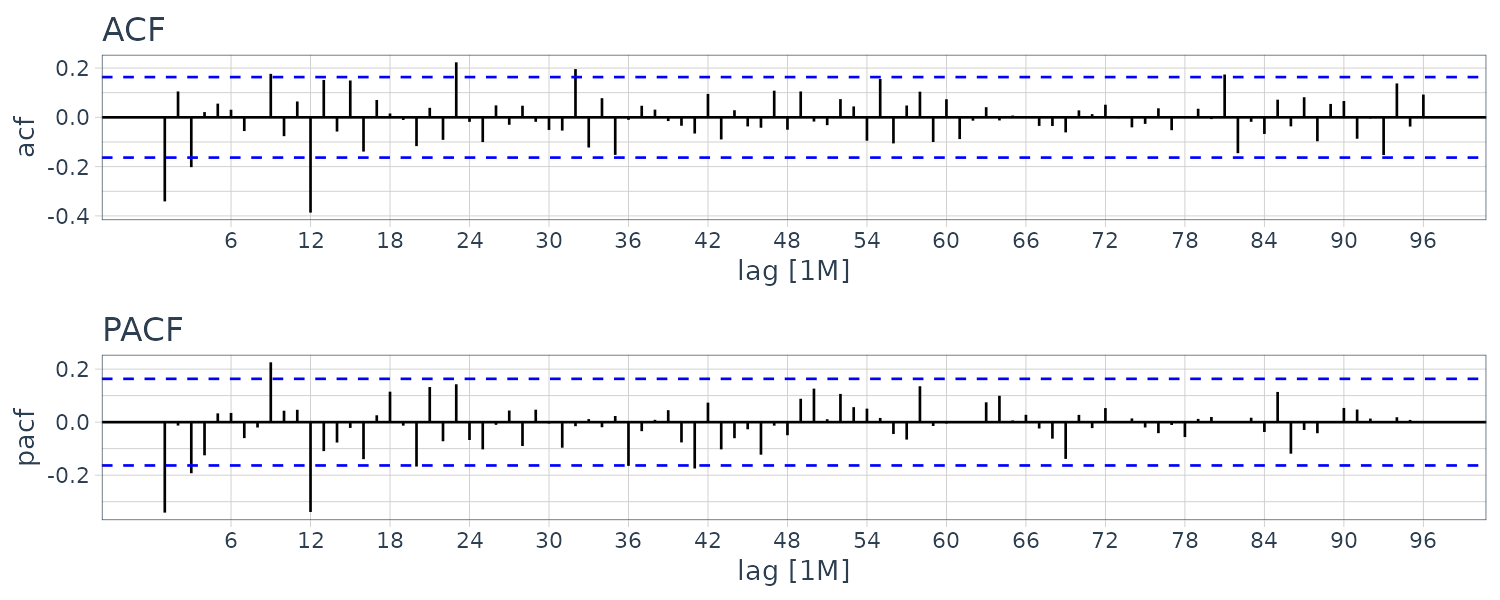

Let’s look at the ACF and PACF of the Recruitment series:

The ACF seem to have roughly 12-month period cycles. PACF only have 2 main significant values. This suggest a AR(2) model:

\[\begin{aligned} x_{t} &= \phi_{0} + \phi_{1}x_{t-1} + \phi_{2}x_{t-2} + w_{t} \end{aligned}\]Next we fit the model and get the following coefficients:

\[\begin{aligned} \hat{\phi}_{0} &= 6.80_{(0.44)}\\[5pt] \hat{\phi}_{1} &= 1.35_{(0.0416)}\\[5pt] \hat{\phi}_{2} &= -0.46_{(0.0417)} \end{aligned}\] \[\begin{aligned} \hat{\sigma}_{w}^{2} &= 89.9 \end{aligned}\]fit <- rec |>

model(

ARIMA(value ~ pdq(2, 0, 0) + PDQ(0, 0, 0))

)

> tidy(fit) |>

select(-.model)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 ar1 1.35 0.0416 32.5 1.98e-120

2 ar2 -0.461 0.0417 -11.1 2.29e- 25

3 constant 6.80 0.440 15.4 1.48e- 43

> fit |>

glance() |>

pull(sigma2)

year

89.92993 Forecasting

In forecasting, the goal is to predict future values of a time series \(x_{n+m}\), given data collected to the present:

\[\begin{aligned} x_{1:n} &= {x_{1}, x_{2}, \cdots, x_{n}} \end{aligned}\]We will assume the time series is stationary and the model parameters known. We will touch on parameter estimation in the next section.

We define the minimum mean square error predictor as:

\[\begin{aligned} x_{n+m}^{n} &= E[x_{n+m}|x_{1:n}] \end{aligned}\]For example, \(x_{2}^{1}\) is a one-step-ahead linear forecast of \(x_{2}\) given \(x_{1}\). \(x_{3}^{2}\) is a one-step-ahead linear forecast of \(x_{3}\) given \(x_{1}, x_{2}\).

It is the minimum because the conditional expectation minimizes the mean square error:

\[\begin{aligned} E[(x_{n+m} - g(x_{1:n}))^{2}] &= E[(x_{n+m} - x_{n+m}^{n}) ^{2}] \end{aligned}\]Where \(g(x_{1:n})\) is a function of the observations.

Firstly, we shall restrict to linear functions of the data and predictors of the form:

\[\begin{aligned} x_{n+m}^{n} &= \alpha_{0} + \sum_{k=1}^{n}\alpha_{k}x_{k} \end{aligned}\]Such linear form that minimizes the mean square prediction error (if the process is Gaussian) are known as Best Linear Predictors (BLPs). Such linear prediction depends only on the second-order moments of the process (which is easy to estimate) as we shall see later.

The BLP \(x_{n+m}^{n}\) can be found by solving the “Prediction Equations”:

\[\begin{aligned} E[(x_{n+m} - x_{n+m}^{n})x_{k}] &= 0 \end{aligned}\] \[\begin{aligned} k &= 0, 1, \cdots, n \end{aligned}\] \[\begin{aligned} x_{0} &= 1 \end{aligned}\]The prediction equations can be shown to be true by using least squares:

\[\begin{aligned} Q &= E\Big[\Big(x_{n+m} - \sum_{k=0}^{n}\alpha_{k}x_{k}\Big)^{2}\Big] \end{aligned}\] \[\begin{aligned} \frac{\partial Q}{\partial \alpha_{j}} &= 0 \end{aligned}\]The first equation \(k = 0\):

\[\begin{aligned} E[(x_{n+m} - x_{n+m}^{n})x_{0}] &= 0\\ x_{0} &= 1\\ E[x_{n+m} - x_{n+m}^{n}] &= 0\\ E[x_{n+m}] - E[x_{n+m}^{n}] &= 0 \end{aligned}\] \[\begin{aligned} E[x_{n+m}^{n}] &= E[x_{n+m}]\\ E[x_{n+m}^{n}] &= \mu\\ \end{aligned}\]Hence:

\[\begin{aligned} E[x_{n+m}^{n}] &= \alpha_{0} + \sum_{k=1}^{n}\alpha_{k}E[x_{k}]\\ \mu &= \alpha_{0} + \sum_{k=1}^{n}\alpha_{k}\mu\\ \alpha_{0} &= \mu\Big(1 - \sum_{k=1}^{n}\alpha_{k}\Big) \end{aligned}\]Hence, the BLP is:

\[\begin{aligned} x_{n+m}^{n} &= \alpha_{0} + \sum_{k=1}^{n}\alpha_{k}x_{k}\\ & =\mu - \mu\sum_{k=1}^{n}\alpha_{k} + \sum_{k=1}^{n}\alpha_{k}x_{k}\\ &= \mu + \sum_{k=1}^{n}\alpha_{k}(x_{k} - \mu) \end{aligned}\]There is no loss of generality in considering the case that \(\mu = 0\) which result in \(\alpha_{0} = 0\).

We first consider the one-step-ahead prediction. We wish to forecast \(x_{n+1}\) given \(\{x_{1}, \cdots, x_{n}\}\).

Taking \(m=1\) and taking \(\mu = 0\), the BLP of \(x_{n+1}\) is of the form:

\[\begin{aligned} x_{n+1}^{n} &= \phi_{n1}x_{n} + \phi_{n2}x_{n-1} + \cdots + \phi_{nn}x_{1} \end{aligned}\] \[\begin{aligned} \alpha_{k} &= \phi_{n, n+1-k}\\ k &= 1, \cdots, n \end{aligned}\]We would want the coefficients to satisfy:

\[\begin{aligned} E\Big[\Big(x_{n+1} - \sum_{j=1}^{n}\phi_{nj}x_{n+1-j}\Big)x_{n+1-k}\Big] &= 0\\ E[x_{n+1}x_{n+1-k}] - E\Big[\sum_{j=1}^{n}\phi_{nj}x_{n+1-j} x_{n+1-k}\Big] & = 0 \end{aligned}\] \[\begin{aligned} \gamma(k) - \sum_{j= 1}^{n}\phi_{nj}\gamma(k - j) &= 0\\ \sum_{j= 1}^{n}\phi_{nj}\gamma(k - j) &= \gamma(k) \end{aligned}\]We can represent this in matrix form:

\[\begin{aligned} \boldsymbol{\Gamma}_{n}\boldsymbol{\phi}_{n} &= \boldsymbol{\gamma}_{n} \end{aligned}\] \[\begin{aligned} \boldsymbol{\Gamma}_{n} &= \{\gamma(k - j)\}_{j, k = 1}^{n}\\[5pt] \boldsymbol{\phi}_{n} &= \begin{bmatrix} \phi_{n1}\\ \vdots\\ \phi_{nn} \end{bmatrix}\\[5pt] \boldsymbol{\gamma}_{n} &= \begin{bmatrix} \gamma(1)\\ \vdots\\ \gamma(n) \end{bmatrix}\\ \end{aligned}\]The matrix \(\boldsymbol{\Gamma}_{n}\) is nonnegative definite. If the matrix is nonsingular, the elements of \(\boldsymbol{\phi}_{n}\) are unique and:

\[\begin{aligned} \boldsymbol{\phi}_{n} &= \boldsymbol{\Gamma}^{-1}\gamma_{n}\\ x_{n+1}^{n} &= \boldsymbol{\phi}_{n}^{T}\textbf{x} \end{aligned}\]The mean square one-step-ahead prediction error is:

\[\begin{aligned} P_{n+1}^{n} &= E[(x_{n+1} - x_{n+1}^{n})^{2}]\\ &= E[(x_{n+1} - \boldsymbol{\phi}_{n}^{T}x)^{2}]\\ &= E[(x_{n+1} - \boldsymbol{\gamma}_{n}^{T}\boldsymbol{\Gamma}_{n}^{-1}\textbf{x})^{2}]\\ &= E[x_{n+1}^{2} - 2\boldsymbol{\gamma}_{n}'\boldsymbol{\Gamma}_{n}^{-1}\textbf{x} \textbf{x}_{n+1} + \boldsymbol{\gamma}_{n}^{T}\boldsymbol{\Gamma}_{n}^{-1}\textbf{x x}^{T} \boldsymbol{\Gamma}_{n}^{-1}\boldsymbol{\gamma}_{n}]\\ &= \gamma(0) - 2\boldsymbol{\gamma}_{n}^{T}\boldsymbol{\Gamma}_{n}^{-1}\boldsymbol{\gamma}_{n} + \boldsymbol{\gamma}_{n}^{T}\boldsymbol{\Gamma}_{n}^{-1}\boldsymbol{\Gamma}_{n}\boldsymbol{\Gamma}_{n}^{-1}\boldsymbol{\gamma}_{n}\\ &= \gamma(0) - 2\boldsymbol{\gamma}_{n}^{T}\boldsymbol{\Gamma}_{n}^{-1}\boldsymbol{\gamma}_{n} + \boldsymbol{\gamma}_{n}^{T}\boldsymbol{\Gamma}_{n}^{-1}\boldsymbol{\gamma}_{n}\\ &= \gamma(0) - \boldsymbol{\gamma}_{n}'\boldsymbol{\Gamma}_{n}^{-1}\boldsymbol{\gamma}_{n} \end{aligned}\]Example: Prediction of an AR(2) Model

Suppose we have a causal AR(2) process:

\[\begin{aligned} x_{t} &= \phi_{1}x_{t-1} + \phi_{2}x_{t-2} + w_{t} \end{aligned}\]The one-step-ahead prediction based on \(x_{1}\):

\[\begin{aligned} x_{2}^{1} &= \phi_{11}x_{1} \end{aligned}\]Recall:

\[\begin{aligned} \sum_{j=1}^{n}\phi_{nj}\gamma(k - j) &= \gamma(k)\\ \phi_{11}\gamma(1 - 1) &= \gamma(1)\\ \phi_{11} &= \frac{\gamma(1)}{\gamma(0)}\\ &= \rho(1) \end{aligned}\]Hence:

\[\begin{aligned} x_{2}^{1} &= \phi_{11}x_{1}\\ &= \rho(1)x_{1} \end{aligned}\]Now if we want the prediction of \(x_{3}\) based on \(x_{1}, x_{2}\):

\[\begin{aligned} x_{3}^{2} &= \phi_{21}x_{2} + \phi_{22}x_{1} \end{aligned}\]We have the following set of equations:

\[\begin{aligned} \phi_{21}\gamma(0) + \phi_{22}\gamma(1) &= \gamma(1)\\ \phi_{21}\gamma(1) + \phi_{22}\gamma(0) &= \gamma(2) \end{aligned}\] \[\begin{aligned} \begin{bmatrix} \gamma(0) & \gamma(1)\\ \gamma(1) & \gamma(0) \end{bmatrix} \begin{bmatrix} \phi_{21}\\ \phi_{22} \end{bmatrix} &= \begin{bmatrix} \gamma(1)\\ \gamma(2) \end{bmatrix}\\ \end{aligned}\] \[\begin{aligned} \begin{bmatrix} \phi_{21}\\ \phi_{22} \end{bmatrix} &= \begin{bmatrix} \gamma(0) & \gamma(1)\\ \gamma(1) & \gamma(0) \end{bmatrix}^{-1} \begin{bmatrix} \gamma(1)\\ \gamma(2) \end{bmatrix}\\ \end{aligned}\]It is evident that:

\[\begin{aligned} x_{3}^{2} &= \phi_{1}x_{2} + \phi_{2}x_{1} \end{aligned}\]Because we can see that:

\[\begin{aligned} E[(x_{3} - (\phi_{1}x_{2} + \phi_{2}x_{1}))x_{1}] &= E[w_{3}x_{1}] &= 0\\ E[(x_{3} - (\phi_{1}x_{2} + \phi_{2}x_{1}))x_{2}] &= E[w_{3}x_{2}]\\ &= 0 \end{aligned}\]It follows that from the uniqueness of the coefficients:

\[\begin{aligned} \phi_{21} &= \phi_{1}\\ \phi_{22} &= \phi_{2} \end{aligned}\]In fact, for \(n \geq 2\):

\[\begin{aligned} x_{n+1}^{n} &= \phi_{1}x_{n} + \phi_{2}x_{n-1} \end{aligned}\] \[\begin{aligned} \phi_{n1} &= \phi_{1}\\ \phi_{n2} &= \phi_{2}\\ \phi_{nj} &= 0\\ j &= 3, 4, \cdots \end{aligned}\]And further generalizing to AR(p) model:

\[\begin{aligned} x_{n+1}^{n} &= \phi_{1}x_{n} + \phi_{2}x_{n-1} + \cdots + \phi_{p}x_{n-p+1} \end{aligned}\]For ARMA models, the prediction equations will not be as simple as the pure AR case. And also for large \(n\), the inversion of a large matrix is prohibitive.

The Durbin-Levinson Algorithm

\(\boldsymbol{\phi}_{n}\) and the mean square one-step-ahead prediction error \(P_{n+1}^{n}\) can be solved iteratively as follows:

\[\begin{aligned} \phi_{00} &= 0\\ P_{1}^{0} &= \gamma(0) \end{aligned}\]For \(n \geq 1\):

\[\begin{aligned} \phi_{nn} &= \frac{\rho(n) - \sum_{k = 1}^{n-1}\phi_{n-1, k}\rho(n-k)}{1 - \sum_{k=1}^{n-1}\phi_{n-1,k}\rho(k)} \end{aligned}\] \[\begin{aligned} P_{n+1}^{n} &= P_{n}^{n-1}(1 - \phi_{nn}^{2}) \end{aligned}\]For \(n \geq 2\):

\[\begin{aligned} \phi_{nk} &= \phi_{n-1, k} - \phi_{nn}\phi_{n-1, n-k} \end{aligned}\] \[\begin{aligned} k &= 1, 2, \cdots, n-1 \end{aligned}\]Example: Using the Durbin-Levinson Algorithm

Start with:

\[\begin{aligned} \phi_{00} &= \rho(1)\\[5pt] P_{2}^{0} &= \gamma(0) \end{aligned}\]For \(n = 1\):

\[\begin{aligned} \phi_{11} &= \rho(1)\\[5pt] P_{2}^{1} &= \gamma(0)(1 - \phi_{11}^{2}) \end{aligned}\]For \(n = 2\):

\[\begin{aligned} \phi_{22} &= \frac{\rho(2) - \phi_{11}\rho(1)}{1 - \phi_{11}\rho(1)}\\[5pt] \phi_{21} &= \phi_{11}- \phi_{22}\phi_{11} \end{aligned}\] \[\begin{aligned} P_{3}^{2} &= P_{2}^{1}(1 - \phi_{22}^{2})\\ &= \gamma(0)(1 - \phi_{11}^{2})(1 - \phi_{22}^{2}) \end{aligned}\]For \(n = 3\):

\[\begin{aligned} \phi_{33} &= \frac{\rho(3) - \phi_{21}\rho(2) - \phi_{22}\rho(1)}{1 - \phi_{21}\rho(1) - \phi_{22}\rho(2)}\\[5pt] \phi_{32} &= \phi_{22}- \phi_{33}\phi_{21}\\[5pt] \phi_{31} &= \phi_{21} - \phi_{33}\phi_{22} \end{aligned}\] \[\begin{aligned} P_{4}^{3} &= P_{3}^{2}(1 - \phi_{33}^{2})\\ &= \gamma(0)(1 - \phi_{11}^{2})(1 - \phi_{22}^{2})(1 - \phi_{33}^{2}) \end{aligned}\]In general, the standard error is the square root of:

\[\begin{aligned} P_{n+1}^{n} &= \gamma(0)\Pi_{j=1}^{n}(1 - \phi_{jj}^{2}) \end{aligned}\]Example: PACF of an AR(2) Model

For an AR(p) model:

\[\begin{aligned} x_{p+1}^{p} &= \phi_{p1}x_{p} + \phi_{p2}x_{p-1} + \cdots + \phi_{pp}x_{1} \end{aligned}\]The coefficient at lag p, \(\phi_{pp}\), is also the PACF cofficient.

To show this for AR(2), recall that:

\[\begin{aligned} \rho(h) - \phi_{1}\rho(h - 1) - \phi_{2}\rho(h-2) &= 0 \end{aligned}\] \[\begin{aligned} \rho(1) &= \frac{\phi_{1}}{1 - \phi_{2}}\\ \rho(2) &= \phi_{1}\rho(1) + \phi_{2} \end{aligned}\] \[\begin{aligned} \rho(3) - \phi_{1}\rho(2) - \phi_{2}\rho(1) &= 0 \end{aligned}\] \[\begin{aligned} \phi_{11} &= \rho(1)\\ &= \frac{\phi_{1}}{1 - \phi_{2}} \end{aligned}\] \[\begin{aligned} \phi_{22} &= \frac{\rho(2) - \rho(1)^{2}}{1 - \rho(1)^{2}}\\ &= \frac{\Big(\phi_{1}\frac{\phi_{1}}{1 - \phi_{2}} + \phi_{2}\Big) - \Big(\frac{\phi_{1}}{1 - \phi_{2}}\Big)^{2}}{1 - \Big(\frac{\phi_{1}}{1 - \phi_{2}}\Big)^{2}}\\ &= \phi_{2} \end{aligned}\] \[\begin{aligned} \phi_{21} &= \rho(1)(1 - \phi_{2})\\ &= \phi_{1} \end{aligned}\] \[\begin{aligned} \phi_{33} &= \frac{\rho(3) - \phi_{1} - \phi_{2}\rho(1)}{1 - \phi_{1}\rho(1) - \phi_{2}\rho(2)}\\ &= 0 \end{aligned}\]m-Step-Ahead Predictor

Given data \(\{x_{1}, \cdots, x_{n}\}\), the m-step-ahead predictor is:

\[\begin{aligned} x_{n+m}^{n} &= \phi_{n1}^{(m)}x_{n} + \phi_{n2}^{(m)}x_{n - 1} + \cdots + \phi_{nn}^{(m)}x_{1} \end{aligned}\]Satisfying the prediction equations:

\[\begin{aligned} E\Big[\Big(x_{n+m} - \sum_{j=1}^{n}\phi_{nj}^{(m)}x_{n+1-j}\Big)x_{n+1-k}\Big] &= 0\\ E[x_{n+m}x_{n+1-k}] - \sum_{j=1}^{n}\phi_{nj}^{(m)}E[x_{n+1-j}x_{n+1 - k}] &= 0 \end{aligned}\] \[\begin{aligned} \sum_{j=1}^{n}\phi_{nj}^{(m)}E[x_{n+1-j}x_{n+1-k}] &= E[x_{n+m}x_{n+1-k}]\\ \sum_{j=1}^{n}\phi_{nj}^{(m)}\gamma(k - j) &= \gamma(m + k -1)\\ k &= 1, \cdots, n \end{aligned}\]The prediction equations can be written in matrix notation:

\[\begin{aligned} \boldsymbol{\Gamma}_{n}\boldsymbol{\phi}_{n}^{(m)} &= \boldsymbol{\gamma}_{n}^{(m)} \end{aligned}\]Where:

\[\begin{aligned} \boldsymbol{\Gamma}_{n} &= \{\gamma(k - j)\}_{j, k = 1}^{n}\\[5pt] \boldsymbol{\phi}_{n}^{(m)} &= \begin{bmatrix} \phi_{n1}\\ \vdots\\ \phi_{nn} \end{bmatrix}\\[5pt] \boldsymbol{\gamma}_{n}^{(m)} &= \begin{bmatrix} \gamma(m)\\ \vdots\\ \gamma(m+n-1) \end{bmatrix}\\ \end{aligned}\]The mean square m-step-ahead prediction error is:

\[\begin{aligned} P_{n+m}^{n} &= E[(x_{n+m} - x_{n+m}^{n})^{2}]\\ &= \gamma(0) - \boldsymbol{\gamma}_{n}^{(m)T}\Gamma_{n}^{-1}\boldsymbol{\gamma}_{n}^{(m)} \end{aligned}\]Innovations Algorithm

The innovations:

\[\begin{aligned} x_{t} - x_{t}^{t-1}\\ x_{s} - x_{s}^{s-1}\\ \end{aligned}\]Are uncorrelated for \(s\neq t\)

The one-step-ahead predictors and their mean-squared errors can be calculated iteratively as:

\[\begin{aligned} x_{1}^{0} &= 0\\ x_{t+1}^{t} &= \sum_{j=1}^{t}\theta_{tj}(x_{t+1-j} - x_{t+1-j}^{t-j}) \end{aligned}\] \[\begin{aligned} P_{1}^{0} &= \gamma(0)\\ P_{t+1}^{t} &= \gamma(0) - \sum_{j=0}^{t-1}\theta_{t,t-j}^{t-1}\theta_{t, t-j}^{2}P_{j+1}^{j} \end{aligned}\] \[\begin{aligned} \theta_{t, t-j} &= \frac{\gamma(t-j)-\sum_{k=0}^{j-1}\theta_{j, j-k}\theta_{t,t-k}P_{k+1}}{P_{j+1}^{j}}\\ j &= 0, 1, \cdots, t-1 \end{aligned}\]In the general case:

\[\begin{aligned} x_{n+m}^{n}&= \sum_{j=m}^{n+m-1}\theta_{n+m-1, j}(x_{n+m-j}- x_{n+m-j}^{n_m-j-1})\\ P_{n+m}^{n} &= \gamma(0) - \sum_{j = m}^{n+m-1}\theta_{n+m-1, j}^{2}P_{n+m-j}^{n+m-j-1}\\ \theta_{t, t-j} &= \frac{\gamma(t-j)-\sum_{k=0}^{j-1}\theta_{j, j-k}\theta_{t,t-k}P_{k+1}}{P_{j+1}^{j}} \end{aligned}\]Example: Prediction for an MA(1) Model

The innovations algorithm is well-suited for moving average processes. Consider an MA(1) model:

\[\begin{aligned} x_{t} &= w_{t} + \theta w_{t-1} \end{aligned}\]Recall that:

\[\begin{aligned} \gamma(0) &= (1 + \theta^{2})\sigma_{w}^{2}\\[5pt] \gamma(1) &= \theta\sigma_{w}^{2}\\[5pt] \gamma(h) &= 0\\[5pt] h &> 1 \end{aligned}\]Using the innovations algorithm:

\[\begin{aligned} \theta_{n1} &= \frac{\theta \sigma_{w}^{2}}{P_{n}^{n-1}}\\[5pt] \theta_{nj} &= 0 \end{aligned}\] \[\begin{aligned} j &= 2, \cdots, n \end{aligned}\] \[\begin{aligned} P_{1}^{0} &= (1 + \theta^{2})\sigma_{w}^{2}\\ P_{n+1}^{n} &= (1 + \theta^{2} - \theta\theta_{n1})\sigma_{w}^{2} \end{aligned}\]Finally, the one-step-ahead predictor is:

\[\begin{aligned} x_{n+1}^{n} &= \theta_{n+1 - 1, 1}(x_{n} - x_{n}^{n-1})\\ &= \theta_{n, 1}(x_{n} - x_{n}^{n-1})\\ &= \frac{\theta(x_{n}-x_{n}^{n-1})\sigma_{w}^{2}}{P_{n}^{n-1}} \end{aligned}\]Forecasting ARMA Processes

The general prediction equations:

\[\begin{aligned} E[(x_{n+m} - x_{n+m}^{n})x_{k}] &= 0 \end{aligned}\]Provide little insight into forecasting for ARMA models in general.

Throughout this section, we assume \(x_{t}\) is a causal and invertible ARMA(p, q) process:

\[\begin{aligned} \phi(B)x_{t} &= \theta(B)w_{t}\\ w_{t} &\sim N(0, \sigma_{w}^{2}) \end{aligned}\]And the minimum mean square error predictor of \(x_{n+m}\):

\[\begin{aligned} x_{n+m}^{n} &= E[x_{n+m}|x_{n}, \cdots, x_{1}] \end{aligned}\]For ARMA models, it is easier to calculate the predictor of \(x_{n+m}\), assuming we have the complete history (infinite past):

\[\begin{aligned} \tilde{x}_{n+m}^{n} &= E[x_{n+m}|x_{n}, x_{n-1}, \cdots, x_{1}, x_{0}, x_{-1}, \cdots] \end{aligned}\]For large samples, \(\tilde{x}_{n+m}\) is a good approximation to \(x_{n+m}^{n}\).

We can write \(x_{n+m}\) in its causal and invertible forms:

\[\begin{aligned} x_{n+m} &= \sum_{j=0}^{\infty}\psi_{j}w_{n+m-j} \end{aligned}\] \[\begin{aligned} \psi_{0} &= 1 \end{aligned}\] \[\begin{aligned} w_{n+m} &= \sum_{j=0}^{\infty}\pi_{j}x_{n+m-j} \end{aligned}\] \[\begin{aligned} \pi_{0} &= 1 \end{aligned}\]Taking conditional expectations:

\[\begin{aligned} \tilde{x}_{n+m}^{n} &= \sum_{j=0}^{\infty}\psi_{j}\tilde{w}_{n+m-j}\\ &= \sum_{j=m}^{\infty}\psi_{j}\tilde{w}_{n+m-j}\\ \end{aligned}\]This is because by causality (\(t > n\)), and invertibility (\(t\leq n\)):

\[\begin{aligned} \tilde{w}_{t} &= E[w_{t}|x_{n}, x_{n-1}, \cdots, x_{0}, x_{-1}, \cdots]\\ &= \begin{cases} 0 & t > n\\ w_{t} & t \leq n \end{cases} \end{aligned}\]Similarly:

\[\begin{aligned} \tilde{w}_{n+m} &= \sum_{j=0}^{\infty}\pi_{j}\tilde{x}_{n+m-j}\\ &= \tilde{x}_{n+m} + \sum_{j=1}^{\infty}\pi_{j}\tilde{x}_{n+m-j}\\ &= 0\\ \tilde{x}_{n+m} &= -\sum_{j=1}^{\infty}\pi_{j}\tilde{x}_{n+m-j}\\ &= -\sum_{j=1}^{m-1}\pi_{j}\tilde{x}_{n+m-j} - \sum_{j=m}^{\infty}\pi x_{n+m-j} \end{aligned}\]Because for \(t \leq n\):

\[\begin{aligned} \tilde{x}_{t} &= E[x_{t}|x_{n}, x_{n-1}, \cdots, x_{0}, x_{-1}, \cdots] \\ &= x_{t} \end{aligned}\]Prediction is accomplished recursively, starting from \(m = 1\), and then continuing for \(m = 2, 3, \cdots\):

\[\begin{aligned} \tilde{x}_{n+m} &= -\sum_{j=1}^{m-1}\pi_{j}\tilde{x}_{n+m-j} - \sum_{j=m}^{\infty}\pi x_{n+m-j} \end{aligned}\]For the error:

\[\begin{aligned} x_{n+m} - \tilde{x}_{n+m} &= \sum_{j=0}^{\infty}\psi_{j}w_{n+m-j} - \sum_{j=m}^{\infty}\psi_{j}\tilde{w}_{n+m-j}\\ &= \sum_{j=0}^{m-1}\psi_{j}w_{n+m-j} \end{aligned}\]Hence, the mean-square prediction error is:

\[\begin{aligned} P_{n+m}^{n} &= E[x_{n+m} - \tilde{x}_{n+m}^{2}]\\ &= \sigma_{w}^{2}\sum_{j=0}^{m-1}\psi_{j}^{2} \end{aligned}\]For a fixed sample size \(N\), the prediction errors are correlated:

\[\begin{aligned} E[(x_{n+m} - \tilde{x_{n+m}})(x_{n+m+k} - \tilde{x}_{n+m+k})] &= \sigma_{w}^{2}\sum_{j=0}^{m-1}\psi_{j}\psi_{j+k} \end{aligned}\] \[\begin{aligned} k & \geq 1 \end{aligned}\]Example: Long-Range Forecasts

Consider forecasting an ARMA process with mean \(\mu_{x}\)

\[\begin{aligned} x_{n+m} - \mu_{x} &= \sum_{j=0}^{\infty}\psi_{j}w_{n+m-j}\\ x_{n+m} &= \mu_{x} + \sum_{j=0}^{\infty}\psi_{j}w_{n+m-j}\\ \tilde{x}_{n+m} &= \mu_{x} + \sum_{j=m}^{\infty}\psi_{j}w_{n+m-j}\\ \end{aligned}\]As the \(\psi\)-weights dampen to 0 exponentially fast:

\[\begin{aligned} \lim_{m \rightarrow \infty}\tilde{x}_{n+m} \rightarrow \mu_{x} \end{aligned}\] \[\begin{aligned} \lim_{m \rightarrow \infty}P_{n+m}^{n} &= \lim_{m \rightarrow \infty}\sigma_{w}^{2}\sum_{j=0}^{\infty}\psi_{j}^{2}\\ &= \gamma_{x}(0)\\ &= \sigma_{x}^{2} \end{aligned}\]In other words, ARMA forecasts quickly settle to the mean with a constant prediction error as \(m\) grows.

When \(n\) is small, the general prediction equations can be used:

\[\begin{aligned} E[(x_{n+m} - x_{n+m}^{n})x_{k}] &= 0 \end{aligned}\]And when \(n\) is large, we would use the above but by truncating as we do not observe \(x_{0}, x_{-1}, \cdots\):

\[\begin{aligned} \tilde{x}_{n+m}^{n} &= -\sum_{j=1}^{m-1}\pi_{j}\tilde{x}_{n+m-j} - \sum_{j=m}^{\infty}\pi x_{n+m-j}\\ \tilde{x}_{n+m}^{n} &\cong -\sum_{j=1}^{m-1}\pi_{j}\tilde{x}_{n+m-j} - \sum_{j=m}^{n+m-1}\pi x_{n+m-j} \end{aligned}\]For AR(p) models, and when \(n > p\), the following equations yields the exact predictor:

\[\begin{aligned} \tilde{x}_{n+m}^{n} &= x_{n+m}^{n} \end{aligned}\] \[\begin{aligned} E[(x_{n+1} - x_{n+1}^{n})^{2}] &= \sigma_{w}^{2} \end{aligned}\]For ARMA(p, q) models, which includes MA(q) models, the truncated predictors for are:

\[\begin{aligned} \tilde{x}_{n+m}^{n} &= \phi_{1}\tilde{x}_{n+m - 1}^{n} + \cdots + \phi_{p}\tilde{x}_{n+m - p}^{n} + \theta_{1}\tilde{w}_{n+m-1}^{n} + \cdots + \theta_{q}\tilde{w}_{n+m-q}^{n} \end{aligned}\] \[\begin{aligned} 1 &\leq t \leq n\\ \tilde{x}_{t}^{n} &= 0\\ t &\leq 0 \end{aligned}\]The truncated prediction errors are given by:

\[\begin{aligned} \tilde{w}_{t}^{n} &= 0 \end{aligned}\] \[\begin{aligned} 0 \geq t < n\\ \end{aligned}\] \[\begin{aligned} \tilde{w}_{t}^{n} &= \phi(B)\tilde{x}_{t}^{n} - \theta_{1}\tilde{w}_{t-1}^{n} - \cdots - \theta_{q}\tilde{w}_{t-q}^{n} \end{aligned}\] \[\begin{aligned} 1 &\leq t \leq n \end{aligned}\]Example: Forecasting an ARMA(1, 1) Series

Suppose we have the model:

\[\begin{aligned} x_{n+1} &= \phi x_{n} + w_{n+1} + \theta w_{n} \end{aligned}\]The one-step-ahead truncated forecast is:

\[\begin{aligned} \tilde{x}_{n+1}^{n} &= \phi x_{n} + 0 + \theta \tilde{w}_{n}^{n} \end{aligned}\]For \(m \geq 2\):

\[\begin{aligned} \tilde{x}_{n+m}^{n} &= \phi \tilde{x}_{n+m-1}^{n} \end{aligned}\]To calculate \(\tilde{w}_{n}^{n}\), the model can be writen as:

\[\begin{aligned} w_{t} &= x_{t} - \phi x_{t-1} - \theta w_{t-1} \end{aligned}\]For truncated forecasting:

\[\begin{aligned} \tilde{w}_{0}^{n} &= 0\\ x_{0} &= 0 \end{aligned}\] \[\begin{aligned} \tilde{w}_{t}^{n} &= x_{t} - \phi x_{t-1} - \theta \tilde{w}_{t-1}^{n} \end{aligned}\] \[\begin{aligned} t &= 1, \cdots, n \end{aligned}\]The \(\psi\)-weights satisfy:

\[\begin{aligned} \psi_{0} &= 1\\ \psi_{1} &= \theta + \phi\\ \psi_{j} &= \phi\psi_{j-1}\\ \psi_{2} &= \phi(\theta + \phi)\\ &\ \ \vdots\\ \psi_{j} &= (\phi + \theta)\phi^{j-1} \end{aligned}\]And the mean-squared errors:

\[\begin{aligned} P_{n+m}^{n} &= \sigma_{w}^{2}\sum_{j=0}^{m-1}\psi_{j}^{2}\\ &= \sigma_{w}^{2}\Big[1 + (\phi + \theta)^{2}\sum_{j=1}^{m-1}\phi^{2(j-1)}\Big]\\ &= \sigma_{w}^{2}\Big[1 + \frac{(\phi + \theta)^{2}(1 - \phi^{2(m-1)})}{1 - \phi^{2}}\Big] \end{aligned}\]The prediction intervals are of the form:

\[\begin{aligned} x_{n+m}^{n} \pm c_{\frac{\alpha}{2}}\sqrt{P_{n+m}^{n}} \end{aligned}\]Choosing \(c_{\frac{0.5}{2}} = 1.96\) will yield an approximate 95% prediction interval for \(x_{n+m}\).

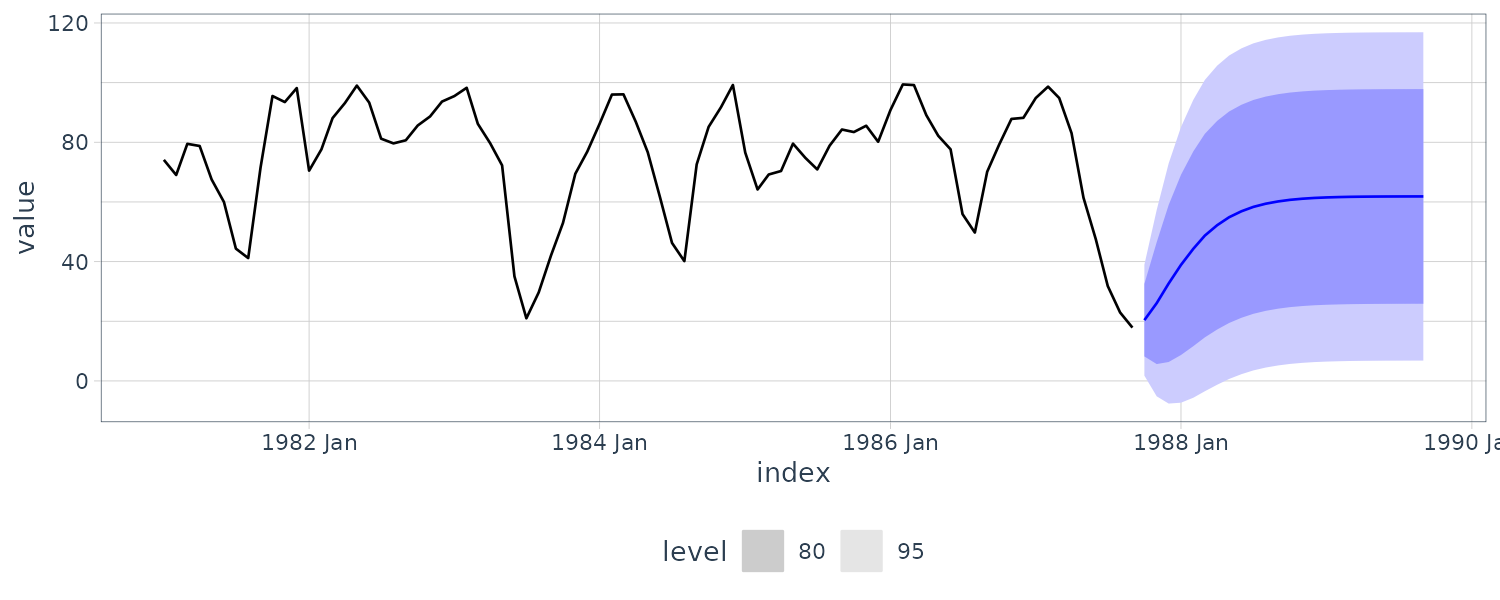

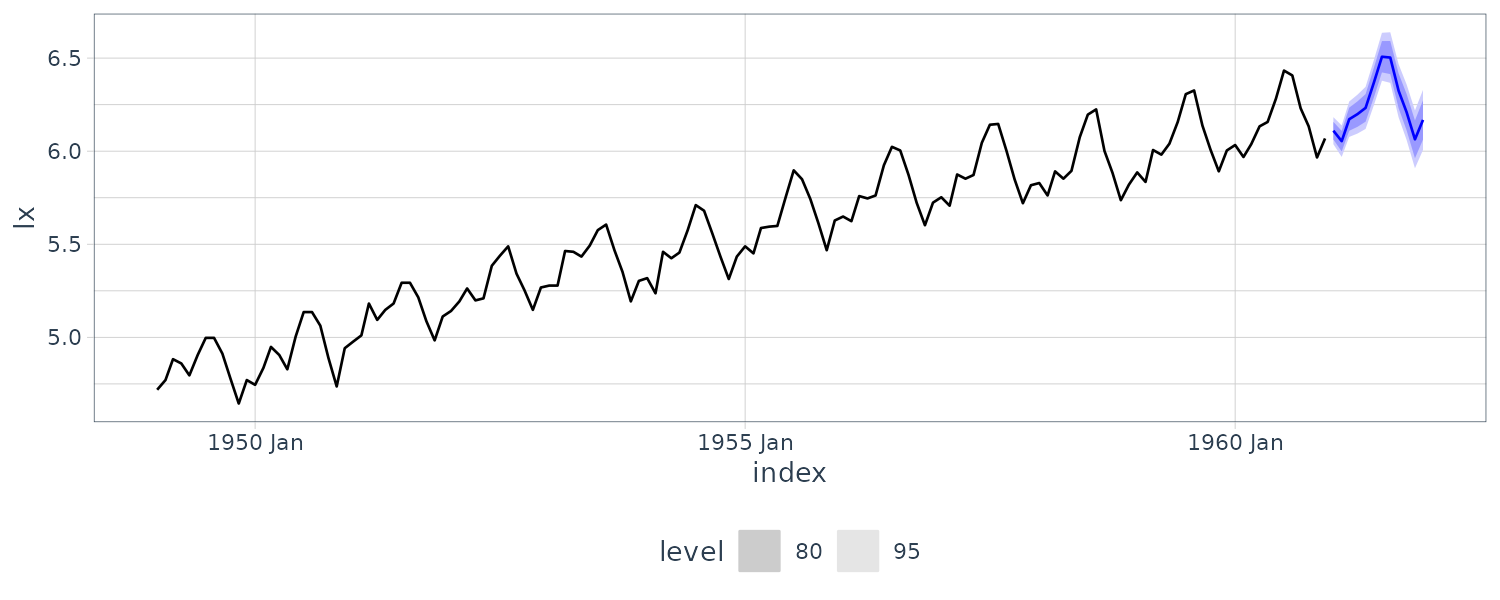

Example: Forecasting the Recruitment Series

Using the parameter estimates as the actual parameter values:

\[\begin{aligned} x_{n+m}^{n} &= 6.74 + 1.35 x_{n+m-1}^{n}- 0.46x_{n+m-2}^{n} \end{aligned}\]We have the following recurrence for the \(\psi\)-weights:

\[\begin{aligned} \psi_{j} &= 1.35\psi_{j-1} - 0.46 \psi_{j-2} \end{aligned}\] \[\begin{aligned} j &\geq 2 \end{aligned}\] \[\begin{aligned} \psi_{0} &= 1\\ \psi_{1} &= 1.35 \end{aligned}\]For \(n = 453\) and \(\hat{\sigma}_{w}^{2} = 89.72\):

\[\begin{aligned} P_{n+1}^{n} &= 89.72\\ P_{n+2}^{n} &= 89.72(\psi_{0}^{2} + \psi_{1}^{2})\\ &= 89.72(1 + 1.35^{2})\\ \psi_{2} &= 1.35 \times \psi_{1} - 0.46 \times \psi_{0}\\ &= 1.35^{2} - 0.46\\ P_{n+3}^{n} &= 89.72(\psi_{0}^{2} + \psi_{1}^{2} + \psi_{2}^{2})\\ &= 89.72(1 + 1.35^{2} + (1.35^{2} - 0.46)^{2}) \vdots \end{aligned}\]fit <- rec |>

model(ARIMA(value ~ pdq(2, 0, 0) + PDQ(0, 0, 0)))

> tidy(fit) |>

select(-.model)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 ar1 1.35 0.0416 32.5 1.98e-120

2 ar2 -0.461 0.0417 -11.1 2.29e- 25

3 constant 6.80 0.440 15.4 1.48e- 43

fit |>

forecast(h = 24) |>

autoplot(rec |> filter(year(index) > 1980)) +

theme_tq()

Backcasting

In stationary models, the BLP backward in time is the same as the BLP forward in time. Assuming the models are Gaussian, we also have the same minimum mean square error prediction.

The backward model:

\[\begin{aligned} x_{t} &= \phi x_{t+1} + \theta v_{t+1} + v_{t} \end{aligned}\]Put \(\tilde{v}_{n}^{n} = 0\) as an initial approximation, and then generate the errors backward:

\[\begin{aligned} \tilde{v}_{t}^{n} &= x_{t} - \phi x_{t+1} - \theta\tilde{v}_{t+1}^{n}\\ t &= n-1, n-2, \cdots, 1 \end{aligned}\]Then:

\[\begin{aligned} \tilde{x}_{0}^{n} &= \phi x_{1} + \theta \tilde{v}_{1}^{n} + \tilde{v}_{n}\\ &= \phi x_{t} + \theta\tilde{v}_{1}^{n} \end{aligned}\]Because:

\[\begin{aligned} \tilde{v}_{t}^{n} &= 0\\ t &\leq 0 \end{aligned}\]The general truncated backcasts:

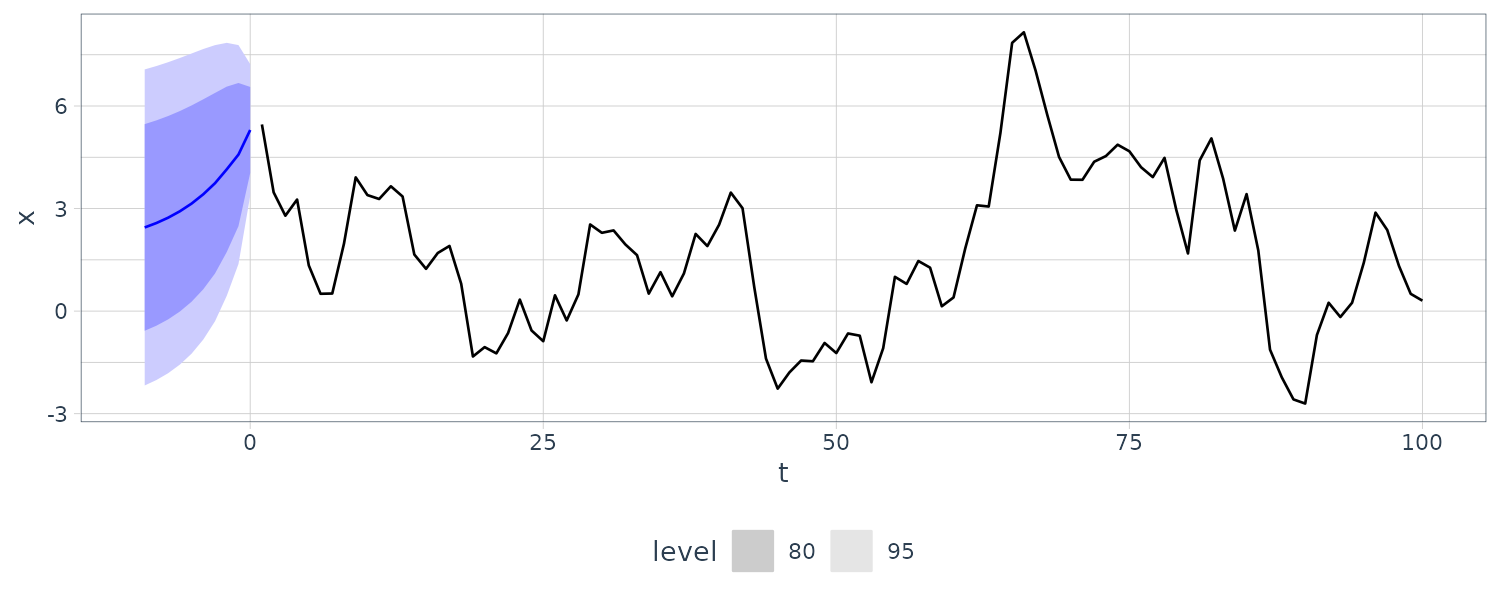

\[\begin{aligned} \tilde{x}_{1-m}^{n} &= \phi\tilde{x}_{2-m}^{n}\\ m &= 2, 3, \cdots \end{aligned}\]To backcast data in R, reverse the data, fit the model and predict. As an example, we backcasted a simulated ARMA(1, 1) process:

set.seed(90210)

dat <- tsibble(

t = 1:100,

x = arima.sim(

list(order = c(1, 0, 1), ar = .9, ma = .5),

n = 100

),

index = t

)

backcasts <- dat |>

mutate(reverse_time = rev(row_number())) |>

update_tsibble(index = reverse_time) |>

model(ARIMA(x ~ pdq(2, 0, 1) + PDQ(0, 0, 0))) |>

forecast(h = 10) |>

mutate(t = dat$t[1] - (1:10)) |>

as_fable(

index = t,

response = "x",

distribution = "x"

)

backcasts |>

autoplot(dat) +

theme_tq()

Estimation

We would assume the ARMA(p, q) process is a causal and invertible Gaussian process and mean is 0. Our goal is to estimate the parameters:

\[\begin{aligned} \phi_{1}, &\cdots, \phi_{p}\\ \theta_{1}, &\cdots, \theta_{q}\\ &\sigma_{w}^{2} \end{aligned}\]Method of Moments Estimators

The idea is to equate population moments to sample moments and then solving for the parameters. They can sometimes lead to suboptimal estimators.

We first consider the AR(p) models which the method of moments lead to optimal estimators.

\[\begin{aligned} x_{t} &= \phi_{1}x_{t-1} + \cdots + \phi_{p}x_{t-p} + w_{t} \end{aligned}\]The Yule-Walker equations are:

\[\begin{aligned} x_{t} &= \phi_{1}x_{t-1} + \cdots + \phi_{p}x_{t-p} + w_{t} \end{aligned}\] \[\begin{aligned} E[x_{t+h}x_{t}] &= \phi_{1}E[x_{t+h}x_{t-1}] + \cdots + \phi_{p}E[x_{t+h}x_{t-p}] + E[x_{t+h}w_{t}] \end{aligned}\] \[\begin{aligned} \gamma(h) &= \phi_{1}\gamma(h-1) + \cdots + \phi_{p}\gamma(h-p) \end{aligned}\] \[\begin{aligned} h = 1, 2, \cdots, p \end{aligned}\] \[\begin{aligned} w_{t} &= x_{t}- \phi_{1}x_{t-1} - \cdots - \phi_{p}x_{t-p} \end{aligned}\] \[\begin{aligned} E[w_{t}x_{t}] &= E[x_{t}x_{t}]- \phi_{1}E[x_{t}x_{t-1}] - \cdots - \phi_{p}E[x_{t}x_{t-p}] \end{aligned}\] \[\begin{aligned} \sigma_{w}^{2} &= \gamma(0) - \phi_{1}\gamma(1) - \cdots - \phi_{p}\gamma(p) \end{aligned}\]And Yule-Walker equations in matrix notation:

\[\begin{aligned} \boldsymbol{\Gamma}_{p}\phi &= \boldsymbol{\gamma}_{p} \end{aligned}\] \[\begin{aligned} \sigma_{w}^{2} &= \gamma(0) - \boldsymbol{\phi}^{T}\boldsymbol{\gamma}_{p} \end{aligned}\] \[\begin{aligned} \boldsymbol{\Gamma}_{p} &= \{\gamma(k-j)\}_{j, k =1}^{p}\\[5pt] \boldsymbol{\phi} &= \begin{bmatrix} \phi_{1}\\ \vdots\\ \phi_{p} \end{bmatrix}\\[5pt] \boldsymbol{\gamma}_{p} &= \begin{bmatrix} \gamma_{1}\\ \vdots\\ \gamma_{p} \end{bmatrix} \end{aligned}\]Using the method of moments, we replace \(\gamma(h)\) by \(\hat{\gamma}(h)\):

\[\begin{aligned} \hat{\boldsymbol{\phi}} &= \hat{\boldsymbol{\Gamma}}_{p}^{-1}\hat{\boldsymbol{\gamma}}_{p}\\[5pt] \hat{\sigma}_{w}^{2} &= \hat{\gamma}(0) - \hat{\gamma}_{p}^{T}\hat{\boldsymbol{\Gamma}}_{p}^{-1}\hat{\gamma}_{p} \end{aligned}\]The above is known as Yule-Walker estimators.

For calculation purposes, it is sometimes more convenient to work with sample ACF rather than autocovariance functions:

\[\begin{aligned} \hat{\boldsymbol{\phi}} &= \hat{\textbf{R}}_{p}^{-1}\hat{\boldsymbol{\rho}}_{p} \end{aligned}\] \[\begin{aligned} \hat{\sigma}_{w}^{2} &= \hat{\boldsymbol{\gamma}}(0)(1 - \hat{\boldsymbol{\rho}}_{p}^{T}\hat{\textbf{R}}_{p}^{-1}\hat{\boldsymbol{\rho}}_{p} \end{aligned}\] \[\begin{aligned} \boldsymbol{R}_{p} &= \{\rho(k-j)\}_{j, k =1}^{p}\\[5pt] \boldsymbol{\rho}_{p} &= \begin{bmatrix} \rho_{1}\\ \vdots\\ \rho_{p} \end{bmatrix} \end{aligned}\]For AR(p) models, if the sample size is large, the Yule–Walker estimators are approximately normally distributed, and \(\hat{\sigma}_{w}^{2}\) is close to the true value of \(\sigma_{w}^{2}\).

Large Sample Results for Yule–Walker Estimators

The asymptotic behavior (\(n \rightarrow \infty\)) of the Yule-Walker estimators for a causal AR(p) process:

\[\begin{aligned} \sqrt{n}(\hat{\boldsymbol{\phi}} - \boldsymbol{\phi}) &\overset{d}{\rightarrow} N(0, \sigma_{w}^{2}\boldsymbol{\Gamma}_{p}^{-1})\\[5pt] \hat{\sigma_{w}}^{2} &\overset{p}{\rightarrow}\sigma_{w}^{2} \end{aligned}\]The Durbin-Levinson algorithm can be used to calculate \(\hat{\phi}\) without inverting by replacing \(\gamma(h)\) by \(\hat{\gamma}(h)\) in the algorithm.

Example: Yule–Walker Estimation for an AR(2) Process

Suppose we have the following AR(2) model:

\[\begin{aligned} x_{t} &= 1.5x_{t-1} - 0.75x_{t-2} + w_{t} \end{aligned}\] \[\begin{aligned} w_{t} &\sim N(0, 1) \end{aligned}\] \[\begin{aligned} \hat{\gamma}(0) &= 8.903\\ \hat{\rho}(1) &= 0.849\\ \hat{\rho}(2) &= 0.519 \end{aligned}\]Thus:

\[\begin{aligned} \hat{\boldsymbol{\phi}} &= \begin{bmatrix} 1 & 0.849\\ 0.849 & 1 \end{bmatrix}^{-1} \begin{bmatrix} 0.849\\ 0.519 \end{bmatrix}\\ &= \begin{bmatrix} 1.463\\ -0.723 \end{bmatrix} \end{aligned}\] \[\begin{aligned} \hat{\sigma}_{w}^{2} &= 8.903\Bigg(1 - \begin{bmatrix} 0.849 & 0.519 \end{bmatrix}\begin{bmatrix} 1.463\\ -0.723 \end{bmatrix}\Bigg)\\ &= 1.187 \end{aligned}\]The asymptotic variance-covariance matrix of \(\hat{\boldsymbol{\phi}}\) is:

\[\begin{aligned} \frac{1}{n} \times \frac{\hat{\sigma}_{w}^{2}}{\hat{\gamma}(0)}\textbf{R}_{p}^{-1} &= \frac{1}{144}\times\frac{1.187}{8.903}\\[5pt] \begin{bmatrix} 1 & 0.849\\ 0.849 & 1 \end{bmatrix}^{-1} &= \begin{bmatrix} 0.058^{2} & -0.003\\ -0.003 & 0.058^{2} \end{bmatrix} \end{aligned}\]The above matrix can be used to get confidence intervals. For example, a 95% confidence interval for \(\phi_{2}\) which contains the true value of -0.75:

\[\begin{aligned} -0.723 \pm 1.96 \times 0.058 &= (-0.838, -0.608) \end{aligned}\]Example: Yule–Walker Estimation of the Recruitment Series

We will fit an AR(2) model using Yule-Walker for the recruitment series in R:

rec <- as_tsibble(rec)

rec <- as.ts(rec)

rec.yw <- ar.yw(rec, order = 2)

> rec.yw

Call:

ar.yw.default(x = rec, order.max = 2)

Coefficients:

1 2

1.3316 -0.4445

Order selected 2 sigma^2 estimated as 94.8

> rec.yw$x.mean

[1] 62.26278

> rec.yw$ar

[1] 1.3315874 -0.4445447

> rec.yw$asy.var.coef

[,1] [,2]

[1,] 0.001783067 -0.001643638

[2,] -0.001643638 0.001783067

> rec.yw$var.pred

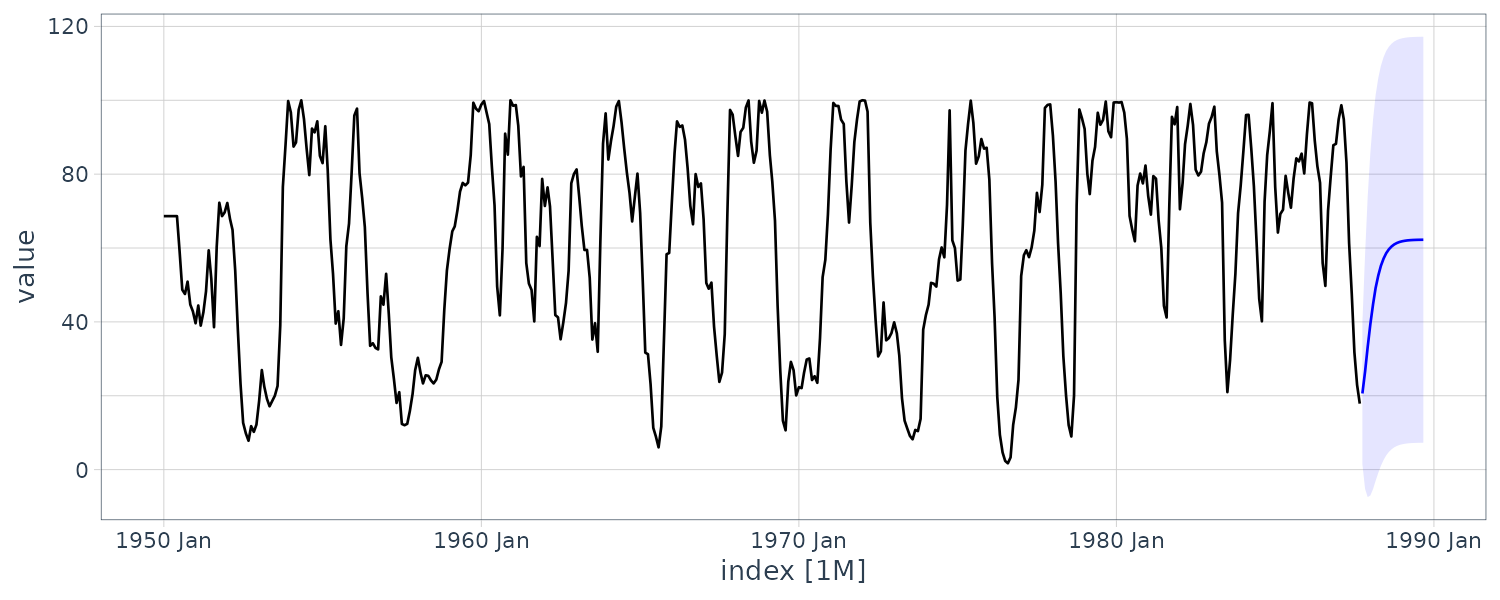

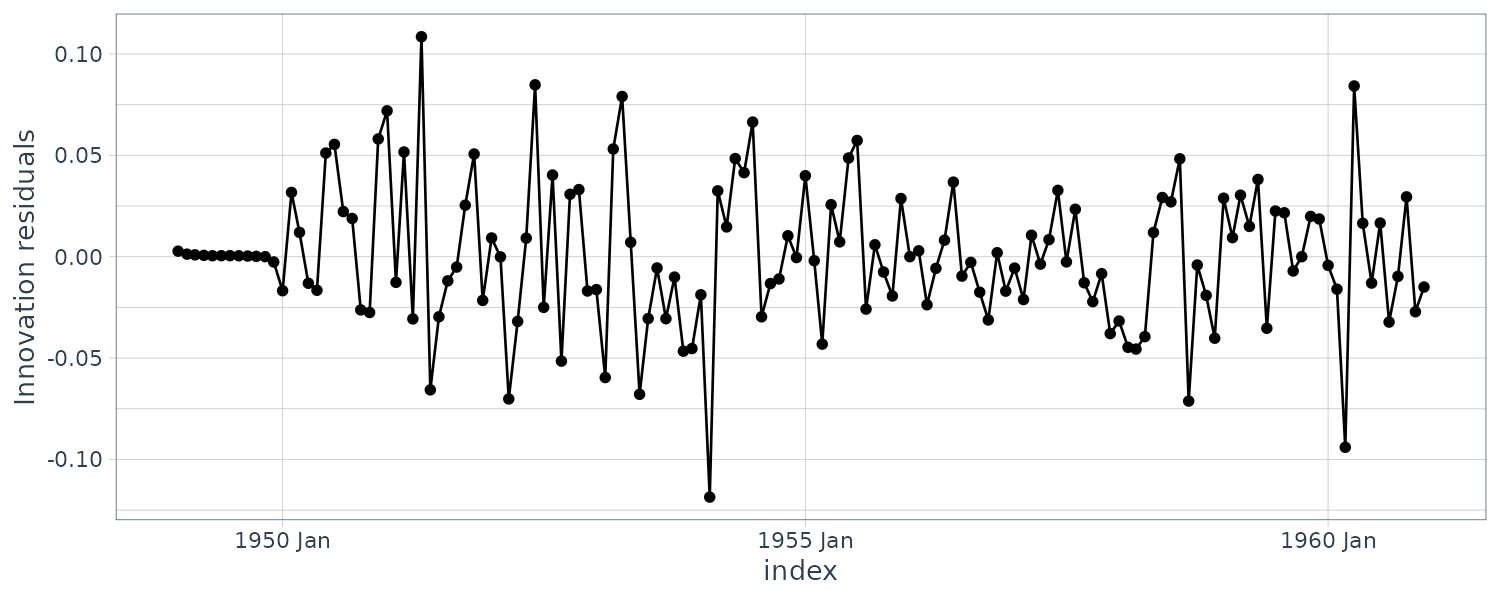

[1] 94.79912Plotting prediction:

rec <- as_tsibble(rec)

rec.pr <- predict(

rec.yw,

rec,

n.ahead = 24

)

dat <- as_tsibble(rec.pr$pred) |>

rename(pred = value) |>

mutate(

lwr = pred - 1.96 * rec.pr$se,

upr = pred + 1.96 * rec.pr$se

)

rec |>

autoplot() +

geom_line(

aes(x = index, y = pred),

col = "blue",

data = dat

) +

geom_ribbon(

aes(ymin = lwr, ymax = upr, y = pred),

alpha = 0.1,

fill = "blue",

data = dat

) +

theme_tq()

AR(p) models are linear models and the Yule-Walker estimators are essentially least squares estimators. If we use method of moments for MA or ARMA models, we will not get optimal estimators because such processes are nonlinear in the parameters.

Example: Method of Moments Estimation for MA(1) Model

Consider the time series:

\[\begin{aligned} x_{t} &= w_{t} + \theta w_{t-1} \end{aligned}\] \[\begin{aligned} |\theta| &< 1 \end{aligned}\]The model can then be rewritten as:

\[\begin{aligned} x_{t} &= \sum_{j=1}^{\infty}(-\theta)^{j}x_{t-j} + w_{t} \end{aligned}\]Which is nonlinear in \(\theta\). The first two population autocovariances:

\[\begin{aligned} \gamma(0) &= \sigma_{w}^{2}(1 + \theta^{2})\\[5pt] \gamma(1) &= \sigma_{w}^{2}\theta \end{aligned}\]Using the Durbin-Levinson algorithm:

\[\begin{aligned} \phi_{11} &= \rho(1)\\ \hat{\rho}(1) &= \frac{\hat{\gamma}(1)}{\hat{\gamma(0)}}\\ &= \frac{\hat{\theta}}{1 + \hat{\theta}^{2}} \end{aligned}\] \[\begin{aligned} (1 + \hat{\theta}^{2})\hat{\rho}(1) &= \hat{\theta}\\ \hat{\theta}^{2}\hat{\rho}(1) + \hat{\rho}(1) - \hat{\theta} &= 0 \end{aligned}\]If \(\mid\hat{\rho}(1)\mid \leq \frac{1}{2}\), the solutions are real and otherwise a real solution does not exists. In the real solution case, the invertible estimate is:

\[\begin{aligned} \hat{\theta} &= \frac{1 - \sqrt{1 - 4\hat{\rho}(1)^{2}}}{2\hat{\rho}(1)} \end{aligned}\]Maximum Likelihood and Least Squares Estimation

Let’s start with the causal AR(1) model:

\[\begin{aligned} x_{t} &= \mu + \phi(x_{t}- \mu)+ w_{t} \end{aligned}\] \[\begin{aligned} |\phi| &< 1\\ \end{aligned}\] \[\begin{aligned} w_{t} &\sim N(0, \sigma_{w}^{2}) \end{aligned}\]The likelihood of the data:

\[\begin{aligned} L(\mu, \phi, \sigma_{w}^{2}) &= f(x_{1}, \cdots, x_{n}|\mu, \phi, \sigma_{w}^{2}) \end{aligned}\]In the case of an AR(1) model, we may write the likelihood as (chain rule):

\[\begin{aligned} L(\mu, \phi, \sigma_{w}^{2}) &= f(x_{1})f(x_{2}|x_{1})\cdots f(x_{n}|x_{n-1}) \end{aligned}\]Because of AR(1) model, we know that:

\[\begin{aligned} x_{t}|x_{t-1} &\sim N(\mu + \phi(x_{t-1} - \mu), \sigma_{w}^{2}) \end{aligned}\]So we can say:

\[\begin{aligned} f(x_{t}|x_{t-1}) &= f_{w}[(x_{t} - \mu)- \phi(x_{t-1} - \mu)] \end{aligned}\]We may then rewrite the likehood as:

\[\begin{aligned} L(\mu, \phi, \sigma_{w}) &= f(x_{1})\Pi_{t=2}^{n}f_{w}[(x_{t} - \mu)- \phi(x_{t-1} - \mu)]\\ \end{aligned}\]We can show that:

\[\begin{aligned} L(\mu, \phi, \sigma_{w}^{2}) &= \frac{(1 - \phi^{2})^{\frac{1}{2}}}{(2\pi\sigma_{w}^{2})^{\frac{n}{2}}}e^{-\frac{S(\mu, \phi)}{2\sigma_{w}^{2}}}\\ S(\mu, \phi) &= (1 - \phi^{2})(x_{1} - \mu)^{2} + \sum_{t=2}^{n}[(x_{t} - \mu) - \phi(x_{t-1}- \mu)]^{2} \end{aligned}\]\(S(\mu, \phi)\) is called the unconditional sum of squares.

Taking the partial derivative of the log of the likelihood function with respect to \(\sigma_{w}^{2}\), we get the maximum likelihood estimate of \(\sigma_{w}^{2}\):

\[\begin{aligned} \hat{\sigma}_{w}^{2} &= \frac{S(\hat{\mu}, \hat{\phi})}{n} \end{aligned}\]Where \(\hat{\mu}, \hat{\phi}\) are MLE as well.

Taking the log of the likelihood:

\[\begin{aligned} l(\mu, \phi) &= \mathrm{log}\Big(\frac{S(\mu, \phi)}{n}\Big) - \frac{\mathrm{log}(1 - \phi^{2})}{n}\\[5pt] &\propto -2 \mathrm{log}L(\mu, \phi, \hat{\sigma}_{w}^{2})\\[5pt] \hat{\sigma}_{w}^{2} &= \frac{S(\hat{\mu}, \hat{\phi})}{n} \end{aligned}\]Because the log-likelihood or the likelihood are complicated functions of the parameters, the minimization is done numerically. In the case of AR models, conditional on initial values, they are linear models:

\[\begin{aligned} L(\mu, \phi, \sigma_{w}^{2}|x_{1}) &= \Pi_{t=2}^{n}f_{w}[(x_{t} - \mu) - \phi(x_{t-1} - \mu)]\\ &= \frac{1}{(2\pi \sigma_{w}^{2})^{\frac{n-1}{2}}}e^{\frac{S_{c}(\mu, \phi)}{2\sigma_{w}^{2}}} \end{aligned}\]The conditional MLE of \(\sigma_{w}^{2}\) is:

\[\begin{aligned} \hat{\sigma}_{w}^{2} &= \frac{S_{c}(\hat{\mu}, \hat{\phi})}{n - 1} \end{aligned}\]The conditional sum of squares can be written as:

\[\begin{aligned} S_{c}(\mu, \phi) &= \sum_{t=2}^{n}[x_{t} - (\alpha + \phi x_{t-1})]^{2}\\ \alpha &= \mu(1 - \phi) \end{aligned}\]The above equation is now a linear regression problem. The results from least squares estimation:

The MLE estimates are:

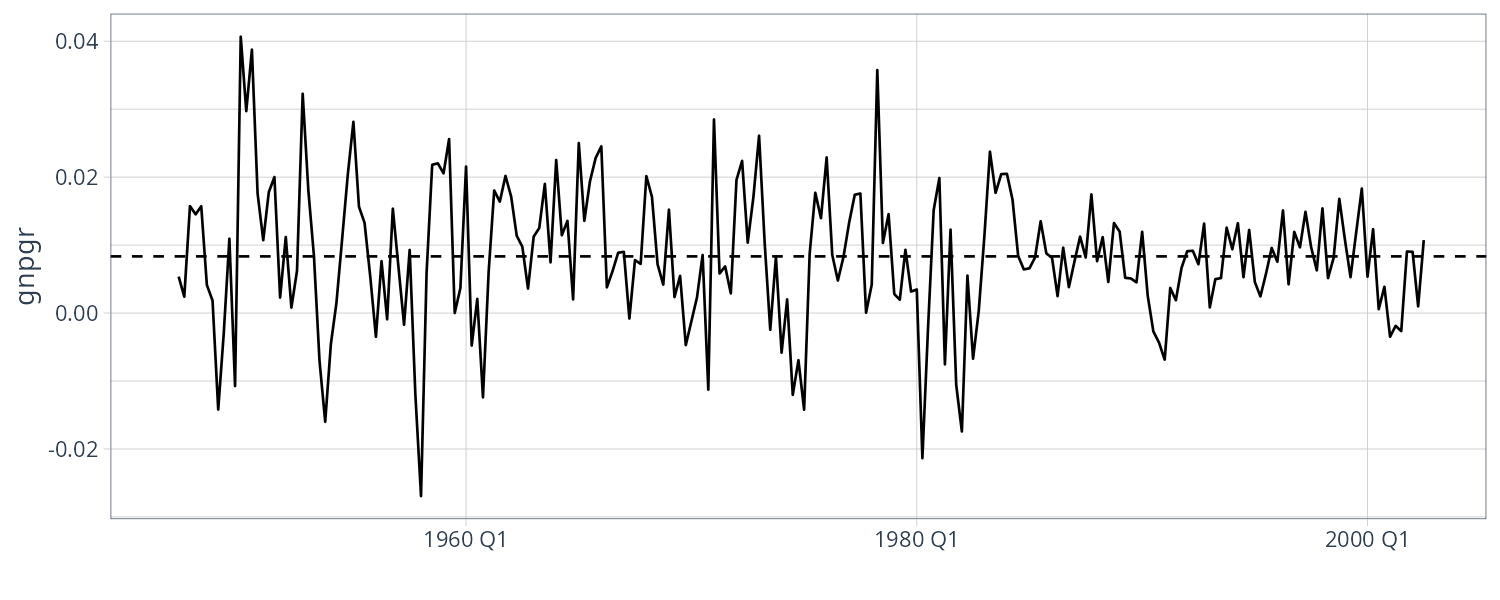

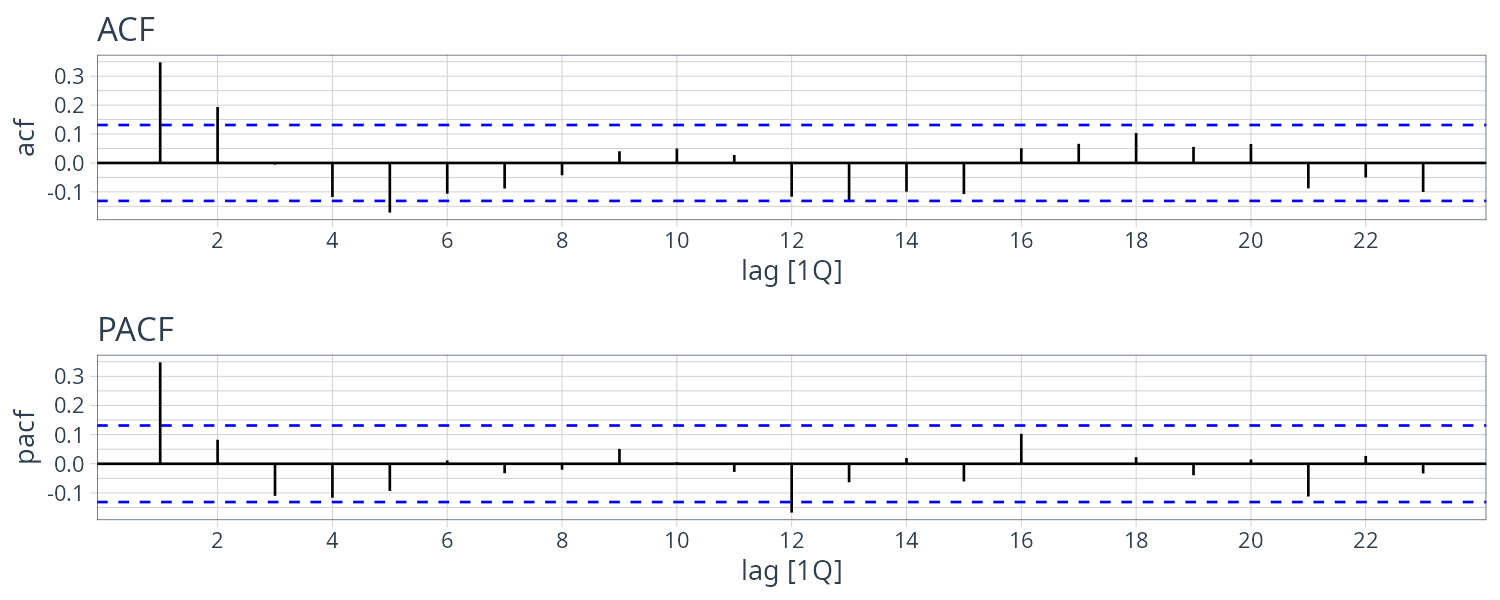

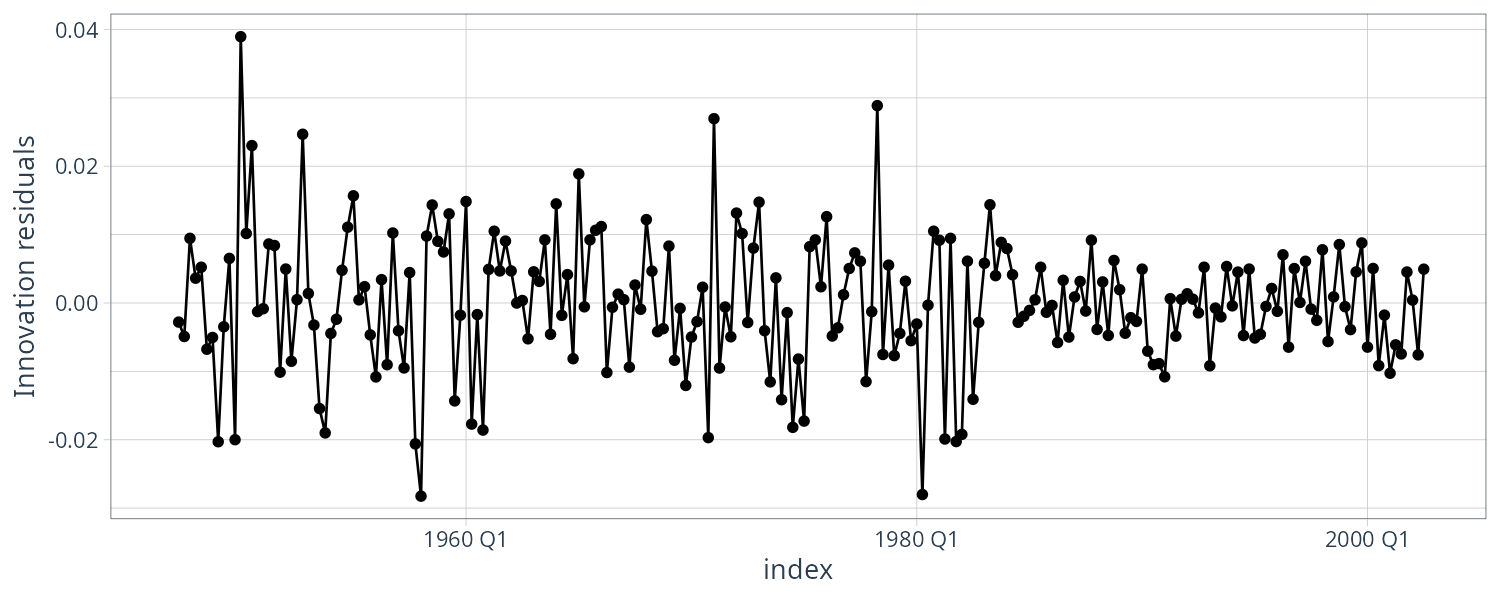

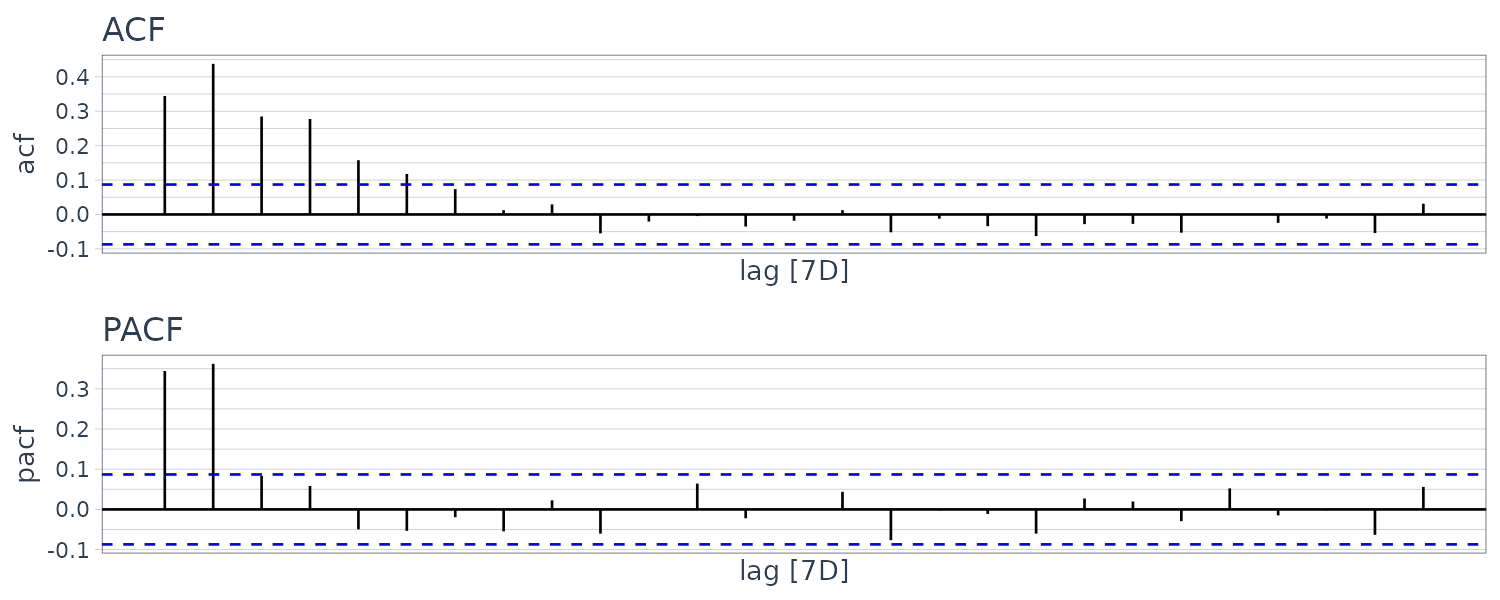

\[\begin{aligned} \hat{\alpha} &= \bar{x}_{(2)} - \hat{\phi}\bar{x}_{(1)}\\ \hat{\mu} &= \frac{\bar{x}_{(2)} - \hat{\phi}\bar{x}_{(1)}}{1 - \hat{\phi}}\\ \end{aligned}\] \[\begin{aligned} \hat{\phi}&= \frac{\sum_{t=2}^{n}(x_{t} - \bar{x}_{(2)})(x_{t-1} - \bar{x}_{(1)})}{\sum_{t=2}^{n}(x_{t-1} - \bar{x}_{(1)})^{2}} \end{aligned}\]Note that the approximation is the Yule-Walker estimators: