Econometrics (Time-Series Analysis)

Read PostIn this post, we will cover Carter R., Griffiths W., Lim G. (2018) Principles of Econometrics, focusing on time series analysis.

Contents:

- Introduction

- Modeling Dynamic Relationships

- Autocorrelation

- Stationarity

- Forecasting

- Granger Causality

- Testing for Serially Correlated Errors

- Time-Series Regressions for Policy Analysis

- Nonstationarity

- Unit Root Tests

- Cointegration

- Regression With No Cointegration

- Vector Autoregressive Models

- ARCH Models

- GARCH Models

- See Also

- References

Introduction

It is important to distinguish cross-sectional data and time-series data. Cross-sectional observations are typically collected by way of a random sample, and so they are uncorrelated. However, time-series observations, observed over a number of time periods, are likely to be correlated.

A second distinguishing feature of time-series data is a natural ordering to time. There is no such ordering in cross-sectional data and one could shuffle the observations. Time-series data often exhibit a dynamic relationship, in which the change in a variable now has an impact on the same or other variables in future time periods.

For example, it is common for a change in the level of an explanatory variable to have behavioral implications for other variables beyond the time period in which it occurred. The consequences of economic decisions that result in changes in economic variables can last a long time. When the income tax rate is increased, consumers have less disposable income, reducing their expenditures on goods and services, which reduces profits of suppliers, which reduces the demand for productive inputs, which reduces the profits of the input suppliers, and so on. The effect of the tax increase ripples through the economy. These effects do not occur instantaneously but are spread, or distributed, over future time periods.

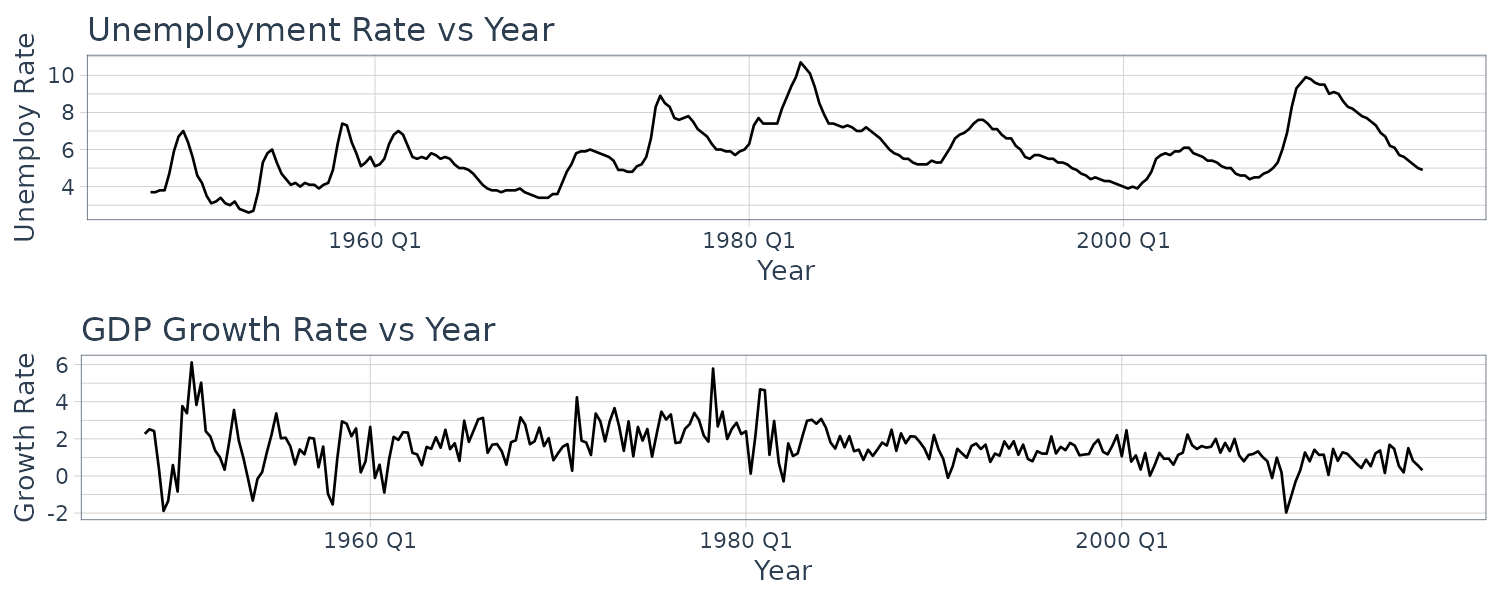

Example: Plotting the Unemployment Rate and the GDP Growth Rate for the United States

Using the dataset usmacro which contain the US quarterly unemployment rate and the US quarterly growth rate for GDP. We wish to understand how series such as these evolve over time, how current values of each data series are correlated with their past values, and how one series might be related to current and past values of another.

Plotting the unemployment rate and gdp growth rate:

usmacro$date <- as.Date(

usmacro$dateid01,

format = "%m/%d/%Y") |>

yearquarter()

usmacro <- as_tsibble(

usmacro,

index = date

)

plot_grid(

usmacro |>

autoplot(u) +

ylab("Unemployment Rate") +

xlab("Year") +

theme_tq(),

usmacro |>

autoplot(g) +

ylab("Growth Rate") +

xlab("Year") +

theme_tq(),

ncol = 1

)

Modeling Dynamic Relationships

Time-series data are usually dynamic. What this mean is their current values are correlated to their past values and current and past values of other variables. This particular dynamic relation can be modelled using lagged values such as:

\[\begin{aligned} y_{t-1}, y_{t-2}, \cdots, y_{t-q}\\ x_{t-1}, x_{t-2}, \cdots, x_{t-p}\\ e_{t-1}, e_{t-2}, \cdots, e_{t-s}\\ \end{aligned}\]In this section, we will describe a number of the time-series models that arise from introducing lags of these kinds and explore the relationships between them.

Finite Distributed Lag Models (FDL)

Suppose that \(y\) depends on current and lag value of \(x\):

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \beta_{1}x_{t - 1} + \cdots + + \beta_{q}x_{t-q} + e_{t} \end{aligned}\]It is called finite distributed lag because \(x\) cuts off after q lags and the impact of change in \(x\) is distributed over future time periods.

Model of this kind can be used for forecasting or policy analysis.

For example in forecasting, we might be interested in using information on past interest rates to forecast future inflation. And in policy analysis, a central bank might be interested in how inflation will react now and in the future to a change in the current interest rate.

Autoregressive Models (AR)

An autoregressive model is where \(y\) depends on past values of itself. An AR(p) model:

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \cdots + \theta_{p}y_{t-p} + e_{t} \end{aligned}\]For example, an AR(2) model for the unemployment series would be:

\[\begin{aligned} U_{t} &= \delta + \theta_{1}U_{t-1} + \theta_{2}U_{t-2} + e_{t} \end{aligned}\]AR models can be used to describe time paths of variables and they are generally use for forecasting.

Autoregressive Distributed Lag Models (ARDL)

A more general case which includes autoregressive model and FDL model is the ARDL(p, q) model:

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \cdots + \theta_{p}y_{t-p} + \beta_{0}x_{t} + \cdots \beta_{q}x_{t-q} + e_{t} \end{aligned}\]For example, an ARDL(2, 1) model relating the uemployment rate \(U\) to the economy \(G\):

\[\begin{aligned} U_{t} &= \delta + \theta_{1}U_{t-1} + \theta_{2}U_{t-2} + \beta_{0}G_{t} + \beta_{1}G_{t-1} + e_{t} \end{aligned}\]ARDL models can be used for both forecasting and policy analysis.

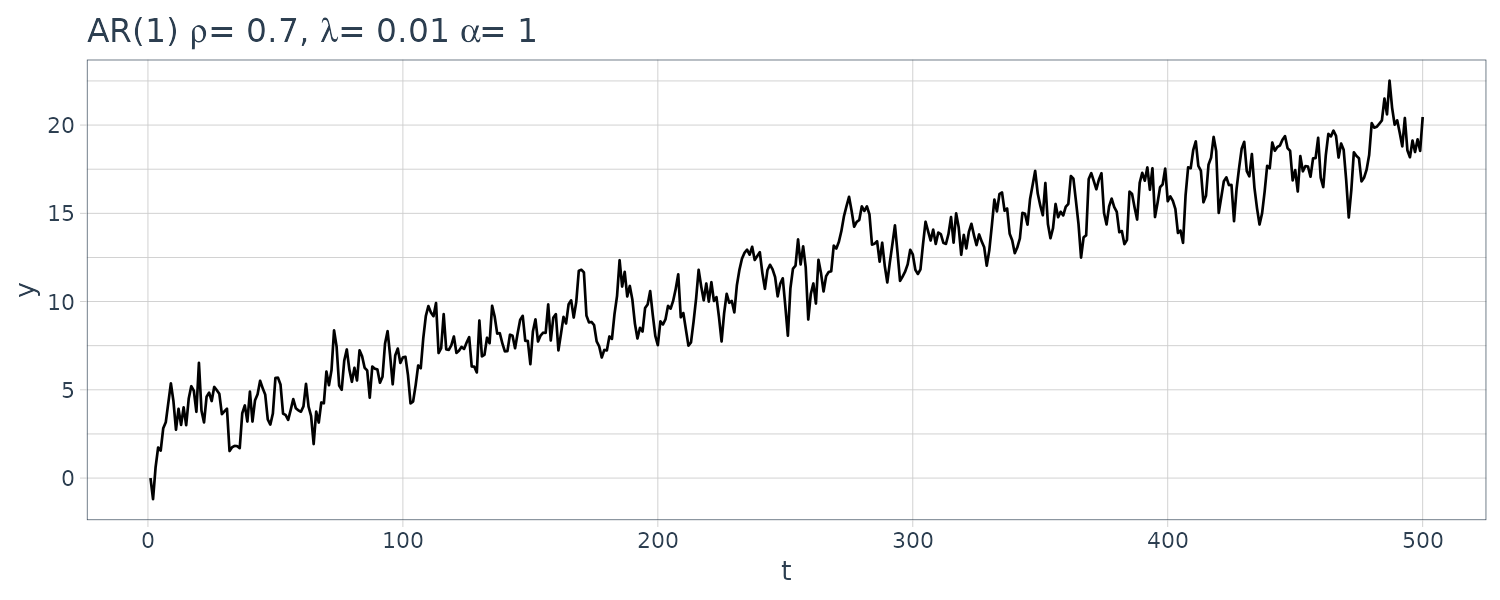

Infinite Distributed Lag Models (IDL)

An IDL Model is the same as FDL Model except it goes to infinity.

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \beta_{1}x_{t-1} + \cdots \beta_{q}x_{t-q} + \cdots + e_{t} \end{aligned}\]In order to make the model realistic, \(\beta_{s}\) has to eventually decline to 0.

There are many possible lag pattern, but a popular one is the geometrically declining lag pattern:

\[\begin{aligned} \beta_{s} &= \lambda^{s}\beta_{0} \end{aligned}\] \[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \lambda\beta_{0}x_{t-1} + \lambda^{2}\beta_{0}x_{t-2} + \cdots + e_{t} \end{aligned}\] \[\begin{aligned} 0 < \lambda < 1 \end{aligned}\]It is possible to change an geometrically decaying IDL into an ARDL(1, 0):

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \lambda\beta_{0}x_{t-1} + \lambda^{2}\beta_{0}x_{t-2} + \cdots + e_{t}\\[5pt] y_{t-1} &= \alpha + \beta_{0}x_{t-1} + \lambda\beta_{0}x_{t-2} + \lambda^{2}\beta_{0}x_{t-3} + \cdots + e_{t}\\[5pt] \lambda y_{t-1} &= \lambda\alpha + \lambda\beta_{0}x_{t-1} + \lambda^{2}\beta_{0}x_{t-2} + \lambda^{3}\beta_{0}x_{t-3} + \cdots + \lambda e_{t} \end{aligned}\] \[\begin{aligned} y_{t} - \lambda y_{t-1} &= \alpha(1 - \lambda) + \beta_{0}x_{t} + e_{t} - \lambda e_{t-1} \end{aligned}\] \[\begin{aligned} y_{t} &= \alpha(1 - \lambda) + \lambda y_{t-1} + \beta_{0}x_{t} + e_{t} - \lambda e_{t-1}\\ y_{t} &= \delta + \theta y_{t-1} + \beta_{0}x_{t} + v_{t} \end{aligned}\] \[\begin{aligned} \delta &= \alpha(1 - \lambda)\\ \theta &= \lambda\\ v_{t} &= e_{t} - \lambda e_{t-1} \end{aligned}\]It is also possible to turn an ARDL model into an IDL model. Generally, ARDL(p, q) models can be transformed into IDL models as long as the lagged coefficients eventually decline to 0.

The ARDL formulation is useful for forecasting while the IDL provides useful information for policy analysis.

Autoregressive Error Models

Another way in which lags can enter a model is through the error term:

\[\begin{aligned} e_{t} &= \rho e_{t-1} + v \end{aligned}\]In contrast to AR model, there is no intercept parameter as \(e_{t}\) has a zero mean.

Let’s include this error term in a model:

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + e_{t}\\ &= \alpha + \beta_{0}x_{t} + \rho e_{t-1} + v_{t}\\ \end{aligned}\]\(e_{t-1}\) can be written as:

\[\begin{aligned} y_{t-1} &= \alpha + \beta_{0}x_{t-1} + e_{t-1}\\ e_{t-1} &= y_{t-1} - \alpha - \beta_{0}x_{t-1}\\ \rho e_{t-1} &= \rho y_{t-1} - \rho \alpha - \rho \beta_{0}x_{t-1} \end{aligned}\]We can then turn a AR(1) error model into an ARDL(1, 1) model:

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \rho e_{t-1} + v\\ &= \alpha + \beta_{0}x_{t} + (\rho y_{t-1} - \rho \alpha - \rho \beta_{0}x_{t-1}) + v\\ &= \alpha(1 - \rho) + \rho y_{t-1} + \beta_{0}x_{t} - \rho \beta_{0}x_{t-1} + v_{t}\\ &= \delta + \theta y_{t-1} + \beta_{0}x_{t} + \beta_{1}x_{t-1} + v_{t} \end{aligned}\] \[\begin{aligned} y_{t} &= \delta + \theta y_{t-1} + \beta_{0}x_{t} + \beta_{1}x_{t-1} + v_{t} \end{aligned}\] \[\begin{aligned} \delta &= \alpha(1 - \rho)\\ \theta &= \rho \end{aligned}\]Note that we have a constraint here which is not in a general ARDL model and nonlinear:

\[\begin{aligned} \beta_{1} &= -\rho\beta_{0} \end{aligned}\]Autoregressive error models with more lags than one can also be transformed to special cases of ARDL models.

Summary of Dynamic Models for Stationary Time Series Data

ARDL(p, q) Model

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \cdots + \theta_{p}y_{t-p} + \beta_{0}x_{t} + \cdots \beta_{q}x_{t-q} + e_{t} \end{aligned}\]FDL Model

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \beta_{1}x_{t - 1} + \cdots + \beta_{q}x_{t-q} + e_{t} \end{aligned}\]IDL Model

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \beta_{1}x_{t-1} + \cdots + e_{t} \end{aligned}\]AR(p) Model

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \cdots + \theta_{p}y_{t-p} + e_{t} \end{aligned}\]IDL model with Geometrically Declining Lag Weights

\[\begin{aligned} \beta_{S} &= \lambda^{S}\beta_{0} \end{aligned}\] \[\begin{aligned} 0 < \lambda < 1 \end{aligned}\] \[\begin{aligned} y_{t} &= \alpha(1 - \lambda) + \lambda y_{t-1} + \beta_{0}x_{t} + e_{t} - \lambda e_{t-1} \end{aligned}\]Simple Regression with AR(1) Error

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + e_{t}\\ e_{t} &= \rho e_{t-1} + v_{t} \end{aligned}\] \[\begin{aligned} y_{t} &= \alpha(1 - \rho) + \rho y_{t-1} + \beta_{0}x_{t} - \rho \beta_{0}x_{t-1} + v_{t} \end{aligned}\]How we interpret each model depends on whether the model is used for forecasting or policy analysis. On assumption we make for all models is that the variables in the models are stationary and weakly dependent.

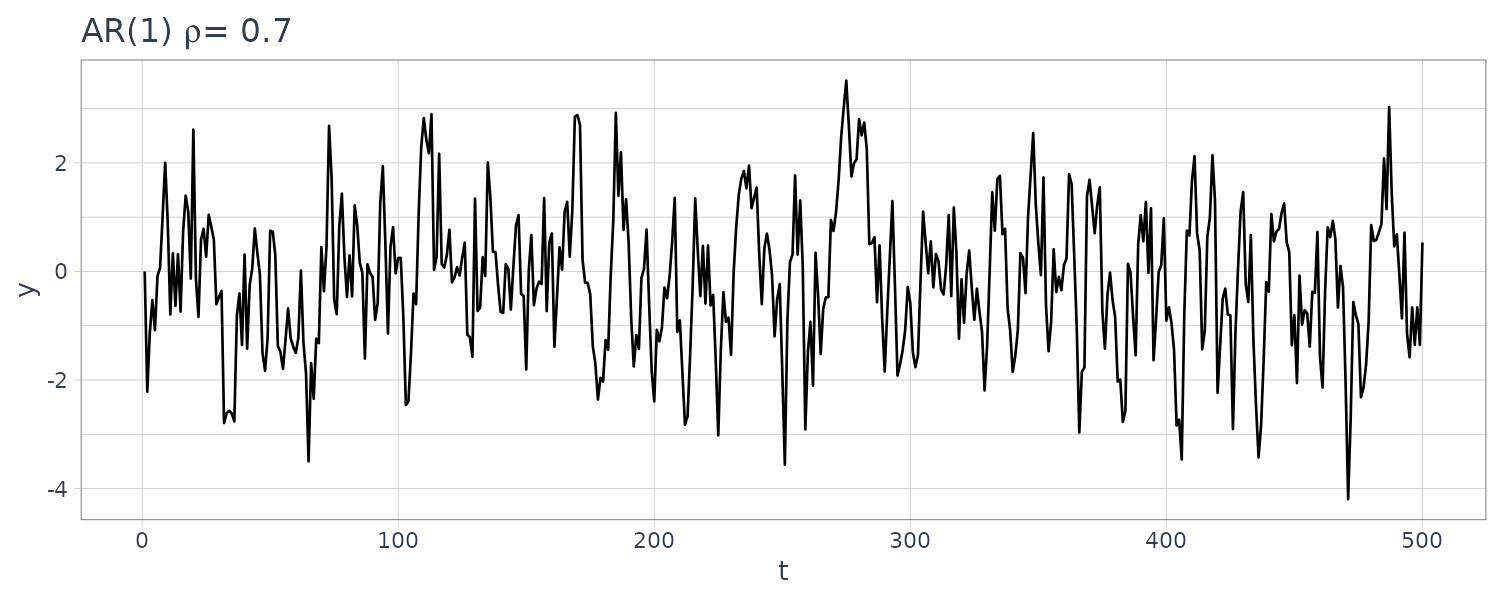

Autocorrelation

Consider a time series of observations, \(x_{1}, \cdots, x_{T}\) with:

\[\begin{aligned} E[x_{t}] &= \mu_{X}\\ \text{var}(x_{t}) &= \sigma_{X}^{2} \end{aligned}\]We assume the mean and variance do not change over time. The population autocorrleation that are s periods apart:

\[\begin{aligned} \rho_{s} &= \frac{\mathrm{Cov}(x_{t}, x_{t-s})}{\mathrm{Var(x_{t})}} \end{aligned}\] \[\begin{aligned} x &= 1, 2, \cdots \end{aligned}\]Sample Correlation

Population autocorrelations theoretically require a conceptual time series that goes on forever, from the infinite past and continuing to the infinite future. Sample autocorrelations are a sample of observations for a finite time period to estimate the population autocorrelations.

To estimate \(\rho_{1}\):

\[\begin{aligned} \hat{\text{cov}}(x_{t}, x_{t-1}) &= \frac{1}{T}\sum_{t=2}^{T}(x_{t} - \bar{x})(x_{t-1} - \bar{x})\\ \hat{\text{var}}(x_{t}) &= \frac{1}{T-1}\sum_{t=1}^{T}(x_{t} - \bar{x})^{2} \end{aligned}\] \[\begin{aligned} r_{1} &= \frac{\sum_{t=2}^{T}(x_{t}- \bar{x})(x_{t-1} - \bar{x})}{\sum_{t=1}^{T}(x_{t}-\bar{x})^{2}} \end{aligned}\]More generally:

\[\begin{aligned} r_{s} &= \frac{\sum_{t=s+1}^{T}(x_{t} - \bar{x})(x_{t-s} - \bar{x})}{\sum_{t=1}^{T}(x_{t} - \bar{x})^{2}} \end{aligned}\]Because only \((T-s)\) observations are used to compute the numerator and \(T\) observations are used to compute the denominator, an alternative calculation that leads to a larger estimate is:

\[\begin{aligned} r'_{s} &= \frac{\frac{1}{T-s}\sum_{t=s+1}^{T}(x_{t} - \bar{x})(x_{t-s} - \bar{x})}{\frac{1}{T}\sum_{t=1}^{T}(x_{t} - \bar{x})^{2}} \end{aligned}\]Test of Significance

Assuming the null hypothesis as \(H_{0}: \rho_{s} = 0\) is true, \(r_{s}\) has an approximate normal distribution:

\[\begin{aligned} r_{s} \sim N\Big(0, \frac{1}{T}\Big) \end{aligned}\]Standardizing \(\hat{\rho}_{s}\):

\[\begin{aligned} Z &= \frac{r_{s} - 0}{\sqrt{\frac{1}{T}}}\\[5pt] &= \sqrt{T}r_{s}\\[5pt] Z &\sim N(0, 1) \end{aligned}\]Correlogram

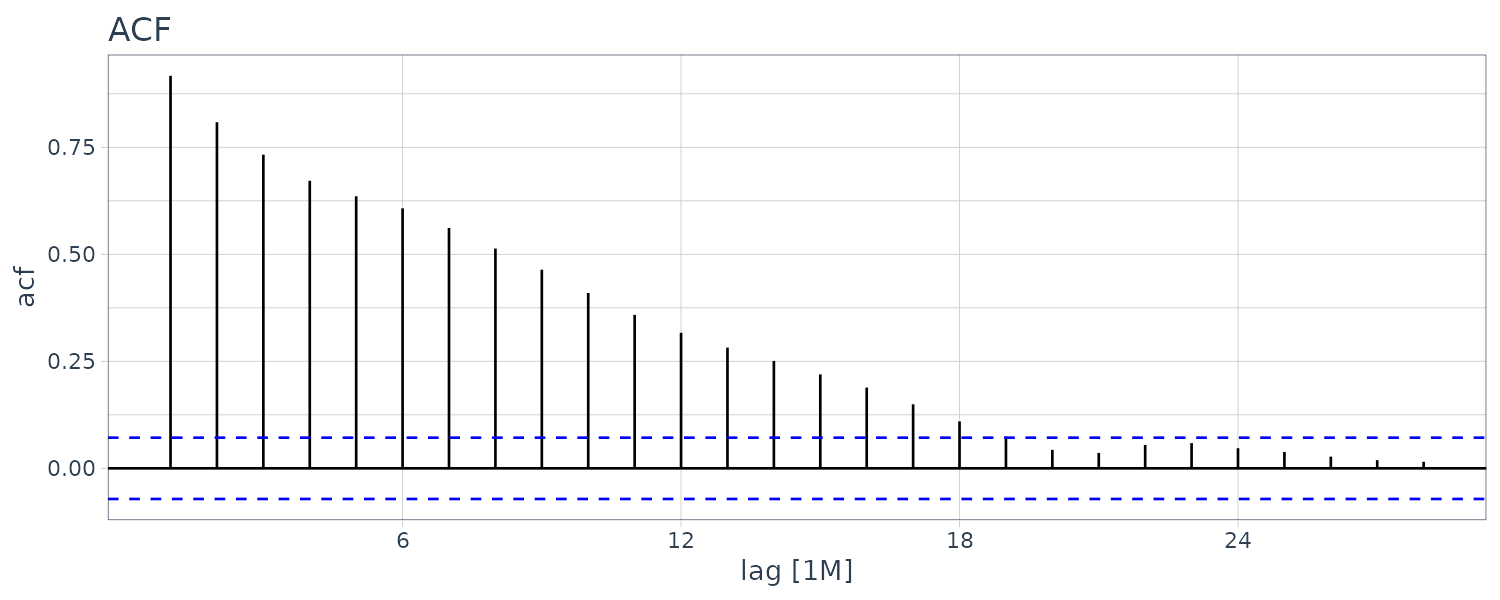

Also called the sample ACF, the correlogram is the sequence of autocorrelations \(r_{1}, r_{2}, \cdots\).

We say that \(r_{s}\) is significantly different from 0 at a 5% significance if:

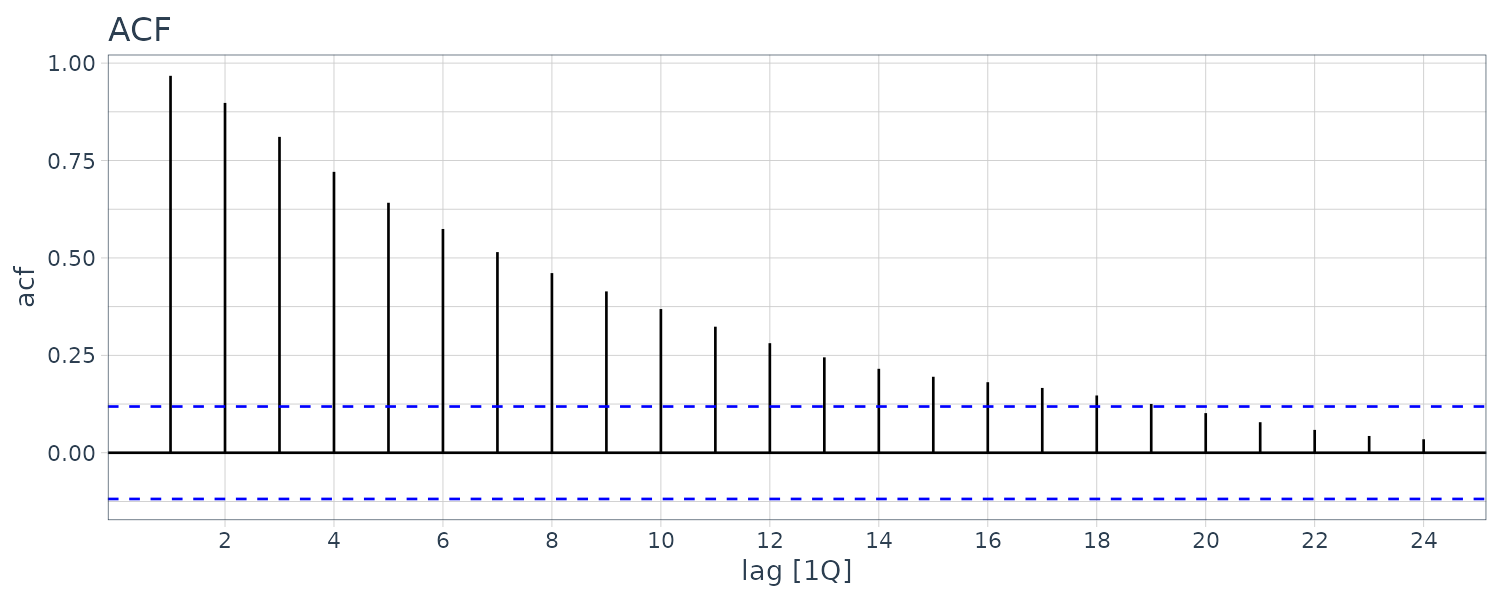

\[\begin{aligned} \sqrt{T}r_{s} &\leq -1.96\\ \sqrt{T}r_{s} &\geq 1.96 \end{aligned}\]Example: Sample Autocorrelations for Unemployment

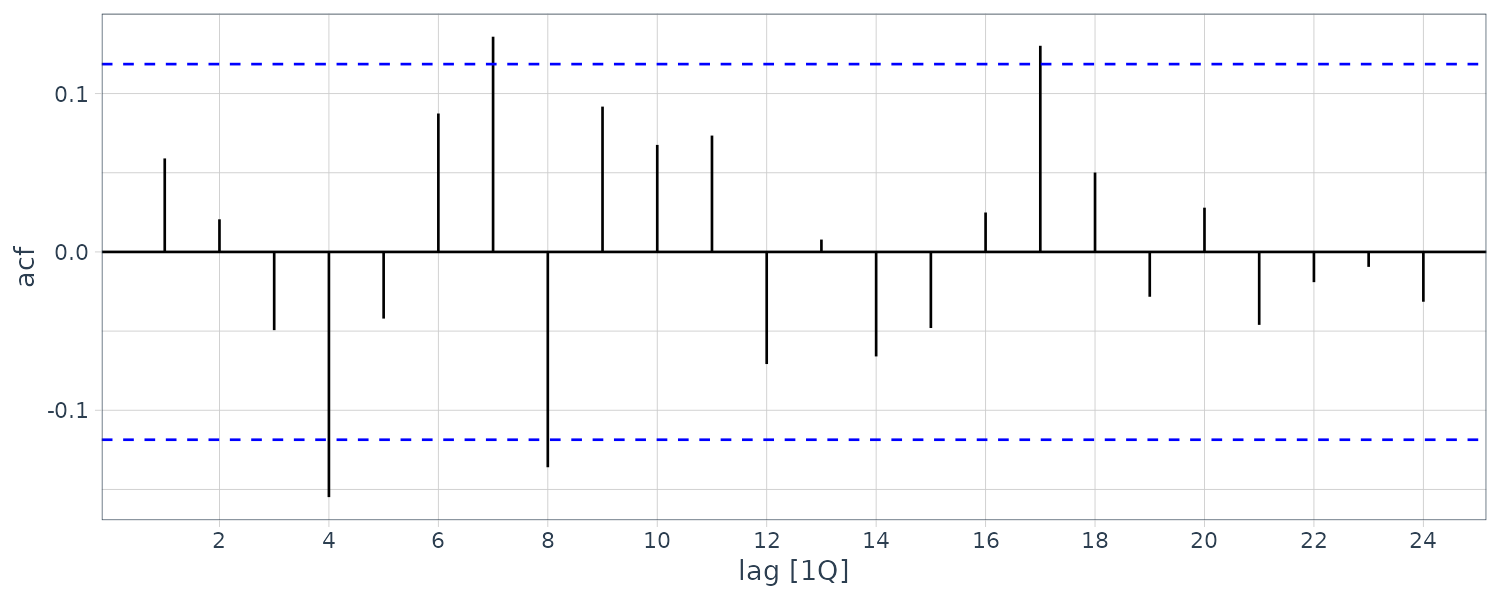

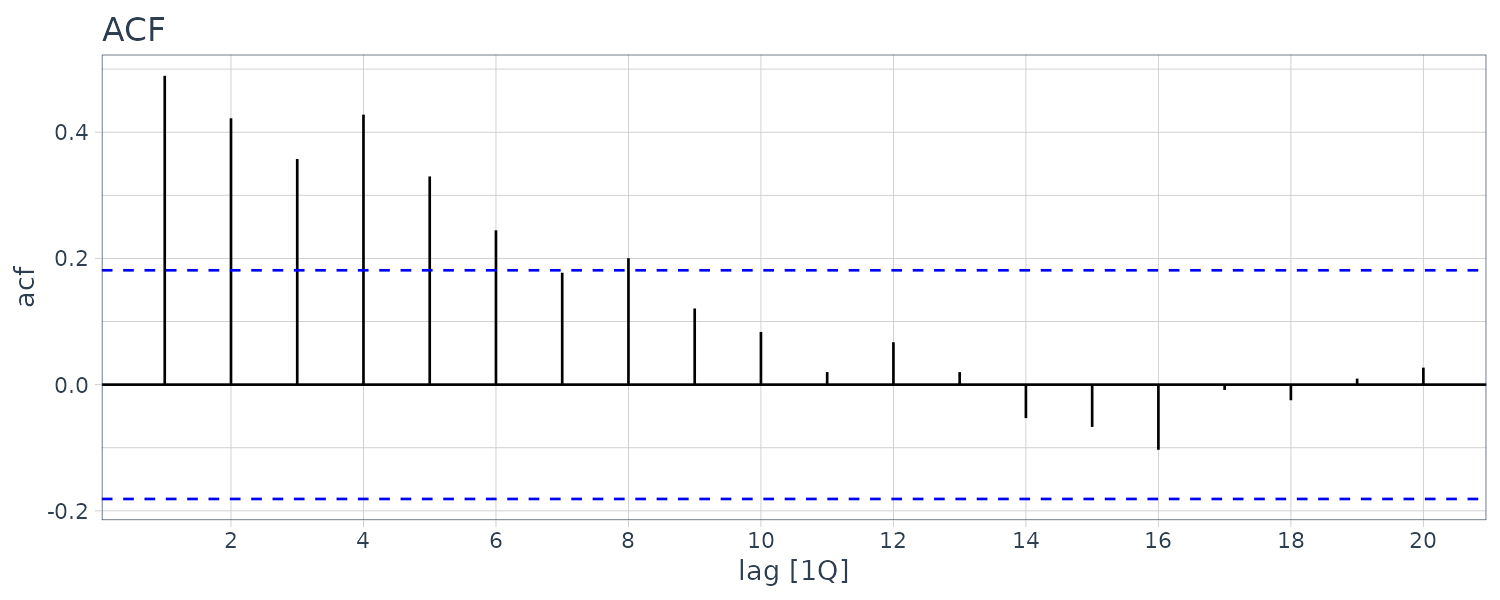

Consider the quarterly series for the U.S. unemployment rate found in the dataset usmacro.

The horizontal line is drawn at:

\[\begin{aligned} \pm\frac{1.96}{\sqrt{T}} &= \frac{1.96}{\sqrt{173}}\\[5pt] &= \pm 0.121 \end{aligned}\]usmacro |>

ACF(u) |>

autoplot() +

ggtitle("ACF") +

theme_tq()

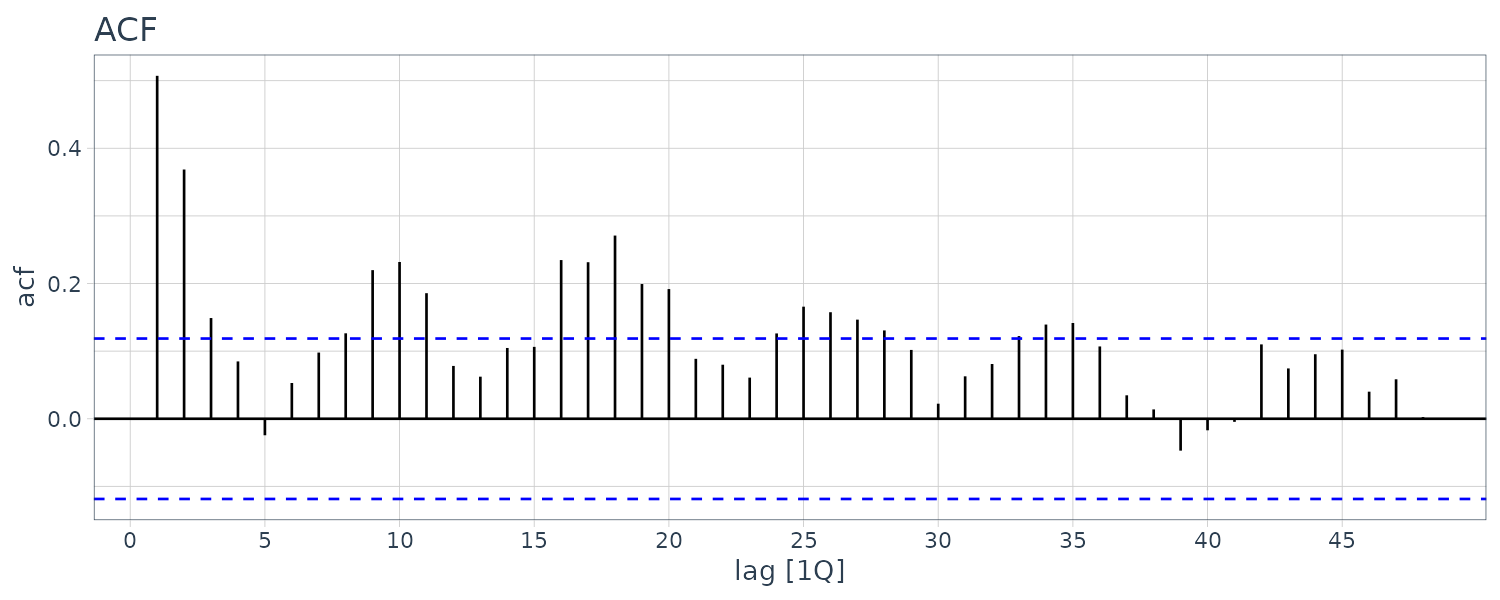

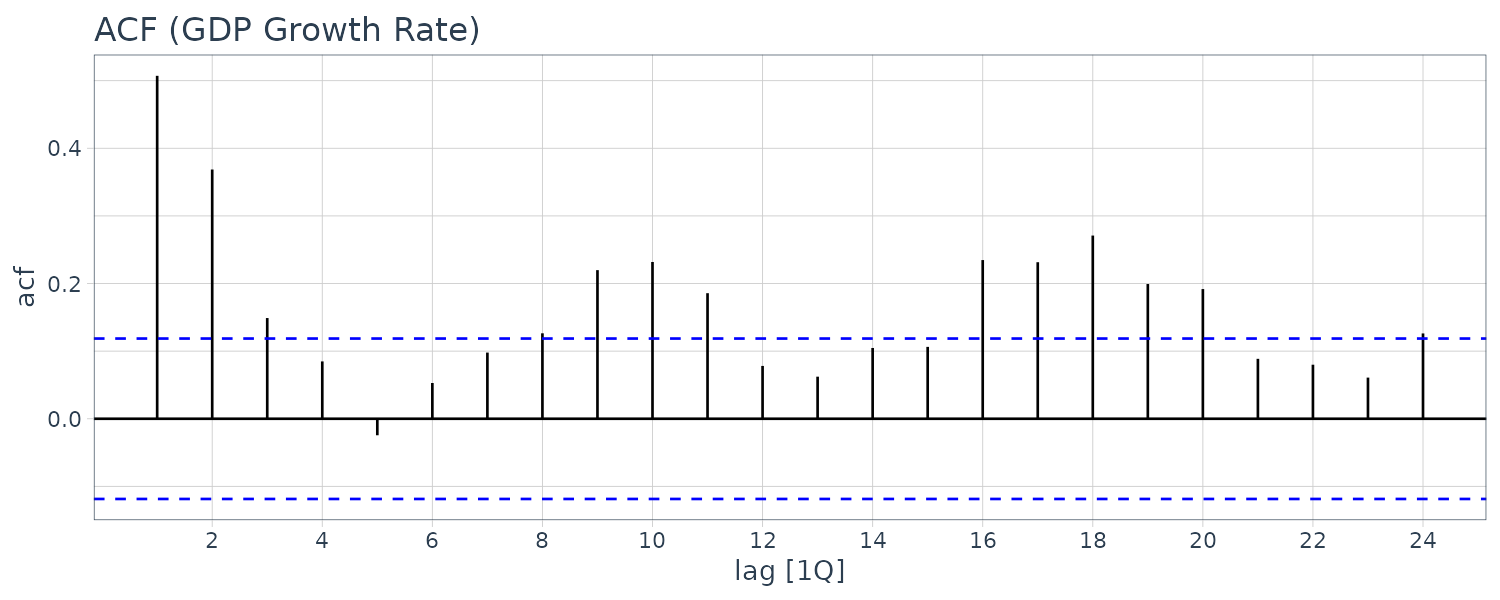

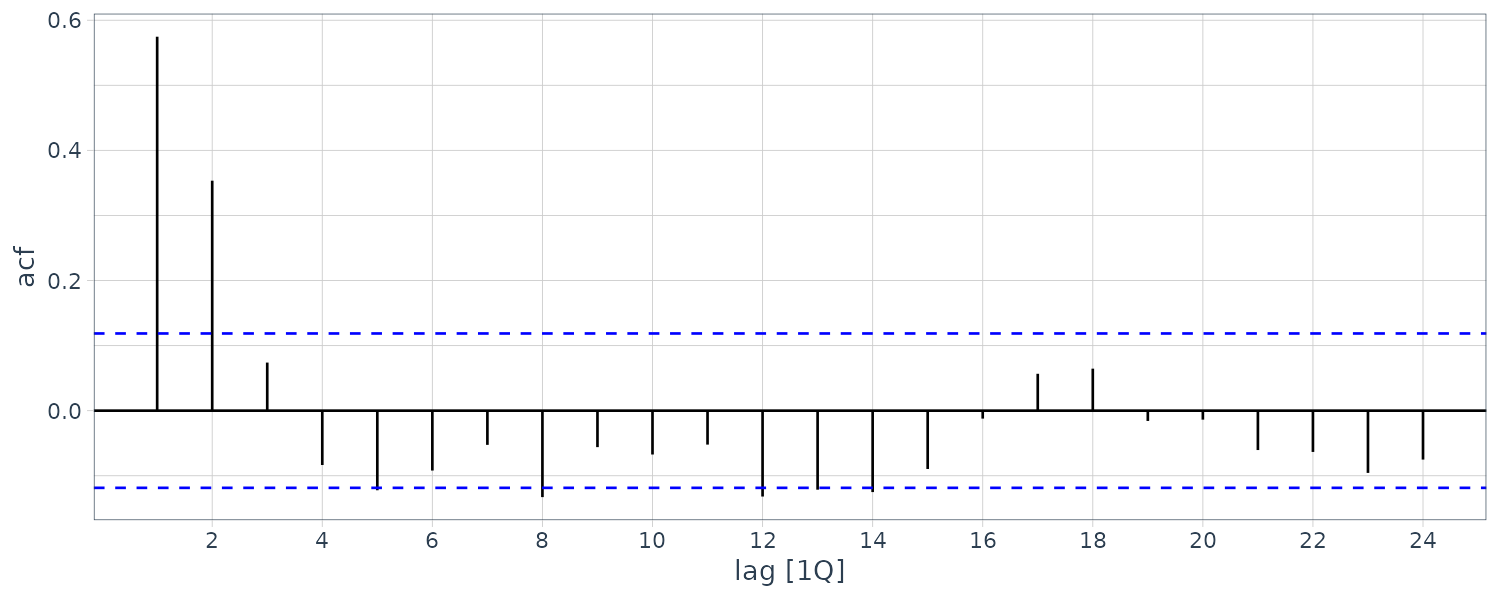

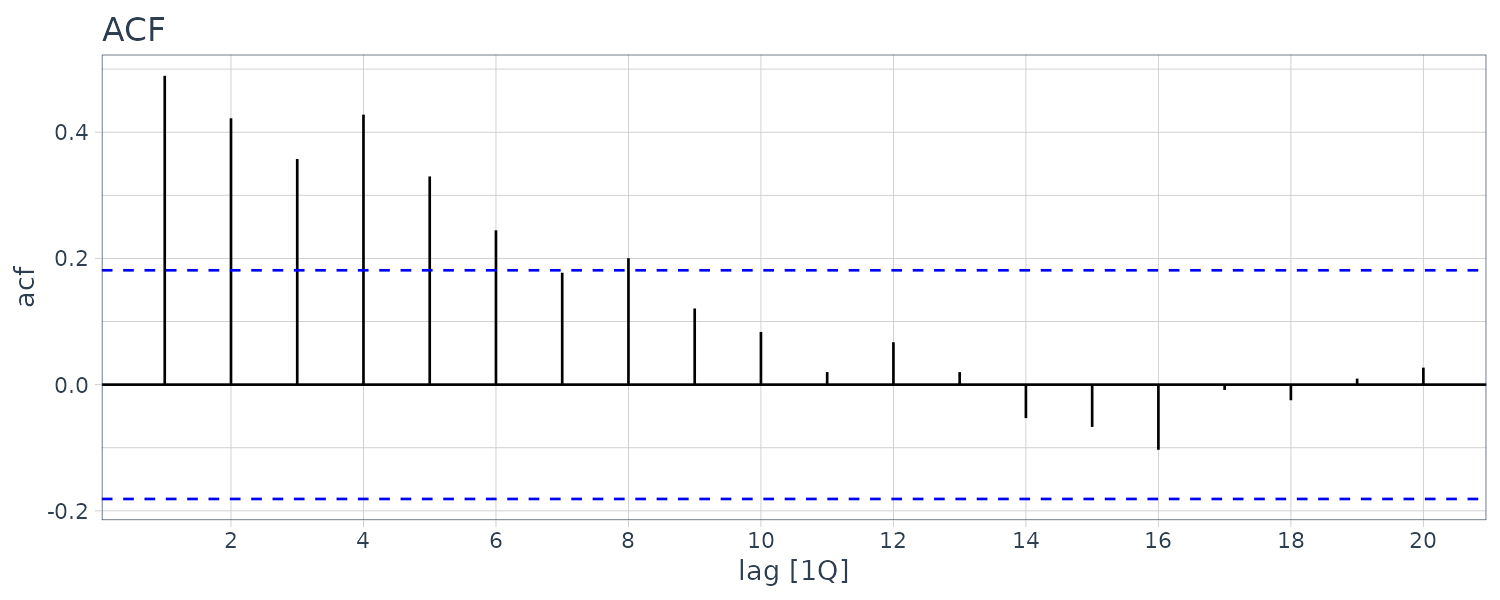

Example: Sample Autocorrelations for GDP Growth Rate

The autocorrelations are smaller than those for the unemployment series, but there’s a series of pattern where the correlations oscillate between signifcance and insignificance. This could be due to the business cycle.

usmacro |>

ACF(g, lag_max = 48) |>

autoplot() +

scale_x_continuous(

breaks = seq(0, 48, 5)

)+

ggtitle("ACF") +

theme_tq()

Stationarity

Stationarity means the mean and variances do not change over time and autocorrelation between variables depend only on how far apart they are in time. Another way to think of this is if you take any subset of the time series, they will have the same mean, variance, and \(\rho{1}, \rho_{2}, \cdots\). Up till now, we have assumed in the modeling that the variables are stationary.

Test of stationarity with “Unit Root Test” can be taken to detect non-stationarity which will cover later.

In addition to assuming that the variables are stationary, we also assume they are weakly dependent. Weak dependence implies that as \(s \rightarrow \infty\), \(x_{t}, x_{t+s}\) are becoming independent. In other words, as s grows larger, the autocorrelation decreases to zero. Stationary variables typically have weak dependence but not always.

Stationary variables need to be weakly dependent in order for the least squares estimator to be consistent.

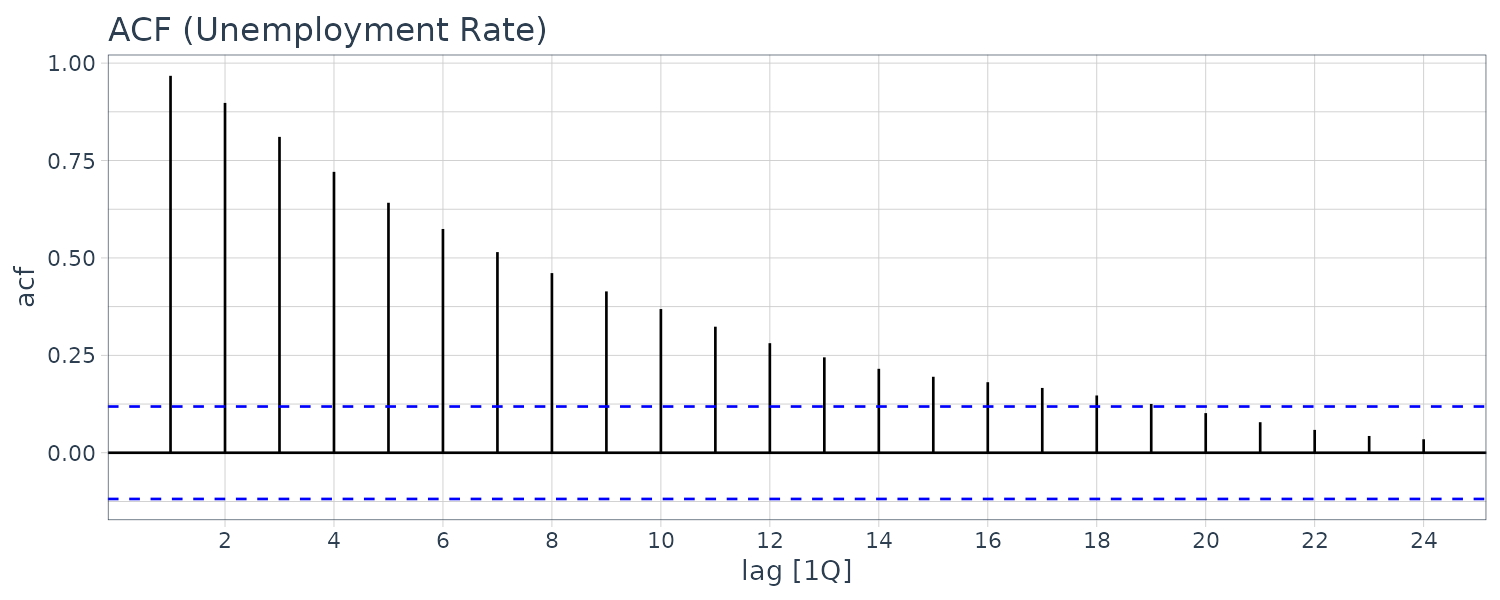

Example: Are the Unemployment and Growth Rate Series Stationary and Weakly Dependent?

We will answer the question of stationarity in terms of formal test later when we cover unit root test. For now, we graph the ACFs:

usmacro |>

ACF(u) |>

autoplot() +

ggtitle("ACF (Unemployment Rate)") +

theme_tq()

usmacro |>

ACF(g) |>

autoplot() +

ggtitle("ACF (GDP Growth Rate)") +

theme_tq()

We can see that the autocorrelations die out pretty quickly so we conclude it is weakly dependent. But we cannot ascertain whether it is stationary from the correlogram. It turns out that unemployment rate is indeed stationary.

GDP growth rate looks more stationary and certainly weakly dependent.

Knowing the unemployment and growth rates are stationary and weakly dependent means that we can proceed to use them for time-series regression models with stationary variables. With the exception of a special case known as cointegration, variables in time-series regressions must be stationary and weakly dependent for the least squares estimator to be consistent.

Forecasting

In this section, we consider forecasting using two different models, an AR model, and an ARDL model. The focus is on short-term forecasting, typically up to three periods into the future.

Suppose we have the following ARDL(2, 2) model:

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \theta_{2}y_{t-2} + \beta_{1}x_{t-1} + \beta_{2}x_{t-2} + e_{t} \end{aligned}\]Notice that the term \(x_{t}\) is missing. The reason is it is unlikely that \(x_{t}\) will be observed at the time of forecast so the omission is desirable.

Hence the ARDL forecast equation would be:

\[\begin{aligned} y_{T+1} &= \delta + \theta_{1}y_{T} + \theta_{2}y_{T-1} + \beta_{1}x_{T} + \beta_{2}x_{T-1} + e_{t} \end{aligned}\]Defining the information set to be the set of all current and past observations of \(y\) and \(x\) at time \(t\) as:

\[\begin{aligned} I_{t} &= {y_{t}, y_{t-1}, \cdots, x_{t}, x_{t-1}, \cdots} \end{aligned}\]Assuming that we are standing at the end of the sample period, having observed \(y_{T}, x_{T}\), the one-period ahead forecasting problem is to find a forecast \(\hat{y}_{T+1}\mid I_{T}\).

The best forecast in a minimizing the conditional mean-squared forecast error :

\[\begin{aligned} E[(\hat{y}_{T+1} - y_{T+1})^{2}\mid I_{T}] \end{aligned}\]Is the conditional expectation:

\[\begin{aligned} \hat{y}_{T+1} &= E[Y_{T+1}\mid I_{t}] \end{aligned}\]For example, suppose we believe only 2 lags of \(y, x\) are relevant, we are assuming that:

\[\begin{aligned} E[y_{T+1}\mid I_{t}] &= E[y_{T+1}\mid y_{T}, y_{T - 1}, x_{T}, x_{T-1}]\\ &= \delta + \theta_{1}y_{T-1} + \theta_{2}y_{T-2} + \beta_{1}x_{T-1} + \beta_{2}x_{T-2} \end{aligned}\]By employing an ARDL(2, 2) model, we are assuming that, for forecasting \(y_{T+1}\) , observations from more than two periods in the past do not convey any extra information relative to that contained in the most recent two observations.

Furthermore, we require:

\[\begin{aligned} E[e_{T+1}\mid I_{T}] &= 0 \end{aligned}\]For 2-period and 3-period ahead forecasts:

\[\begin{aligned} \hat{y}_{T + 2} = \ &E[y_{T+2}\mid I_{T}]\\[5pt] = \ &\delta + \theta E[Y_{T+1}\mid I_{T}] + \theta_{2}y_{T}\ + \\ &\beta_{1}E[x_{T+1}\mid I_{t}] + \beta_{2}x_{T} \end{aligned}\] \[\begin{aligned} \hat{y}_{T + 3} = \ &E[y_{T+3}\mid I_{T}]\\[5pt] = \ &\delta + \theta E[Y_{T+2}\mid I_{T}] + \theta_{2}E[Y_{T+1}\mid I_{T}]\ + \\ &\beta_{1}E[x_{T+2}\mid I_{t}] + \beta_{2}E[X_{T + 1}\mid I_{T}] \end{aligned}\]Note that we have estimate of \(E[y_{T+2} \mid I_{T}], [y_{T+1} \mid I_{T}]\) from previous period’s forecasts, but \([x_{T+2} \mid I_{T}], [x_{T+1} \mid I_{T}]\) require extra information. They could be derived from independent forecasts or in what-if type scenarios. If the model is a pure AR model, this issue does not arise.

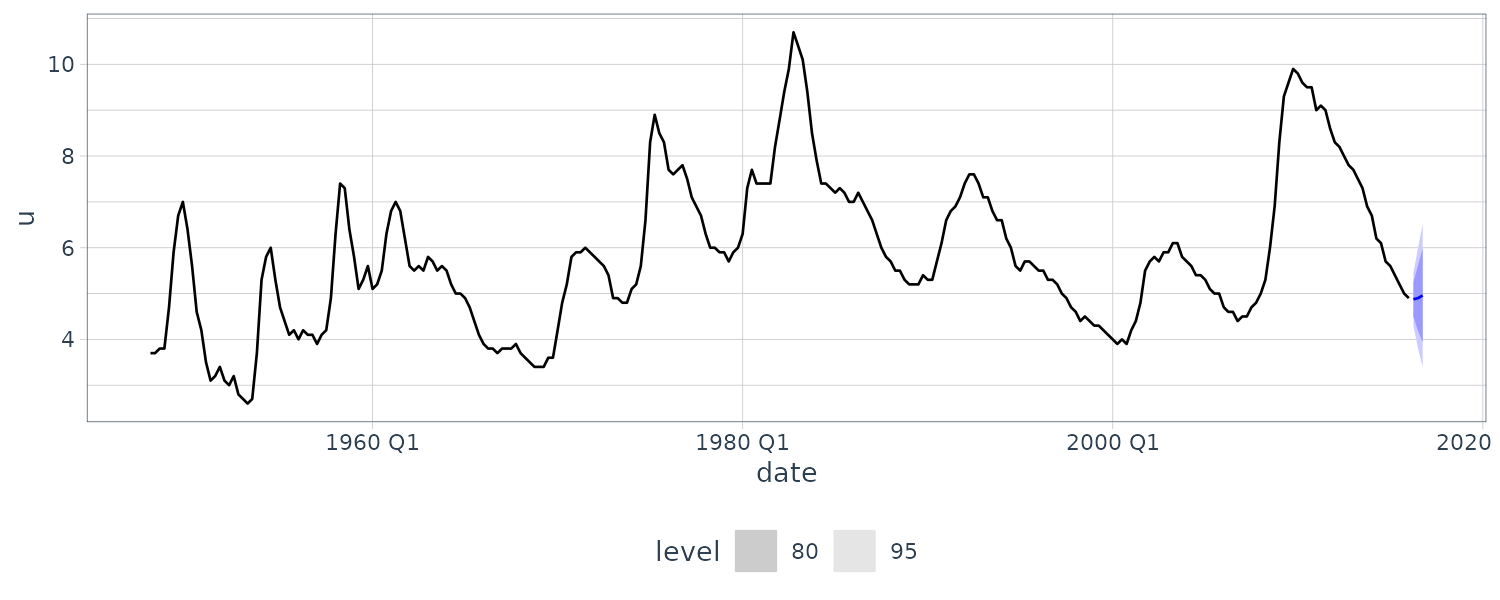

Example: Forecasting Unemployment with an AR(2) Model

To demonstrate how to use an AR model for forecasting, we consider this AR(2) model for forecasting US unemployment rate:

\[\begin{aligned} U_{t} &= \delta + \theta_{1}U_{t-1} + \theta_{2}U_{t-2} + e_{t} \end{aligned}\]We assume that past values of unemployment cannot be used to forecast the error in the current period:

\[\begin{aligned} E[e_{t} \mid I_{t-1}] &= 0 \end{aligned}\]The expressions for forecasts for the remainder of 2016:

\[\begin{aligned} \hat{U}_{2016Q2} &= E[U_{2016Q2}\mid I_{2016Q1}]\\[5pt] &= \delta + \theta_{1}U_{2016Q1} + \theta_{2}U_{2015Q4} \end{aligned}\] \[\begin{aligned} \hat{U}_{2016Q3} &= E[U_{2016Q3}\mid I_{2016Q1}]\\[5pt] &= \delta + \theta_{1}E[U_{2016Q2}\mid I_{2016Q1}] + \theta_{2}U_{2016Q1} \end{aligned}\] \[\begin{aligned} \hat{U}_{2016Q4} &= E[U_{2016Q4}\mid I_{2016Q1}]\\[5pt] &= \delta + \theta_{1}E[U_{2016Q3}\mid I_{2016Q1}] + \theta_{2}E[U_{2016Q2}\mid I_{2016Q_{1}}] \end{aligned}\]Example: OLS Estimation of the AR(2) Model for Unemployment

The assumption that \(E[e_{t}\mid I_{t-1}] = 0\) is sufficient for the OLS estimator to be consistent. However, the OLS estimator will be biased, but consistency gives it a large-sample justification. The reason it is biased is because even though:

\[\begin{aligned} \text{cov}(e_{t}, y_{t-s}) &= 0\\ \text{cov}(e_{t}, x_{t-s}) &= 0 \end{aligned}\] \[\begin{aligned} s &> 0 \end{aligned}\]It does not mean future values will not be correlated with \(e_{t}\), which is a condition for the estimator to be unbiased. In other words, the assumption of \(E[e_{t}\mid I_{t-1}]\) is weaker than the strict exogeneity assumption.

The OLS estimates yields the following equation:

\[\begin{aligned} \hat{U}_{t} &= 0.2885 + 1.6128 U_{t-1} - 0.6621 U_{t-2} \end{aligned}\] \[\begin{aligned} \hat{\sigma} &= 0.2947 \end{aligned}\] \[\begin{aligned} \text{se}(\theta_{0}) &= 0.0666\\ \text{se}(\theta_{1}) &= 0.0457\\ \text{se}(\theta_{2}) &= 0.0456 \end{aligned}\]The standard error and \(\hat{\sigma}\) estimates will be valid if the conditional homoskedasticity assumption is true:

\[\begin{aligned} \text{var}(e_{t}\mid U_{t-1}, U_{t-2}) &= \sigma^{2} \end{aligned}\]We can show this in R:

fit <- usmacro |>

model(

ARIMA(

u ~ 1 +

lag(u, 1) +

lag(u, 2) +

pdq(0, 0, 0) +

PDQ(0, 0, 0)

)

)

> tidy(fit) |>

select(-.model)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 lag(u, 1) 1.61 0.0454 35.5 6.95e-104

2 lag(u, 2) -0.662 0.0453 -14.6 4.80e- 36

3 intercept 0.289 0.0662 4.36 1.88e- 5

> report(fit)

Series: u

Model: LM w/ ARIMA(0,0,0) errors

Coefficients:

lag(u, 1) lag(u, 2) intercept

1.6128 -0.6621 0.2885

s.e. 0.0454 0.0453 0.0662

sigma^2 estimated as 0.08621: log likelihood=-51.92

AIC=111.84 AICc=111.99 BIC=126.27Example: Unemployment Forecasts

Having now the OLS estimates, we can use it for forecasting:

\[\begin{aligned} \hat{U}_{2016Q2} &= \hat{\delta} + \hat{\theta_{1}}U_{2016Q1} + \hat{\theta_{2}}U_{2015Q4}\\ &= 0.28852 + 1.61282 \times 4.9 - 0.66209 \times 5\\ &= 4.8809 \end{aligned}\] \[\begin{aligned} U_{2016Q1} &= 4.9\\ U_{2015Q4} &= 5 \end{aligned}\]Two quarters ahead forecast:

\[\begin{aligned} \hat{U}_{2016Q3} &= \hat{\delta} + \hat{\theta_{1}}\hat{U}_{2016Q2} + \hat{\theta_{2}}U_{2016Q1}\\ &= 0.28852 + 1.61282 \times 4.8809 - 0.66209 \times 4.9\\ &= 4.9163 \end{aligned}\] \[\begin{aligned} \hat{U}_{2016Q2} &= 4.8809\\ U_{2016Q1} &= 4.9 \end{aligned}\]Two quarters ahead forecast:

\[\begin{aligned} \hat{U}_{2016Q4} &= \hat{\delta} + \hat{\theta_{1}}\hat{U}_{2016Q3} + \hat{\theta_{2}}\hat{U}_{2016Q2}\\ &= 0.28852 + 1.61282 \times 4.9163 - 0.66209 \times 4.8809\\ &= 4.986 \end{aligned}\] \[\begin{aligned} \hat{U}_{2016Q3} &= 4.9163\\ \hat{U}_{2016Q2} &= 4.8809 \end{aligned}\]Forecast Intervals and Standard Errors

Given the general ARDL(2, 2) model:

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \theta_{2}y_{t-2} + \beta_{1}x_{t-1} + \beta_{2}x_{t-2} + e_{t} \end{aligned}\]The one-period ahead forecast error \(f_{1}\) is given by:

\[\begin{aligned} f_{1} &= y_{T+1} - \hat{y}_{T+1}\\ &= (\delta - \hat{\delta}) + (\theta_{1}- \hat{\theta}_{1})y_{T} + (\theta_{2} - \hat{\theta}_{2})y_{T-2} + (\beta_{1}- \hat{\beta}_{1})x_{T-1} + (\beta_{2} - \hat{\beta}_{2})x_{T-2} + e_{T+1} \end{aligned}\]To simplify calculation, we ignore the error from estimating the coefficients. The coefficients error in most cases pales with comparison with the variance of the random error. Using this simplication we have:

\[\begin{aligned} f_{1} &= e_{T+1} \end{aligned}\]For two-periods ahead forecast:

\[\begin{aligned} \hat{y}_{T+2} &= \delta + \theta_{1}\hat{y}_{T+1} + \theta_{2}y_{T} + \beta_{1}\hat{x}_{T+1} + \beta_{2}x_{T} \end{aligned}\]Assuming that the values for \(\hat{x}_{T+1}\) are given in a what-if scenario:

\[\begin{aligned} \hat{y}_{T+2} &= \delta + \theta_{1}\hat{y}_{T+1} + \theta_{2}y_{T} + \beta_{1}x_{T+1} + \beta_{2}x_{T} \end{aligned}\]And the actual two-period ahead:

\[\begin{aligned} y_{T+2} &= \delta + \theta_{1} y_{T+1} + \theta_{2}y_{T} + \beta_{1}x_{T+1} + \beta_{2}x_{T} + e_{T+2} \end{aligned}\]Then the two-period ahead forecast error is:

\[\begin{aligned} f_{2} &= y_{T+2} - \hat{y}_{T+2}\\ &= \theta_{1}(y_{T+1} - \hat{y}_{T+1}) + e_{T+2}\\ &= \theta_{1} f_{1} + e_{T+2}\\ &= \theta_{1}e_{T+1} + e_{T+2} \end{aligned}\]For the estimated three-period ahead and assuming that the values for \(\hat{x}_{T+1}\) are given in a what-if scenario:

\[\begin{aligned} \hat{y}_{T+3} &= \delta + \theta_{1}\hat{y}_{T+2} + \theta_{2}\hat{y}_{T+1} + \beta_{1}x_{T+2} + \beta_{2}x_{T+1} \end{aligned}\]And the actual three-period ahead:

\[\begin{aligned} y_{T+3} &= \delta + \theta_{1} y_{T+2} + \theta_{2}\hat{y}_{T+1} + \beta_{1}x_{T+2} + \beta_{2}x_{T+1} + e_{T+3} \end{aligned}\]Then the three-period ahead forecast error is:

\[\begin{aligned} f_{3} &= y_{T+3} - \hat{y}_{T+3}\\ &= \theta_{1}(y_{T+2} - \hat{y}_{T+2}) + \theta_{2}(y_{T+1} - \hat{y}_{T+1}) + e_{T+3}\\ &= \theta_{1} f_{2} + \theta_{2}f_{1} + e_{T+3}\\ &= \theta_{1}(\theta_{1}e_{T+1} + e_{T+2}) + \theta_{2}(e_{T+1}) + e_{T+3}\\ &= \theta_{1}^{2}e_{T+1} + \theta_{1}e_{T+2} + \theta_{2}e_{T+1} + e_{T+3}\\ &= (\theta_{1}^{2} + \theta_{2})e_{T+1} + \theta_{1}e_{T+2} + e_{T+3} \end{aligned}\]For the estimated 4-period ahead:

\[\begin{aligned} \hat{y}_{T+4} &= \delta + \theta_{1}\hat{y}_{T+3} + \theta_{2}\hat{y}_{T+2} + \beta_{1}x_{T+3} + \beta_{2}x_{T+2} \end{aligned}\]And the actual 4-period ahead:

\[\begin{aligned} y_{T+4} &= \delta + \theta_{1} y_{T+3} + \theta_{2}\hat{y}_{T+2} + \beta_{1}x_{T+3} + \beta_{2}x_{T+2} + e_{T+4} \end{aligned}\]Then the 4-period ahead forecast error is:

\[\begin{aligned} f_{4} &= y_{T+4} - \hat{y}_{T+4}\\ &= \theta_{1}(y_{T+3} - \hat{y}_{T+3}) + \theta_{2}(y_{T+2} - \hat{y}_{T+2}) + e_{T+4}\\ &= \theta_{1} f_{3} + \theta_{2}f_{2} + e_{T+4}\\ &= \theta_{1}((\theta_{1}^{2} + \theta_{2})e_{T+1} + \theta_{1}e_{T+2} + e_{T+3}) + \theta_{2}(\theta_{1}e_{T+1} + e_{T+2}) + e_{T+4}\\ &= \theta_{1}^{3}e_{T+1} + \theta_{2}\theta_{1}e_{T+1} + \theta_{1}^{2}e_{T+2} + \theta_{1}e_{T+3} + \theta_{2}\theta_{1}e_{T+1} + \theta_{2}e_{T+2} + e_{T+4}\\ &= (\theta_{1}^{3} + 2\theta_{2}\theta_{1} + \theta_{1}^{2})e_{T+1} + (\theta_{1}^{2} + \theta_{2})e_{T+2} + \theta_{1}e_{T+3}+ e_{T+4} \end{aligned}\]Taking variance of the error we get:

\[\begin{aligned} \sigma_{f1}^{2} &= \text{var}(f_{1}\mid I_{T})\\ &= \sigma^{2}\\[5pt] \sigma_{f2}^{2} &= \text{var}(f_{2}\mid I_{T})\\ &= \sigma^{2}(1 + \theta_{1}^{2})\\[5pt] \sigma_{f3}^{2} &= \text{var}(f_{3}\mid I_{T})\\ &= \sigma^{2}[(\theta_{1}^{2} + \theta_{2})^{2} + \theta_{1}^{2} + 1]\\ \sigma_{f4}^{2} &= \text{var}(f_{4}\mid I_{T})\\[5pt] &= \sigma^{2}[(\theta_{1}^{3} + 2\theta_{1}\theta_{2} + \theta_{2})^{2} + (\theta_{1}^{2} + \theta_{2})^{2} + \theta_{1}^{2} + 1]\\ \end{aligned}\]Example: Forecast Intervals for Unemployment from the AR(2) Model

Given the following AR(2) model:

\[\begin{aligned} U_{t} &= \delta + \theta_{1}U_{t-1} + \theta_{2}U_{t-2} + e_{t} \end{aligned}\]To forecast in R, we first fit the model using fable:

fit <- usmacro |>

model(ARIMA(u ~ pdq(2, 0, 0) + PDQ(0, 0, 0)))

> tidy(fit) |>

select(-.model)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 ar1 1.61 0.0449 35.9 2.09e-105

2 ar2 -0.661 0.0451 -14.7 2.44e- 36

3 constant 0.278 0.0174 16.0 4.77e- 41 The coefficients are slightly different because fable uses MLE while the book uses OLS. As the forecast variance calculation is not directly supported:

fit <- usmacro |>

model(

ARIMA(

u ~ 1 +

lag(u, 1) +

lag(u, 2) +

pdq(0, 0, 0) +

PDQ(0, 0, 0)

)

)

> tidy(fit) |>

select(-.model)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 lag(u, 1) 1.61 0.0454 35.5 6.95e-104

2 lag(u, 2) -0.662 0.0453 -14.6 4.80e- 36

3 intercept 0.289 0.0662 4.36 1.88e- 5 To forecast in R:

fcast <- fit |>

forecast(h = 3) |>

hilo(95) |>

select(-.model)

> fcast

# A tsibble: 3 x 4 [1Q]

date u .mean `95%`

<qtr> <dist> <dbl> <hilo>

1 2016 Q2 N(4.9, 0.087) 4.87 [4.297622, 5.452135]95

2 2016 Q3 N(4.9, 0.31) 4.90 [3.804995, 5.995970]95

3 2016 Q4 N(5, 0.64) 4.96 [3.391705, 6.525075]95 And the standard errors:

> sqrt(distributional::variance(fcast$u))

[1] 0.2945241 0.5589325 0.7993436And graphing the forecast:

fit |>

forecast(h = 3) |>

autoplot(usmacro) +

theme_tq()

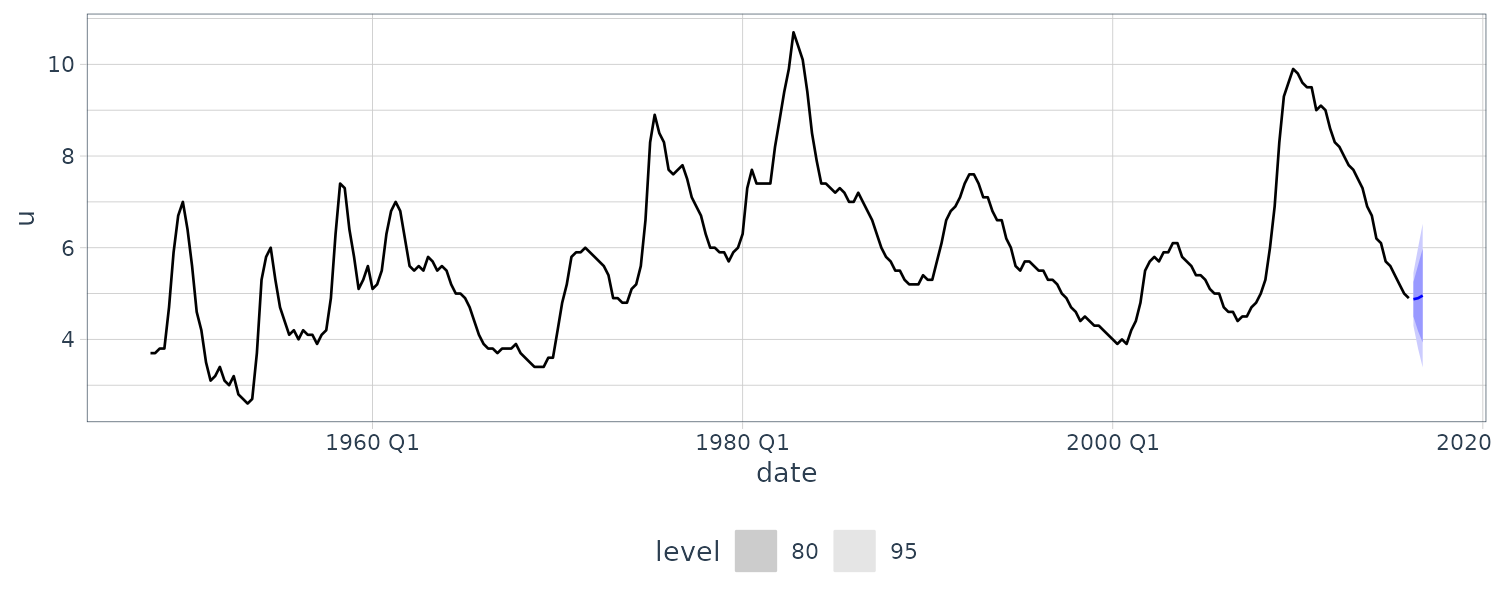

Example: Forecasting Unemployment with an ARDL(2, 1) Model

We include a lagged value of the growth rate of the GDP. The OLS model is:

\[\begin{aligned} \hat{U}_{t} &= 0.3616 + 1.5331 U_{t-1} - 0.5818 U_{t-2} - 0.04824 G_{t-1} \end{aligned}\]Unfortunately fable doesn’t support ARDL models directly. However we can do this using the dLagM package:

library(dLagM)

usmacro$g1 <- lag(usmacro$g)

rem.p <- list(

g1 = c(1)

)

rem.q <- c()

remove <- list(

p = rem.p, q = rem.q

)

fit <- ardlDlm(

u ~ g1,

p = 1,

q = 2,

data = usmacro,

remove = remove

)

> summary(fit)

Time series regression with "ts" data:

Start = 3, End = 273

Call:

dynlm(formula = as.formula(model.text), data = data)

Residuals:

Min 1Q Median 3Q Max

-0.83591 -0.17311 -0.02963 0.15226 1.20016

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.36157 0.07228 5.002 1.03e-06 ***

g1.t -0.04824 0.01949 -2.475 0.0139 *

u.1 1.53310 0.05555 27.597 < 2e-16 ***

u.2 -0.58179 0.05559 -10.465 < 2e-16 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.2919 on 267 degrees of freedom

Multiple R-squared: 0.9685, Adjusted R-squared: 0.9681

F-statistic: 2734 on 3 and 267 DF, p-value: < 2.2e-16We can see that the above result matches the OLS estimates.

Using a what-if scenario, we assume that:

\[\begin{aligned} G_{2016Q2} &= 0.869\\ G_{2016Q3} &= 1.069 \end{aligned}\]fcast <- dLagM::forecast(

fit,

x = c(0.31, 0.869, 1.069),

h = 3,

interval = TRUE

)

> fcast

$forecasts

95% LB Forecast 95% UB

1 4.402419 4.949870 5.522786

2 4.139368 5.057540 6.107228

3 3.842399 5.183947 6.747698

$call

forecast.ardlDlm(model = fit,

x = c(0.31, 0.869, 1.069),

h = 3, interval = TRUE)

attr(,"class")

[1] "forecast.ardlDlm" "dLagM" To retrieve the standard errors:

> (fcast$forecasts[, 3] - fcast$forecasts[, 2]) / 1.96

[1] 0.2923041 0.5355552 0.7978317In fable, we can use the autocorrelation of the error to get similar coefficients. Note that the intercept is different from ARDL as the equation is fundamentally different. The coefficients are slightly different due to MLE vs OLS:

\[\begin{aligned} \hat{U}_{t} &= 5.76 - 0.00574 G_{t-1} + e_{t}\\ e_{t} &= 1.61 e_{t-1} - 0.659 e_{t-2} \end{aligned}\]fit <- usmacro |>

model(

ARIMA(

u ~ lag(g) +

pdq(2, 0, 0) +

PDQ(0, 0, 0)

)

)

> tidy(fit) |>

select(-.model)

# A tibble: 4 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 ar1 1.61 0.0454 35.5 4.25e-104

2 ar2 -0.659 0.0456 -14.5 1.52e- 35

3 lag(g) -0.00574 0.0119 -0.482 6.30e- 1

4 intercept 5.76 0.362 15.9 1.04e- 40 nd <- new_data(usmacro, 3)

nd <- nd |>

mutate(

u = NA,

g = NA

)

nd$g[1] <- 0.869

nd$g[2] <- 1.069

fcast <- fit |>

forecast(new_data = nd) |>

hilo(95) |>

select(-.model)

> fcast

# A tsibble: 3 x 5 [1Q]

date u .mean g `95%`

<qtr> <dist> <dbl> <dbl> <hilo>

1 2016 Q2 N(4.9, 0.087) 4.88 0.869 [4.297938, 5.454094]95

2 2016 Q3 N(4.9, 0.31) 4.90 1.07 [3.802453, 5.994534]95

3 2016 Q4 N(5, 0.64) 4.96 NA [3.389150, 6.521940]95

> sqrt(distributional::variance(fcast$u))

[1] 0.2949431 0.5592147 0.7991958And plotting the forecast:

fit |>

forecast(nd) |>

autoplot(usmacro) +

theme_tq()

Note that the forecast variance we get is similar to dLagM, and recall the coefficients are similar too. So going forward while doing forecast, we would revert to using fable and using autocorrelation for the errors rather than using autoregressive terms.

Assumptions for Forecasting

Assumption 1

The time series \(y, x\) are stationary and weakly dependent.

Assumption 2

The conditional expectation is a linear function of a finite number of lags of \(y, x\):

\[\begin{aligned} E[y_{t}\mid I_{t-1}] &= \delta + \theta_{1}y_{t-1}+ \cdots + \theta_{p}y_{t-p} + \beta_{1}x_{t-1} + \cdots + \beta_{q}x_{t-1} \end{aligned}\]The error term is such that:

\[\begin{aligned} E[e_{t}\mid I_{t-1}] &= 0 \end{aligned}\]And not serially correlated:

\[\begin{aligned} E[e_{t}e_{s}\mid I_{t-1}] &= 0 \end{aligned}\] \[\begin{aligned} t &\neq s \end{aligned}\]For example, correlation between \(e_{t}, e_{t-1}\) implies that:

\[\begin{aligned} E[e_{t}\mid I_{t-1}] &= \rho e_{t-1}\\ E[y_{t}\mid I_{t-1}] &= \delta + \theta_{1}y_{t-1} + \rho e_{t-1} \end{aligned}\]This can be resolved by adding another lag of \(y\):

\[\begin{aligned} E[y_{t}\mid I_{t-1}] &= \delta + \theta_{1}y_{t-1} + \rho e_{t-1}\\ &= \delta(1 - \rho) + (\theta_{1} + \rho)y_{t-1} - \rho \theta_{1}y_{t-2} \end{aligned}\]The assumption that \(E[e_{t}\mid I_{t-1}] = 0\) does not mean that a past error \(e_{t-j}\) could not affect current and future values of \(x\). If \(x\) is a policy variable whose setting reacts to past values of \(e\) and \(y\), the least squares estimator is still consistent. However, correlation between \(e_{t}\) and past values of \(x\) must be uncorrelated.

Assumption 3

The errors are conditional homoskedastic:

\[\begin{aligned} \textrm{var}(e_{t}\mid I_{t-1}) &= \sigma^{2} \end{aligned}\]This assumption is needed for the traditional least squares standard errors to be valid and to compute the forecast standard errors.

Selecting Lag Lengths

How do we decide on \(p, q\)? There are a few ways to do this:

- Extend the lag lengths for \(y, x\) as long as their estimated coefficients are significantly different from 0.

- Choose \(p, q\) to minimize the AIC/BIC.

- Evaluate the out-of-sample forecasting performance of each \(p,q\) combination using a hold-out sample.

- To check for serial correlation in the error term.

Granger Causality

Granger causality refers to the ability of lags of one variable to contribute to the forecast of another variable. In this case the variable \(x\) “Granger cause” \(y\) if:

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \cdots + \theta_{p}y_{t-p} + \beta_{0}x_{t} + \cdots \beta_{q}x_{t-q} + e_{t} \end{aligned}\]Or if variable \(x\) does not “Granger clause” \(y\) if:

\[\begin{aligned} E[y_{t}|y_{t-1}, y_{t-2}, \cdots, y_{t-p}, x_{t-1}, x_{t-2}, \cdots, x_{t-q}] &= E[y_{t}|y_{t-1}, y_{t-2}, \cdots, y_{t-p}] \end{aligned}\]To test for Granger Causality, we use the following hypothesis testing and running a F-test:

\[\begin{aligned} H_{0}: &\beta_{1} = 0, \cdots, \beta_{q} = 0\\ H_{1}: &\text{At least one }\beta_{i} \neq 0 \end{aligned}\]This can be done using the F-test.

Note that if x Granger causes y, it does not necessarily imply a direct causal relationship between x and y. It means that having information on past x values will improve the forecast for y. Any causal effect can be an indirect one.

Example: Does the Growth Rate Granger Cause Unemployment?

Recall:

\[\begin{aligned} \hat{U}_{t} &= 0.3616 + 1.5331 U_{t-1} - 0.5818 U_{t-2} - 0.04824 G_{t-1} \end{aligned}\]To calculate the F-statistic:

\[\begin{aligned} F &= t^{2}\\ &= \Bigg(\frac{0.04824}{\text{SE}(G_{t-1})}\Bigg)^{2}\\ &= \Bigg(\frac{0.04824}{0.01949}\Bigg)^{2}\\ &= 6.126 \end{aligned}\]We can calculate the F-value for \(F_{(0.95, 1, 267)}\) in R:

> qf(0.95, df1 = 1, df2 = 267)

3.876522Or we could use anova() function:

fitR <- lm(

u ~ lag(u) +

lag(u, 2),

data = usmacro

)

fitU <- lm(

u ~ lag(u) +

lag(u, 2) +

lag(g),

data = usmacro

)

> anova(fitU, fitR)

Analysis of Variance Table

Model 1: u ~ lag(u) + lag(u, 2) + lag(g)

Model 2: u ~ lag(u) + lag(u, 2)

Res.Df RSS Df Sum of Sq F Pr(>F)

1 267 22.753

2 268 23.276 -1 -0.52209 6.1264 0.01394 *

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1 Since \(6.126 > 3.877\), we would reject the null hypothesis and conclude that \(G\) Granger causes \(U\).

If there is more than one lag:

\[\begin{aligned} \hat{U}_{t} &= \delta + \theta_{1}U_{t-1} + \theta_{2}U_{t-2} + \beta_{1}G_{t-1} + \beta_{2}G_{t-2} + \beta_{3}G_{t-3} + \beta_{4}G_{t-4} + e_{t} \end{aligned}\]Using anova() in R:

fitR <- lm(

u ~ lag(u)

+ lag(u, 2) +

lag(g),

data = usmacro[3:nrow(usmacro), ]

)

fitU <- lm(

u ~ lag(u) +

lag(u, 2) +

lag(g) +

lag(g, 2) +

lag(g, 3) +

lag(g, 4),

data = usmacro

)

> anova(

fitU,

fitR

)

Analysis of Variance Table

Model 1: u ~ lag(u) + lag(u, 2) + lag(g) + lag(g, 2) +

lag(g, 3) + lag(g, 4)

Model 2: u ~ lag(u) + lag(u, 2) + lag(g)

Res.Df RSS Df Sum of Sq F Pr(>F)

1 262 21.302

2 265 22.738 -3 -1.4358 5.8865 0.000667 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1 Because anova require the same dataset, and the first 2 lags of the reduced model is not used, we need to subset the dataset.

The formula for F-statistic:

\[\begin{aligned} F &= \frac{Mean of squares between groups}{Mean of squares within groups}\\ &= \frac{\frac{SSE_{R} - SSE_{U}}{df_{R} - df_{U}}}{\frac{SSE_{U}}{df_{U}}} \end{aligned}\]Testing for Serially Correlated Errors

For the abscene of serial correlation, we require the conditional covariance between any two different errors to be 0:

\[\begin{aligned} E[e_{t}e_{s}\mid I_{t-1}] &= 0\\ t &\neq s \end{aligned}\]If the errors are serally correlated, then the usual least squares standard errors are invalid.

We will discuss 3 ways to test for serial correlated errors:

- Checking Correlogram

- Lagrange Multiplier Test

- Durbin-Watson Test

Checking the Correlogram of the Least Squares Residuals

We can check the correlogram for any autocorrelations that are significantly different from zero.

The kth order autocorrelation for the residuals is:

\[\begin{aligned} r_{k} &= \frac{\sum_{t=k+1}^{T}\hat{e}_{t}\hat{e}_{t-k}}{\sum_{t=1}^{T}\hat{e}_{t}^{2}} \end{aligned}\]For no correlation, ideally would like:

\[\begin{aligned} |r_{k}| < \frac{2}{\sqrt{T}} \end{aligned}\]Example: Checking the Residual Correlogram for the ARDL(2, 1) Unemployment Equation

fit <- lm(

u ~ lag(u) +

lag(u, 2) +

lag(g),

data = usmacro

)

usmacro$residuals <- c(NA, NA, resid(fit))

usmacro |>

ACF(residuals) |>

autoplot() +

ggtitle("ACF") +

theme_tq()

We can do this using fable as well:

fit <- usmacro |>

model(

ARIMA(

u ~ lag(g) +

pdq(2, 0, 0) +

PDQ(0, 0, 0)

)

)

fit |>

gg_tsresiduals() |>

purrr::pluck(2) +

theme_tq()

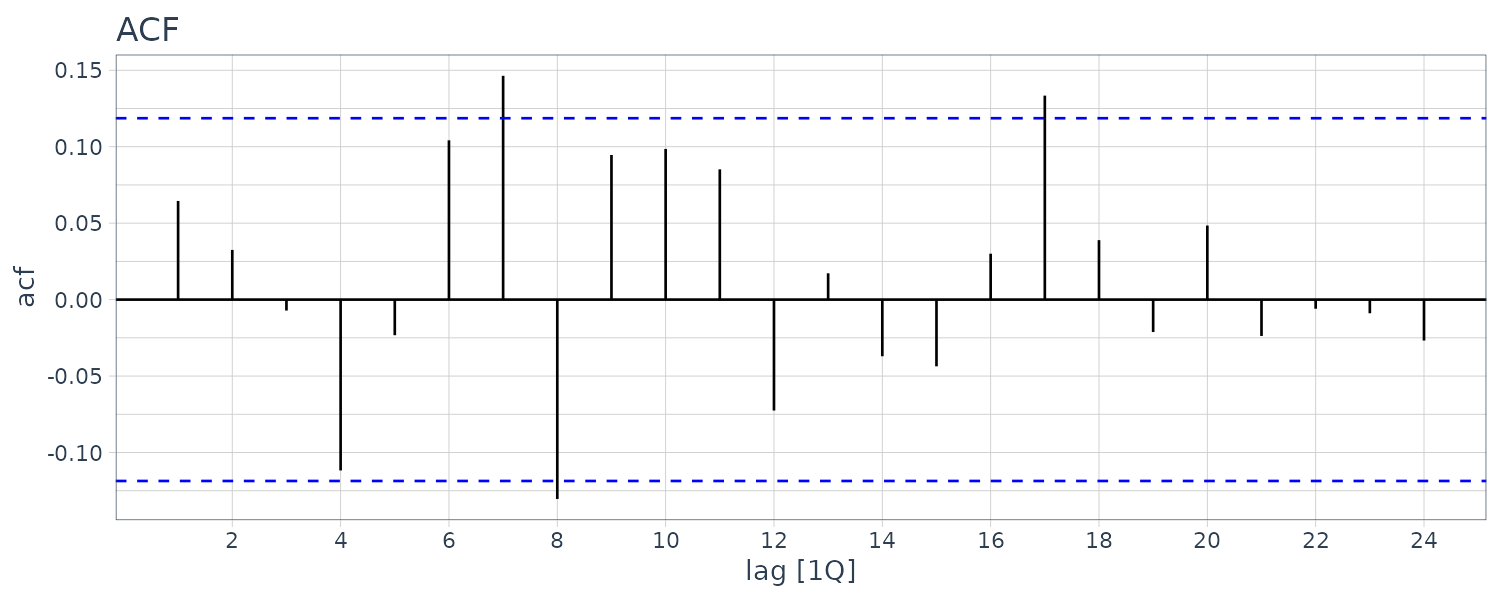

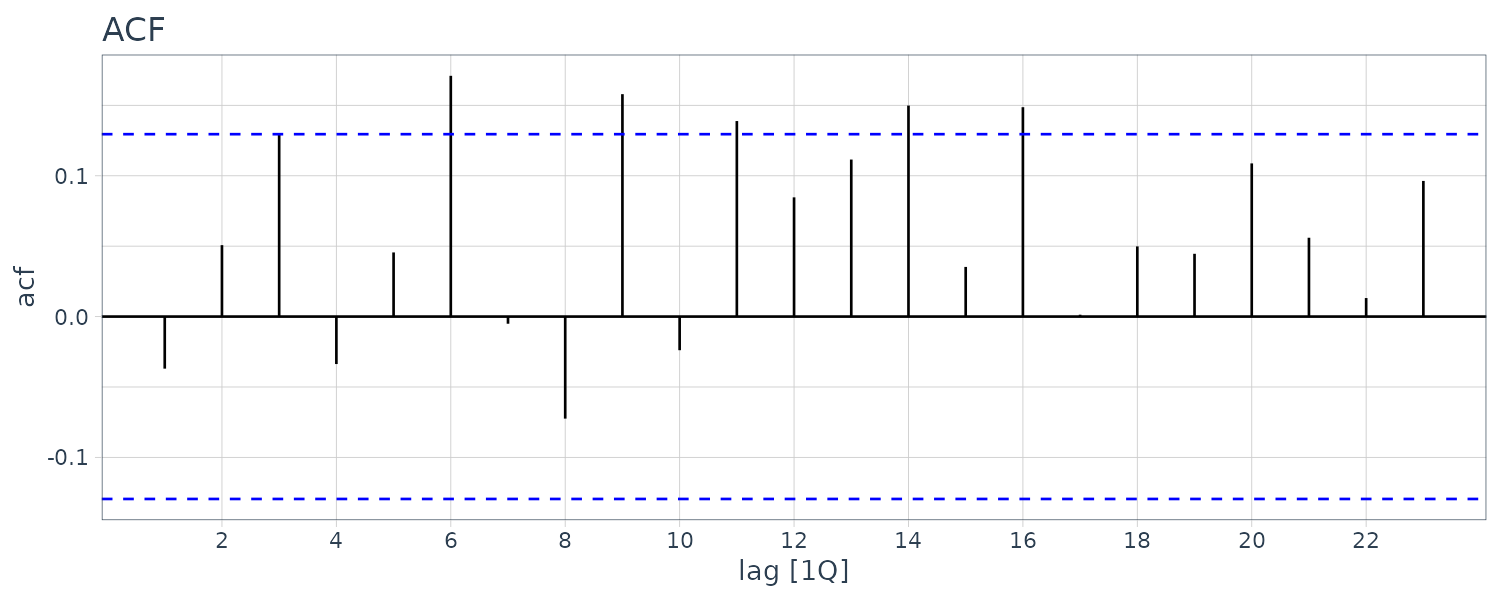

Example: Checking the Residual Correlogram for an ARDL(1, 1) Unemployment Equation

Suppose we omit \(U_{t-2}\). If \(U_{t-2}\) is an important predictor, it should lead to serial correlation in the errors. We can graph the correlogram to see this:

fit <- usmacro |>

model(

ARIMA(

u ~ lag(g) +

pdq(1, 0, 0) +

PDQ(0, 0, 0)

)

)

fit |>

gg_tsresiduals() |>

purrr::pluck(2) +

theme_tq()

Example: Lagrange Multiplier Test

Also known as the Breusch-Godfrey test. It is based on the idea of Lagrange multiplier testing.

Consider the ARDL(1, 1) model:

\[\begin{aligned} y_{t} &= \delta + \beta_{1}x_{t-1} + \beta_{2}x_{t-2} + e_{t} \end{aligned}\]The null hypothesis is that the errors \(e_{t}\) are uncorrelated. To express this null hypothesis in terms of restrictions on one or more parameters, we can introduce a model for an alternative hypothesis, with that model describing the possible nature of any autocorrelation. We will consider a number of alternative models.

Testing for AR(1) Errors

In the first instance, we consider an alternative hypothesis that the errors are correlated through the AR(1) process:

\[\begin{aligned} e_{t} &= \rho e_{t-1} + v_{t} \end{aligned}\]Where the errors \(v_{t}\) are uncorrelated. Substituting \(e_{t}\) in the above equation:

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \beta_{1}x_{t-1} + \rho e_{t-1} + v_{t} \end{aligned}\]We can draft the the hypothesis as:

\[\begin{aligned} H_{0}:&\ \rho = 0\\ H_{1}:&\ \rho \neq 0 \end{aligned}\]One way is to regress \(y_{t}\) on \(y_{t-1}, x_{t-1}, e_{t-1}\) and test for the significance of the coefficient of \(\hat{e}_{t-1}\) as we can’t observe \(e_{t-1}\) and to then use a t- or F-test to test the significance of the coefficient of \(e_{t-1}\).

A second way is to derive the relevant auxiliary regression for the autocorrelation LM test:

\[\begin{aligned} y_{t} &= \delta + \theta y_{t-1} + \beta_{1}x_{t-1} + \rho \hat{e}_{t-1} + v_{t} \end{aligned}\]Note that:

\[\begin{aligned} y_{t} &= \hat{y}_{t} + \hat{e}_{t}\\ &= \hat{\delta}+ \hat{\theta}_{1}y_{t-1} + \hat{\delta}_{1}x_{t-1} + \hat{e}_{t} \end{aligned}\]Subsitute into the original equation, we get:

\[\begin{aligned} \hat{\delta}_{t} + \hat{\theta}_{1}y_{t-1} + \hat{\beta}_{1}x_{t-1} + \hat{e}_{t} &= \delta + \theta y_{t-1} + \beta_{1}x_{t-1} + \rho \hat{e}_{t-1} + v_{t} \end{aligned}\]Rearranging the equation yields the auxiliary regression:

\[\begin{aligned} \hat{e}_{t} &= (\delta - \hat{\delta}) + (\theta_{1} - \hat{\theta}_{1})y_{t-1} + (\beta - \hat{\beta}_{1})x_{t-1} + \rho\hat{e}_{t-1} + v_{t}\\ &= \gamma_{1} + \gamma_{2}y_{t-1} + \gamma_{3}x_{t-1} + \rho\hat{e}_{t-1} + v_{t} \end{aligned}\] \[\begin{aligned} \gamma_{1} &= \delta - \hat{\delta}\\ \gamma_{2} &= \theta_{1} - \hat{\theta}_{1}\\ \gamma_{3} &= \delta_{1}- \hat{\delta}_{1} \end{aligned}\]Because \(\delta - \hat{\delta}, \theta_{1} - \hat{\theta}_{1}, \beta - \hat{\beta}_{1}\) are centered around zero, if there is significant explanatory power, it will come from \(\hat{e}_{t-1}\).

If \(H_{0}\) is true, then the LM has an approximately \(\chi_{(1)}^{2}\) distribution.

Testing for MA(1) Errors

The alternative hypothesis in this case is modeled using the MA(1) process:

\[\begin{aligned} e_{t} &= \phi v_{t-1} + v_{t} \end{aligned}\]Resulting in the following ARDL(1, 1) model:

\[\begin{aligned} y_{t} &= \delta + \theta_{1}y_{t-1} + \beta_{1}x_{t-1} + \phi v_{t-1} + v_{t} \end{aligned}\]The hypothesis would be:

\[\begin{aligned} H_{0}:&\ \phi = 0\\ H_{1}:&\ \phi \neq 0 \end{aligned}\]Testing for Higher Order AR or MA Errors

The LM test can be extended to alternative hypotheses in terms of higher order AR or MA models.

For example for an AR(4) model:

\[\begin{aligned} e_{t} &= \psi_{1}e_{t-1} + \psi_{2}e_{t-2} + \psi_{3}e_{t-3} + \psi_{4}e_{t-4} + v_{t} \end{aligned}\]And MA(4) model:

\[\begin{aligned} e_{t} &= \phi_{1}v_{t-1} + \phi_{2}v_{t-2} + \phi_{3}v_{t-3} + \phi_{4}v_{t-4} + v_{t} \end{aligned}\]AR(4) hypothesis:

\[\begin{aligned} H_{0}:&\ \psi_{1} = 0, \psi_{2} = 0, \psi_{3} = 0, \psi_{4} = 0\\ H_{1}:&\ \text{at least one } \psi_{i} \text{ is nonzero} \end{aligned}\] \[\begin{aligned} H_{0}:&\ \phi_{1} = 0, \phi_{2} = 0, \phi_{3} = 0, \phi_{4} = 0\\ H_{1}:&\ \text{at least one } \phi_{i} \text{ is nonzero} \end{aligned}\]The alternative auxillary equations will be::

\[\begin{aligned} \hat{y}_{t} &= \gamma_{1} + \gamma_{2}y_{t-1} + \gamma_{3}x_{t-1} + \psi_{1}\hat{e}_{t-1} + \psi_{2}\hat{e}_{t-2} +\psi_{3}\hat{e}_{t-3} +\psi_{4}\hat{e}_{t-4} + v_{t} \end{aligned}\]And MA(4) model:

\[\begin{aligned} \hat{e}_{t} &= \gamma_{1} + \gamma_{2}y_{t-1} + \gamma_{3}x_{t-1} + \phi_{1}\hat{e}_{t-1} + \phi_{2}\hat{e}_{t-2} +\phi_{3}\hat{e}_{t-3} +\phi_{4}\hat{e}_{t-4} + v_{t} \end{aligned}\]When \(H_{0}\) is true, the LM statistic would have a \(\chi_{(4)}^{2}\) distribution.

Durbin-Watson Test

Durbin-Watson test doesn’t require large sample approximation. The standard test is:

\[\begin{aligned} H_{0}:&\ \rho = 0\\ H_{1}:&\ \rho \neq 0 \end{aligned}\]For the AR(1) error model:

\[\begin{aligned} e_{t} &= \rho e_{t-1} + v_{t} \end{aligned}\]However it is not applicable when there are lagged dependent variables.

Both Ljung-Box and Durbin Watson are used roughly for the same purpose i.e. to check the autocorrelation in a data series. While Ljung-Box can be used for any lag value, Durbin Watson can be used just for the lag of 1.

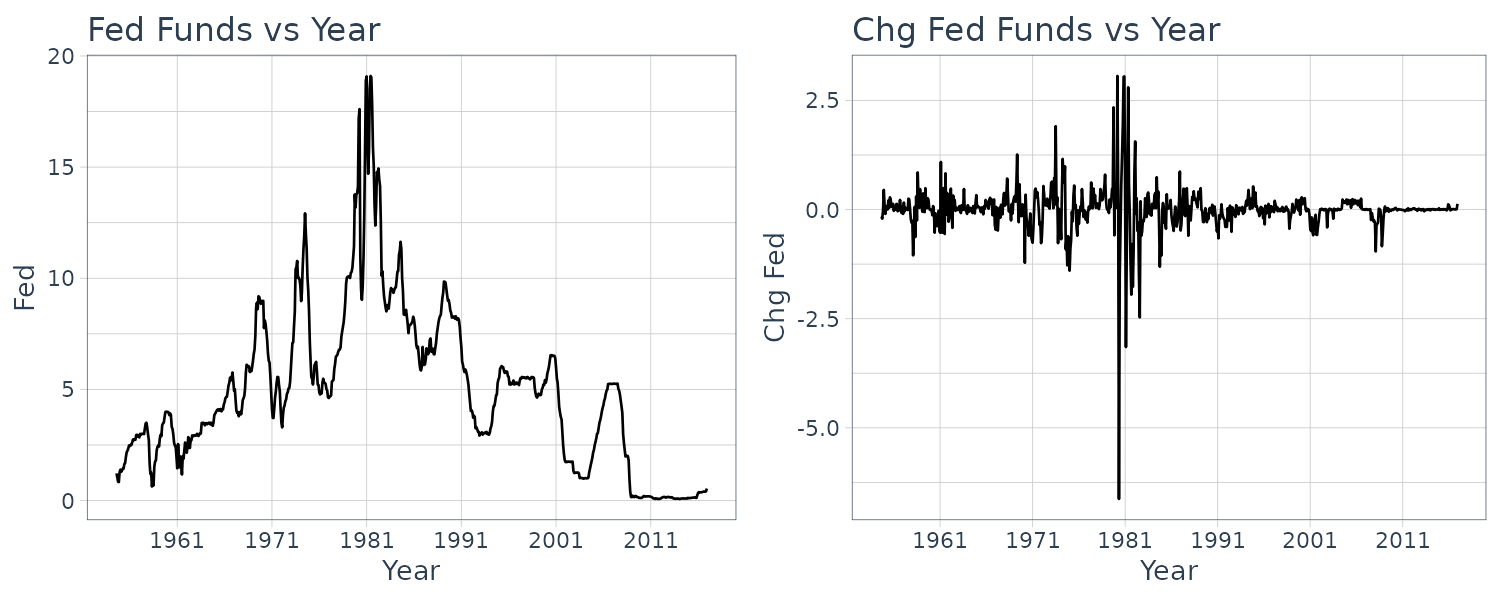

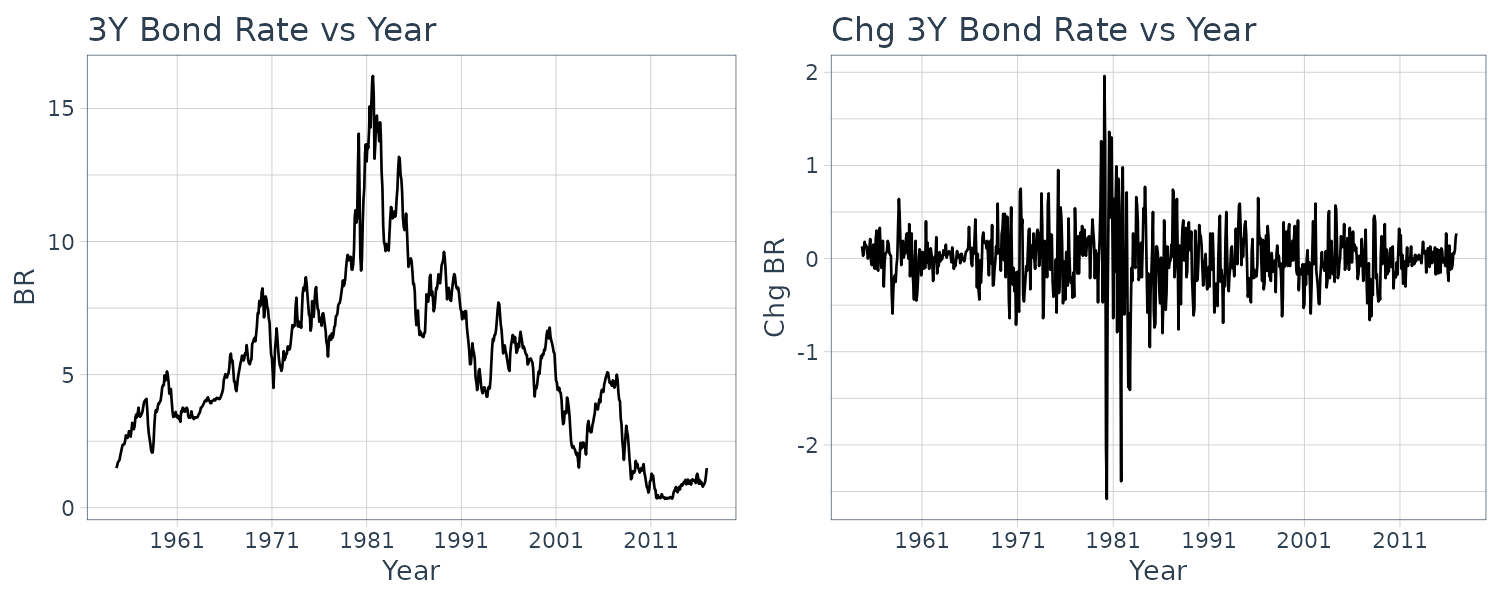

Time-Series Regressions for Policy Analysis

In the previous section, our main focus was to estimate the AR or ARDL condition expectation:

\[\begin{aligned} E[y_{t}\mid I_{t-1}] &= \delta + \theta_{1}y_{t-1} + \cdots + \theta_{p}y_{t-p} + \delta_{1}x_{t-1} + \cdots + \delta_{q}x_{t-1} \end{aligned}\]We were not concerned with the interpretation of individual coefficients, or with omitted variables. Valid forecasts could be obtained from either of the models or one that contains other explanatory variables and their lags. Moreover, because we were using past data to forecast the future, a current value of x was not included in the ARDL model.

Models for policy analysis differ in a number of ways. The individual coeffcients are of interest because they might have a causal interpretation, telling us how much the average outcome of a dependent variable changes when an explanatory variable and its lags change.

For example, central banks who set interest rates are concerned with how a change in the interest rate will affect inflation, unemployment, and GDP growth, now and in the future. Because we are interested in the current effect of a change, as well as future effects, the current value of explanatory variables can appear in distributed lag or ARDL models. In addition, omitted variables can be a problem if they are correlated with the included variables because then the coefficients may not reflect causal effects.

Interpreting a coefficient as the change in a dependent variable caused by a change in an explanatory variable:

\[\begin{aligned} \beta_{k} &= \frac{\partial E[y_{t}\mid \textbf{x}_{t}]}{\partial x_{tk}} \end{aligned}\]Recall that \(\mathbf{X}\) denotes all observations in all time periods and the following strict exogeneity condition:

\[\begin{aligned} E[e_{t}\mid \textbf{X}] &= 0 \end{aligned}\]Implies that there are no lagged dependent variables on the RHS, thus ruling out ARDL models.

And the absence of serial correlation in the errors:

\[\begin{aligned} \text{cov}(e_{t}, e_{s}\mid \mathbf{X}) &= 0 \end{aligned}\] \[\begin{aligned} t &\neq s \end{aligned}\]Means that the errors are uncorrelated with future x values, an assumption that would be violated if x was a policy variable, such as the interest rate, whose setting was influenced by past values of y, such as the inflation rate. The absence of serial correlation implies that variables omitted from the equation, and whose effect is felt through the error term, must not be serially correlated.

In the general framework of an ARDL model, the contemporaneous exogeneity assumption can be written as:

\[\begin{aligned} E[e_{t}\mid I_{t}] &= 0 \end{aligned}\]Feedback from current and past \(y\) to future \(x\) is possible under this assumption.

For the OLS standard errors to be valid for large sample inference, the serially uncorrelated error assumption:

\[\begin{aligned} \text{cov}(e_{t}, e_{s}\mid \mathbf{X}) \end{aligned}\]Can be weakened to:

\[\begin{aligned} \text{cov}(e_{t}, e_{s}\mid I_{t}) \end{aligned}\]Finite Distributed Lag Models (FDL)

Suppose we are interested in the impact of current and past values of \(x\):

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \beta_{1}x_{t - 1} + \cdots + + \beta_{q}x_{t-1} + e_{t} \end{aligned}\]It is called finite distributed lag because \(x\) cuts off after q lags and distributed because the impact of change in \(x\) is distributed over future time periods.

Once \(q\) lags of \(x\) have been included in the equation, further lags of \(x\) will not have an impact on \(y\).

For the coefficients \(\beta_{k}\) to represent causal effects, the errors must not be correlated with the current and all past values of \(x\):

\[\begin{aligned} E[e_{t}|x_{t}, x_{t-1}, \cdots] &= 0 \end{aligned}\]It then follows that:

\[\begin{aligned} E[y_{t}\mid x_{t}, x_{t-1}, \cdots] &= \alpha + \beta_{0}x_{t} + \beta_{1}x_{t - 1} + \cdots + + \beta_{q}x_{t-1}\\ &= E[y_{t}\mid x_{t}, x_{t-1}, \cdots, x_{t-q}]\\ &= E[y_{t}\mid \textbf{x}_{t}] \end{aligned}\]Given this assumption, a lag-coefficient \(\beta_{s}\) can be interpreted as the change in \(E[y_{t}\mid \textbf{x}_{t}]\) when \(x_{t-s}\) changes by 1 unit, given \(x\) held constant in other periods:

\[\begin{aligned} \beta_{s} &= \frac{\partial E[y_{t}|\textbf{x}_{t}]}{\partial x_{t-s}} \end{aligned}\]Also applicable for future forecast:

\[\begin{aligned} \beta_{s} &= \frac{\partial E[y_{t+s}|\textbf{x}_{t}]}{\partial x_{t}} \end{aligned}\]To show how the change propagate across time, suppose that \(x_{t}\) is increased by 1 unit and returned to its original level in subsequent periods. The immediate effect will be an increase in \(y_{t}\) by \(\beta_{0}\) units. On period later, \(y_{t+1}\) will be increased by \(\beta_{1}\) units and q periods later, \(y_{t+q}\) will be increased by \(\beta_{q}\) and finally return to the original level by \(y_{t+q+1} = y_{t}\). This is known as the interim multiplier.

If the change in \(x\) by 1 is sustained throughout the period, the immediate effect would be an increased by \(\beta_{0}\), followed by \(\beta_{0} + \beta_{1}\), until \(\sum_{s = 0}^{q}\beta_{s}\) and held there. This is known as the total multiplier.

For example \(y\) could be the inflation rate, and \(x\) the interest rate. So what the equation is saying is inflation at \(t\) depends on current and past interest rates.

Finite Distributed Lags models can be used in forecasting or policy analysis. For policy analysis, the central bank might want to know who inflation will react given a change in the current interest rate.

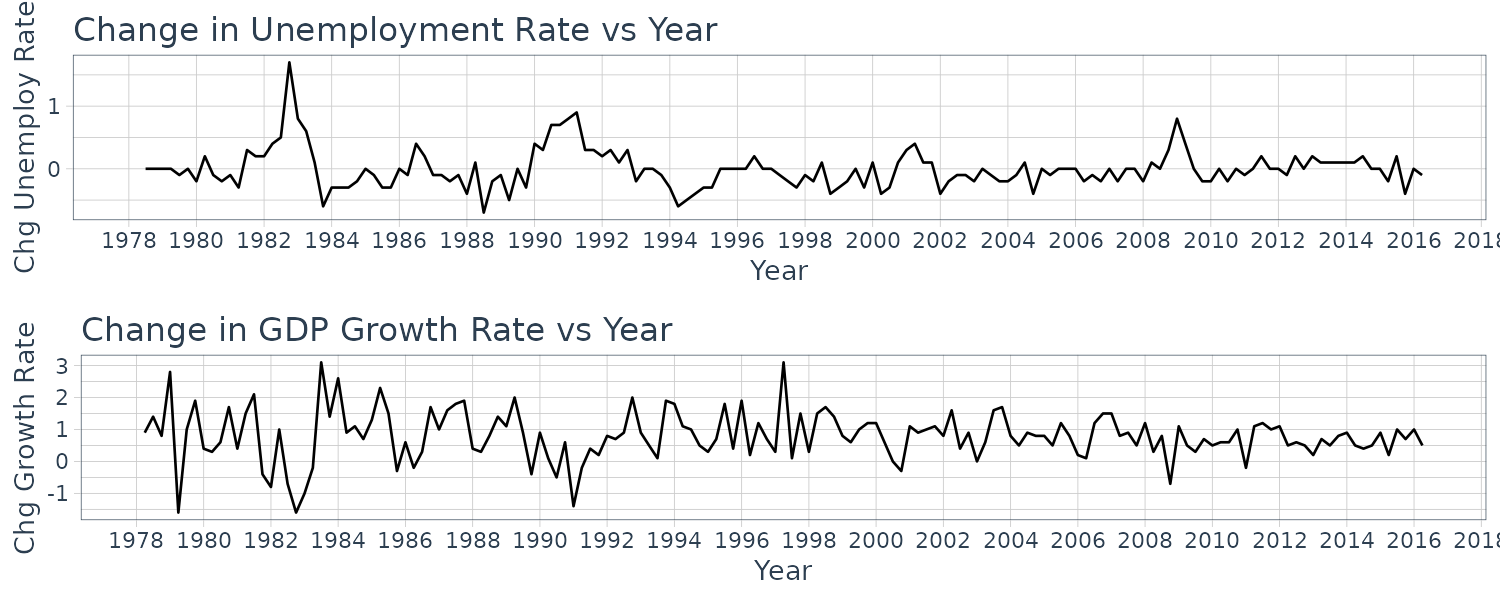

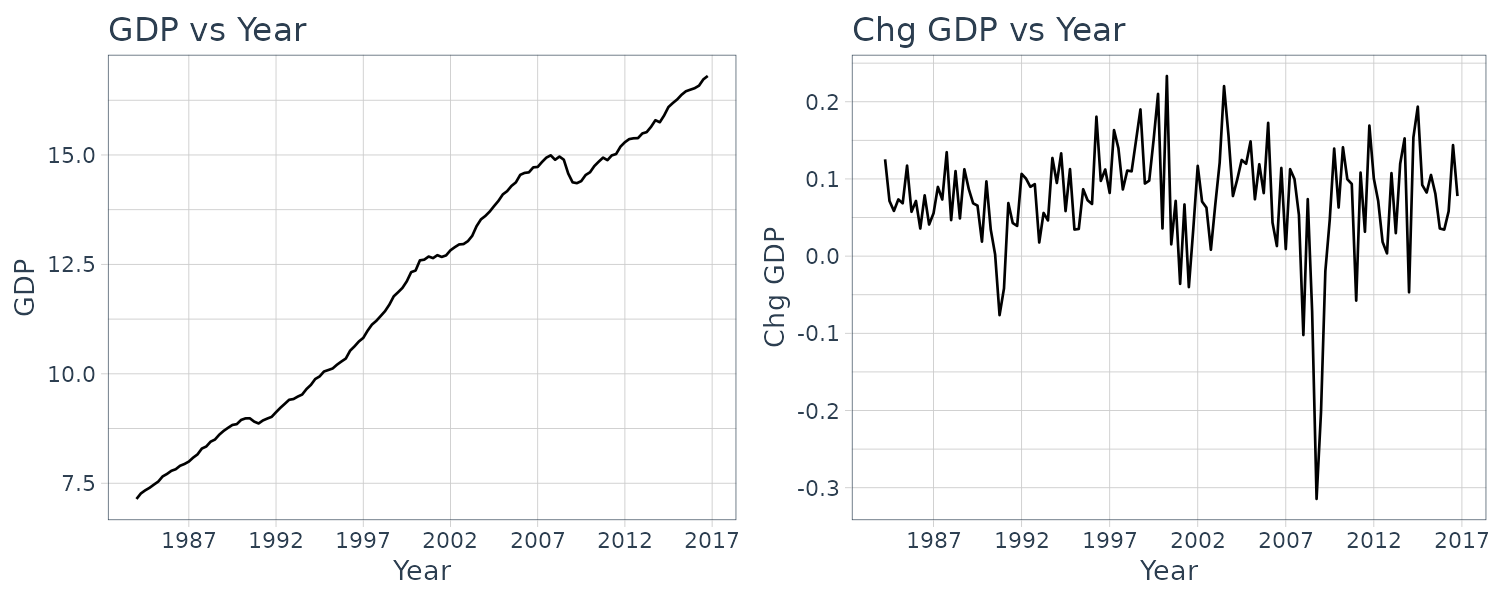

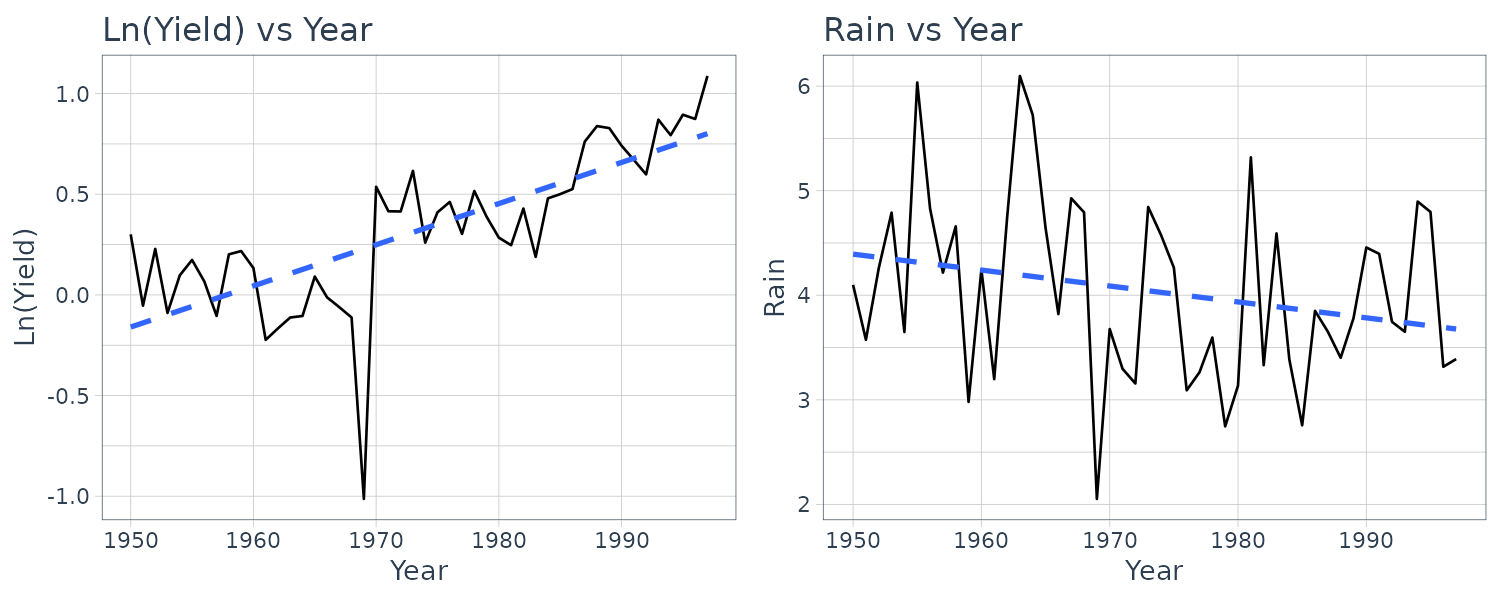

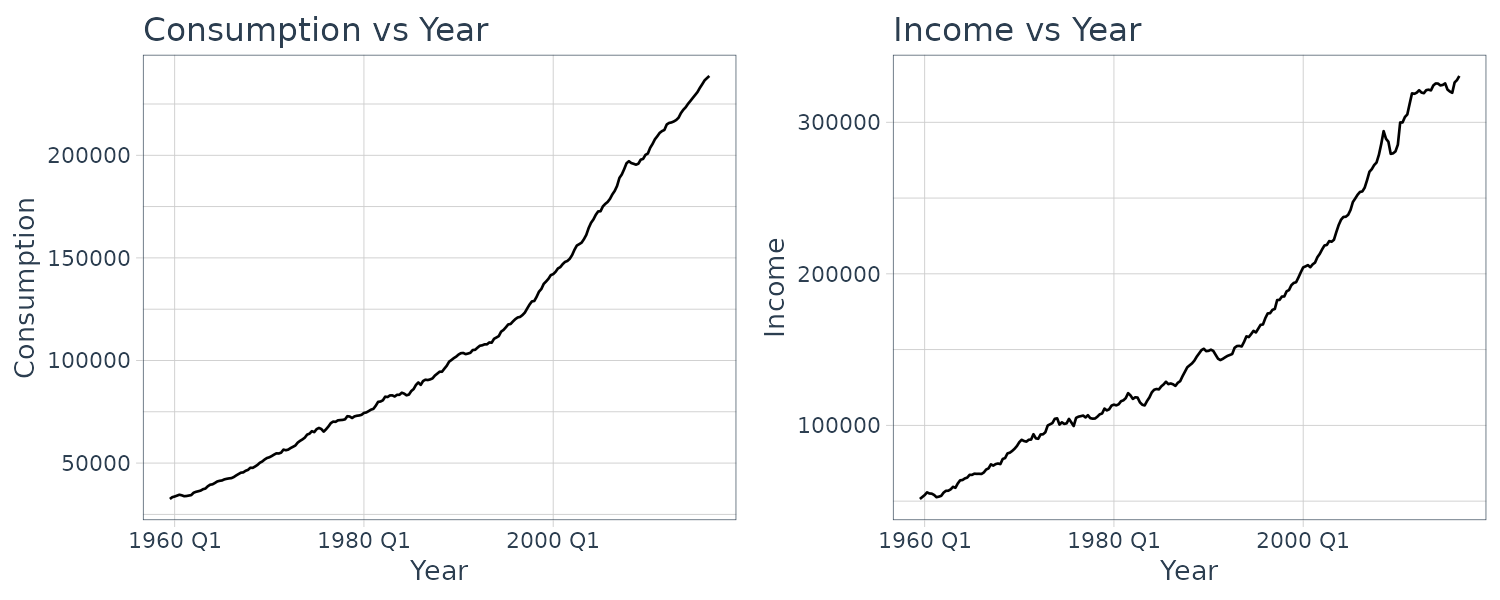

Okun’s Law Example

We are going to illustrate FDL by using an Okun’s Law example. The basic Okun’s Law shows the relationship between unemployment growth and GDP growth:

\[\begin{aligned} U_{t} - U_{t-1} &= -\gamma(G_{t} - G_{N}) \end{aligned}\]Where \(U_{t}\) is the unemployment rate in period \(t\), and \(G_{t}\) is the growth rate of output in period \(t\), and \(G_{N}\) is the neutral growth rate which we would assume to be constant. We would expect \(0 < \gamma < 1\), reflecting the output growth leads to less than one-to-one change in unemployment.

Rewriting the relationship and adding an error term:

\[\begin{aligned} \Delta U_{t} &= \gamma G_{N} -\gamma G_{t} + e_{t}\\ &= \alpha + \beta_{0}G_{t} + e_{t} \end{aligned}\]Expecting that changes in output are likely to have a distributed-lag effect on unemployment (possibly due to employment being sticky):

\[\begin{aligned} \Delta U_{t} &= \alpha + \beta_{0}G_{t} + \beta_{1}G_{t - 1} + \cdots + \beta_{q}G_{t-q} + e_{t} \end{aligned}\]To illustrate this, we would use the okun5_aus data which contain the quarterly Australian data on unemployment and percentage change in GDP.

Our purpose is not to forecast unemployment but to investigate the lagged responses of unemployment to growth in the economy. The normal growth rate \(G_{N}\) is the rate of output growth needed to maintain a constant unemployment rate. It is equal to the sum of labor force growth and labor productivity growth. We expect \(0 < \gamma < 1\), reflecting that output growth leads to less than one-to-one adjustments in unemployment.

Plotting the change in unemployment rate vs GDP:

okun5_aus$date <- as.Date(

okun5_aus$dateid01,

format = "%m/%d/%Y"

) |>

yearquarter()

okun5_aus <- okun5_aus |>

as_tsibble(index = date)

okun5_aus <- okun5_aus |>

mutate(

du = c(NA, diff(u))

)

plot_grid(

okun5_aus |>

autoplot(du) +

ylab("Chg Unemploy Rate") +

xlab("Year") +

ggtitle("Change in Unemployment Rate vs Year") +

scale_x_yearquarter(

date_breaks = "2 years",

date_labels = "%Y"

) +

theme_tq(),

okun5_aus |>

autoplot(g) +

ylab("Chg Growth Rate") +

xlab("Year") +

ggtitle("Change in GDP Growth Rate vs Year") +

scale_x_yearquarter(

date_breaks = "2 years",

date_labels = "%Y"

) +

theme_tq(),

ncol = 1

)

We fit for \(q = 5\):

fit <- lm(

du ~ g +

lag(g, 1) +

lag(g, 2) +

lag(g, 3) +

lag(g, 4) +

lag(g, 5),

data = okun5_aus

)

> summary(fit)

Call:

lm(formula = du ~ g + lag(g, 1) + lag(g, 2) + lag(g, 3) +

lag(g, 4) + lag(g, 5), data = okun5_aus)

Residuals:

Min 1Q Median 3Q Max

-0.68569 -0.13548 -0.00477 0.10739 0.94163

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.39298 0.04493 8.746 0.00000000000000601 ***

g -0.12872 0.02556 -5.037 0.00000142519603254 ***

lag(g, 1) -0.17207 0.02488 -6.915 0.00000000014967724 ***

lag(g, 2) -0.09320 0.02411 -3.865 0.000169 ***

lag(g, 3) -0.07260 0.02411 -3.012 0.003079 **

lag(g, 4) -0.06363 0.02407 -2.644 0.009127 **

lag(g, 5) 0.02317 0.02398 0.966 0.335547

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.2258 on 141 degrees of freedom

(5 observations deleted due to missingness)

Multiple R-squared: 0.5027, Adjusted R-squared: 0.4816

F-statistic: 23.76 on 6 and 141 DF, p-value: < 0.00000000000000022 All coefficients of \(G\) except for \(G_{t-5}\) are significant at the 5% level. Excluding lag 5 we get:

fit <- lm(

du ~ g +

lag(g, 1) +

lag(g, 2) +

lag(g, 3) +

lag(g, 4),

data = okun5_aus

)

> summary(fit)

Call:

lm(formula = du ~ g + lag(g, 1) + lag(g, 2) + lag(g, 3) +

lag(g, 4), data = okun5_aus)

Residuals:

Min 1Q Median 3Q Max

-0.67310 -0.13278 -0.00508 0.10965 0.97392

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.40995 0.04155 9.867 < 0.0000000000000002 ***

g -0.13100 0.02440 -5.369 0.0000003122555 ***

lag(g, 1) -0.17152 0.02395 -7.161 0.0000000000388 ***

lag(g, 2) -0.09400 0.02402 -3.912 0.000141 ***

lag(g, 3) -0.07002 0.02391 -2.929 0.003961 **

lag(g, 4) -0.06109 0.02384 -2.563 0.011419 *

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.2251 on 143 degrees of freedom

(4 observations deleted due to missingness)

Multiple R-squared: 0.4988, Adjusted R-squared: 0.4813

F-statistic: 28.46 on 5 and 143 DF, p-value: < 0.00000000000000022 So what does the estimate for \(q = 4\) tells us? A 1% increase in the growth rate leads to a fall in the expected unemployment rate of 0.13% in the curent quarter, a fall of 0.17% in the next quarter, and 0.09%, 0.07% and 0.06% in the next 2 to 4 quarters.

The interim multipliers, which give the effect of a sustained increase in the growth rate of 1% are -0.30% in the first quarter, -0.40% for 2 quarters, -0.47% for 3 quarters, and -0.53% for 4 quarters.

An estimate of \(\gamma\), the total effect of a change in output growth in a year:

\[\begin{aligned} \hat{\gamma} &= -\sum_{s=0}^{4}b_{s}\\ &= 0.5276\% \end{aligned}\]An estimate of the normal growth rate that is needed to maintain a constant unemployment rate for a quarter:

\[\begin{aligned} \hat{G}_{n} &= \frac{\hat{\alpha}}{\hat{\gamma}}\\ &= \frac{0.4100}{0.5276}\\ &= 0.78\% \end{aligned}\]Two of the FDL assumptions commonly violated are that the error term is not autocorrelated and the error term is homoskedastic:

\[\begin{aligned} \text{cov}(e_{t}, e_{s}\mid \mathbf{x}_{t}, \mathbf{x}_{s}) &= E[e_{t}e_{s}\mid \mathbf{x}_{t}, \mathbf{x}_{s}]\\ &= 0\\[5pt] \text{var}(e_{t}\mid \mathbf{x}_{t}, \mathbf{x}_{s}) &= \sigma^{2} \end{aligned}\]However, it is still possible to use the OLS estimator with the HAC standard errors if the above 2 assumptions are violated as we cover in the next section.

Heteroskedasticity and Autocorrelation Consistent (HAC) Standard Errors

First let us consider the simple regression model where we drop the lagged variables:

\[\begin{aligned} y_{t} &= \alpha + \beta_{2}x_{t} + e_{t} \end{aligned}\]First we note that:

\[\begin{aligned} b_{2} &= \frac{\sum_{t}(x_{t} - \bar{x})(y_{t} - \bar{y})}{\sum_{t}(x_{t}- \bar{x})^{2}}\\[5pt] &= \frac{\sum_{t}y_{t}(x_{t} - \bar{x})- \bar{y}\sum_{t}(x_{t} - \bar{x})}{\sum_{t}(x_{t}- \bar{x})^{2}}\\[5pt] &= \frac{\sum_{t}y_{t}(x_{t} - \bar{x})- 0}{\sum_{t}(x_{t}- \bar{x})^{2}}\\[5pt] &= \frac{\sum_{t}(x_{t} - \bar{x})y_{t}}{\sum_{t}(x_{t}- \bar{x})^{2}}\\[5pt] &= \sum_{t}\frac{x_{t}- \bar{x}}{\sum_{t}(x_{t} - \bar{x})^{2}}y_{t}\\[5pt] &= \sum_{t}w_{t}y_{t} \end{aligned}\]The least squares estimator can also be written as:

\[\begin{aligned} b_{2} &= \sum_{t}w_{t}y_{t}\\[5pt] &= \sum_{t}w_{t}(\beta_{2}x_{t} + e_{t})\\[5pt] &= \beta_{2}\sum_{t}w_{t} + \sum_{t}w_{t}e_{t}\\[5pt] &= \beta_{2} + \sum_{t}w_{t}e_{t}\\[5pt] &= \beta_{2} + \frac{\frac{1}{T}\sum_{t}(x_{t} - \bar{x})e_{t}}{\frac{1}{T}\sum_{t}(x_{t} - \bar{x})^{2}}\\[5pt] &= \beta_{2} + \frac{\frac{1}{T}\sum_{t}(x_{t} - \bar{x})e_{t}}{s_{x}^{2}} \end{aligned}\]When \(e_{t}\) is homoskedastic and uncorrelated, we can take the variance of the above and show that:

\[\begin{aligned} \text{var}(b_{2}|\mathbf{X}) &= \frac{\sigma_{e}^{2}}{\sum_{t=1}^{T}(x_{t} - \bar{x})^{2}}\\[5pt] &= \frac{\sigma_{e}^{2}}{Ts_{x}^{2}} \end{aligned}\]For a result that is not conditional on \(\mathbf{X}\), we obtained the large sample approximate variance from the variance of its asymptotic distribution:

\[\begin{aligned} \mathrm{var}(b_{2}) &= \frac{\sigma_{e}^{2}}{T\sigma_{x}^{2}}\\ s_{x}^{2} &\overset{p}{\rightarrow}\sigma_{x}^{2} \end{aligned}\]Hence given large sample approximate variance we get the following result:

\[\begin{aligned} \text{var}(b_{2}) &= \frac{\sigma_{e}^{2}}{T\sigma_{x}^{2}} \end{aligned}\]But what if both \(b_{2}\) and \(e_{t}\) are heteroskedastic and autocorrelated? Replacing \(s_{x}^{2}\) with \(\sigma_{x}^{2}\) and \(\bar{x}\) with \(\mu_{x}\):

\[\begin{aligned} \text{var}(b_{2}) &= \textrm{var}\Bigg(\frac{\frac{1}{T}\sum_{t=1}^{T}(x_{t} - \mu_{x})e_{t}}{\sigma_{x}^{2}}\Bigg)\\[5pt] &= \frac{1}{T^{2}(\sigma_{x}^{2})^{2}}\textrm{var}\Big(\sum_{t}^{T}q_{t}\Big)\\[5pt] &= \frac{1}{T^{2}(\sigma_{x}^{2})^{2}}\Bigg(\sum_{t=1}^{T}\textrm{var}(q_{t}) + 2\sum_{t=1}^{T-1}\sum_{s=1}^{T-t}\textrm{cov}(q_{t}, q_{t+s})\Bigg)\\[5pt] &= \frac{\sum_{t=1}^{T}\textrm{var}(q_{t})}{T^{2}(\sigma_{x}^{2})^{2}}\Bigg(1 + \frac{2\sum_{t=1}^{T-1}\sum_{s=1}^{T-t}\textrm{cov}(q_{t}, q_{t+s})}{\sum_{t=1}^{T}\text{var}(q_{t})}\Bigg) \end{aligned}\] \[\begin{aligned} q_{t} &= (x_{t}- \mu_{x})e_{t} \end{aligned}\]HAC standard errors are obtained by considering estimators for the quantity outside the big brackets and the quantity inside the big brackets. For the quantity outside the brackets, first note that \(q_{t}\) has a zero mean and:

\[\begin{aligned} \hat{\textrm{var}}_{HCE}(b_{2}) &= \frac{\sum_{t=1}^{T}\textrm{var}(\hat{q}_{t})}{T^{2}(\sigma_{x}^{2})^{2}}\\[5pt] &= \frac{T\sum_{t=1}^{T}(x_{t} - \bar{x})^{2}\hat{e}_{t}^{2}}{(T-K)\Big(\sum_{t=1}^{T}(x_{t} - \bar{x})^{2}\Big)^{2}}\\[5pt] \hat{\textrm{var}}(\hat{q}_{t}) &= \frac{\sum_{t=1}^{T}(x_{t} - \bar{x})^{2}\hat{e}_{t}^{2}}{T-k}\\[5pt] (s_{x}^{2})^{2} &= \Big(\frac{\sum_{t=1}^{T}(x_{t} - \bar{x})^{2}}{T}\Big)^{2} \end{aligned}\]Note that the extra \(T\) in the numerator is what due to the heteroskedasticity.

The quantity outside the brackets is the large sample unconditional variance of \(b_{0}\) when there is heteroskedasticity but no autocorrelation.

To get the variance estimator for least squares that is consistent in the presence of both heteroskedasticity and autocorrelation, we need to multiply the HCE variance estimator by the quantity inside the bracket which we will denote as \(g\):

\[\begin{aligned} g &= 1 + \frac{2\sum_{t=1}^{T-1}\sum_{s=1}^{T-t}\textrm{cov}(q_{t}, q_{t+s})}{\sum_{t=1}^{T}\text{var}(q_{t})}\\[5pt] &= 1 + \frac{2\sum_{t=1}^{T-1}(T-s)\sum_{s=1}^{T-s}\textrm{cov}(q_{t}, q_{t+s})}{T\text{var}(q_{t})}\\[5pt] &= 1 + 2\sum_{s=1}^{T-1}\Big(\frac{T-s}{T}\Big)\tau_{s} \end{aligned}\] \[\begin{aligned} \tau_{s} &= \frac{\textrm{cov}(q_{t}, q_{t+s})}{\textrm{var}(q_{t})}\\[5pt] &= \textrm{corr}(q_{t}, q_{t+s}) \end{aligned}\]As we can see \(\tau_{s}\) is the autocorrelation. So when there is no serial correlation:

\[\begin{aligned} \tau_{s} &= 0\\ g &= 1 + 0\\ &= 1 \end{aligned}\]To obtain a consistent estimator for \(g\), the summation is truncated at a lag much smaller than \(T\). The autocorrelation lags beyond that are taken as zero. For example, if 5 autocorrelations are used, the corresponding estimator is:

\[\begin{aligned} \hat{g}&= 1 + 2 \sum_{s=1}^{5}\Big(\frac{6-s}{6}\Big)\hat{\tau}_{s} \end{aligned}\]Note that different software packages might yield different HAC standard errors as they use different estimation of the number of lags.

Finallly, given a suitable estimator \(\hat{g}\), the large sample estimator for the variance of \(b_{2}\), allowing for both heteroscedasticity and autocorrelation in the errors (HAC) is:

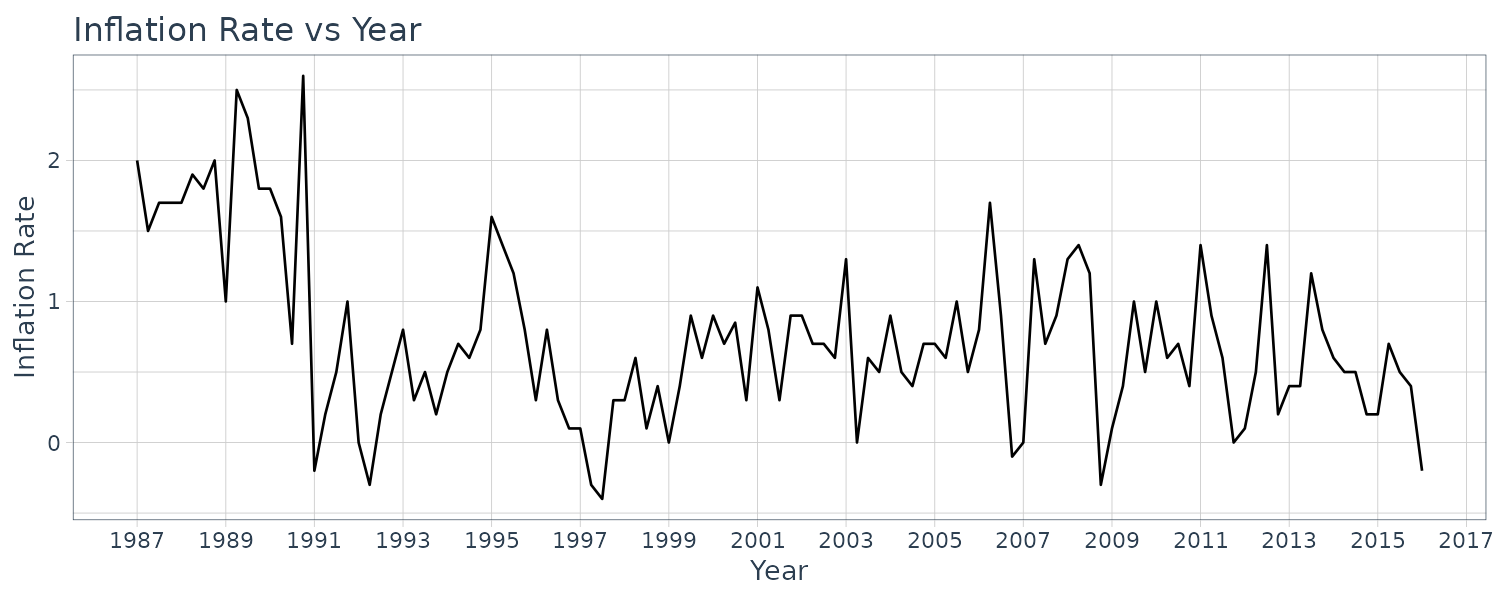

\[\begin{aligned} \hat{\textrm{var}}_{HAC}(b_{2}) &= \hat{\textrm{var}}_{HCE}(b_{2}) \times \hat{g} \end{aligned}\]Example: A Phillips Curve

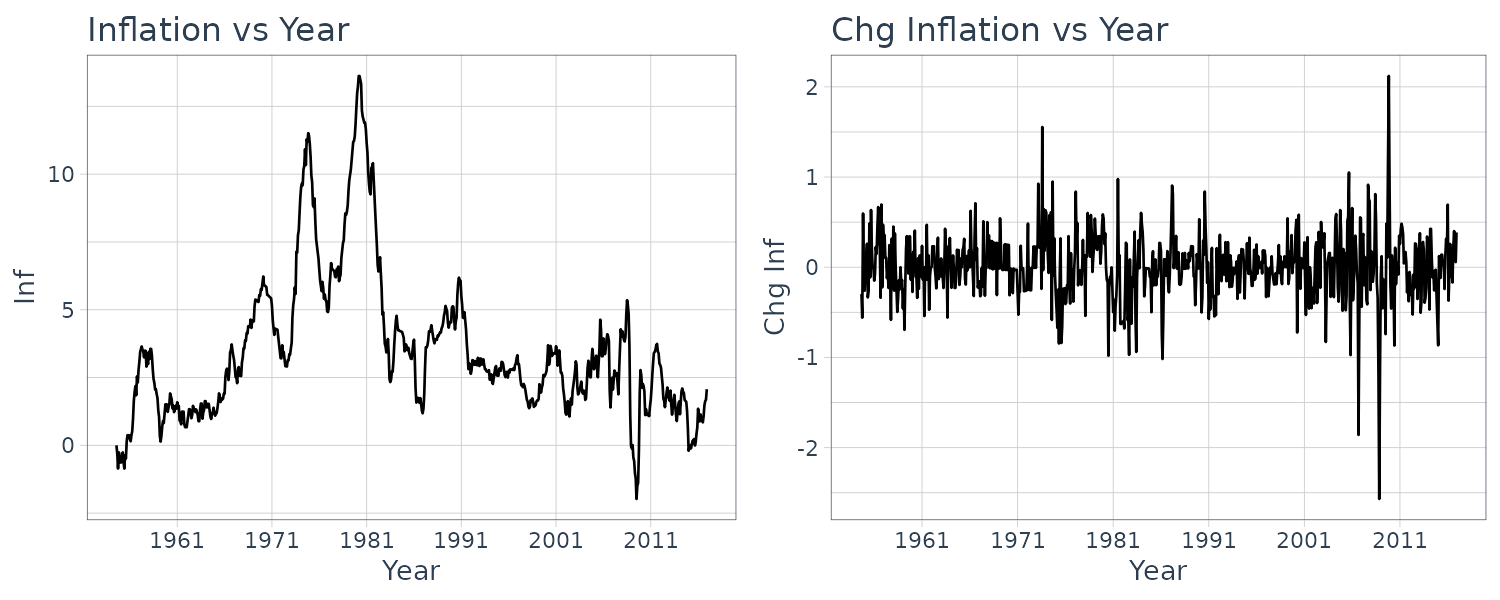

The Phillips curve describes the relationship between inflation and unemployment:

\[\begin{aligned} \text{INF}_{t} &= \text{INF}_{t}^{E}- \gamma(U_{t} - U_{t-1}) \end{aligned}\]\(\text{INF}_{t}^{E}\) is the expection inflation for period \(t\).

The hypothesis is that falling levels of unemployment will cause higher inflation from excess demand for labor that drives up wages and vice versa for rising levels of unemployment.

Written as simple regression model:

\[\begin{aligned} \text{INF}_{t} &= \alpha + \beta_{1}\Delta U_{t} + e_{t} \end{aligned}\]Using the quarterly Australian data phillips5_aus:

phillips5_aus$date <- as.Date(

phillips5_aus$dateid01,

format = "%m/%d/%Y"

) |>

yearquarter()

phillips5_aus <- phillips5_aus |>

as_tsibble(index = date)

phillips5_aus <- phillips5_aus |>

mutate(

du = c(NA, diff(u))

)Graphing the inflation rate:

phillips5_aus |>

autoplot(inf) +

ylab("Inflation Rate") +

xlab("Year") +

ggtitle("Inflation Rate vs Year") +

scale_x_yearquarter(

date_breaks = "2 years",

date_labels = "%Y"

) +

theme_tq()

Fitting the model and graphing the residuals:

fit <- lm(inf ~ du, data = phillips5_aus)

> summary(fit)

Call:

lm(formula = inf ~ du, data = phillips5_aus)

Residuals:

Min 1Q Median 3Q Max

-1.2115 -0.3919 -0.1121 0.2683 2.1473

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.73174 0.05606 13.053 <2e-16 ***

du -0.39867 0.20605 -1.935 0.0555 .

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.6043 on 115 degrees of freedom

Multiple R-squared: 0.03152, Adjusted R-squared: 0.0231

F-statistic: 3.743 on 1 and 115 DF, p-value: 0.05547

phillips5_aus |>

ACF(fit$residuals) |>

autoplot() +

ggtitle("ACF") +

theme_tq()

To calculate the HCE in R:

norms <- (phillips5_aus$du - mean(phillips5_aus$du))^2

T <- nrow(phillips5_aus)

hac <- (T * sum(norms * fit$residuals^2)) /

((T - 2)*(sum(norms)^2))

> sqrt(hac)

[1] 0.2631565Contrasts that with OLS SE of 0.2061.

To adjust for autocorrelation and heteroskedasticity (HAC):

acfs <- phillips5_aus |>

ACF(fit$residuals * norms) |>

pull(acf)

tmp <- 0

T <- 7

for (i in 1:T) {

tmp <- tmp + (T - i)/T * acfs[i]

}

tmp

g <- 1 + 2*tmp

> sqrt(hac * g)

[1] 0.2895455Estimation with AR(1) Errors

Using least squares with HAC standard errors overcomes the negative consequences that autocorrelated errors have for least squares standard errors. However, it does not address the issue of finding an estimator that is better in the sense that it has a lower variance. One way to proceed is to make an assumption about the model that generates the autocorrelated errors and to derive an estimator compatible with this assumption. In this section, we examine how to estimate the parameters of the regression model when one such assumption is made, that of AR(1) errors.

Consider the simple regression model:

\[\begin{aligned} y_{t} &= \alpha + \beta_{2}x_{t} + e_{t} \end{aligned}\]The AR(1) error model:

\[\begin{aligned} e_{t} &= \rho e_{t-1} + v_{t} \end{aligned}\] \[\begin{aligned} |\rho| &< 1 \end{aligned}\]The errors \(v_{t}\) are assumed to be uncorrelated, with zero mean and constant variances:

\[\begin{aligned} E[v_{t}\mid x_{t}, x_{t-1}, \cdots] &= 0\\ \textrm{var}(v_{t}\mid x_{t}) &= \sigma_{v}^{2}\\ \textrm{cov}(v_{t}, v_{s}) &= 0 \end{aligned}\]It can further be shown that this result in the following properties for \(e_{t}\):

\[\begin{aligned} E[e_{t}] &= 0 \end{aligned}\] \[\begin{aligned} \sigma_{e}^{2} &= \frac{\sigma_{v}^{2}}{1 - \rho^{2}} \end{aligned}\] \[\begin{aligned} \rho_{k} &= \rho^{k} \end{aligned}\]Recall that AR(1) error model leads to the following equation:

\[\begin{aligned} y_{t} &= \alpha(1 - \rho) + \rho y_{t-1} + \beta_{0}x_{t} - \rho \beta_{0}x_{t-1} + v_{t} \end{aligned}\]But now we have turned the coefficient of \(x_{t-1}\) to be \(-\rho\beta_{0}\) causing the whole equation to be nonlinear. To obtain estimates, we would need to employ numerical methods.

To introduce an alternative estimator for the AR(1) error model, we rewrite:

\[\begin{aligned} y_{t} - \rho y_{t-1} &= \alpha(1 - \rho) + \beta_{0}x_{t} - \rho \beta_{0}x_{t-1} + v_{t} \end{aligned}\] \[\begin{aligned} y_{t}^{*} &= \alpha^{*} + \beta_{0}x_{t}^{*} + v_{t} \end{aligned}\] \[\begin{aligned} y_{t}^{*} &= y_{t} - \rho y_{t-1}\\ \alpha^{*} &= \alpha(1 - \rho)\\ x^{*} &= x_{t} - \rho x_{t-1} \end{aligned}\]OLS can then be applied and if \(\rho\) is known, then the original intercept can be recovered:

\[\begin{aligned} \hat{\alpha} &= \frac{\alpha^{*}}{1 - \rho} \end{aligned}\]The case that the OLS estimator applied to transformed variables is known as a generalized least squares estimator. Here, we have transformed a model with autocorrelated errors into one with uncorrelated errors. Because \(\rho\) is not known and need to be estimated, the resulting estimator for \(\alpha, \beta_{0}\) is known as a feasible generalized least squares estimators.

One way to estimate \(\rho\) is to use \(r_{1}\) from the sample correlogram.

The steps to obtain the feasible generalized least squares estimator for \(\alpha, \beta_{0}\):

- Find OLS estimates for \(a, b_{0}\) from \(y_{t} = \alpha + \beta_{0}x_{t} + e_{t}\)

- Compute least squares residuals \(\hat{e}_{t} = y_{} - a - b_{0}x_{t}\)

- Estimate \(\rho\) by applying OLS to \(\hat{e}_{t} = \rho\hat{e}_{t-1} + v_{t}\)

- Compute the transformed variables \(y_{t}^{*} = y_{t}- \hat{\rho}y_{t-1}\) and \(x_{t}^{*} = x_{t} - \hat{\rho}x_{t-1}\)

- Apply OLS to the transformed equation \(y_{t}^{*} = \alpha^{*} + \beta_{0}x_{t}^{*} + v_{t}\)

- Calculate new residuals \(\hat{e}_{t} = y_{t} - \hat{\alpha} - \hat{\beta}_{0}x_{t}^{*} + v_{t}\) and repeat steps 3-5.

These steps can also be implemented in an iterative manner. New residuals can be obtained in step 5:

\[\begin{aligned} \hat{e}_{t} &= y_{t}- \hat{\alpha} - \hat{\beta}_{0}x_{t} \end{aligned}\]And steps 3-5 can be repeated until the estimates converge. The resulting converge estimator is known as the Cochrane-Orcutt estimator.

Assumptions

Time-series data typically violate the autocorrelated and homoskedasticity assumptions. The OLS estimator is still consistent, but its usual variance and covariance estimates and standard errors are not correct, leading to invalid t, F, and \(\chi^{2}\) tests. One solution is to use HAC standard error. However, the OLS estimator is no longer minimum variance but the t, F, and \(\chi^{2}\) will be valid.

A second solution to violation of uncorrelated errors is to assume a specific model for the autocorrelated errors and to use an estimator that is minimum variance for that model. The parameters of a simple regression model with AR(1) errors can be estimated by:

- Nonlinear Least Squares

- Feasible Generalized Least Squares

Under two extra conditions, both of these techniques yield a consistent estimator that is minimum variance in large samples, with valid t, F, and \(\chi^{2}\) tests. The first extra condition that is needed to achieve these properties is that the AR(1) error model is suitable for modeling the autocorrelated error. We can, however, guard against a failure of this condition using HAC standard errors following nonlinear least squares or feasible generalized least squares estimation. Doing so will ensure t, F, and \(\chi^{2}\) tests are valid despite the wrong choice for an autocorrelated error model.

The second extra condition is a stronger exogeneity assumption. Consider the nonlinear least squares equation:

\[\begin{aligned} y_{t} &= \alpha(1 - \rho) + \rho y_{t-1} + \beta_{0}x_{t} - \rho \beta_{0}x_{t-1} + v_{t} \end{aligned}\]The exogeneity assumption is:

\[\begin{aligned} E[v_{t}\mid x_{t}, x_{t-1}, \cdots] = 0 \end{aligned}\]Noting that \(v_{t} = e_{t} - \rho e_{t-1}\), this conditions becomes:

\[\begin{aligned} E[e_{t} - \rho e_{t-1}\mid x_{t}, x_{t-1}, \cdots] &= E[e_{t}\mid x_{t}, x_{t-1}, \cdots] - \rho E[e_{t-1}\mid x_{t}, x_{t-1}, \cdots]\\ &= 0 \end{aligned}\]Advancing the second term by one period, we can rewrite this condition as:

\[\begin{aligned} E[e_{t}\mid x_{t+1}, x_{t}, \cdots] - \rho E[e_{t}\mid x_{t+1}, x_{t}, \cdots] &= 0\\ \end{aligned}\]For this equation to be true for all possible values of \(\rho\): we require that:

\[\begin{aligned} E[e_{t}\mid x_{t+1}, x_{t}, \cdots] &= 0 \end{aligned}\]By the law of iterated expectations, the above implies that:

\[\begin{aligned} E[e_{t}\mid x_{t}, x_{t-1}, \cdots] &= 0 \end{aligned}\]Thus, the exogeneity requirement necessary for nonlinear least squares to be consistent, and it is the same for feasible generalized least squares, is:

\[\begin{aligned} E[e_{t}\mid x_{t+1}, x_{t}, \cdots] &= 0 \end{aligned}\]This requirement implies that \(e_{t}, x_{t+1}\) cannot be correlated. It rules out instances where \(x_{t+1}\) is set by a policy maker, such as central bank setting an interest rate in response to an error shock in the previous period. Thus, while modeling the autocorrelated error may appear to be a good strategy in terms of improving the efficiency of estimation, it could be at the expense of consistency if the stronger exogeneity assumption is not met. Using least squares with HAC standard errors does not require this stronger assumption.

Modeling autocorrelated errors with more than 1 lag requires \(e_{t}\) to be uncorrelated with \(x\) values further than 1 period into the future. A stronger exogeneity assumption:

\[\begin{aligned} E[e_{t}\mid \textbf{X}] &= 0 \end{aligned}\]Where \(\textbf{X}\) includes all current, past and future values of the explanatory variables.

Example: The Phillips Curve with AR(1) Errors

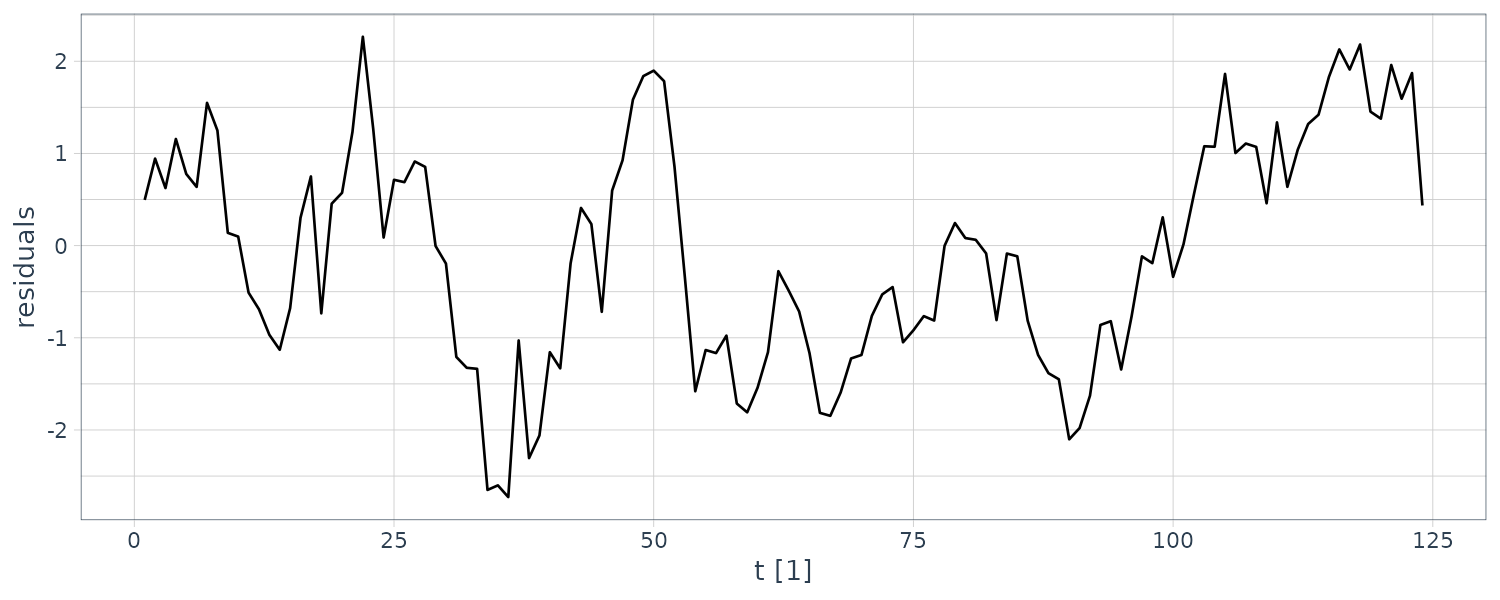

Returning to the earlier Philips curve example, looking at the correlogram of the residuals of the OLS fit:

We can see that the errors are correlated but AR(1) might not be adequate as the autocorrelations are not declining exponentially. Nontheless, let us fit an AR(1) error model.

We will first fit a nonlinear least squares (NLS):

\[\begin{aligned} y_{t} &= \alpha(1 - \rho) + \rho y_{t-1} + \beta_{0}x_{t} - \rho\beta_{0}x_{t-1} + v_{t} \end{aligned}\]Solving this in R using \(r_{1} = 0.489\) as a starting value:

phillips5_aus <- phillips5_aus |>

mutate(

inf1 = lag(inf, 1),

du1 = lag(du, 1)

)

fit <- nls(

inf ~ (1 - rho) * alpha +

rho * inf1 +

beta * du -

rho * beta * du1,

data = tmp,

start = c(

alpha = 0,

beta = 0,

rho = 0.489

)

)

> summary(fit)

Formula: inf ~ (1 - rho) * alpha + rho * inf1 +

beta * du - rho * beta * du1

Parameters:

Estimate Std. Error t value Pr(>|t|)

alpha 0.70084 0.09628 7.280 4.94e-11 ***

beta -0.38215 0.21141 -1.808 0.0733 .

rho 0.49604 0.08284 5.988 2.62e-08 ***

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 0.5191 on 112 degrees of freedom

Number of iterations to convergence: 5

Achieved convergence tolerance: 1.492e-06

(2 observations deleted due to missingness)And using fable in lieu of feasible generalized least squares (FGLS):

fit <- phillips5_aus |>

model(

ARIMA(

inf ~ du +

pdq(1, 0, 0) +

PDQ(0, 0, 0)

)

)

> tidy(fit) |>

select(-.model)

# A tibble: 3 × 5

term estimate std.error statistic p.value

<chr> <dbl> <dbl> <dbl> <dbl>

1 ar1 0.498 0.0815 6.11 1.35e- 8

2 du -0.389 0.208 -1.87 6.39e- 2

3 intercept 0.720 0.0944 7.63 7.22e-12 The NLS and FGLS standard errors for estimates of \(\beta_{0}\) are smaller than the corresponding OLS HAC standard error, perhaps representing an efficiency gain from modeling the autocorrelation. However, one must be cautious with interpretations like this because standard errors are estimates of standard deviations, not the unknown standard deviations themselves.

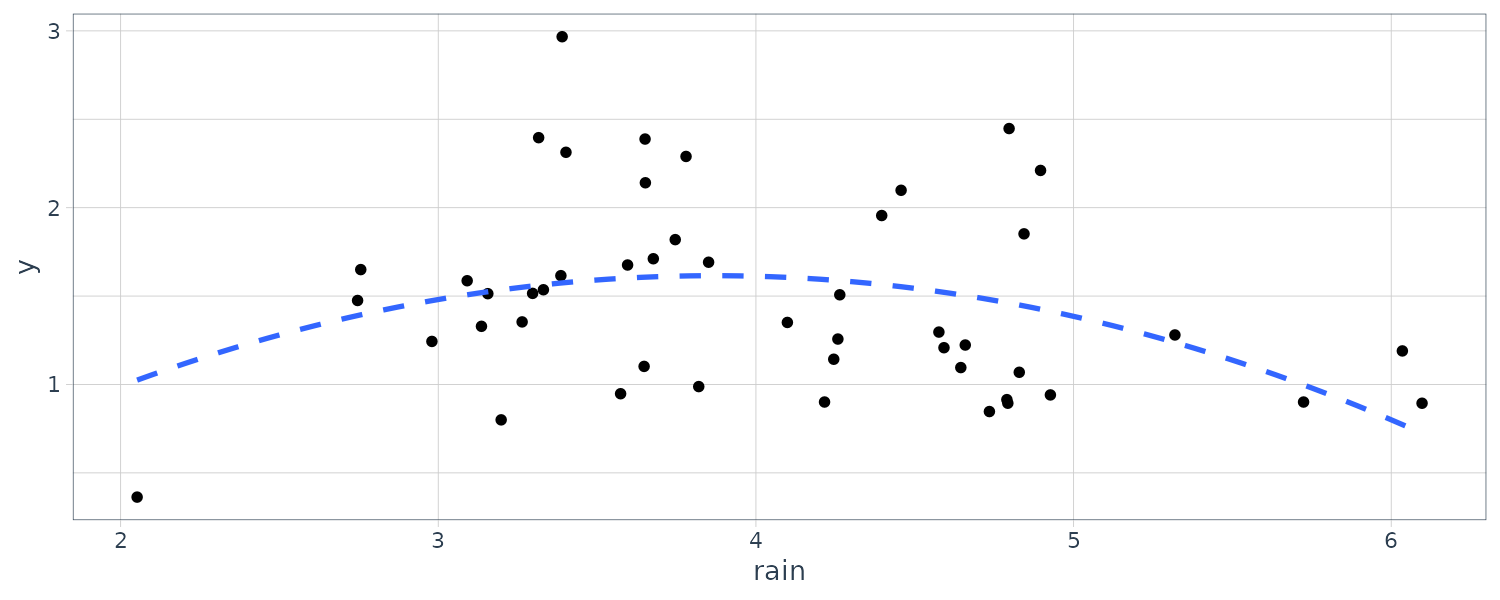

Infinite Distributed Lag Models (IDL)

An IDL Model is the same as FDL Model except that it goes to infinity.

\[\begin{aligned} y_{t} &= \alpha + \beta_{0}x_{t} + \cdots \beta_{q}x_{t-q} + \cdots + e_{t} \end{aligned}\]In order to make the model realistic, \(\beta_{s}\) has to eventually decline to 0. The interpretation of the coefficients (same as FDL):

\[\begin{aligned} \beta_{s} &= \frac{\partial E[y_{t}\mid x_{t}, x_{t-1}, \cdots]}{\partial x_{t-s}}\\ \sum_{j=0}^{s}\beta_{j}&= s\text{ period interim multiplier}\\ \sum_{j=0}^{\infty}\beta_{j}&= \text{ total multiplier} \end{aligned}\]A geometrically declining lag pattern is when:

\[\begin{aligned} \beta_{s} &= \lambda^{s}\beta_{0} \end{aligned}\]Recall that it will lead to a ARDL(1, 0) model when \(0 < \lambda < 1\):

\[\begin{aligned} y_{t} &= \alpha(1 - \lambda) + \lambda y_{t-1} + \beta_{0}x_{t} + e_{t} - \lambda e_{t-1} \end{aligned}\]The interim multipliers are given by:

\[\begin{aligned} \sum_{j=0}^{s}\beta_{j} &= \beta_{0} + \beta_{1} + \cdots\\ &= \beta_{0} + \beta_{0}\lambda + \beta_{0}\lambda^{2} + \cdots + \beta_{0}\lambda^{s}\\[5pt] &= \frac{\beta_{0}(1 - \lambda^{s+1})}{1 - \lambda} \end{aligned}\]And the total multiplier is given by:

\[\begin{aligned} \sum_{j=0}^{\infty}\beta_{j} &= \beta_{0} + \beta_{0}\lambda + \cdots\\ &= \frac{\beta_{0}}{1 - \lambda} \end{aligned}\]However, estimating the above ARDL model poses some difficulties. If we assume the original errors \(e_{t}\) are not autocorrelated, then:

\[\begin{aligned} v_{t} &= e_{t} - \lambda e_{t-1} \end{aligned}\]This means that \(v_{t}\) will be correlated with \(y_{t-1}\) and:

\[\begin{aligned} E[v_{t}\mid y_{t-1}, x_{t}] \neq 0 \end{aligned}\]This is because \(y_{t-1}\) also depends on \(e_{t-1}\). If we lag by 1 period:

\[\begin{aligned} y_{t-1} &= \delta + \beta_{0}x_{t-1} + \beta_{1}x_{t-2} + \cdots + e_{t-1} \end{aligned}\]We can also show that:

\[\begin{aligned} E[v_{t}y_{t-1}\mid x_{t-1}, x_{t-2}, \cdots] &= E[(e_{t} - \lambda e_{t-1})(\alpha + \beta_{0}x_{t-1} + \beta_{1}x_{t-2} + \cdots + e_{t-1})\mid x_{t-1}, x_{t-2}, \cdots]\\ &= E[(e_{t} - \lambda e_{t-1})e_{t-1}\mid x_{t-1}, x_{t-2}, \cdots]\\ &= E[e_{t}e_{t-1}\mid x_{t-1}, x_{t-2}, \cdots] - \lambda E[e_{t-1}^{2}\mid x_{t-1}, x_{t-2}, \cdots]\\ &= -\lambda\textrm{var}(e_{t-1}\mid x_{t-1}, x_{t-2}, \cdots) \end{aligned}\]One possible consistent estimator is using \(x_{t-1}\) as a suitable instrument variable estimator for \(y_{t-1}\). A special case would be that \(e_{t}\) follows a MA(1) process:

\[\begin{aligned} e_{t} &= \lambda e_{t-1} + u_{t}\\ v_{t}&= e_{t} - \lambda e_{t-1}\\ &= \lambda e_{t-1} + u_{t} - \lambda e_{t-1}\\ &= u_{t} \end{aligned}\]Testing for Consistency in the ARDL Representation of an IDL Model

The tests first assumes that the errors \(e_{t}\) in the IDL model follow an AR(1) process:

\[\begin{aligned} e_{t} &= \rho e_{t-1} + u_{t} \end{aligned}\]We then thest the hypothesis that:

\[\begin{aligned} H_{0}:\ &\rho = \lambda\\ H_{1}:\ &\rho \neq \lambda \end{aligned}\]Under the assumption that \(\rho\) and \(\lambda\) are different:

\[\begin{aligned} v_{t} &= e_{t} - \lambda e_{t-1} \end{aligned}\]Then:

\[\begin{aligned} y_{t} &= \lambda + \theta y_{t-1} + \beta_{0}x_{t} + v_{t}\\ &= \lambda + \lambda y_{t-1} + \beta_{0}x_{t} + e_{t} - \lambda e_{t-1}\\ &= \lambda + \lambda y_{t-1} + \beta_{0}x_{t} + \rho e_{t-1} - \lambda e_{t-1} + u_{t}\\ &= \lambda + \lambda y_{t-1} + \beta_{0}x_{t} + (\rho - \lambda) e_{t-1} + u_{t} \end{aligned}\]The test is based on whether or not an estimate of the error \(e_{t-1}\) adds explanatory power to the regression.

First compute the least squares residuals under the assumption of \(H_{0}\):

\[\begin{aligned} \hat{u}_{t} &= y_{t} - (\hat{\delta} + \hat{\lambda}y_{t-1} + \hat{\beta}_{0}x_{t})\\ t &= 2, 3, \cdots, T \end{aligned}\]Using the estimate \(\hat{\lambda}\), and starting with \(\hat{e}_{1} = 0\), compute recursively:

\[\begin{aligned} \hat{e}_{t} &= \hat{\lambda}\hat{e}_{t-1} + \hat{u}_{t}\\ t &= 2, 3, \cdots, T \end{aligned}\]Find the \(R^{2}\) from a least squares regression of \(\hat{u}\).

When \(H_{0}\) is true, and assuming that \(u_{t}\) is homoskedastic, then \((T-1) \times R^{2}\) has a \(\chi_{(1)}^{2}\) distribution in large samples.

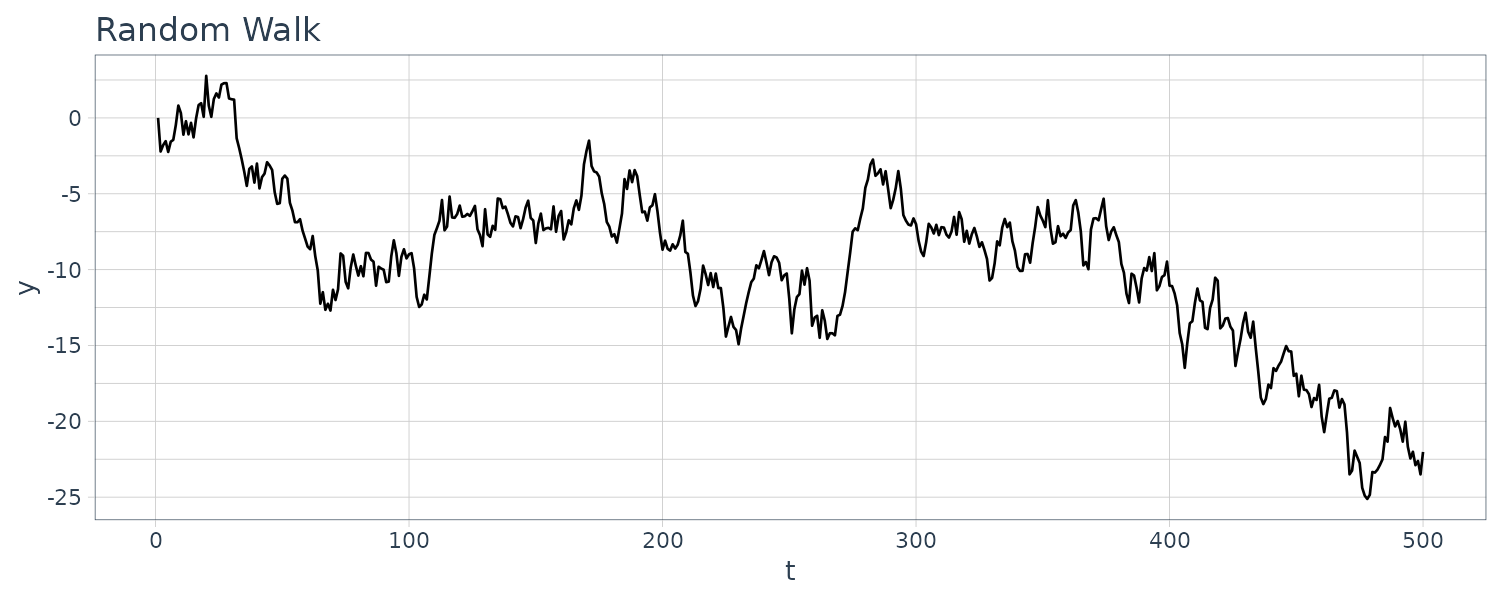

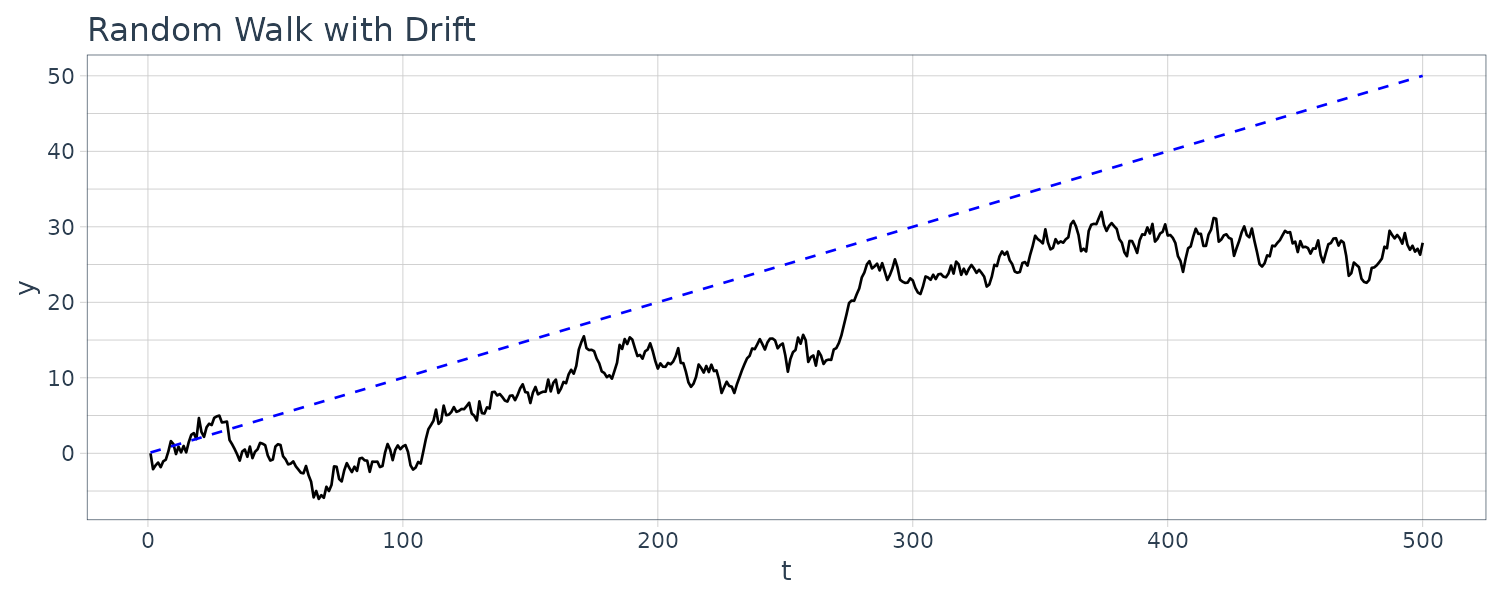

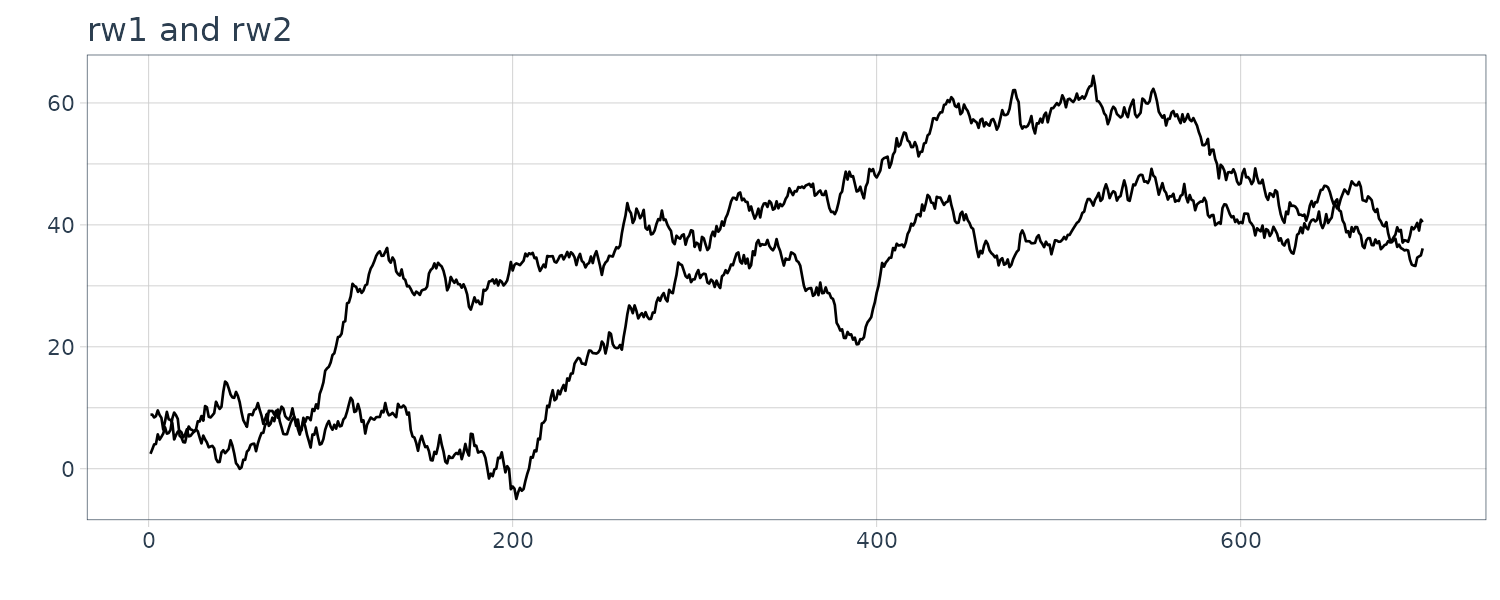

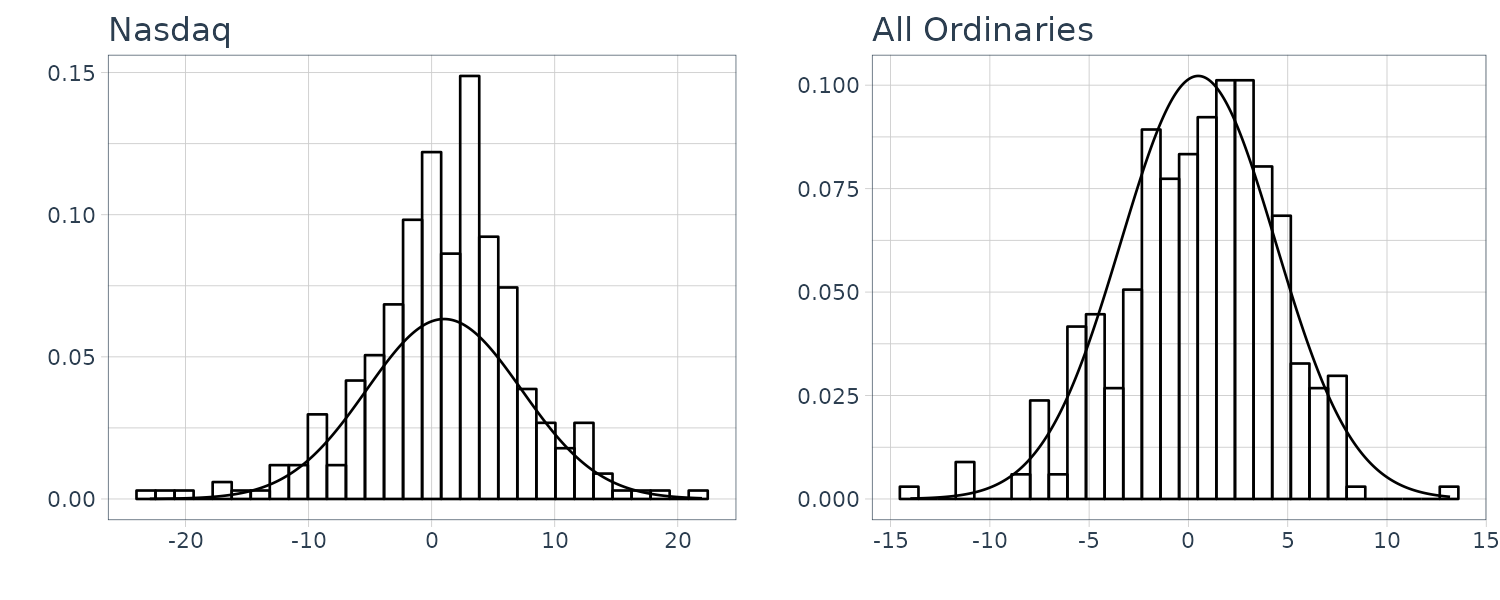

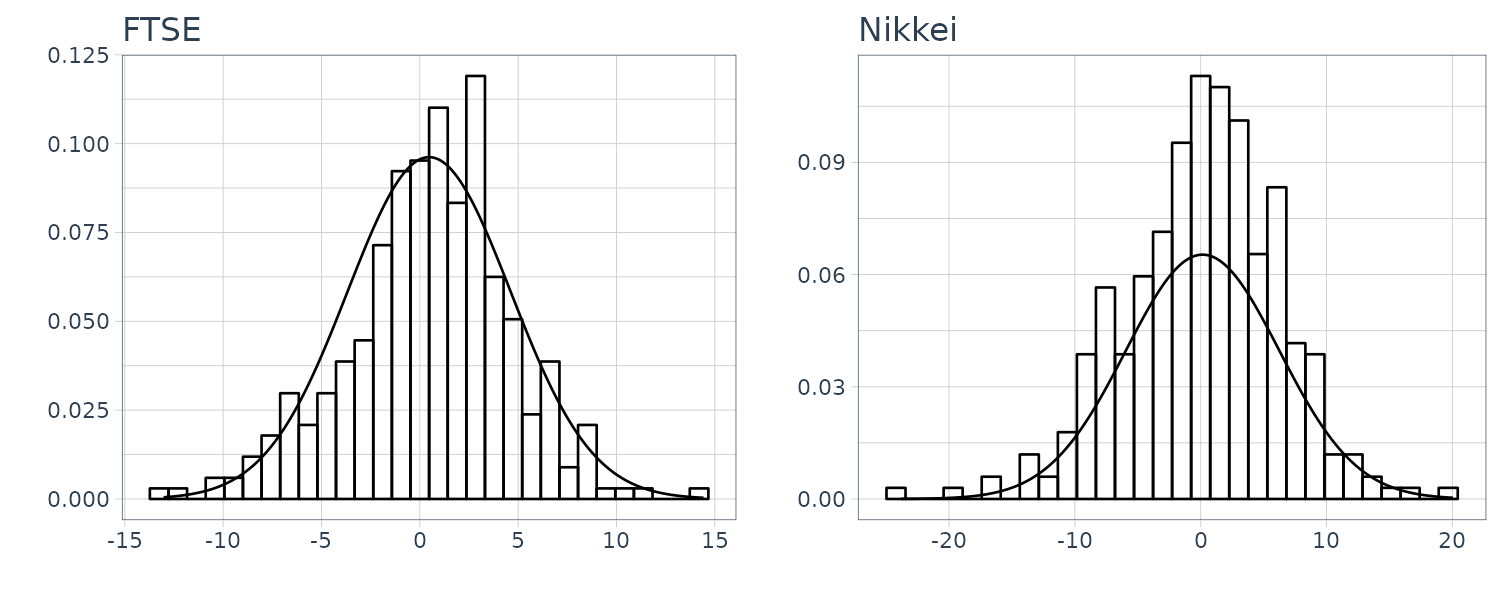

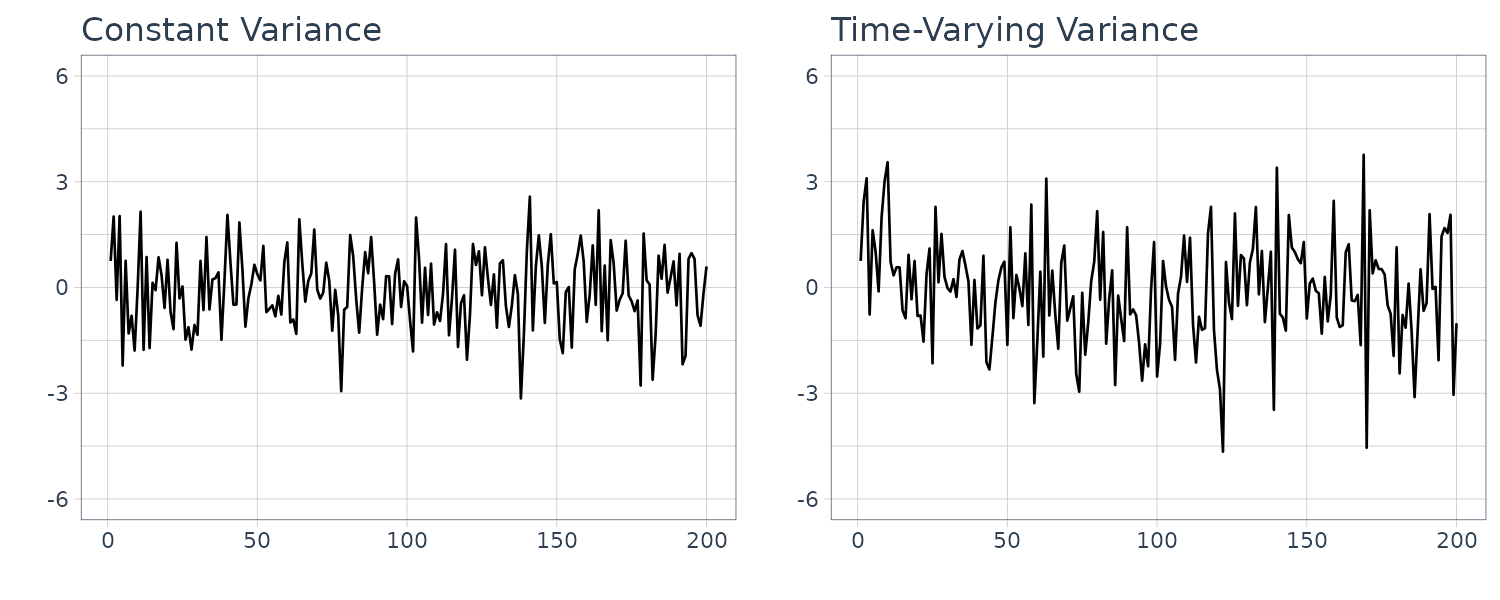

It can also be performed for ARDL(p, q) models where \(p > 1\). In such instances, the null hypothesis is that the coefficients in an AR(p) error model for \(e_{t}\) are equal to the ARDL coefficients on the lagged y’s, extra lags are included in the test procedure, and the chi-square statistic has p degrees of freedom; it is equal to the number of observations used to estimate the test equation multiplied by that equation’s \(R^{2}\).