Time Series Analysis for the State-Space Model with R/Stan

Read PostIn this post, we will cover Hagiwara J. (2021) Time Series Analysis for the State-Space Model with R/Stan.

Contents:

Fundamentals of Probability and Statistics

Mean and Variance

The mean value (or expected value) and variance for the random variable X is defined as:

\[\begin{aligned} E[X] &= \int x p(x)\ dx \end{aligned}\] \[\begin{aligned} \text{var}(X) &= E[(X - E[X])^{2}]\\ &= \int (x - E[X])^{2}p(x)\ dx \end{aligned}\]Let a and b be constants and X and Y be random variables. The following transformation relations hold for the mean and variance:

\[\begin{aligned} E[aX + b] &= aE[X] + b\\ E[X + Y]&= E[X] + E[Y] \end{aligned}\] \[\begin{aligned} E[X + Y]&= E[X] + E[Y] \end{aligned}\] \[\begin{aligned} \text{var}(X + Y) &= \text{var}(X) + \text{var}(Y) + 2E\Big[(X - E[X])(Y - E[Y])\Big] \end{aligned}\]Relation Among Multiple Random Variables

When there are multiple random variables, the probability that they will coincide is referred to as the joint probability. The probability distribution for the joint probability is referred to as the joint distribution. Let X and Y be the random variables; their joint distribution is denoted as:

\[\begin{aligned} p(x, y) \end{aligned}\]When there are multiple random variables, the probability of only a specific random variable is referred to as the marginal probability. The probability distribution of the marginal probability is referred to as the marginal distribution:

\[\begin{aligned} p(x) &= \int p(x, y)\ dy\\ p(y) &= \int p(x, y)\ dx \end{aligned}\]Regarding the conditional distribution, the relation below holds as a result of the product rule of probability:

\[\begin{aligned} p(x | y)p(y) &= p(x, y)\\ & = p(y |x)p(x) \end{aligned}\]And from the product rule of probability, Bayes’ Theorem:

\[\begin{aligned} p(x |y) &= \frac{p(y | x)p(x)}{p(y)}\\[10pt] p(y |x) &= \frac{p(x | y)p(y)}{p(x)} \end{aligned}\]Finally, when the joint distribution can be decomposed into a simple product of each marginal distribution, the random variables are said to be independent:

\[\begin{aligned} p(x, y) &= p(x)p(y) \end{aligned}\]Stochastic Process

A stochastic process is a series of random variables, which exactly corresponds to stochastically interpreted time series data. For a sequence, to express a time point t, a subscript is assigned to the random variable and its realizations such as \(Y_{t}\) and \(y_{t}\), respectively.

Covariance and Correlation

The covariance is defined as:

\[\begin{aligned} \text{cov}(X, Y) &= E\Big[(X - E[X])(Y - E[Y])\Big] \end{aligned}\]The autocovariance of stochastic process \(X_{t}\) is defined as:

\[\begin{aligned} \text{cov}(X_{t}, X_{t-k}) &= E\Big[(X_{t} - E[X])(X_{t-k} - E[X_{t-k}])\Big] \end{aligned}\]Stationary and Nonstationary Processes

Stationarity means that a stochastic feature does not change even if time changes. In more detail, when the probability distribution for a stochastic process is invariant independently of time point t, its stochastic process is referred to as strictly stationary.

On the contrary, when mean, variance, autocovariance, and the autocorrelation coefficient for a stochastic process are invariant independently of time point t, the stochastic process is referred to as weakly stationary.

Maximum Likelihood Estimation and Bayesian Estimation

We have implicitly assumed so far that the parameter \(\boldsymbol{\theta}\) in a probability distribution is known. The parameter is sometimes known but is generally unknown and must be specified by some means for analysis.

The likelihood of the entire stochastic process \(Y_{t}\) is expressed by:

\[\begin{aligned} p(y_{1}, y_{2}, \cdots, y_{T}; \boldsymbol{\theta}) \end{aligned}\]The likelihood is a single numerical indicator of how an assumed probability distribution fits the realizations. The natural logarithm of the likelihood is referred to as the log-likelihood as:

\[\begin{aligned} l(\boldsymbol{\theta}) &= \log p(y_{1}, \cdots, y_{T};\boldsymbol{\theta}) \end{aligned}\]The maximum likelihood method specifies the parameter \(\boldsymbol{\theta}\) where the likelihood is maximized. Function log() is a monotonically increasing function with the result that the maximization of likelihood has the same meaning as that of log-likelihood.

Let \(\hat{\boldsymbol{\theta}}\) be the estimator for parameters. The maximum likelihood estimation is expressed as:

\[\begin{aligned} \hat{\boldsymbol{\theta}} &= \text{argmax}_{\boldsymbol{\theta}}\ l(\boldsymbol{\theta}) \end{aligned}\]In the above description, the parameter is not considered to be a random variable. The frequentists generally take such a viewpoint, and parameter specification through maximum likelihood estimation is one approach in frequentism.

On the other hand, there is a means of considering parameter as a random variable. Bayesians generally take this viewpoint, and a parameter is estimated through Bayesian estimation based on Bayes’ theorem under Bayesianism.

Frequency Spectrum

The frequency spectrum can be derived by converting data in the time domain to the frequency domain; it is useful for confirming periodicity. The representations in the time and frequency domains have a paired relation.

In general, any periodic time series data \(y_{t}\) can be considered as the sum of trigonometric functions with various periods. This is known as Fourier series expansion

Fourier series can be expressed using sine and cosine functions, and more briefly using complex values. Specifically, the Fourier series expansion of observations \(y_{t}\) with fundamental period \(T_{0}\) can be expressed using complex values as:

\[\begin{aligned} y_{t} &= \sum_{n=-\infty}^{\infty}c_{n}e^{in\omega_{0}t} \end{aligned}\] \[\begin{aligned} \omega_{0} &= 2\pi f_{0}\\ f_{t} &= \frac{1}{T_{0}} \end{aligned}\]Where \(\omega_{0}\) (rad/s) is the fundamental angular frequency, and \(f_{0}\) (Hz) is the fundamental frequency.

The absolute value of the complex Fourier coefficient \(c_{n}\) indicates the extent to which the data contain individual periodical components.

The complex Fourier coefficient \(c_{n}\) can be derived explicitly as:

\[\begin{aligned} c_{n} &= \frac{1}{T_{0}}\int_{-\frac{T_{0}}{2}}^{\frac{T_{0}}{2}}y_{t}e^{-in\omega_{0}t}\ dt \end{aligned}\]Expanding the fundamental period \(T_{0}\) to infinity, we can apply the equation to arbitrary data. Such an extension is called a Fourier transform; the Fourier transform of \(y_{t}\) is generally expressed as \(\mathcal{F}[y_{t}]\), which is also known as the frequency spectrum. The absolute value of the frequency spectrum indicates to what extent an individual periodical component is contained in the data, similar to the complex Fourier coefficient.

Normalizing the energy \(\mid \mathcal{F}[y_{t}]\mid^{2}\) of the frequency spectrum by dividing by time and taking its limit, we can get power spectrum. Time series analysis in the frequency domain is often explained from the viewpoint of the power spectrum, which has a connection with the theoretical description.

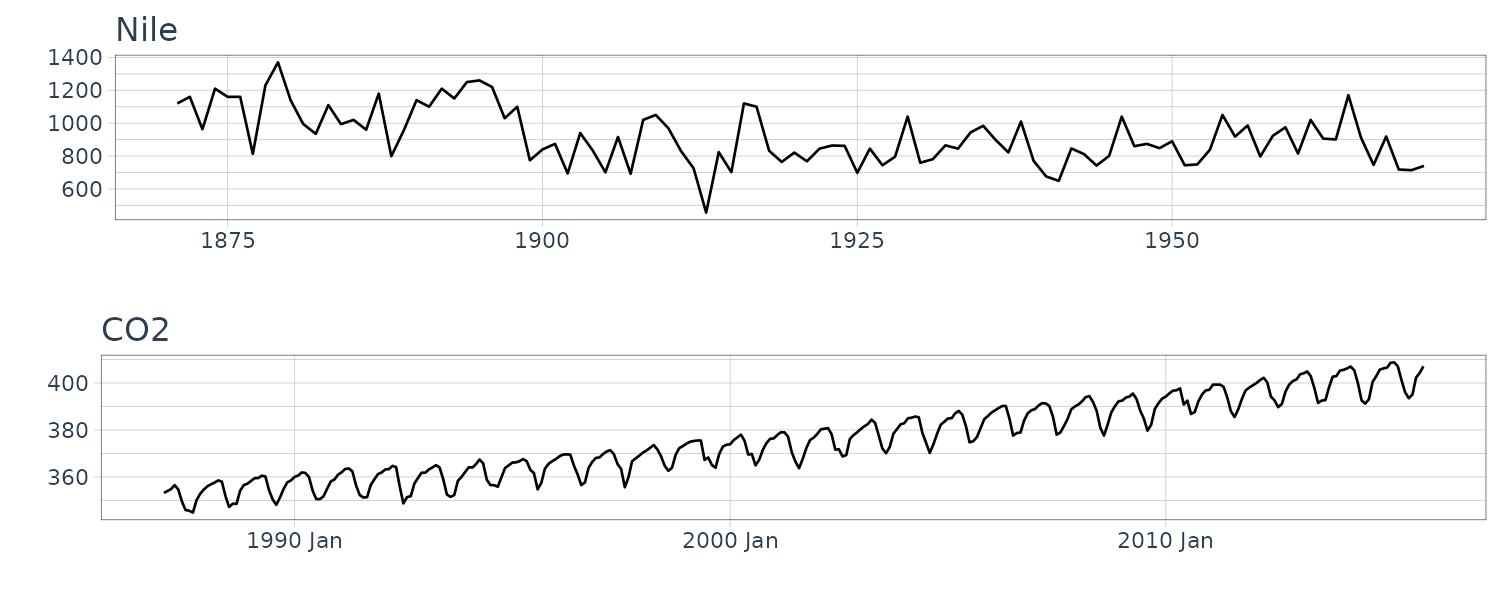

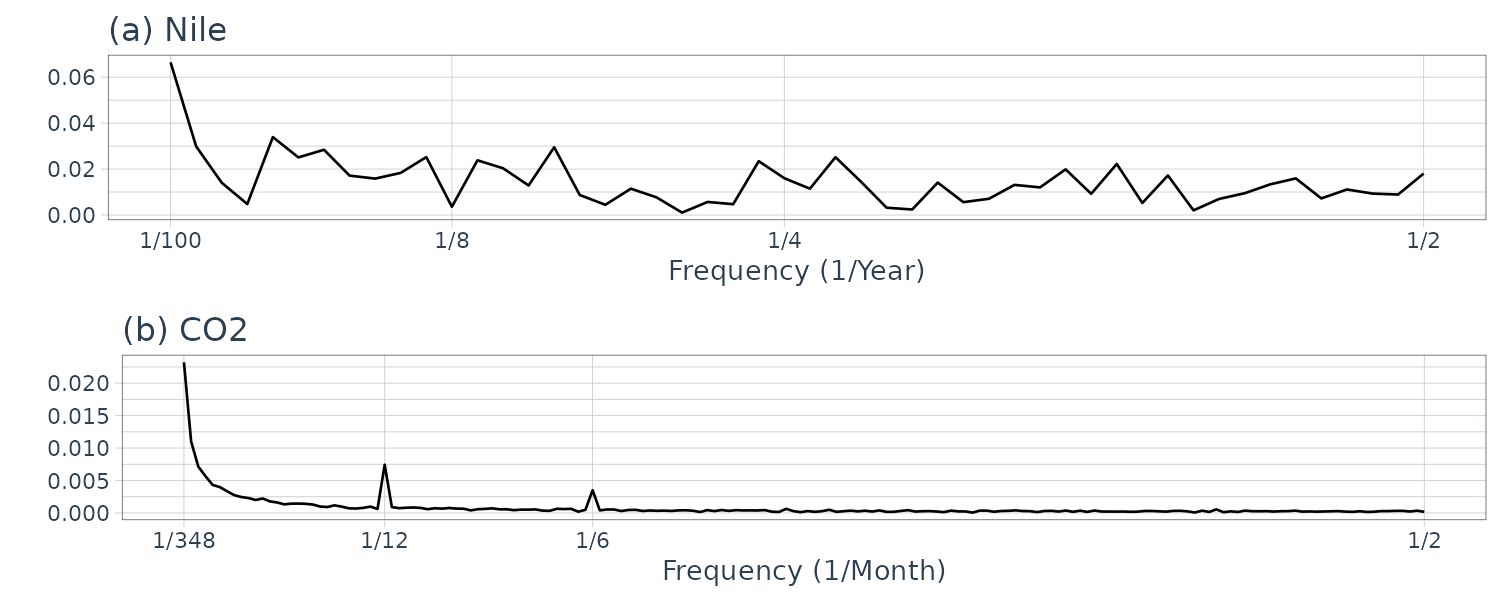

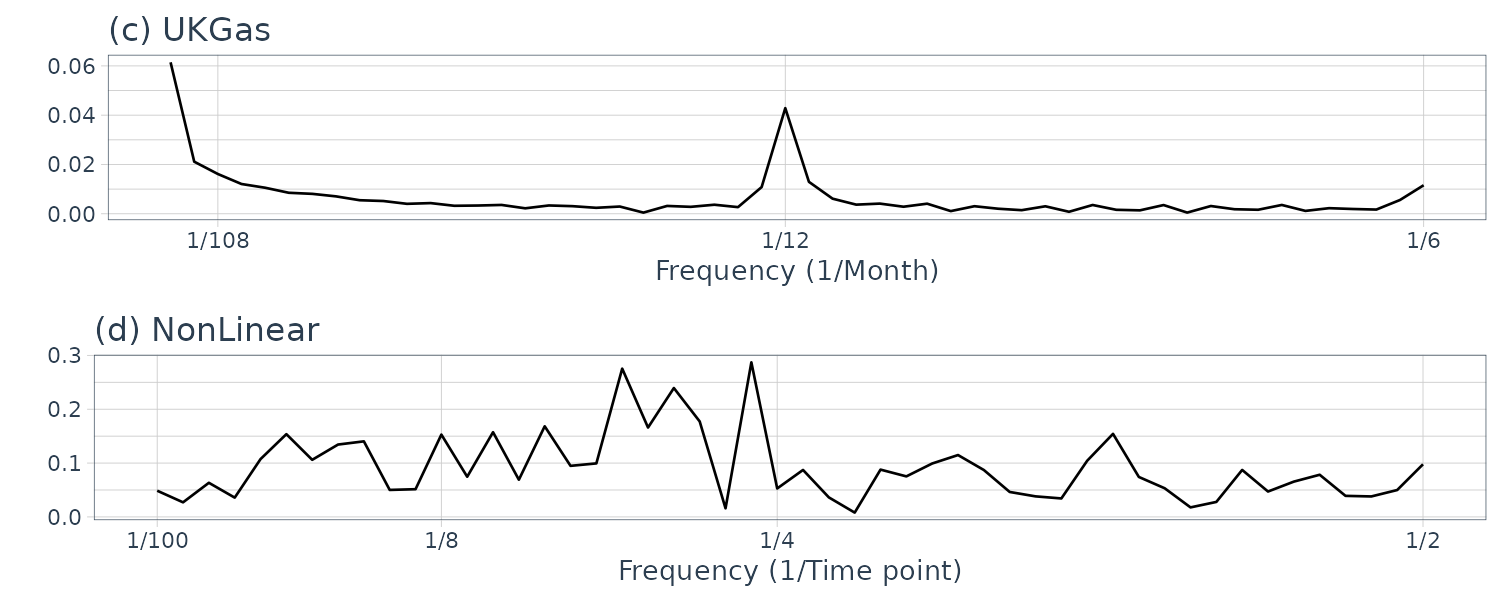

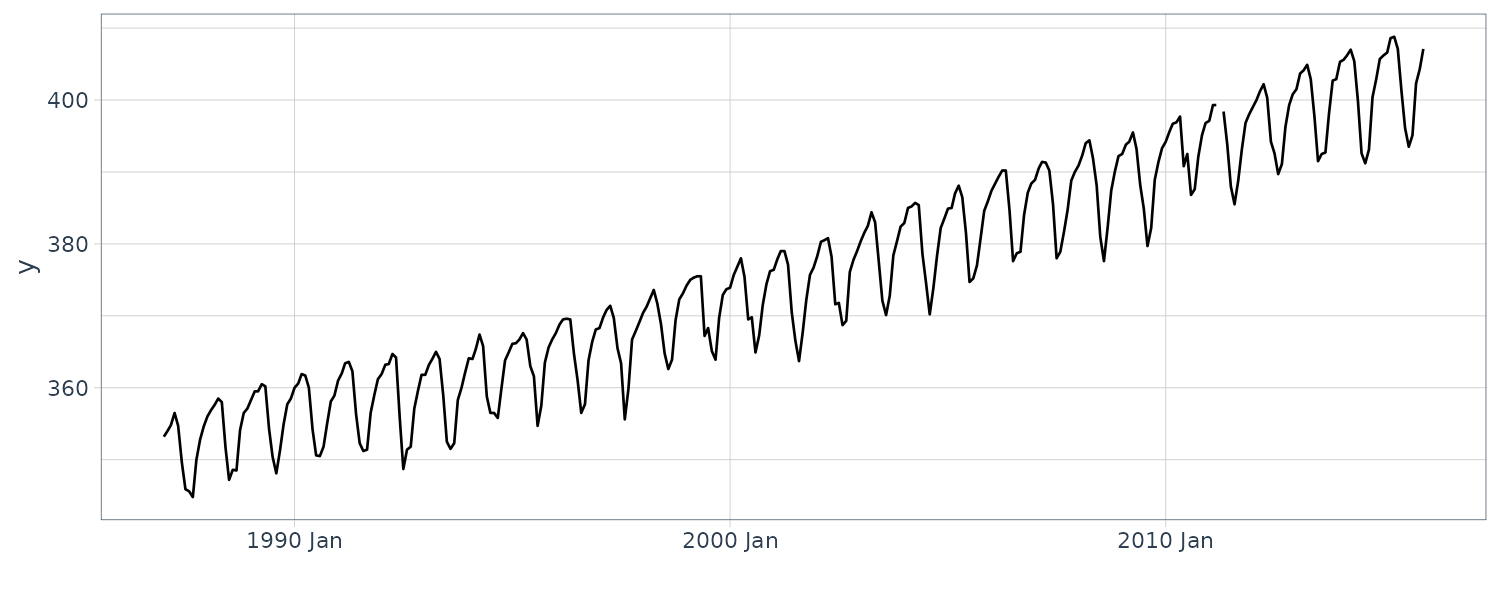

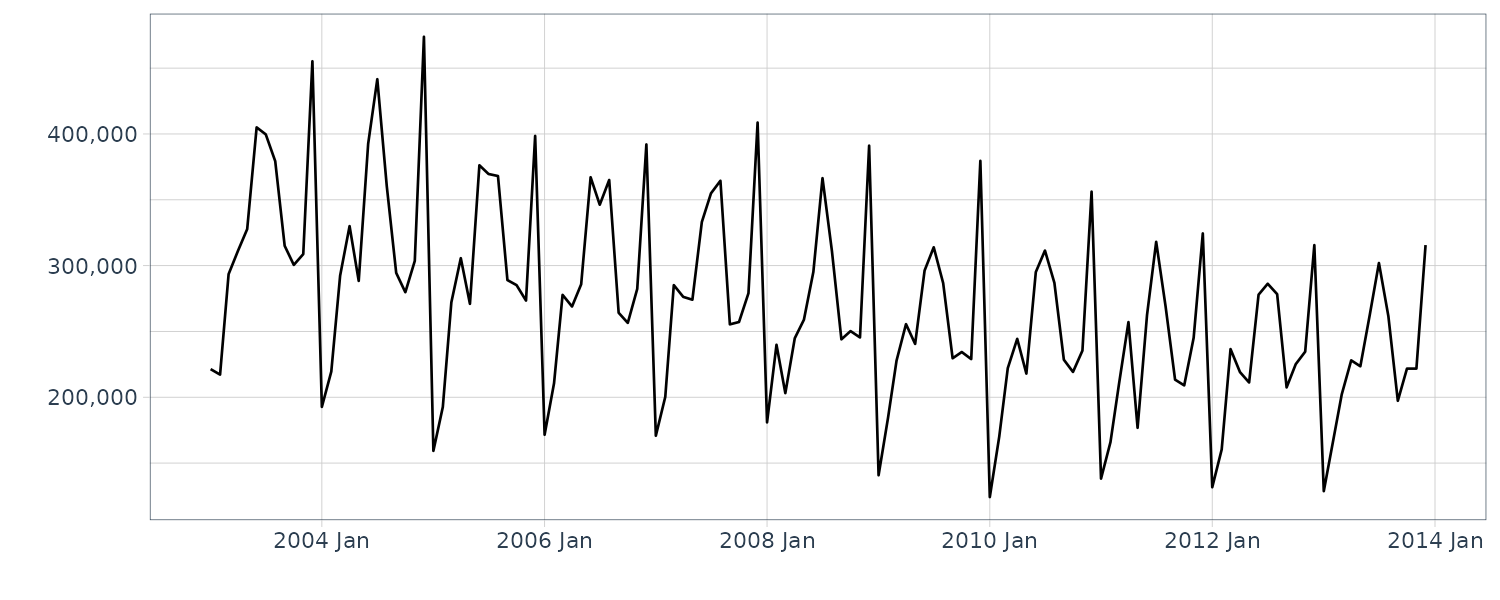

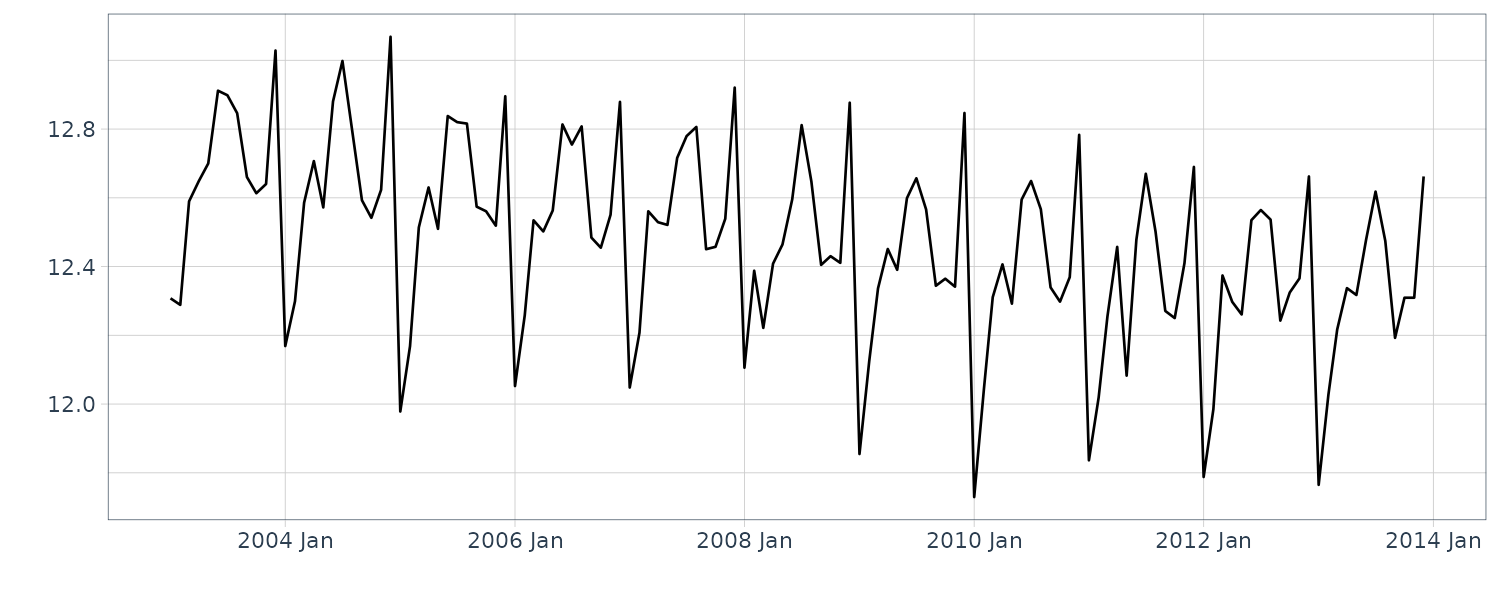

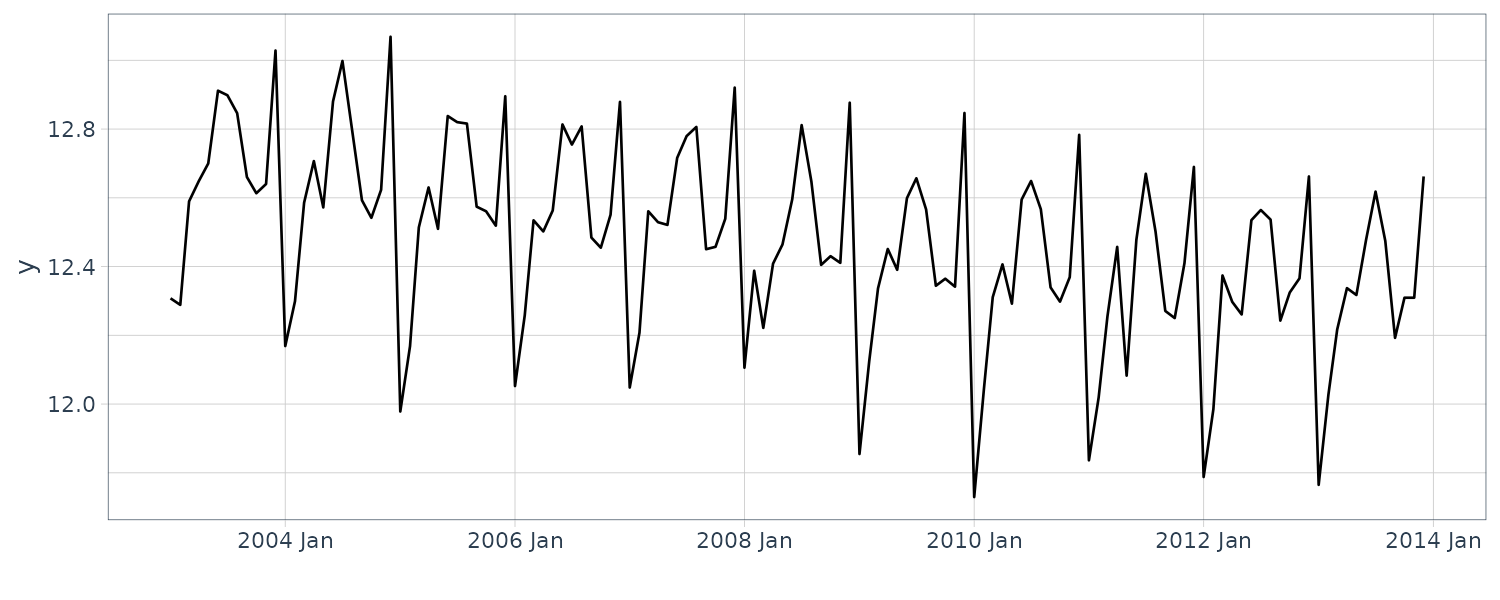

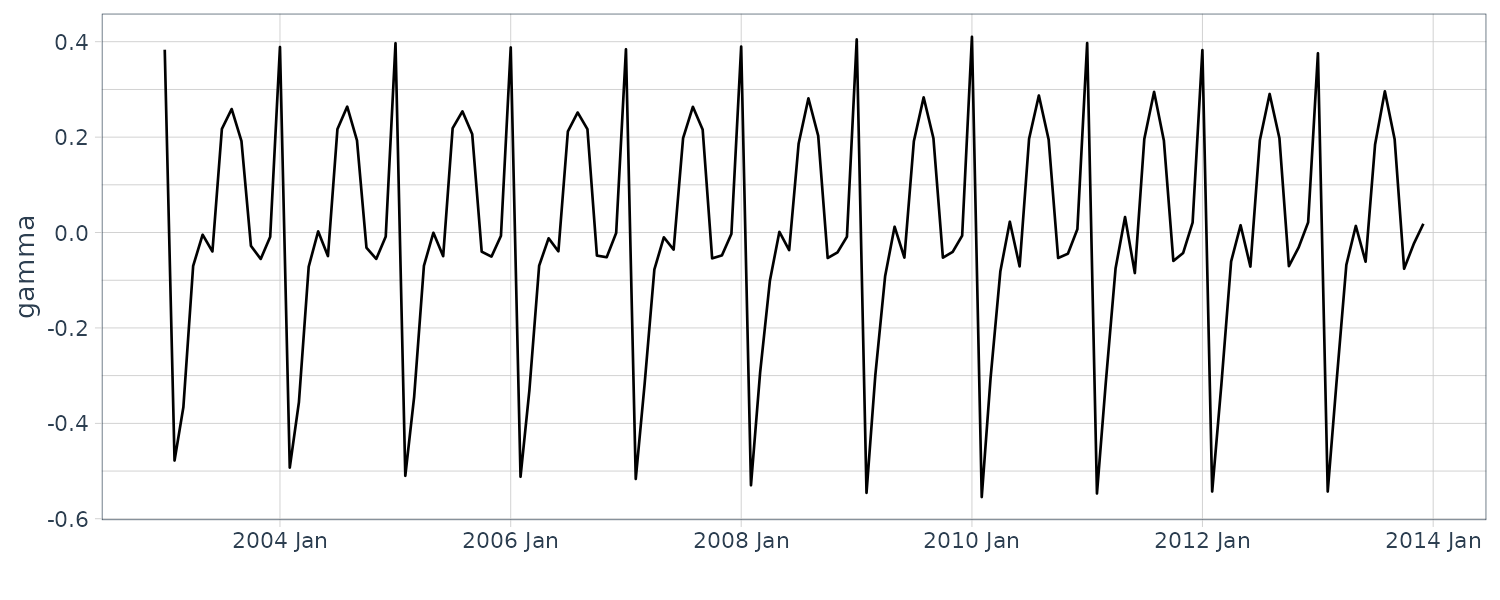

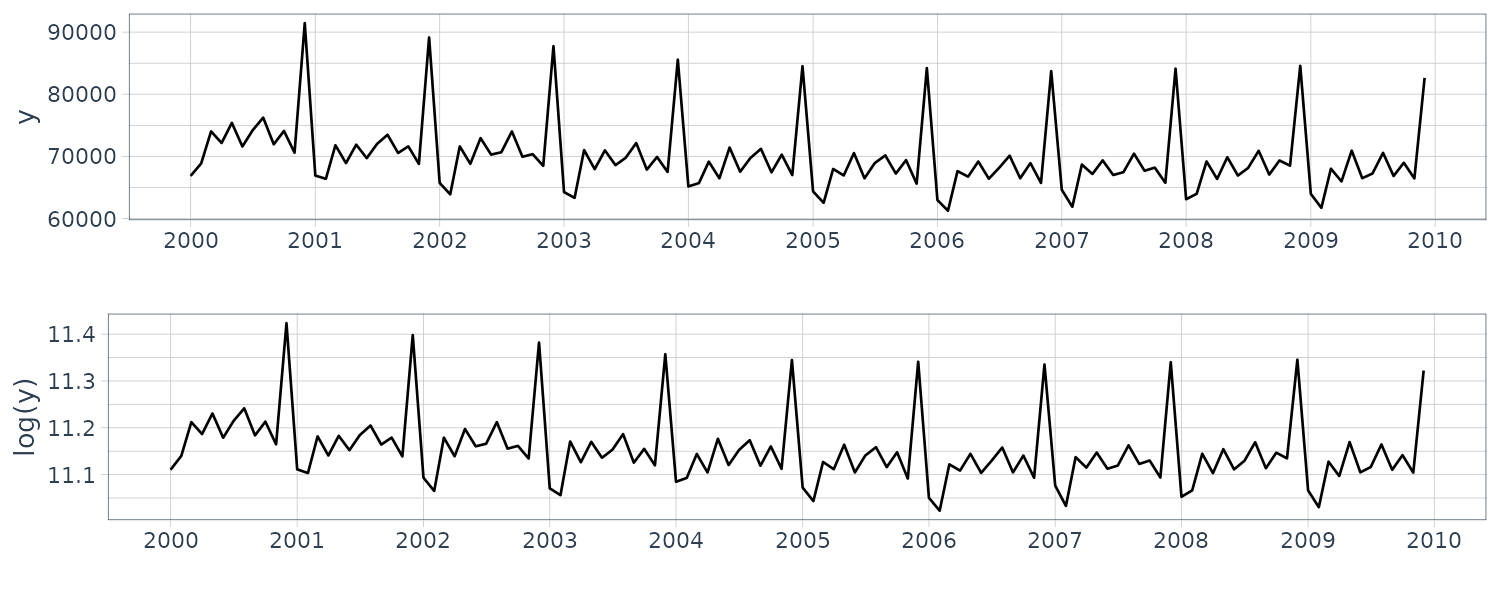

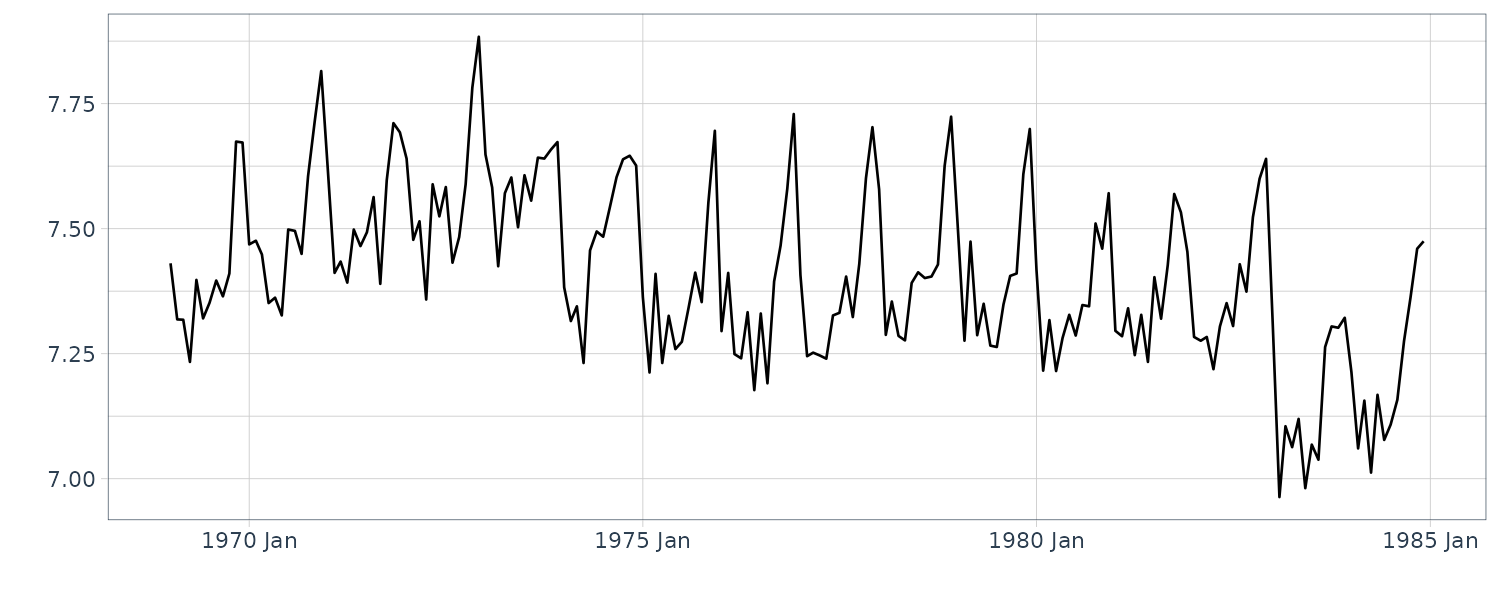

We now convert the data under analysis in the time domain to that in the frequency domain. We will use the following 4 datasets:

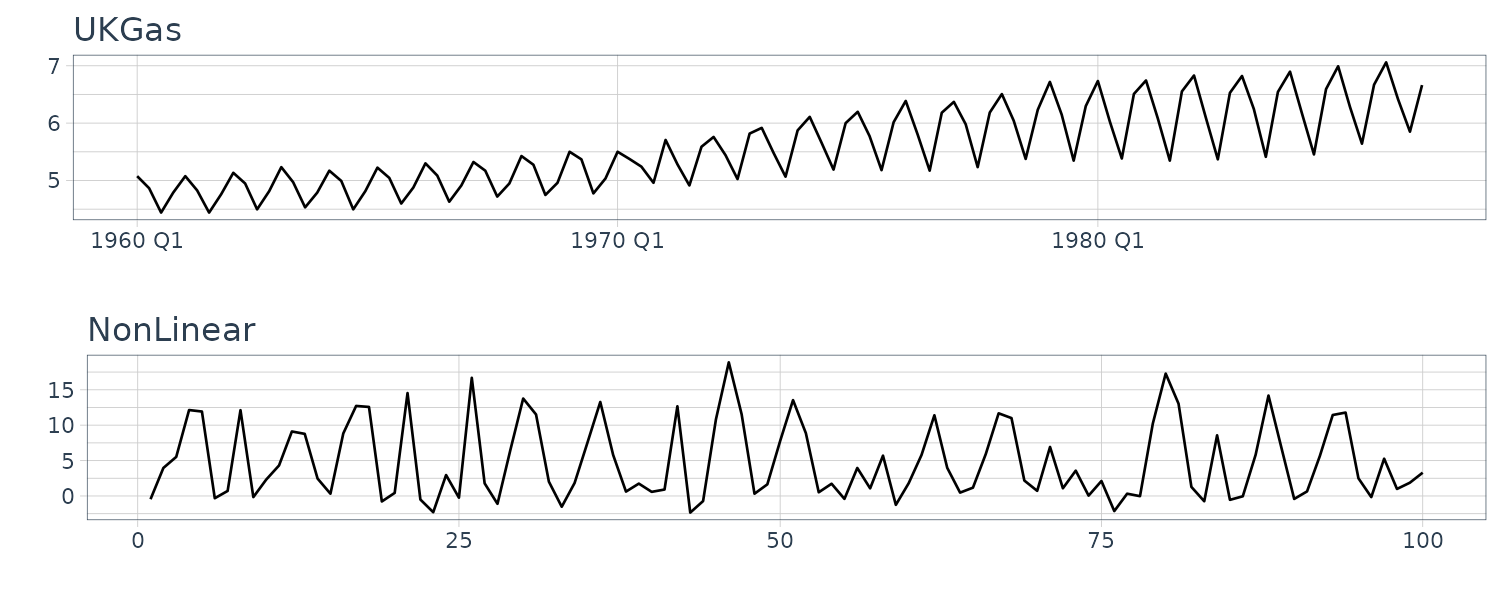

And plotting the frequency spectrum:

plot.spectrum <-

function(dat,

lab = "",

sub = "",

tick = c(8, 4),

unit = 1

) {

n <- nrow(dat)

freq_tick <- c(n, tick, 2)

# Frequency domain transform of data

dat_FFT <- abs(fft(dat$y)) |>

as_tibble()

max_value <- max(dat_FFT$value)

dat_FFT <- dat_FFT |>

mutate(

y = value / max_value,

f = (0:(n-1)) / n,

n = 1:n()

) |>

slice(2:(n() / 2 + 1))

freqs <- dat_FFT$f

dat_FFT |>

ggplot(aes(x = f, y = y)) +

geom_line() +

ylab("|Standardized frequency spectrum|") +

xlab(sprintf("Frequency (1/%s)", lab)) +

ggtitle(paste0(sub, " - num: ", n)) +

scale_x_continuous(

labels = sprintf("1/%d", freq_tick),

breaks = freqs[n / freq_tick * unit]

) +

theme_tq()

}# (a) Annual flow of the Nile

plot.spectrum(

Nile,

lab = "Year",

sub = "(a) Nile"

)

# (b) CO2 concentration in the atmosphere

plot.spectrum(

y_CO2,

lab = "Month",

sub = "(b) CO2",

tick = c(12, 6)

)

# (c) Quarterly gas consumption in the UK

plot.spectrum(

UKgas,

lab = "Month",

sub = "(c) UKGas",

tick = c(12, 6),

unit = 3

)

# (d) Artificially-generated data from a nonlinear model

plot.spectrum(

y_nonlinear,

lab = "Time point",

sub = "(d) NonLinear"

)

We normalize the absolute value of the frequency spectrum by its maximum value on the vertical axis to understand the contribution of the frequency component easily. Note that we exclude point 0 as it is just the offset but include it in the calculation of max value.

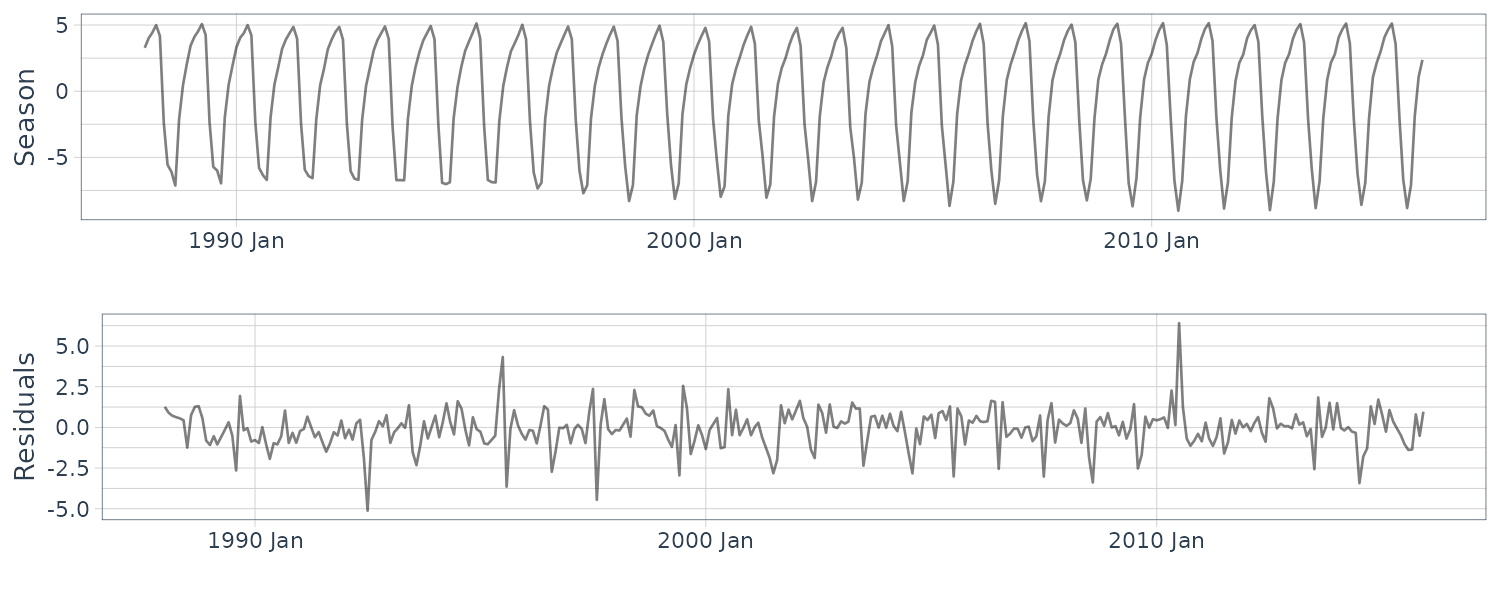

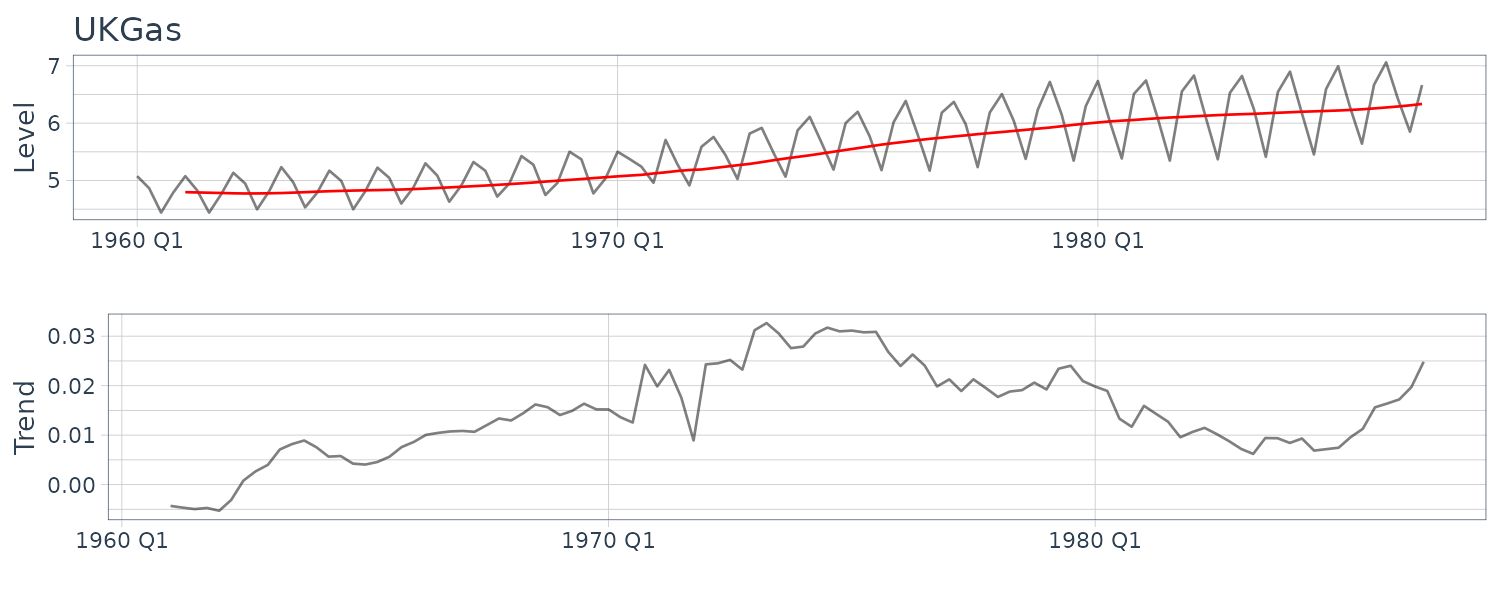

The data (a) appear to contain large values everywhere; hence, we cannot recognize a specific periodicity. The data (b) appear to contain large values at twelve and six months. Since the six-month cycle is incidentally derived from the twelve-month cycle comprising convex and concave halves, we can consider that only the twelve-month period is genuine. The data (c) also have large values at twelve and six months, but we consider that only the twelve-month period is genuine for the same reason. Since the data (d) appear to contain large values everywhere, we cannot recognize a specific periodicity.

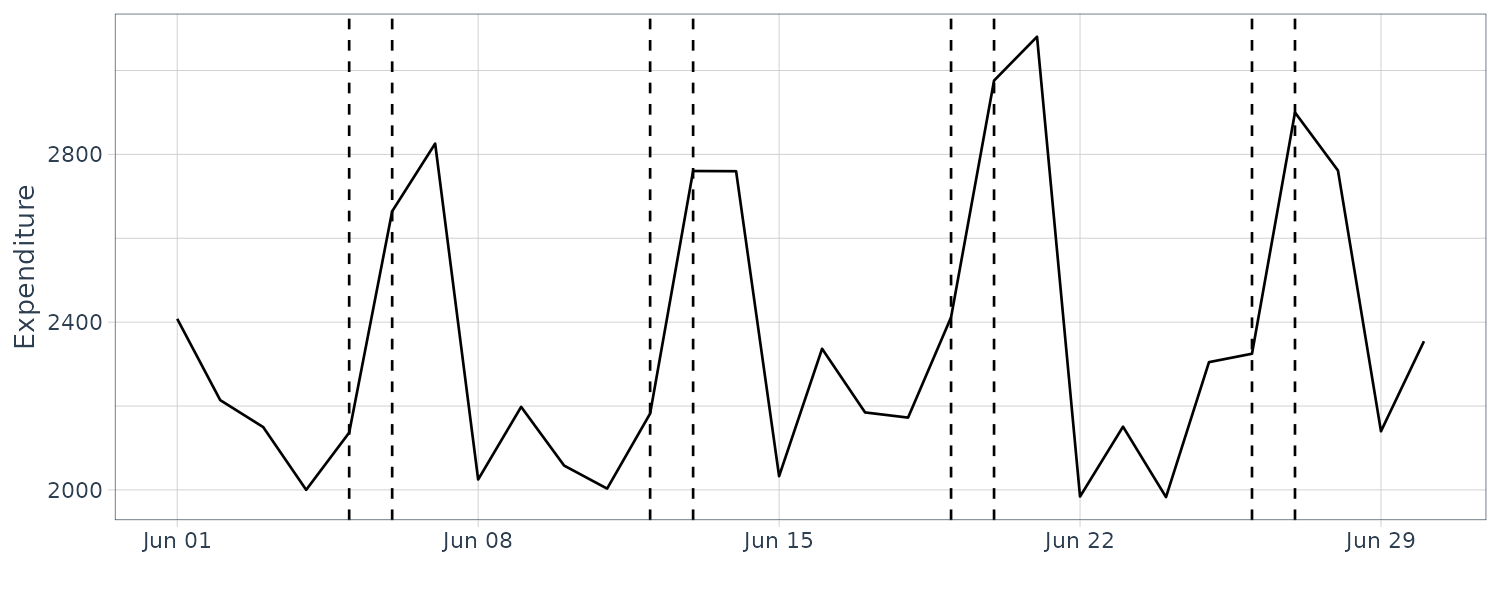

Model Definition

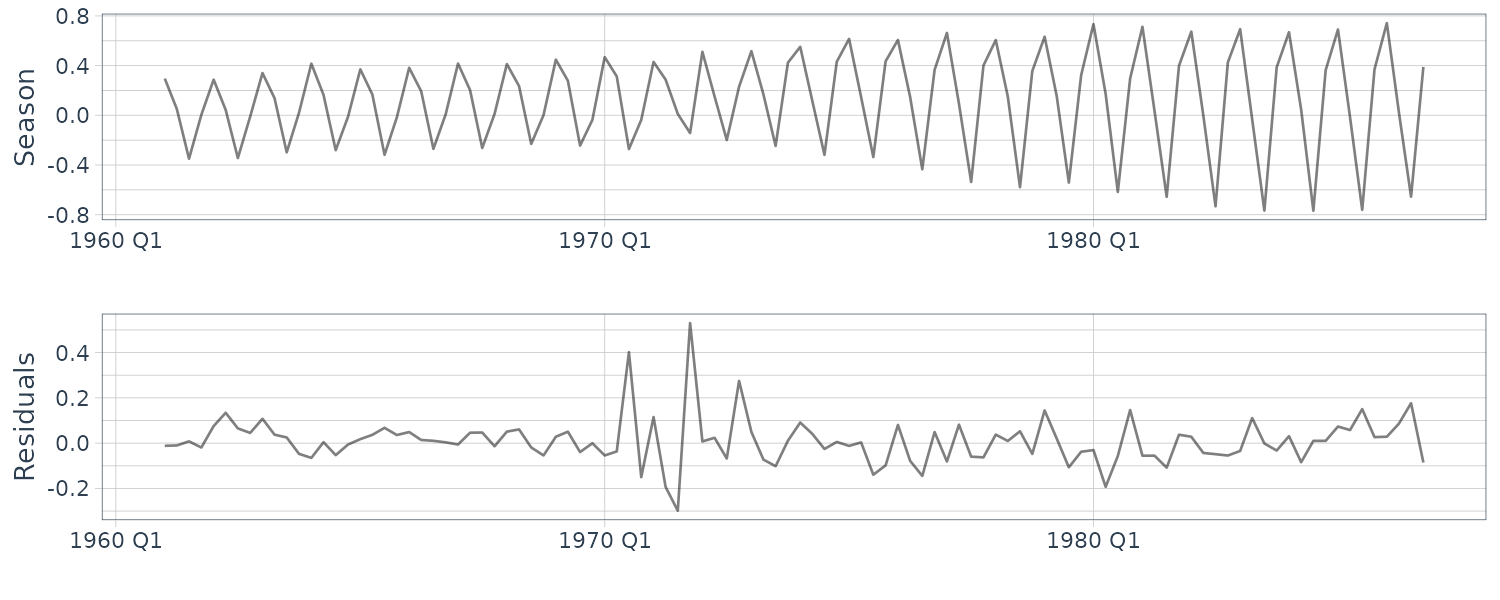

The model is a simple structure expressing the data characteristics. It is common to think of combining simpler structures in defining a model; this is also known as decomposition of time series data. This section explicitly considers “level + trend + season” as a starting point since this is widely recognized as a fundamental decomposition of time series data. Additionally, the remainders resulting from subtracting these estimated components from the original time series data are referred to as residuals.

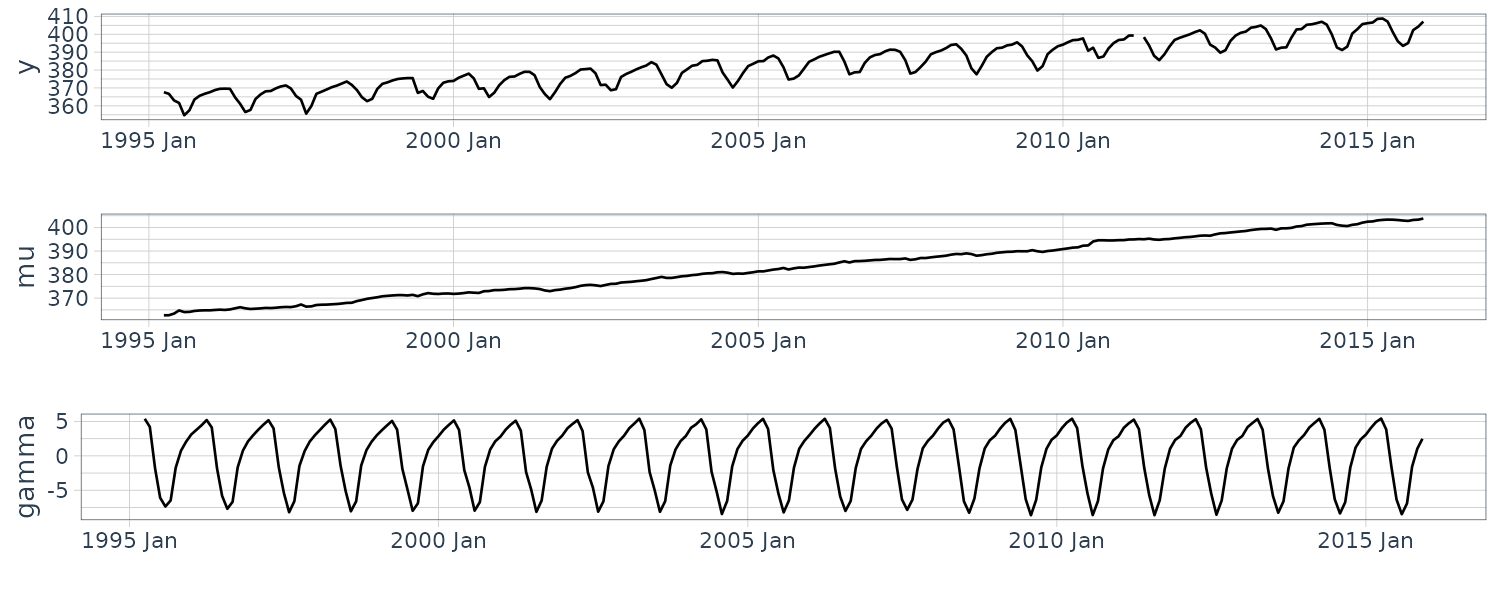

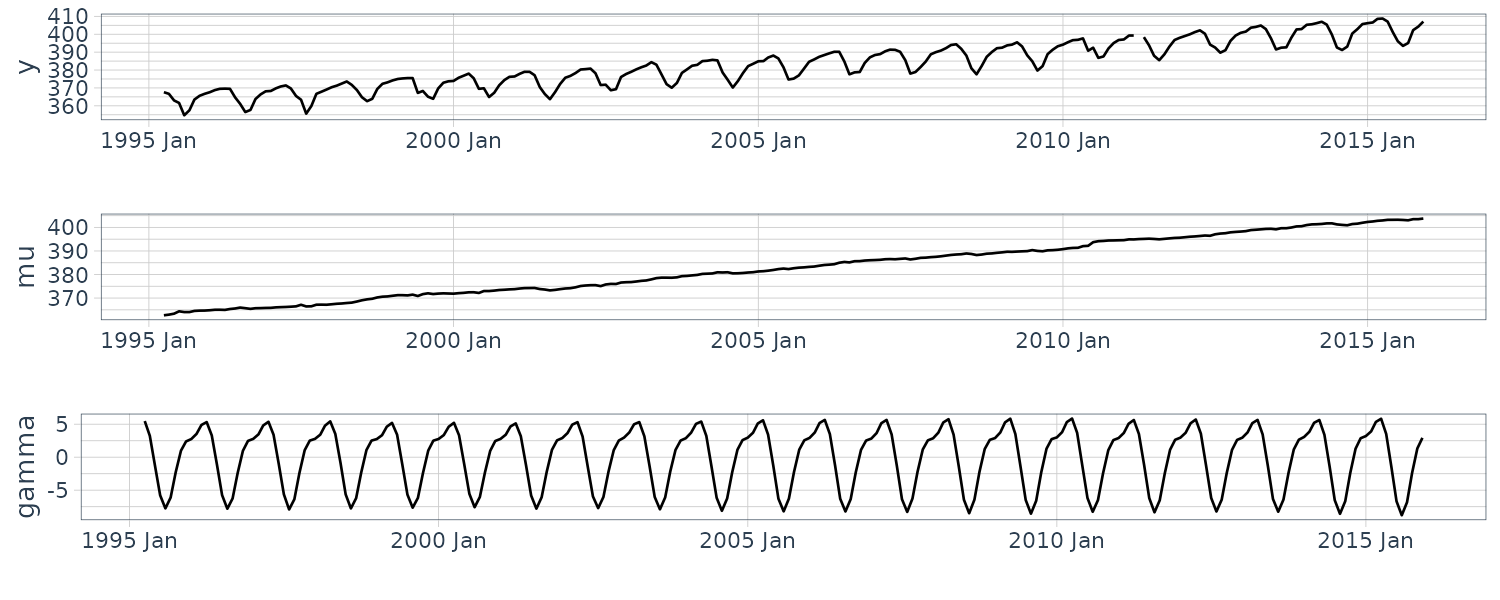

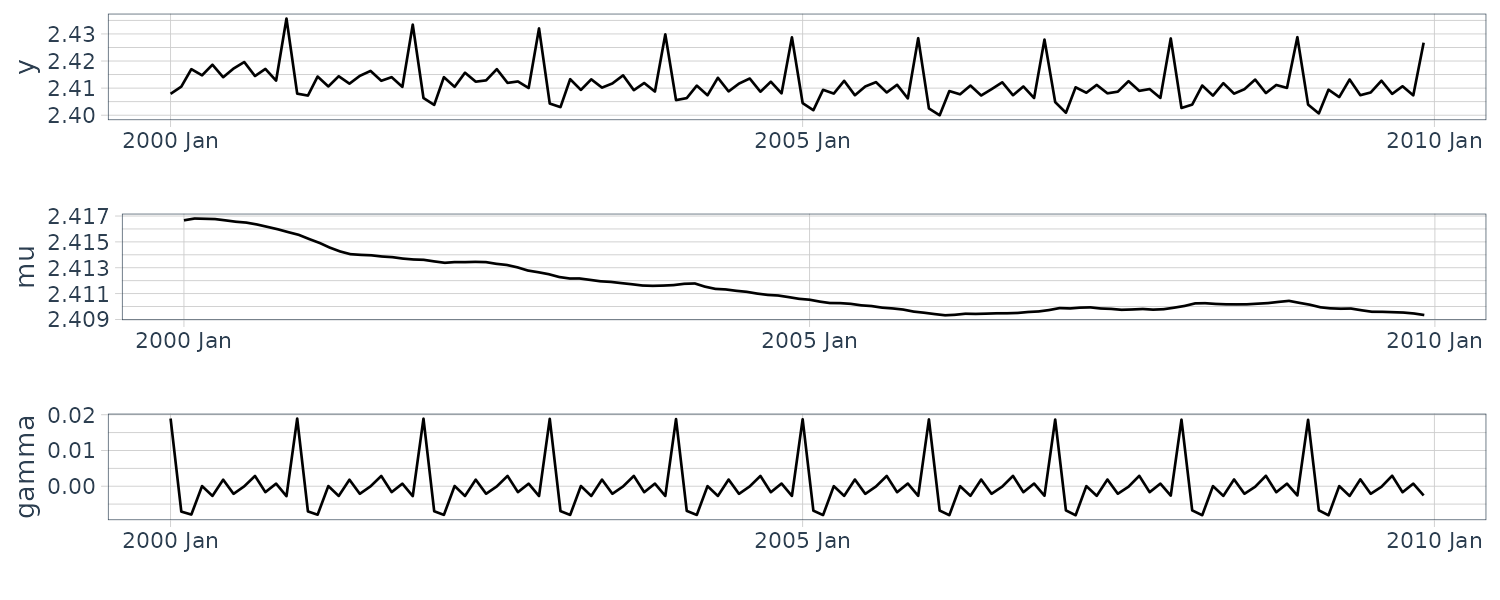

Holt–Winters method

The Holt–Winters method is a kind of exponentially weighted moving average (EWMA). When observations with a period p are obtained until \(y_{t}\), the k-steps-ahead prediction value \(\hat{y}_{t+k}\) obtained by the additive Holt–Winters method is as follows:

\[\begin{aligned} \hat{y}_{t+k} &= \text{level}_{t} + k\times\text{trend}_{t} + \text{season}_{t-p + k_{p}^{+}}\\ \text{level}_{t} &= \alpha(y_{t} - \text{season}_{t-p}) + (1 - \alpha)(\text{level}_{t-1}+ \text{trend}_{t-1})\\ \text{trend}_{t} &= \beta(\text{level}_{t} - \text{level}_{t-1}) + (1 - \beta)\text{trend}_{t-1}\\ \text{season}_{t} &= \gamma(y_{t} - \text{level}_{t}) + (1 - \gamma)\text{season}_{t-p} \end{aligned}\] \[\begin{aligned} k_{p}^{+} &= \left \lfloor{(k - 1)\text{ mod } p} + 1 \right \rfloor \end{aligned}\]The prediction value \(\hat{y}_{t}\) for k = 0 corresponds to the filtering value. The terms \(\text{level}_{t}, \text{trend}_{t}, \text{season}_{t}\) indicate level component, trend component, and seasonal component, respectively.

The terms \(\alpha, \beta, \gamma\) are an exponential weight for the level, trend, and seasonal component, respectively; they take values from 0 to 1.

While the Holt–Winters method is deterministic, it is known that the method becomes (almost) equivalent to the stochastic method in a specific setting: the equivalence with the autoregressive integrated moving average (ARIMA) model, whereas the equivalence with the state-space model is described later.

In the Holt–Winters method, the exponential weights \(\alpha, \beta, \gamma\) are basic parameters, respectively, and are typically determined to minimize the sum of the squared one-step-ahead prediction error over all time points.

Note that the terms \(\text{level}_{t}, \text{trend}_{t}, \text{season}_{t}\) are just estimates based on the specified parameters. The function HoltWinters() in R is available for the Holt–Winters method; it can perform the specification of parameter values and estimation simultaneously.

Once the model and parameters are specified, we perform the analysis process, i.e., filtering, prediction, and smoothing. This section describes the case of the deterministic Holt–Winters method; we will explain the example of the stochastic state-space model later.

The Holt–Winters method can perform filtering and prediction.

The code for filtering with the Holt–Winters method is as follows:

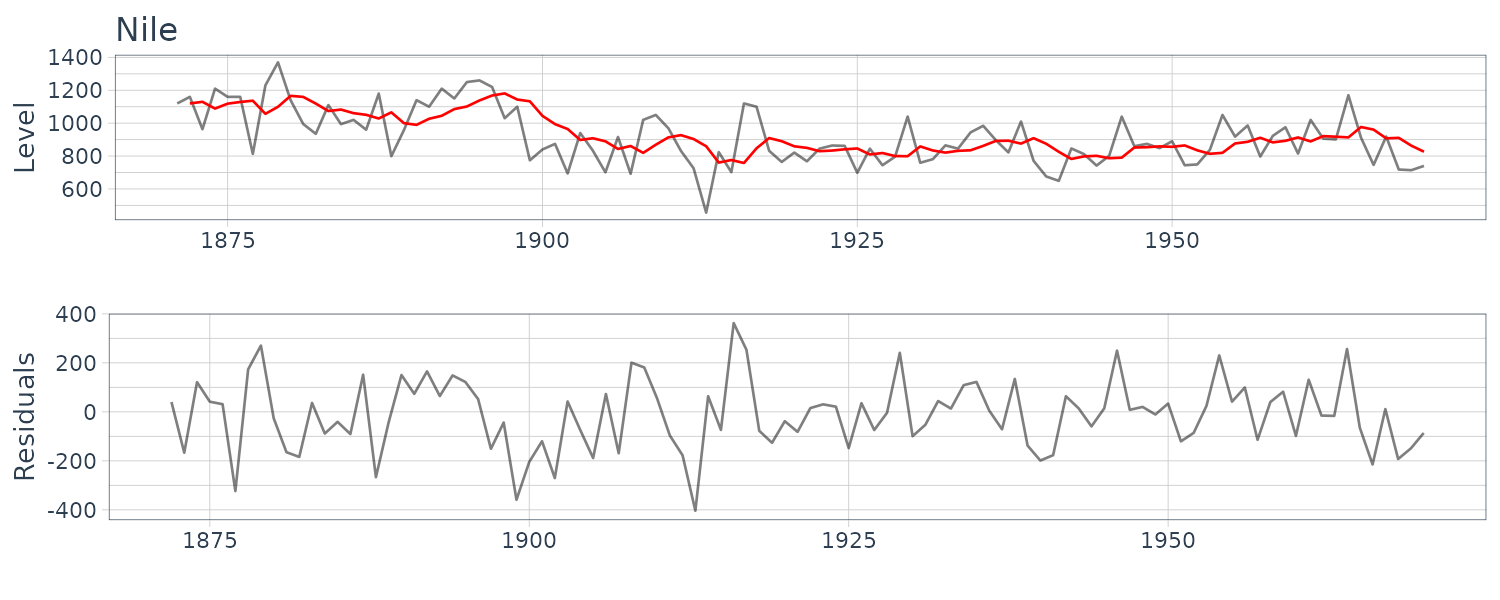

(a) Annual Flow of the Nile

HW_Nile <- HoltWinters(

Nile |>

select(y) |>

as.ts(),

beta = FALSE,

gamma = FALSE

)

> HW_Nile

Holt-Winters exponential smoothing without trend and

without seasonal component.

Call:

HoltWinters(x = as.ts(select(Nile, y)),

beta = FALSE, gamma = FALSE)

Smoothing parameters:

alpha: 0.2465579

beta : FALSE

gamma: FALSE

Coefficients:

[,1]

a 805.0389

Nile <- Nile |>

mutate(

pred = c(

NA,

HW_Nile$fitted[, "xhat"]

)

)

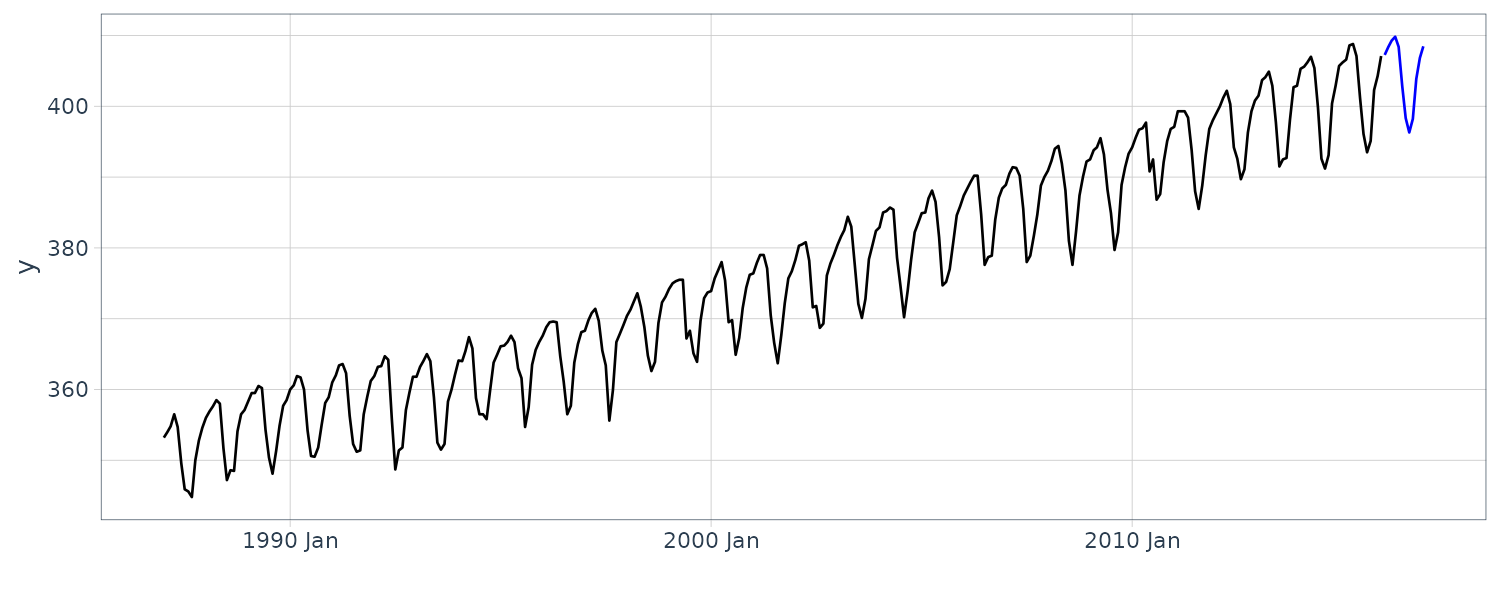

(b) CO2 Concentration in the Atmosphere

HW_CO2 <- HoltWinters(

as.ts(

y_CO2 |>

select(y)

)

)

> HW_CO2

Holt-Winters exponential smoothing with trend and

additive seasonal component.

Call:

HoltWinters(x = as.ts(select(y_CO2, y)))

Smoothing parameters:

alpha: 0.2447601

beta : 0.01251553

gamma: 0.1748568

Coefficients:

[,1]

a 404.0057970

b 0.1644596

s1 3.0893728

s2 4.0364050

s3 4.7730024

s4 5.1650952

s5 3.5645743

s6 -2.1365395

s7 -6.8188844

s8 -9.0204930

s9 -7.2691087

s10 -1.7723944

s11 1.0173080

s12 2.4942167

y_CO2 <- y_CO2 |>

mutate(

pred = c(rep(NA, 12), HW_CO2$fitted[, "xhat"])

)

Suppose \(N\) is the length of the time series and \(p = 12\). The cofficients represent:

\[\begin{aligned} \begin{bmatrix} a[N]\\ b[N]\\ s[N - 12 + 1]\\ s[N- 12 + 2]\\ \vdots\\ s[N] \end{bmatrix} \end{aligned}\](c) Quarterly Gas Consumption in the UK

HW_UKgas <- HoltWinters(

UKgas |>

select(y) |>

as.ts()

)

> HW_UKgas

Holt-Winters exponential smoothing with trend and

additive seasonal component.

Call:

HoltWinters(x = as.ts(select(UKgas, y)))

Smoothing parameters:

alpha: 0.02902249

beta : 1

gamma: 0.7246027

Coefficients:

[,1]

a 6.35670491

b 0.02234170

s1 0.76212717

s2 0.08604617

s3 -0.53107110

s4 0.32905534

UKgas <- UKgas |>

mutate(

pred = c(

rep(NA, 4),

HW_UKgas$fitted[, "xhat"]

)

)

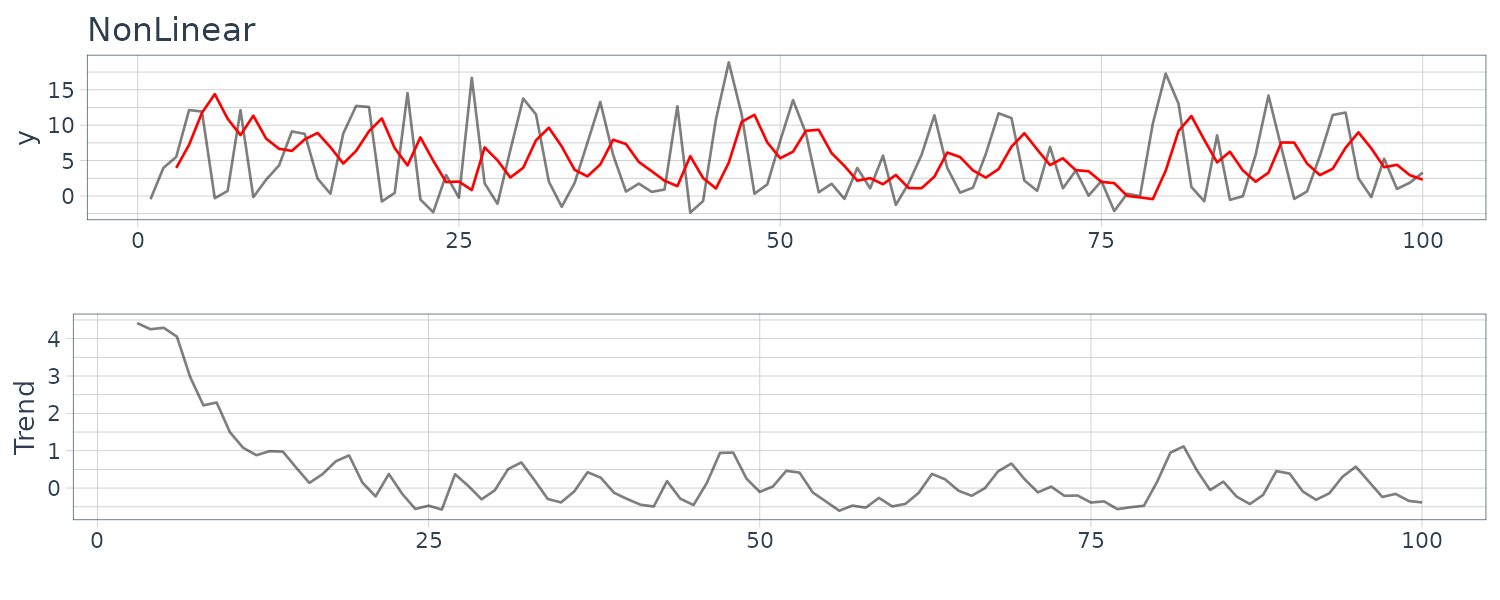

(d) Artificial Generated Data from a Nonlinear Model

HW_nonlinear <- HoltWinters(

y_nonlinear |>

select(y) |>

as.ts(),

gamma = FALSE

)

> HW_nonlinear

Holt-Winters exponential smoothing with trend and

without seasonal component.

Call:

HoltWinters(x = as.ts(select(y_nonlinear, y)),

gamma = FALSE)

Smoothing parameters:

alpha: 0.4013391

beta : 0.1434025

gamma: FALSE

Coefficients:

[,1]

a 2.470568

b -0.305886

y_nonlinear <- y_nonlinear |>

mutate(

pred = c(

rep(NA, 2),

HW_nonlinear$fitted[, "xhat"]

)

)

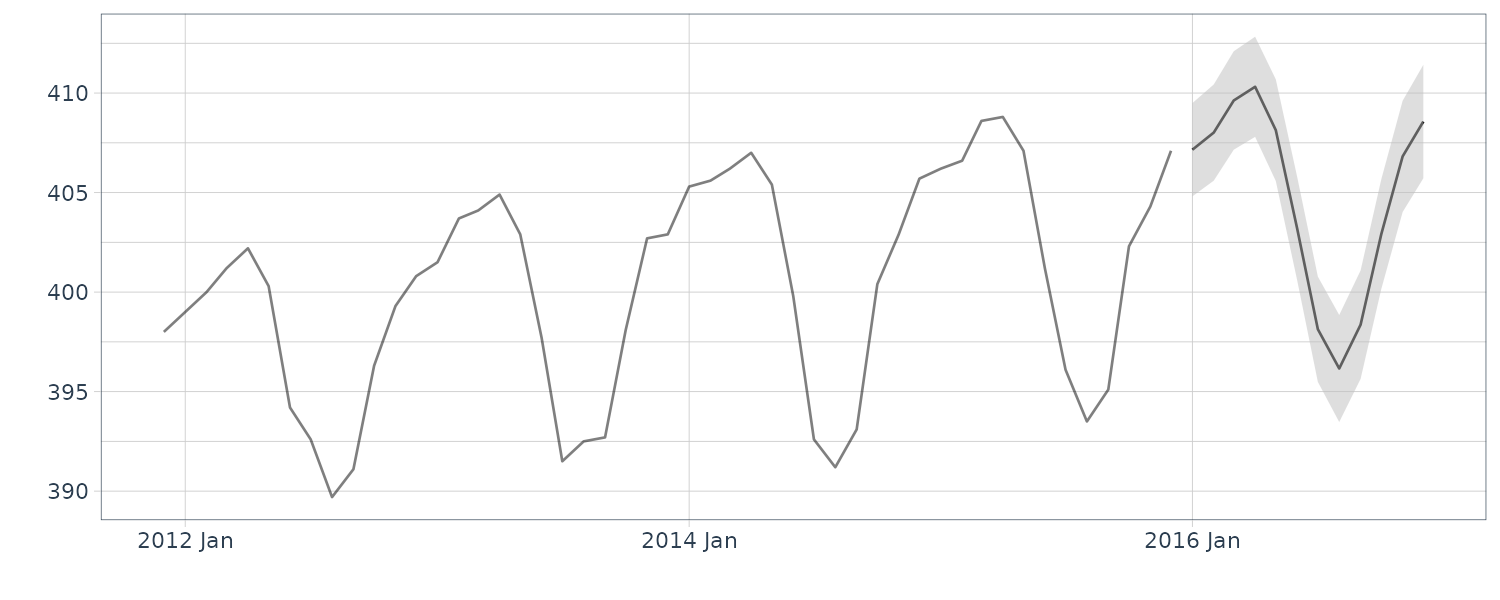

Prediction in R:

nAhead <- 12

dat <- new_data(y_CO2, n = nAhead)

dat$pred <- predict(

HW_CO2,

n.ahead = nAhead

)

y_CO2 |>

autoplot(y) +

xlab("") +

geom_line(

aes(y = pred),

data = dat,

col = "blue"

) +

theme_tq()

State Space Model

The state-space model interprets series data that have a relation with each other in stochastic terms. First, this model introduces latent random variables that are not directly observed in addition to directly observed data. Such a latent random variable is called a state.

We assume the following association for a state, a Markov property: A state is related only to the state at the previous time point. And an observation at a certain time point depends only on the state at the same time point.

Representation by Graphical Model

The Markov property for the state is expressed by the fact that \(x_{t}\) is directly connected with only its neighbors. In addition, the fact that \(y_{t}\) is directly connected with only \(x_{t}\) indicates an assumption for the observations.

Representation by Probability Distribution

\[\begin{aligned} p(\textbf{x}_{t}|\textbf{x}_{0:t-1}, y_{1:t-1}) &= p(\textbf{x}_{t} | \textbf{x}_{t-1})\\[5pt] p(y_{t}|\textbf{x}_{0:t}, y_{1:t-1}) &= p(y_{t}|\textbf{x}_{t}) \end{aligned}\]The first equation shows that when \(\textbf{x}_{t-1}\) is given, \(\textbf{x}_{t}\) is independent of \(\textbf{x}_{0:t−2}, y_{1:t−1}\). This relation is referred to as conditional independence on state.

The second equation shows that when \(\textbf{x}_{t}\) is given, \(y_{t}\) is independent of \(y_{1:t−1}, \textbf{x}_{0:t−1}\). This relationship is called conditional independence on observations.

Representation by Equation

\[\begin{aligned} \textbf{x}_{t} &= f(\textbf{x}_{t-1}, \textbf{w}_{t}) \end{aligned}\] \[\begin{aligned} y_{t} &= h(\textbf{x}, v_{t}) \end{aligned}\]Where, \(f()\) and \(h()\) are arbitrary functions. \(\textbf{w}_{t}\) and \(v_{t}\) are mutually independent white noise called state noise and observation noise, respectively. The noise \(\textbf{w}_{t}\) is a p-dimensional column vector.

The state equation is a stochastic differential equation for the state. The state noise \(\textbf{w}_{t}\) is the tolerance for a temporal change of state. The state equation potentially specifies a temporal pattern that allows for distortion.

The observation equation, on the contrary, suggests that when observations are obtained from a state, observation noise \(v_{t}\) is added. While the observation noise also has an aspect of supplementing the model definition, it is mostly interpreted as typical noise different from the state noise. The less the observation noise becomes, the more accurate the observations become.

Joint Distribution of State-Space Model

The joint distribution of the state-space model is defined as:

\[\begin{aligned} p(\textbf{x}_{0:T}, y_{1:T}) \end{aligned}\]Transforming the joint distribution:

\[\begin{aligned} p(\textbf{x}_{0:T}, y_{1:T}) &= p(y_{T}, y_{1:T-1}, \textbf{x}_{0:T})\\[5pt] &= p(y_{T}|y_{1:T-1}, \textbf{x}_{0:T})p(y_{1:T-1}, \textbf{x}_{0:T})\\[5pt] &= p(y_{T}|y_{1:T-1}, \textbf{x}_{0:T})p(\textbf{x}_{T}, \textbf{x}_{0:T-1}, y_{1:T-1})\\[5pt] &= p(y_{T}|y_{1:T-1}, \textbf{x}_{0:T})p(\textbf{x}_{T}| \textbf{x}_{0:T-1}, y_{1:T-1})p(\textbf{x}_{0:T-1},y_{1:T-1})\\[5pt] &\ \ \vdots\\ &= \Big[\prod_{t=2}^{T}p(y_{t}|y_{1:t-1}, \textbf{x}_{0:t})p(\textbf{x}_{t}|\textbf{x}_{0:t-1}, y_{1:t-1})\Big]p(\textbf{x}_{0:1}, y_{1})\\ &= \Big[\prod_{t=2}^{T}p(y_{t}|y_{1:t-1}, \textbf{x}_{0:t})p(\textbf{x}_{t}|\textbf{x}_{0:t-1}, y_{1:t-1})\Big]p(y_{1}|\textbf{x}_{0:1})p(\textbf{x}_{1}|\textbf{x}_{0})p(\textbf{x}_{0})\\ &= p(\textbf{x}_{0})\Big[\prod_{t=2}^{T}p(y_{t}|\textbf{x}_{t})p(\textbf{x}_{t}|\textbf{x}_{t-1})\Big]p(y_{1}|\textbf{x}_{1})p(\textbf{x}_{1}|\textbf{x}_{0})\\ &= p(\textbf{x}_{0})\prod_{t=1}^{T}p(y_{t}|\textbf{x}_{t})p(\textbf{x}_{t}|\textbf{x}_{t-1}) \end{aligned}\]The above equation simply states that the state-space model is determined by a simple product by assumed prior distribution \(p(\textbf{x}_{0})\), observation equation \(p(y_{t}\mid \textbf{x}_{t})\), and state equation \(p(\textbf{x}_{t}\mid \textbf{x}_{t−1})\). This result is a natural consequence of the assumptions for the state space model.

Features of State-Space Model

The state, enables the state-space model to be used as the basis for easily constructing a complicated model by combining multiple states. Recall that we can arbitrarily choose a state convenient for interpretation. Such a framework improves the flexibility of modeling.

Next, the state-space model represents the relation among observations via states indirectly rather than by direct connection among observations. This feature contrasts with the ARMA model (autoregressive moving average model) which directly represents the relation among observations. The ARMA(p, q) model is a stochastic one often used in time series analysis and is defined as:

\[\begin{aligned} y_{t} &= \sum_{j=1}^{p}\phi_{j}y_{t-j} + \sum_{j=1}^{q}\theta_{j} e_{t-j} + e_{t} \end{aligned}\]The ARMA model for the d-th differences time series becomes an ARIMA model (autoregressive integrated moving average model) and is denoted by ARIMA( p, d, q).

The ARIMA and state-space models are closely related. The models often used in the state-space model can be defined as the ARIMA model, and the ARIMA model can also be defined as one of the state-space models.

There is no essential difference between the two models from the viewpoint of capturing the temporal pattern of data stochastically. However, their underlying philosophies are different. Since the ARIMA model represents the relation among observations directly, the modeling problem requires a black box approach. On the contrary, since the state-space model represents the relation among observations indirectly in terms of states, the modeling problem results in a white box approach. The analyst assumes as much as possible regarding the factors generating the association among data and reflects them in the model through the corresponding latent variables.

It is also well recognized that the state-space model is equivalent to the hidden Markov model, which has been developed primarily in speech recognition. However, the random variable to be estimated in the hidden Markov model is discrete.

Classification of State-Space Models

If either f or h is a nonlinear function and either \(\textbf{w}_{t}\) or \(v_{t}\) has a non-Gaussian distribution, then the state-space model is referred to as a nonlinear non-Gaussian state-space model.

If either f or h is a nonlinear function and both \(w_{t}\) and \(v_{t}\) have Gaussian distributions, then the state-space model is referred to as a nonlinear Gaussian state-space model.

If both f and h are linear functions and either \(\textbf{w}_{t}\) or \(v_{t}\) has a non-Gaussian distribution, then the state-space model is referred to as a linear non-Gaussian state-space model.

Furthermore, if both f and h are linear functions and both \(\textbf{w}_{t}\) and \(v_{t}\) have Gaussian distributions, then the state-space model is referred to as a linear Gaussian state-space model.

Linear Gaussian State-Space Model

The state equation and observation equation for the linear Gaussian state-space model are expressed as follows:

\[\begin{aligned} \textbf{x}_{t} &= \textbf{G}_{t}\textbf{x}_{t-1} + \textbf{w}_{t} \end{aligned}\] \[\begin{aligned} y_{t} &= \textbf{F}_{t}\textbf{x}_{t} + v_{t} \end{aligned}\] \[\begin{aligned} \textbf{w}_{t} &\sim N(\textbf{0}, \textbf{W}_{t})\\ v_{t} &\sim N(0, V_{t}) \end{aligned}\]Where \(\textbf{G}_{t}\) is a p × p state transition matrix, \(\textbf{F}_{t}\) is a 1 × p observation matrix, \(\textbf{W}_{t}\) is a p × p covariance matrix for the state noise, and \(V_{t}\) is a variance for the observation noise.

The prior distribution is also expressed as:

\[\begin{aligned} \textbf{x}_{0} &\sim N(\textbf{m}_{0}, \textbf{C}_{0}) \end{aligned}\]Where \(\textbf{m}_{0}\) is the p-dimensional mean vector and \(\textbf{C}_{0}\) is a p × p covariance matrix.

In addition, the above equations can be expressed equivalently with a probability distribution as follows:

\[\begin{aligned} p(\textbf{x}_{t} | \textbf{x}_{t-1}) &= N(\textbf{G}_{t}\textbf{x}_{t-1}, \textbf{W}_{t})\\[5pt] p(y_{t}|\textbf{x}_{t}) &= N(\textbf{F}_{t}\textbf{x}_{t}, V_{t})\\[5pt] p(\textbf{x}_{0}) &= N(\textbf{m}_{0}, \textbf{C}_{0}) \end{aligned}\]Parameters in the linear Gaussian state-space model are summarized as:

\[\begin{aligned} \boldsymbol{\theta} &= \{\textbf{G}_{t}, \textbf{F}_{t}, \textbf{W}_{t}, V_{t}, \textbf{m}_{0}, \textbf{C}_{0}\} \end{aligned}\]The linear Gaussian state-space model is also referred to as a dynamic linear model (DLM).

State Estimation in the State-Space Model

The state in the state-space model is estimated based on observations through the current time point t. Thus, the distribution to be estimated becomes a conditional distribution (posterior distribution of the state) as:

\[\begin{aligned} p(\text{state} | y_{1:t}) \end{aligned}\]We must first consider all time points known as the joint posterior distribution of the state:

\[\begin{aligned} p(\textbf{x}_{0}, \textbf{x}_{0}, \cdots, \textbf{x}_{t'}, \cdots | y_{1:t}) \end{aligned}\]The estimation type is classified depending on the focused time point \(t'\):

\[\begin{aligned} \begin{cases} \text{smoothing} & t' < t\\ \text{filtering} & t' = t\\ \text{prediction} & t' > t \end{cases} \end{aligned}\]If we want to exclude the states whose time points differ from the focused \(t'\), we perform marginalization as:

\[\begin{aligned} p(\textbf{x}_{t'} | y_{1:t}) &= \int p(\textbf{x}_{0}, \textbf{x}_{0}, \cdots, \textbf{x}_{t'}, \cdots | y_{1:t})\ d\textbf{x}_{\text{except }t'} \end{aligned}\]Known as the marginal posterior distribution of the state.

A Simple Example

Let there be a domestic cat with a collar with a GPS tracker function for monitoring its behavior. We assume a case wherein the cat moves straight away from home at an average speed \(v\). We formulate this problem in terms of the linear Gaussian state-space model as:

\[\begin{aligned} x_{t} &= x_{t-1} + v + w_{t} \end{aligned}\] \[\begin{aligned} y_{t} &= x_{t}+ v_{t} \end{aligned}\] \[\begin{aligned} w_{t} &\sim N(0, \sigma_{w}^{2})\\ v_{t} &\sim N(0, \sigma^{2}) \end{aligned}\]Where \(p(x_{0}) \sim N(m_{0}, C_{0})\) and \(m_{0} = \hat{m}_{1}\) is the guessed position at \(t = 1\) based on prior knowledge, and variance \(C_{0}\) represents the degree of confidence in the guess. \(x_{t}\) is the cat’s position at time point t, \(v\) is the average velocity (constant), \(w_{t}\) is the fluctuation in velocity during movement, \(y_{t}\) is an observation at time t, and \(v_{t}\) is the noise at the observation.

We derive the process of obtaining the state sequentially. We first estimate the cat’s position at the beginning of movement, i.e., at t = 1 when observation starts. Based on prior knowledge, we multiply the prior distribution, \(p(x_{1})\), by the likelihood, \(p(y_{1}\mid x_{1})\), based on observation to obtain the posterior distribution, \(p(x_{1}\mid y_{1})\) (Bayesian updating).

Since the consideration of likelihood increases the accuracy, the variance of the posterior distribution is smaller than that of the prior distribution. This posterior distribution is the result of estimating where the cat is at the beginning of the observation.

Subsequently, we estimate the cat’s position at the next time point t = 2 after it moves. The prior distribution, \(p(x_{2})\) corresponds to the posterior distribution at t = 1, \(p(x_{1}\mid y_{1})\). Since the cat is moving at this time, we reflect the movement in this prior distribution (state transition) based on the average speed. The variance of the one-step-ahead predictive distribution is unavoidably greater than that of the prior distribution because a fluctuation \(w_{t}\) in the moving speed increases the uncertainty of the movement. This one-step-ahead predictive distribution predicts the situation after the movement. Hence, we multiply this predictive distribution by the likelihood based on the observation at t = 2, \(p(y_{2}\mid x_{2})\), resulting in a posterior distribution (Bayesian updating). Since the consideration of likelihood increases the accuracy, the variance of the posterior distribution is less than that of the one-step-ahead predictive distribution, \(p(x_{2}\mid y_{1})\). This posterior distribution, \(p(x_{2}\mid y_{2})\) is the result of estimating where the cat is at the next time point.

First, Bayesian updating at time point t = 1 is expressed as:

\[\begin{aligned} p(x_{1} | y_{1}) &\propto p(x_{1}) \times p(y_{1} | x_{1})\\ &= \frac{1}{\sqrt{2\pi C_{0}}}e^{-\frac{(x_{1} - m_{0})^{2}}{2C_{0}}}\times \frac{1}{\sqrt{2\pi \sigma^{2}}}e^{-\frac{(y_{1} - x_{1})^{2}}{2\sigma^{2}}}\\ & \propto e^{-\frac{x_{1}^{2} - 2m_{0}x_{1}}{2C_{0}} - \frac{-2y_{1}x_{1} + x_{1}^{2}}{2\sigma^{2}}}\\ &= e^{-\Big(\frac{1}{2C_{0}} + \frac{1}{2\sigma^{2}}\Big)x_{1}^{2} + \Big(\frac{2m_{0}x_{1}}{2C_{0}} + \frac{2y_{1}x_{1}}{2\sigma^{2}}\Big)}\\ &= e^{-\frac{1}{2}\Big(\frac{1}{C_{0}} + \frac{1}{\sigma^{2}}\Big)x_{1}^{2} + \Big(\frac{m_{0}}{C_{0}} + \frac{y_{1}}{\sigma^{2}}\Big)x_{1}} \end{aligned}\]The posterior distribution at \(t = 1\) can also be expressed as follows:

\[\begin{aligned} p(x_{1}|y_{1}) &= \frac{1}{\sqrt{2\pi C_{1}}}e^{-\frac{(x_{1} - m_{1})^{2}}{2C_{1}}}\\ &\propto e^{-\frac{x_{1}^{2} - 2m_{1}x_{1}}{2C_{1}}}\\ &= e^{-\frac{1}{2}\frac{1}{C_{1}}x_{1}^{2} + \frac{m_{1}}{C_{1}}x_{1}} \end{aligned}\]Equating the two yields the following relationships:

\[\begin{aligned} \frac{1}{C_{1}} &= \frac{1}{C_{0}} + \frac{1}{\sigma^{2}} \end{aligned}\]And:

\[\begin{aligned} m_{1} &= C_{1}\frac{1}{C_{0}}m_{0} + C_{1}\frac{1}{\sigma^{2}}y_{1}\\[10pt] &= \frac{\frac{1}{C_{0}}}{\frac{1}{C_{0}} + \frac{1}{\sigma^{2}}}m_{0} + \frac{\frac{1}{\sigma^{2}}}{\frac{1}{C_{0}} + \frac{1}{\sigma^{2}}}y_{1}\\[10pt] &= \frac{\sigma^{2}m_{0} + C_{0}y_{1}}{\sigma^{2} + C_{0}}\\[10pt] &= \frac{\sigma^{2} + C_{0} - C_{0}}{\sigma^{2} + C_{0}}m_{0} + \frac{C_{0}}{\sigma^{2} + C_{0}}y_{1}\\[10pt] &= m_{0} + \frac{C_{0}}{\sigma^{2} + C_{0}}(y_{1} - m_{0}) \end{aligned}\]The first equation shows that the precision (the inverse of the variance of the posterior distribution) is the sum of the precisions of the prior distribution and the precison of the likelihood. Such a fact implies that the precision or variance always increases or decreases through Bayesian updating because the certainty increases by considering observations. On the contrary, the second equation shows that the mean for the posterior distribution is a weighted sum of the means for prior distribution and the likelihood; each weight is proportional to each precision:

\[\begin{aligned} \frac{\sigma^{2} + C_{0} - C_{0}}{\sigma^{2} + C_{0}}m_{0} + \frac{C_{0}}{\sigma^{2} + C_{0}}y_{1} \end{aligned}\]From the state equation:

\[\begin{aligned} x_{t} &= x_{t-1} + v + w_{t}\\ \hat{C}_{2} &= \text{var}(x_{1}) + \text{var}(v) + \text{var}(w_{2})\\ &= C_{1} + 0 + \sigma_{w}^{2}\\ &= C_{1}+ \sigma_{w}^{2}\\[5pt] \hat{m}_{2} &= E[x_{1}] + E[v] + E[w_{2}]\\ &= m_{1} + v + 0\\ &= m_{1} + v \end{aligned}\]The above expression shows that the variance always increases with the state transition.

Recall that:

\[\begin{aligned} m_{0} &= \hat{m}_{1} \end{aligned}\]We confirm the Bayesian updating at time point t = 2. Since the mechanism of Bayesian updating remains the same as that at t = 1, we can obtain the result by replacing:

\[\begin{aligned} m_{1} &= \hat{m}_{2}\\ C_{1} &= \hat{C}_{2} \end{aligned}\]Specifically, the Bayesian updating at time point t = 2 is as follows:

\[\begin{aligned} \frac{1}{C_{2}} &= \frac{1}{C_{1}} + \frac{1}{\sigma^{2}}\\ &= \frac{1}{\hat{C}_{2}} + \frac{1}{\sigma^{2}}\\ &= \frac{1}{C_{1} + \sigma_{w}^{2}} + \frac{1}{\sigma^{2}}\\ \end{aligned}\] \[\begin{aligned} m_{2} &= m_{1} + \frac{C_{1}}{\sigma^{2} + C_{1}}(y_{2} - m_{1})\\ &= \hat{m}_{2} + \frac{\hat{C_{2}}}{\sigma^{2} + \hat{C}_{2}}(y_{2} - \hat{m_{2}})\\ &= (m_{1} + v) + \frac{C_{1} + \sigma_{w}^{2}}{\sigma^{2} + C_{1} + \sigma_{w}^{2}}[y_{2} - (m_{1} + v)] \end{aligned}\]The above result corresponds to a simple example of Kalman filtering. We subsequently supplement \(m_{2}\) for estimating the mean. This equation has a prediction-error correction structure: the mean \(\hat{m}_{2}\) for the one-step-ahead predictive distribution, \(p(x_{2}\mid y_{1})\) is corrected by observations \(y_{2}\). This structure is a general result obtained by the combination of state transition and Bayesian updating. In addition, the equation can take the form:

\[\begin{aligned} x_{1}y_{t} + (1 − x_{1})\hat{m}_{2} \end{aligned}\]Therefore, it also has a structure of an exponentially weighted moving average.

Formulation of Filtering Distribution

We now formulate the recursions for the marginal posterior distribution of the state.

For filtering, \(t'= t\) is set:

\[\begin{aligned} p(\textbf{x}_{t} | y_{1:t}) &= \int p(\textbf{x}_{0}, \textbf{x}_{0}, \cdots, \textbf{x}_{t}, \cdots | y_{1:t})\ d\textbf{x}_{\text{except }t} \end{aligned}\]Note the relation:

\[\begin{aligned} p(a, b | c) &= p(a | b, c)p(b |c) \end{aligned}\]We can show the derivation of the above relation. Using the product rule of probability to \(p(a, b, c)\) considering \(a, b\) as a single block:

\[\begin{aligned} p(a, b | c) &= p(a, b | c)p(c) \end{aligned}\]Now, using the product rule of probability to \(p(a, b, c)\) considering \(b, c\) as a single block:

\[\begin{aligned} p(a, b, c) &= p(a | b, c)p(b, c) \end{aligned}\]Apply the product rule to the last term \(p(b, c)\):

\[\begin{aligned} p(a, b, c) &= p(a | b, c)p(b | c)p(c) \end{aligned}\]Thus:

\[\begin{aligned} p(a, b, c) &= p(a | b, c)p(b | c)p(c)\\[10pt] p(a, b | c)p(c) &= p(a | b, c)p(b | c)p(c)\\[10pt] p(a, b | c) &= p(a | b, c)p(b | c) \end{aligned}\]The filtering distribution becomes:

\[\begin{aligned} p(\mathbf{x}_{t} | y_{1:t}) &= p(\mathbf{x}_{t} | y_{t}, y_{1:t-1})\\[10pt] &= \frac{p(\mathbf{x}_{t}, y_{t}|y_{1:t-1})}{p(y_{t}|y_{1:t-1})}\\[10pt] &= \frac{p(y_{t}, \mathbf{x}_{t}|y_{1:t-1})}{p(y_{t}|y_{1:t-1})} \end{aligned}\]Using the relation above:

\[\begin{aligned} \frac{p(y_{t}, \mathbf{x}_{t}|y_{1:t-1})}{p(y_{t}|y_{1:t-1})} &= \frac{p(y_{t}| \mathbf{x}_{t}, y_{1:t-1})p(\mathbf{x}_{t}|y_{1:t-1})}{p(y_{t}|y_{1:t-1})} \end{aligned}\]From the conditional independence:

\[\begin{aligned} \frac{p(y_{t}| \mathbf{x}_{t}, y_{1:t-1})p(\mathbf{x}_{t}|y_{1:t-1})}{p(y_{t}|y_{1:t-1})} &= \frac{p(y_{t}| \mathbf{x}_{t})p(\mathbf{x}_{t}|y_{1:t-1})}{p(y_{t}|y_{1:t-1})}\\ \end{aligned}\]Hence:

\[\begin{aligned} p(\mathbf{x}_{t} | y_{1:t}) &= \frac{p(y_{t}| \mathbf{x}_{t})p(\mathbf{x}_{t}|y_{1:t-1})}{p(y_{t}|y_{1:t-1})}\\ \end{aligned}\]Where \(p(\mathbf{x}_{t}\mid y_{1:t-1})\) is referred to as the one-step-ahead predictive distribution.

And \(p(y_{t}\mid y_{1:t-1})\) is known as the one-step-ahead predictive likelihood.

Expanding the one-step predictive distribution:

\[\begin{aligned} p(\textbf{x}_{t}|y_{1:t-1}) &= \int p(\textbf{x}_{t}, \textbf{x}_{t-1} | y_{1:t-1})\ d\textbf{x}_{t-1}\\ &= \int p(\textbf{x}_{t} | \textbf{x}_{t-1}, y_{1:t-1})p(\textbf{x}_{t-1}|y_{1:t-1})\ d\textbf{x}_{t-1}\\ &= \int p(\textbf{x}_{t} | \textbf{x}_{t-1})p(\textbf{x}_{t-1}|y_{1:t-1})\ d\textbf{x}_{t-1} \end{aligned}\]Expanding the one-step predictive likelihood:

\[\begin{aligned} p(y_{t}|y_{1:t-1}) &= \int p(y_{t}, \textbf{x}_{t} | y_{1:t-1})\ d\textbf{x}_{t}\\ &= \int p(y_{t}|\textbf{x}_{t}, y_{1:t-1})p(\textbf{x}_{t}|y_{1:t-1})\ d\textbf{x}_{t}\\ &= \int p(y_{t}|\textbf{x}_{t})p(\textbf{x}_{t}|y_{1:t-1})\ d\textbf{x}_{t} \end{aligned}\]The filtering distribution:

\[\begin{aligned} p(\textbf{x}_{t} | y_{1:t}) &= p(\textbf{x}_{t}|y_{1:t-1})\frac{p(y_{t}|\textbf{x}_{t})}{p(y_{t}|y_{1:t-1})} \end{aligned}\]The one-step-ahead predictive distribution is corrected based on the likelihood.

One-step-ahead predictive distribution:

\[\begin{aligned} p(\textbf{x}_{t} | y_{1:t-1}) &= \int p(\textbf{x}_{t}|\textbf{x}_{t-1})p(\textbf{x}_{t-1}|y_{1:t-1})\ d\textbf{x}_{t-1} \end{aligned}\]The filtering distribution one time before is transitioned in the time-forward direction based on the state equation.

One-step-ahead predictive likelihood:

\[\begin{aligned} p(y_{t}|y_{1:t-1}) &= \int p(y_{t}|\textbf{x}_{t})p(\textbf{x}_{t}|y_{1:t-1})\ d\textbf{x}_{t} \end{aligned}\]The one-step-ahead predictive distribution is converted in the domain of observations based on the observation equation; this likelihood also corresponds to the normalization factor for the numerators in the filtering distribution.

Filtering can be achieved by:

- obtaining the one-step-ahead predictive distribution through the state transition from the filtering distribution one time before

- correcting the obtained distribution based on the observations

Formulation of Predictive Distribution

We now consider k-steps-ahead prediction with \(t' = t + k\) in:

\[\begin{aligned} p(\textbf{x}_{t + k} | y_{1:t}) &= \int p(\textbf{x}_{0}, \textbf{x}_{0}, \cdots, \textbf{x}_{t+k}, \cdots | y_{1:t})\ d\textbf{x}_{\text{except }t + k} \end{aligned}\]The corresponding k-steps-ahead predictive distribution is:

\[\begin{aligned} p(\textbf{x}_{t+k} | y_{1:t}) &= \int p(\textbf{x}_{t+k}, \textbf{x}_{t+k-1}|y_{1:t})\ d\textbf{x}_{t+k-1}\\ &= \int p(\textbf{x}_{t+k} | \textbf{x}_{t+k-1}, y_{1:t})p(\textbf{x}_{t+k-1}|y_{1:t})\ d\textbf{x}_{t+k-1}\\ &= \int p(\textbf{x}_{t+k}|\textbf{x}_{t+k-1})p(\textbf{x}_{t+k-1}|y_{1:t})\ d\textbf{x}_{t+k-1} \end{aligned}\]The (k−1)-steps-ahead predictive distribution is transitioned in the forward time direction based on the state equation. The (k−2)-steps-ahead predictive distribution is transitioned in the forward time direction based on the state equation. And etc.

Starting from the one-step-ahead predictive distribution and repeating the transition in the forward time direction k − 1 times based on the state equation, we obtain the k-steps-ahead predictive distribution.

Recall that if the filtering distribution one time before is transitioned in the forward time direction based on the state equation, we can obtain the one-step-ahead predictive distribution, \(p(x_{t}\mid y_{1:t-1})\). Therefore, we can conclude as follows: Starting from the filtering distribution \(p(x_{t} \mid y_{1:t})\) and repeating the transition in the forward time direction k times based on the state equation, we obtain the k-steps-ahead predictive distribution.

Comparing the k-steps-ahead prediction with filtering, we can see that the state transition (horizontal arrow) is repeated without correction based on the observations (vertical arrow). Hence, while the accuracy of prediction gradually decreases as k increases, the most likely distributions continue to be generated based on the information through the filtering point. Incidentally, the state-space model applies this concept directly to the missing observations. Therefore, the missing observations in the state-space model are compensated for with prediction values. This is the background showing that it is easy to handle missing observations in the state-space model.

Formulation of the Smoothing Distribution

We now consider smoothing. This book focuses on the popular fixed-interval smoothing; Hence, we replace the \(t, t'\) with T and t, respectively in \(p(\textbf{x}_{t'} \mid y_{1:t})\). In this case, the smoothing distribution is \(p(\textbf{x}_{t} \mid y_{1:T})\).

To perform smoothing, we first assume that filtering has been completed through the time point T . In addition, we assume that sequential updating in the reverse time direction. The recursive formulas for the smoothing distribution require expressing the element about t + 1 clearly. Hence, we deform the smoothing distribution to ensure that the element \(x_{t+1}\) can be expressed explicitly as follows:

\[\begin{aligned} p(\textbf{x}_{t} | y_{1:T}) &= \int p(\textbf{x}_{t}, \textbf{x}_{t+1}|y_{1:T})\ d\textbf{x}_{t+1}\\ &= \int p(\textbf{x}_{t}| \textbf{x}_{t+1}, y_{1:T})p(\textbf{x}_{t+1}|y_{1:T})\ d\textbf{x}_{t+1}\\ &= \int p(\textbf{x}_{t+1}|y_{1:T})p(\textbf{x}_{t}| \textbf{x}_{t+1}, y_{1:T})\ d\textbf{x}_{t+1}\\ &= \int p(\textbf{x}_{t+1}|y_{1:T})p(\textbf{x}_{t}| \textbf{x}_{t+1}, y_{1:t})\ d\textbf{x}_{t+1}\\ &= \int p(\textbf{x}_{t+1}|y_{1:T})\frac{p(\textbf{x}_{t}, \textbf{x}_{t+1}|y_{1:t})}{p(\textbf{x}_{t+1}|y_{1:t})}\ d\textbf{x}_{t+1}\\[10pt] &= \int p(\textbf{x}_{t+1}|y_{1:T})\frac{p(\textbf{x}_{t+1}, \textbf{x}_{t}|y_{1:t})}{p(\textbf{x}_{t+1}|y_{1:t})}\ d\textbf{x}_{t+1}\\[10pt] &= \int p(\textbf{x}_{t+1}|y_{1:T})\frac{p(\textbf{x}_{t+1}| \textbf{x}_{t},y_{1:t})p(\textbf{x}_{t}|y_{1:t})}{p(\textbf{x}_{t+1}|y_{1:t})}\ d\textbf{x}_{t+1} \end{aligned}\]\(p(\textbf{x}_{t} \mid y_{1:t})\) in the numerator does not depend on \(\textbf{x}_{t+1}\):

\[\begin{aligned} \int p(\textbf{x}_{t+1}|y_{1:T})\frac{p(\textbf{x}_{t+1}| \textbf{x}_{t},y_{1:t})p(\textbf{x}_{t}|y_{1:t})}{p(\textbf{x}_{t+1}|y_{1:t})}\ d\textbf{x}_{t+1} &= p(\textbf{x}_{t}|y_{1:t})\int p(\textbf{x}_{t+1}|y_{1:T})\frac{p(\textbf{x}_{t+1}| \textbf{x}_{t},y_{1:t})}{p(\textbf{x}_{t+1}|y_{1:t})}\ d\textbf{x}_{t+1}\\[10pt] &= p(\textbf{x}_{t}|y_{1:t})\int p(\textbf{x}_{t+1}|y_{1:T})\frac{p(\textbf{x}_{t+1}| \textbf{x}_{t})}{p(\textbf{x}_{t+1}|y_{1:t})}\ d\textbf{x}_{t+1} \end{aligned}\]The filtering distribution is corrected based on the smoothing distribution at one time ahead. Hence, the following relation continues to hold in a chain:

- Smoothing distribution at time point T − 1: the filtering distribution at time point T − 1 is corrected based on the smoothing distribution at time point T

- Smoothing distribution at time point T − 2: the filtering distribution at time point T − 2 is corrected based on the smoothing distribution at time point T − 1

- …

Likelihood and Model Selection in the State-Space Model

The likelihood for an entire time series can be decomposed as follows:

\[\begin{aligned} p(y_{1}, y_{2}, \cdots, y_{T};\boldsymbol{\theta}) &= p(y_{1:T};\boldsymbol{\theta})\\ &= p(y_{T}, y_{1:T-1};\boldsymbol{\theta})\\ &= p(y_{T}|y_{1:T-1};\boldsymbol{\theta})p(y_{1:T-1};\boldsymbol{\theta})\\ &\ \ \vdots\\ &= \prod_{t=1}^{T}p(y_{t}|y_{1:t-1};\boldsymbol{\theta}) \end{aligned}\]Where \(y_{1:0}\) does not exist and is assumed to be the empty set \(\emptyset\):

\[\begin{aligned} p(y_{1}|y_{1:0}; \boldsymbol{\theta}) &= p(y_{1}|\emptyset; \boldsymbol{\theta})\\ &= p(y_{1};\boldsymbol{\theta}) \end{aligned}\]Taking the logarithm of the likelihood yields the log-likelihood as:

\[\begin{aligned} l(\boldsymbol{\theta}) &= \log p(y_{1:T};\boldsymbol{\theta})\\ &= \sum_{t=1}^{T}\log p(y_{t}|y_{1:t-1};\boldsymbol{\theta}) \end{aligned}\]We see that the log-likelihood for the entire time series can be represented as the sum of the logarithms of the one-step-ahead predictive likelihoods. Recalling that the filtering recursion derives the one-step-ahead predictive likelihood during its processing, we see that the likelihood can be calculated efficiently through filtering.

When Parameters are Not Regarded as Random Variables

The approach is based on frequentism. In this case, before estimating the time series, we typically specify the point estimates for the parameter using the maximum likelihood method.

A maximum likelihood estimator \(\hat{\boldsymbol{\theta}}\) for the parameters in the state-space model is expressed as follows:

\[\begin{aligned} \hat{\boldsymbol{\theta}} &= \underset{\boldsymbol{\theta}}{\text{argmax }} l(\boldsymbol{\theta})\\ &= \underset{\boldsymbol{\theta}}{\text{argmax }} \log p(y_{1:T};\boldsymbol{\theta})\\ &= \underset{\boldsymbol{\theta}}{\text{argmax }} \sum_{t=1}^{T}\log p(y_{t}|y_{1:t-1};\boldsymbol{\theta}) \end{aligned}\]We obtain the maximum likelihood estimator for parameters based on a particular number of observations. This is achieved through several methods: a direct search for different values of parameters, quasi-Newton method, when a numerical optimization is applicable, and the expectation maximization (EM) algorithm.

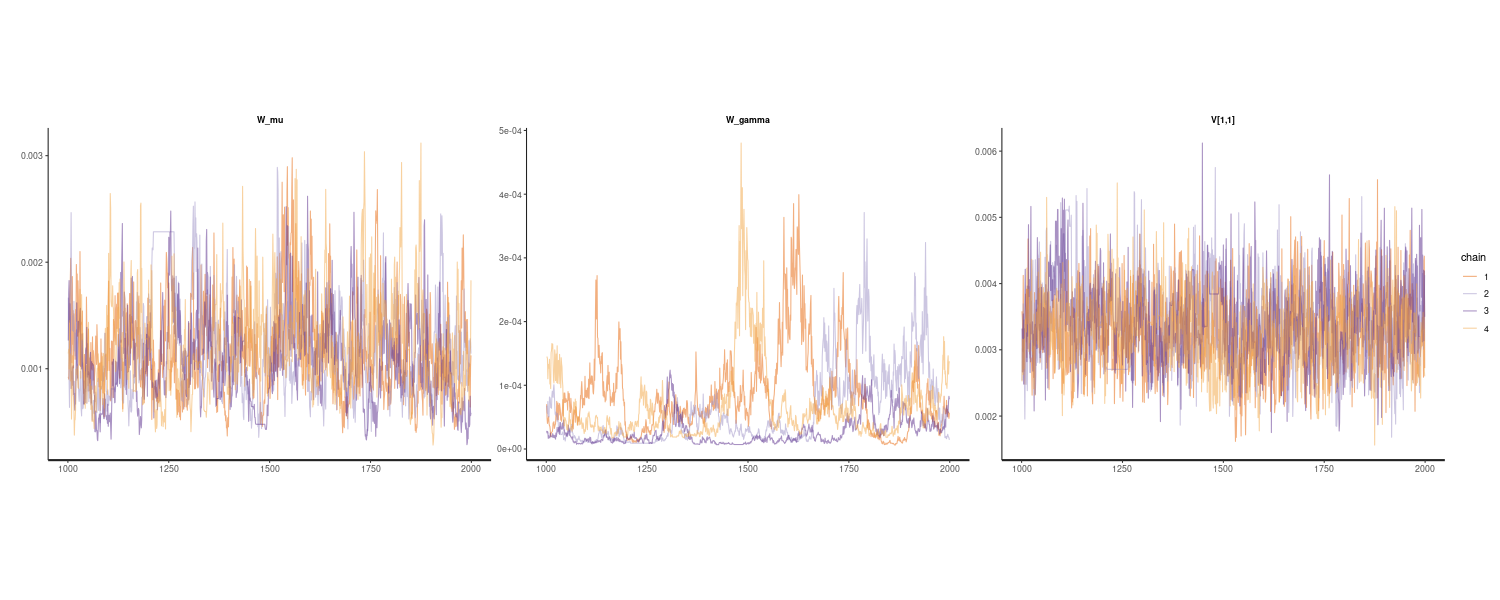

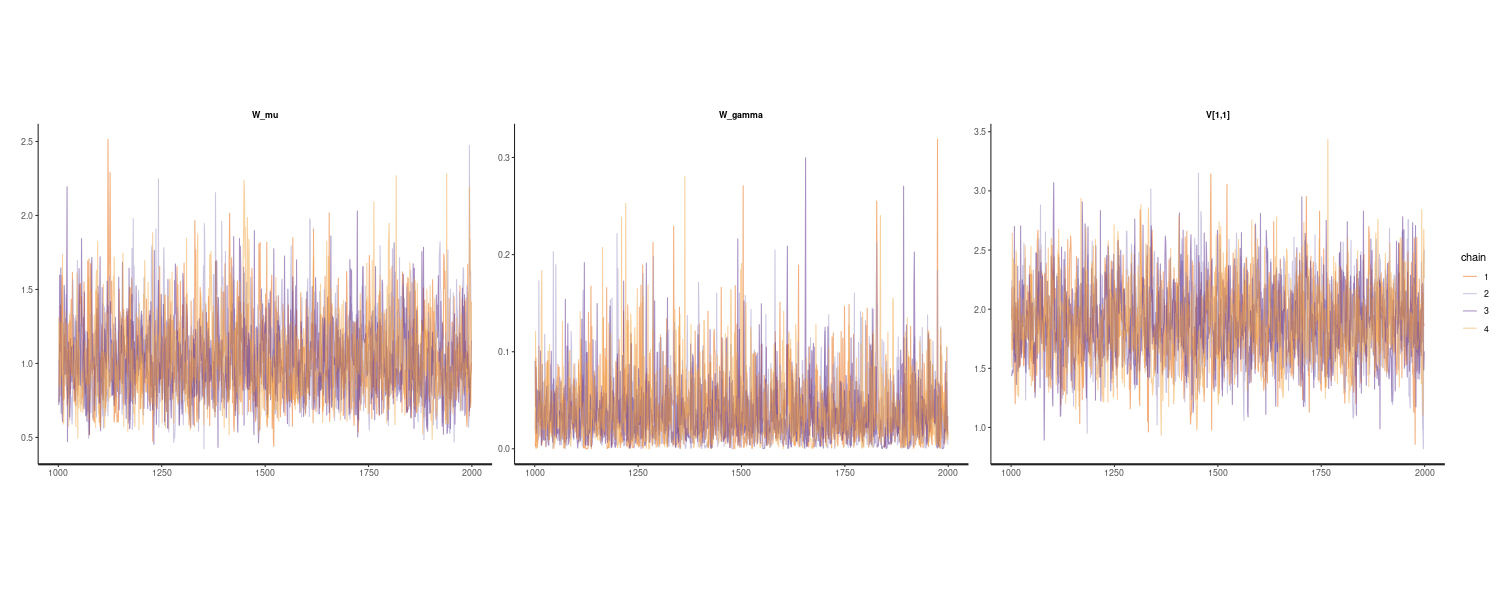

When Parameters are Regarded as Random Variables

The approach is based on Bayesianism. Unlike the case of frequentism, treating parameters as random variables makes it possible to consider their uncertainties more appropriately. In this case, for the estimation of a time series, Bayesian estimation is applied to both parameters and state.

We treat the augmented \(\text{state} = \{\textbf{x}, \boldsymbol{\theta}\}\) comprising the original state \(\textbf{x}\) and added parameters \(\boldsymbol{\theta}\). Thus, the joint distribution of the state-space model is extended from as follows:

\[\begin{aligned} p(\textbf{x}_{0:T}, y_{1:T}, \boldsymbol{\theta}) &= p(\textbf{x}_{0}, \boldsymbol{\theta})\prod_{t=1}^{T}p(y_{t}|\textbf{x}_{t}, \boldsymbol{\theta})p(\textbf{x}_{t}|\textbf{x}_{t-1}, \boldsymbol{\theta})\\ &= p(\textbf{x}_{0}| \boldsymbol{\theta})p(\boldsymbol{\theta})\prod_{t=1}^{T}p(y_{t}|\textbf{x}_{t}, \boldsymbol{\theta})p(\textbf{x}_{t}|\textbf{x}_{t-1}, \boldsymbol{\theta})\\ \end{aligned}\]And similar the posterior distribution of the state is also extended:

\[\begin{aligned} p(\textbf{x}_{0, 1, \cdots, t', \cdots}, \boldsymbol{\theta}|y_{1:t}) &= p(\textbf{x}_{0, 1, \cdots, t', \cdots}| \boldsymbol{\theta}, y_{1:t})p(\boldsymbol{\theta}|y_{1:t})\\ p(\textbf{x}_{t'}, \boldsymbol{\theta}|y_{1:t}) &= p(\textbf{x}_{t'}| \boldsymbol{\theta}, y_{1:t})p(\boldsymbol{\theta}|y_{1:t}) \end{aligned}\]If only the parameter is of interest, then we marginalize out the state in the above equations to obtain the marginal posterior distribution:

\[\begin{aligned} p(\boldsymbol{\theta} | y_{1:t}) \end{aligned}\]Furthermore deriving the point estimator of the parameter, we can obtain it by maximizing this marginal posterior distribution as follows:

\[\begin{aligned} \underset{\boldsymbol{\theta}}{\text{argmax }}p(\boldsymbol{\theta} | y_{1:t}) &= \underset{\boldsymbol{\theta}}{\text{argmax }}\frac{p(y_{1:t}|\boldsymbol{\theta})p(\boldsymbol{\theta})}{p(y_{1:t})}\\[10pt] &\propto p(y_{1:t}|\boldsymbol{\theta})p(\boldsymbol{\theta}) \end{aligned}\]The above expression is a maximum a posteriori probability (MAP) estimation.

Taking the log:

\[\begin{aligned} \underset{\boldsymbol{\theta}}{\text{argmax }}\log p(\boldsymbol{\theta} | y_{1:t}) &\propto \underset{\boldsymbol{\theta}}{\text{argmax }}[\log p(y_{1:t}|\boldsymbol{\theta}) + \log p(\boldsymbol{\theta})] \end{aligned}\]Comparing MLE with MAP, we see that the MAP estimation is achieved by adding the correction with the prior distribution \(p(\boldsymbol{\theta})\) to the maximum likelihood estimation. On the contrary, it can be said that the MAP estimation without correction with the prior distribution is equivalent to the maximum likelihood estimation.

Summary of State-Space Equations

Conditional Independence on State:

\[\begin{aligned} p(\textbf{x}_{t}|\textbf{x}_{0:t-1}, y_{1:t-1}) &= p(\mathbf{x}_{t}|\textbf{x}_{t-1}) \end{aligned}\]Conditional Independence on Observations

\[\begin{aligned} p(y_{t}|\textbf{x}_{0:t}, y_{1:t-1}) &= p(y_{t}|\textbf{x}_{t}) \end{aligned}\]Joint Distribution of the State-Space Model

\[\begin{aligned} p(\textbf{x}_{0:T}, y_{1:T}) &= p(\textbf{x}_{0})\prod_{t=1}^{T}p(y_{t}|\textbf{x}_{t})p(\textbf{x}_{t}|\textbf{x}_{t-1}) \end{aligned}\]Posterior Distribution of the State

\[\begin{aligned} p(\textbf{x}_{0}, \textbf{x}_{0}, \cdots, \textbf{x}_{t'}, \cdots | y_{1:t}) \end{aligned}\]Marginal Posterior Distribution of the State

\[\begin{aligned} p(\textbf{x}_{t'} | y_{1:t}) &= \int p(\textbf{x}_{0}, \textbf{x}_{0}, \cdots, \textbf{x}_{t'}, \cdots | y_{1:t})\ d\textbf{x}_{\text{except }t'} \end{aligned}\]Prior, Likelihood and Posterior Distribution

\[\begin{aligned} p(\textbf{x}_{t})\\ p(y_{t} | \textbf{x}_{t})\\ p(\textbf{x}_{t} | y_{t}) \end{aligned}\]Filtering Distribution

\[\begin{aligned} p(\textbf{x}_{t} | y_{1:t}) &= p(\textbf{x}_{t}|y_{1:t-1})\frac{p(y_{t}|\textbf{x}_{t})}{p(y_{t}|y_{1:t-1})} \end{aligned}\]One-Step-Ahead Predictive Distribution

\[\begin{aligned} p(\textbf{x}_{t} | y_{1:t-1}) &= \int p(\textbf{x}_{t}|\textbf{x}_{t-1})p(\textbf{x}_{t-1}|y_{1:t-1})\ d\textbf{x}_{t-1} \end{aligned}\]One-Step-Ahead Predictive Likelihood

\[\begin{aligned} p(y_{t}|y_{1:t-1}) &= \int p(y_{t}|\textbf{x}_{t})p(\textbf{x}_{t}|y_{1:t-1})\ d\textbf{x}_{t} \end{aligned}\]k-Steps-Ahead Predictive Distribution

\[\begin{aligned} p(\textbf{x}_{t+k} | y_{1:t}) &= \int p(\textbf{x}_{t+k}|\textbf{x}_{t+k-1})p(\textbf{x}_{t+k-1}|y_{1:t})\ d\textbf{x}_{t+k-1} \end{aligned}\]Smoothing Distribution

\[\begin{aligned} p(\textbf{x}_{t} | y_{1:T}) &= p(\textbf{x}_{t}|y_{1:t})\int p(\textbf{x}_{t+1}|y_{1:T})\frac{p(\textbf{x}_{t+1}| \textbf{x}_{t})}{p(\textbf{x}_{t+1}|y_{1:t})}\ d\textbf{x}_{t+1} \end{aligned}\]Kalman Filter

Since the Kalman filter performs only linear operations, every distribution of interest becomes a normal distribution from the reproducibility for normal distributions.

Recall the linear Gaussian state space model:

\[\begin{aligned} \textbf{x}_{t} &= \textbf{G}_{t}\textbf{x}_{t-1} + \textbf{w}_{t} \end{aligned}\] \[\begin{aligned} y_{t} &= \textbf{F}_{t}\textbf{x}_{t} + v_{t} \end{aligned}\] \[\begin{aligned} \textbf{w}_{t} &\sim N(\textbf{0}, \textbf{W}_{t})\\ v_{t} &\sim N(0, V_{t}) \end{aligned}\]Regarding the prior distribution, we also assume that:

\[\begin{aligned} \textbf{x}_{0} \sim N(\textbf{m}_{0}, \textbf{C}_{0}) \end{aligned}\]The parameters in the linear Gaussian state space model:

\[\begin{aligned} \boldsymbol{\theta} &= \{\textbf{G}_{t}, \textbf{F}_{t}, \textbf{W}_{t}, V_{t}, \boldsymbol{m}_{0}, \textbf{C}_{0}\} \end{aligned}\]The filtering distribution, one-step-ahead predictive distribution, and one-step-ahead predictive likelihood are all normal distributions in the Kalman filter. We define them as follows:

Filtering Distribution:

\[\begin{aligned} N(\textbf{m}_{t}, \textbf{C}_{t}) \end{aligned}\]One-step-ahead Predictive Distribution:

\[\begin{aligned} N(\textbf{a}_{t}, \textbf{R}_{t}) \end{aligned}\]One-step-ahead Predictive Likelihood:

\[\begin{aligned} N(f_{t}, Q_{t}) \end{aligned}\]Filtering distribution at time point \(t-1\):

\[\begin{aligned} N(\textbf{m}_{t-1}, \textbf{C}_{t-1}) \end{aligned}\]Update procedure at time point \(t\)

One-step-ahead predictive distribution:

\[\begin{aligned} \textbf{a}_{t} &\leftarrow \textbf{G}_{t}\textbf{m}_{t-1}\\ \end{aligned}\] \[\begin{aligned} \textbf{R}_{t} &\leftarrow \textbf{G}_{t}\textbf{C}_{t-1}\textbf{G}_{t}^{T} + \textbf{W}_{t} \end{aligned}\]One-step-ahead predictive likelihood:

\[\begin{aligned} f_{t} &\leftarrow \textbf{F}_{t}\textbf{a}_{t} \end{aligned}\] \[\begin{aligned} Q_{t} &\leftarrow \textbf{F}_{t}\textbf{R}_{t}\textbf{F}_{t}^{T} + V_{t} \end{aligned}\]Kalman gain:

\[\begin{aligned} \textbf{K}_{t} &\leftarrow \textbf{R}_{t}\textbf{F}_{t}^{T}Q_{t}^{-1} \end{aligned}\]State update:

\[\begin{aligned} \textbf{m}_{t} &\leftarrow \textbf{a}_{t} + \textbf{K}_{t}(y_{t} - f_{t})\\ \textbf{C}_{t} &\leftarrow [\textbf{I} - \textbf{K}_{t}\textbf{F}_{t}]\textbf{R}_{t} \end{aligned}\]Filtering distribution at time point t:

\[\begin{aligned} N(\textbf{m}_{t}, \textbf{C}_{t}) \end{aligned}\]The filtering distribution is obtained by correcting the one-step-ahead predictive distribution. The Kalman gain is related to the degree of this correction. Specifically, if we define the prediction error \(e_{t} = y_{t} − f_{t}\) as the difference between the observations and mean of the one-step-ahead predictive likelihood, the gain corresponds to the weight of the extent to which this prediction error is reflected in the correction. The ratio of \(\textbf{W}_{t}\) to \(V_{t}\) (also known as the signal-to-noise ratio) has a dominant influence on the Kalman gain.

Example: Nile

We consider a simple example wherein:

\[\begin{aligned} p &= 1\\ \textbf{G}_{t} &= \begin{bmatrix} 1 \end{bmatrix}\\ \textbf{F}_{t} &= \begin{bmatrix} 1 \end{bmatrix} \end{aligned}\]The dimension of the state \(p = 1\) implies that all the vectors and matrices of interest become scalar. This model is referred to as the local-level model.

The estimation of the mean is equivalent to the exponentially weighted moving average because:

\[\begin{aligned} m_{t} &= m_{t-1} + K_{t}(y_{t} - m_{t-1})\\ &= K_{t}y_{t} + (1 - K_{t})m_{t-1} \end{aligned}\]The Kalman gain converges to a time-invariant constant in many models for stationary time series.

In the following R code, we define the function Kalman_filtering() to peform Kalman filtering. Note that all the parameters of the linear Gaussian state space model are specified.

dat <- as_tsibble(Nile)

dat <- dat |>

rename(

y = value

)

# Function performing Kalman filtering for one time point

Kalman_filtering <- function(dat, m_t_minus_1, C_t_minus_1, t) {

# One-step-ahead predictive distribution

a_t <- G_t %*% m_t_minus_1

R_t <- G_t %*% C_t_minus_1 %*% t(G_t) + W_t

# One-step-ahead predictive likelihood

f_t <- F_t %*% a_t

Q_t <- F_t %*% R_t %*% t(F_t) + V_t

# Kalman gain

K_t <- R_t %*% t(F_t) %*% solve(Q_t)

# State update

m_t <- a_t + K_t %*% (dat$y[t] - f_t)

C_t <- (diag(nrow(R_t)) - K_t %*% F_t) %*% R_t

dat$m[t] <- m_t

dat$C[t] <- C_t

dat$a[t] <- a_t

dat$R[t] <- R_t

dat

}

G_t <- matrix(1, ncol = 1, nrow = 1)

W_t <- matrix(exp(7.29), ncol = 1, nrow = 1)

F_t <- matrix(1, ncol = 1, nrow = 1)

V_t <- matrix(exp(9.62), ncol = 1, nrow = 1)

m0 <- matrix(0, ncol = 1, nrow = 1)

C0 <- matrix(1e+7, ncol = 1, nrow = 1)

# Allocate memory for state (mean and covariance)

dat <- dat |>

mutate(

m = NA_real_,

C = NA_real_,

a = NA_real_,

R = NA_real_,

)

# Time point: t = 1

dat <- Kalman_filtering(dat, m0, C0, t = 1)

# Time point: t = 2 to t_max

for (t in 2:nrow(dat)) {

dat <- Kalman_filtering(

dat,

dat$m[t - 1],

dat$C[t - 1],

t = t

)

}

# Find 2.5% and 97.5% values for 95% intervals

dat <- dat |>

mutate(

lower = m + qnorm(0.025, sd = sqrt(C)),

upper = m + qnorm(0.975, sd = sqrt(C))

)

Furthermore, we derive the likelihood in the linear Gaussian state-space model. The log-likelihood for the entire time series can be obtained from the one-step-ahead predictive likelihood. Recalling that the one-step-ahead predictive likelihood in the linear Gaussian state-space model is a normal distribution with mean \(f_{t}\) and variance \(Q_{t}\) yields:

\[\begin{aligned} l(\boldsymbol{\theta}) &= \sum_{t=1}^{T}\log p(y_{t}|y_{1:t-1}; \boldsymbol{\theta})\\ &= -\frac{1}{2}\sum_{t=1}^{T}\log|Q_{t}| - \frac{1}{2}\sum_{t=1}^{T}\frac{(y_{t} - f_{t})^{2}}{Q_{t}} \end{aligned}\]Kalman Prediction

The k-steps-ahead predictive distribution in the linear Gaussian state-space model is also the normal distribution. Hence, we define it as follows:

\[\begin{aligned} N(\textbf{a}_{t}(k), \textbf{R}_{t}(k)) \end{aligned}\]The procedure for obtaining the k-steps-ahead predictive distribution at time point \(t + k\) from that at time point \(t + (k − 1)\) is as follows:

At time point \(t + (k-1)\):

\[\begin{aligned} N(\textbf{a}_{t}(k -1), \textbf{R}_{t}(k-1)) \end{aligned}\]Updating at time point \(t+k\):

\[\begin{aligned} \textbf{a}_{t}(k) &\leftarrow \textbf{G}_{t+k}\textbf{a}_{t}(k-1) \end{aligned}\] \[\begin{aligned} \textbf{R}_{t}(k) &\leftarrow \textbf{G}_{t+k}\textbf{R}_{t}(k-1)\textbf{G}_{t+k}^{T} + \textbf{W}_{t+k} \end{aligned}\]At time point \(t + k\):

\[\begin{aligned} N(\textbf{a}_{t}(k), \textbf{R}_{t}(k)) \end{aligned}\]The 0-steps-ahead predictive distribution at time t corresponds to the filtering distribution at time t. The k− 1-steps-ahead predictive distribution is transitioned based on the state equation.

Even if there are missing observations, we can proceed with the filtering procedure by assuming that Kalman gain = O. We eventually regard the one-step-ahead predictive distribution as a filtering distribution. In addition, according to the above property, if we intentionally add multiple missing observations at the end of data, the filtering of such data results in the long-term prediction.

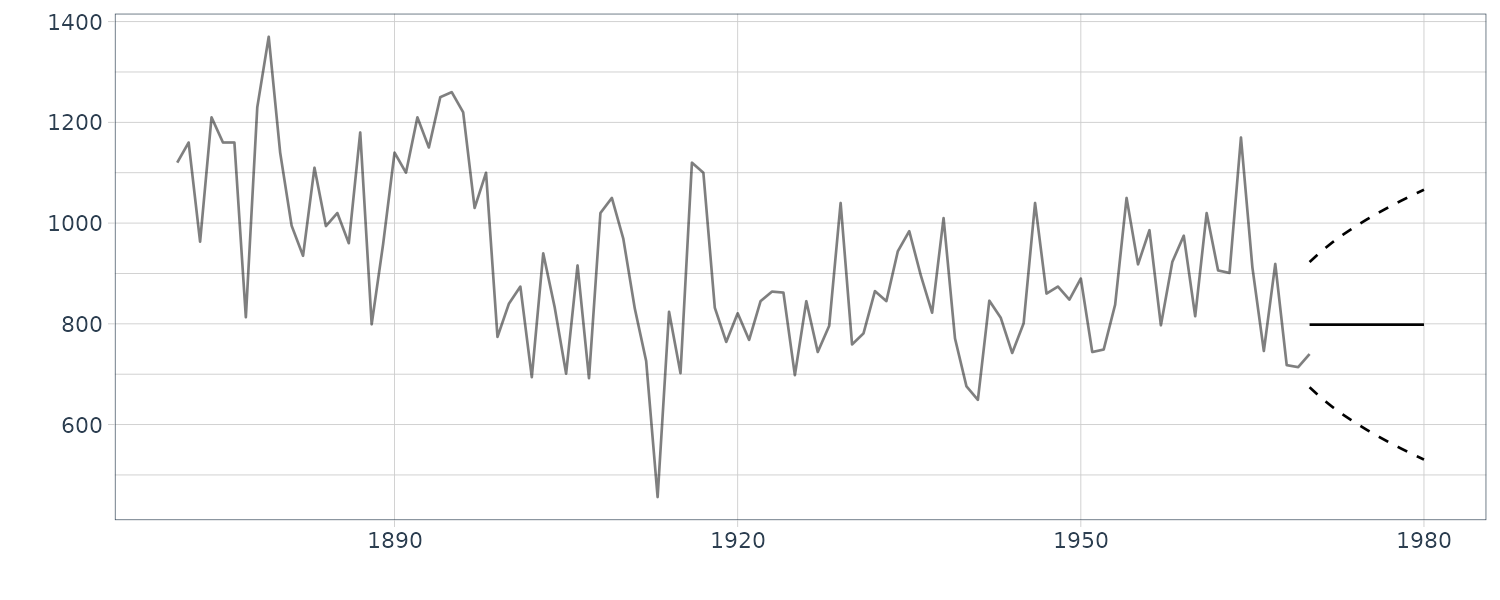

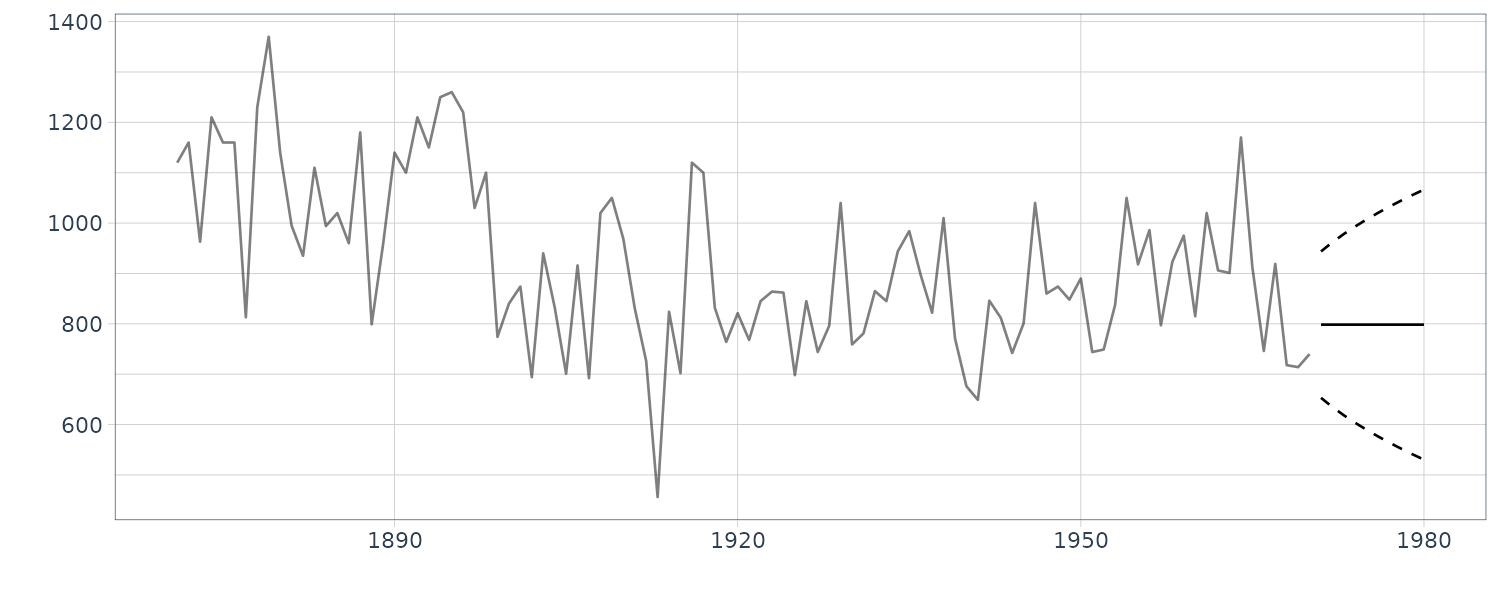

Doing this in R:

nAhead <- 10

dat <- bind_rows(dat, new_data(dat, n = nAhead))

# Function for one-step-ahead Kalman prediction (k = 1)

Kalman_prediction <- function(a_t0, R_t0) {

# One-step-ahead predictive distribution

a_t1 <- G_t_plus_1 %*% a_t0

R_t1 <- G_t_plus_1 %*% R_t0 %*% t(G_t_plus_1) + W_t_plus_1

return(

list(

a = a_t1,

R = R_t1

)

)

}

# Set parameters of the linear Gaussian state space (time-invariant)

G_t_plus_1 <- G_t

W_t_plus_1 <- W_t

# k = 0

dat$a[t + 0] <- dat$m[t]

dat$R[t + 0] <- dat$C[t]

for (k in 1:nAhead) {

KP <- Kalman_prediction(dat$a[t + k - 1], dat$R[t + k - 1])

dat$a[t + k] <- KP$a

dat$R[t + k] <- KP$R

}

# Ignore the display of following codes

# Find 2.5% and 97.5% values for 95% intervals

dat <- dat |>

mutate(

pred_lower = a + qnorm(0.025, sd = sqrt(R)),

pred_upper = a + qnorm(0.975, sd = sqrt(R))

)

dat |>

autoplot(y, alpha = 0.5) +

geom_line(

aes(y = a),

data = dat |> slice(t:n())

) +

geom_line(

aes(y = pred_upper),

linetype = "dashed",

data = dat |> slice(t:n())

) +

geom_line(

aes(y = pred_lower),

linetype = "dashed",

data = dat |> slice(t:n())

) +

theme_tq()

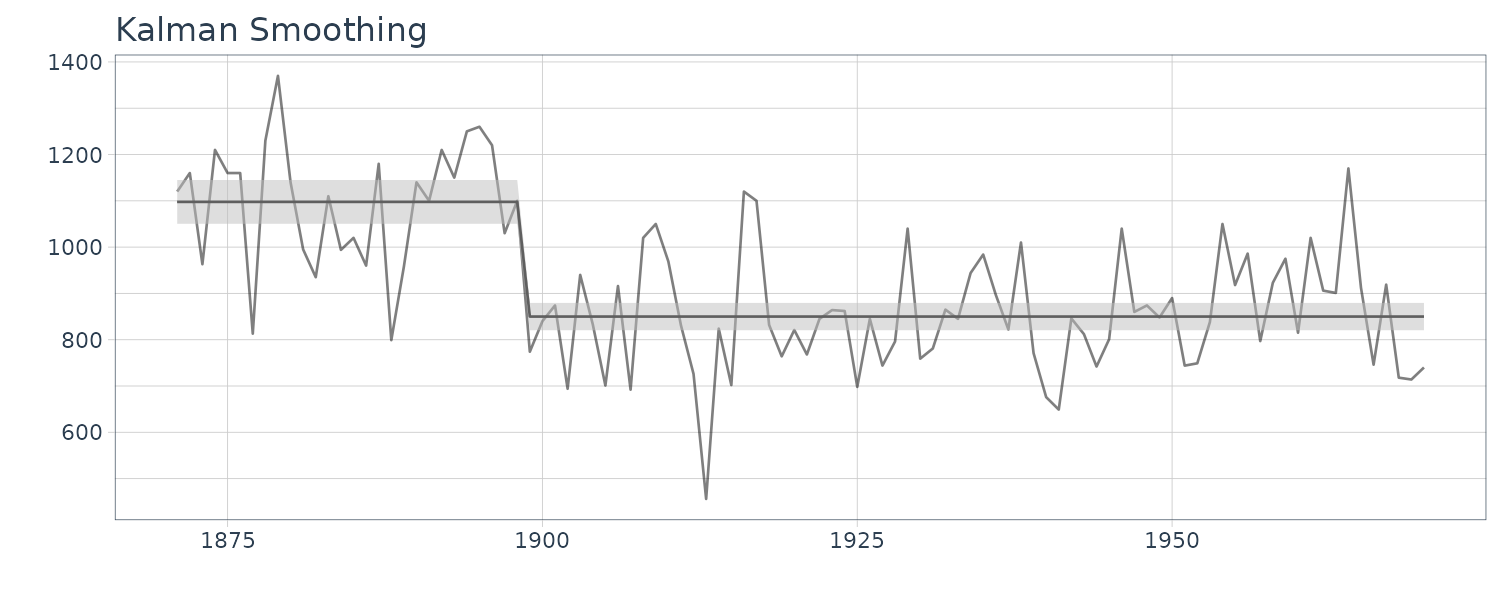

Kalman Smoothing

This book focuses on fixed-interval smoothing. Hence, we assume that Kalman filtering through time T has been completed before smoothing.

The smoothing distribution in the linear Gaussian state-space model is also the normal distribution. We define it as follows:

\[\begin{aligned} N(\textbf{s}_{t}, \textbf{S}_{t}) \end{aligned}\]The procedure for obtaining the smoothing distribution at time t from that at time \(t + 1\) is as follows:

Smoothing distribution at time \(t + 1\):

\[\begin{aligned} N(\textbf{s}_{t+1}, \textbf{S}_{t+1}) \end{aligned}\]Update procedure at time \(t\):

Smoothing gain:

\[\begin{aligned} \textbf{A}_{t} \leftarrow \textbf{C}_{t}\textbf{G}_{t+1}^{T}\textbf{R}_{t+1}^{-1} \end{aligned}\]State update:

\[\begin{aligned} \textbf{s}_{t} \leftarrow \textbf{m}_{t} + \textbf{A}_{t}[\textbf{s}_{t+1} - \textbf{a}_{t+1}]\\ \textbf{S}_{t} \leftarrow \textbf{C}_{t} + \textbf{A}_{t}[\textbf{S}_{t+1} - \textbf{R}_{t+1}]\textbf{A}_{t}^{T} \end{aligned}\]Smoothing distribution at time \(t\):

\[\begin{aligned} N(\textbf{s}_{t}, \textbf{S}_{t}) \end{aligned}\]Repeating the procedure of the above RTS (Rauch–Tung–Striebel) algorithm from \(t = T − 1\) in the reverse time direction, we obtain the smoothing distribution at every time point. Note that the smoothing distribution at time T corresponds to the filtering distribution at time T. The filtering distribution is corrected based on the smoothing distribution at one time point ahead.

t_max <- 100

# Function performing Kalman smoothing for one time point

Kalman_smoothing <- function(dat, s_t_plus_1, S_t_plus_1, t) {

# Smoothing gain

A_t <- dat$C[t] %*% t(G_t_plus_1) %*% solve(dat$R[t + 1])

# State update

s_t <- dat$m[t] + A_t %*% (s_t_plus_1 - dat$a[t + 1])

S_t <- dat$C[t] + A_t %*% (S_t_plus_1 - dat$R[t + 1]) %*% t(A_t)

# Return the mean and variance of the smoothing distribution

return(

list(

s = s_t,

S = S_t

)

)

}

# Find the mean and variance of the smoothing distribution

# Allocate memory for state (mean and covariance)

dat <- dat |>

mutate(

s = NA_real_,

S = NA_real_

)

# Time point: t = t_max

dat$s[t_max] <- dat$m[t_max]

dat$S[t_max] <- dat$C[t_max]

# Time point: t = t_max - 1 to 1

for (t in (t_max - 1):1) {

KS <- Kalman_smoothing(dat, dat$s[t + 1], dat$S[t + 1], t = t)

dat$s[t] <- KS$s

dat$S[t] <- KS$S

}

# Ignore the display of following codes

# Find 2.5% and 97.5% values for 95% intervals

dat <- dat |>

mutate(

smooth_lower = s + qnorm(0.025, sd = sqrt(S)),

smooth_upper = s + qnorm(0.975, sd = sqrt(S))

)

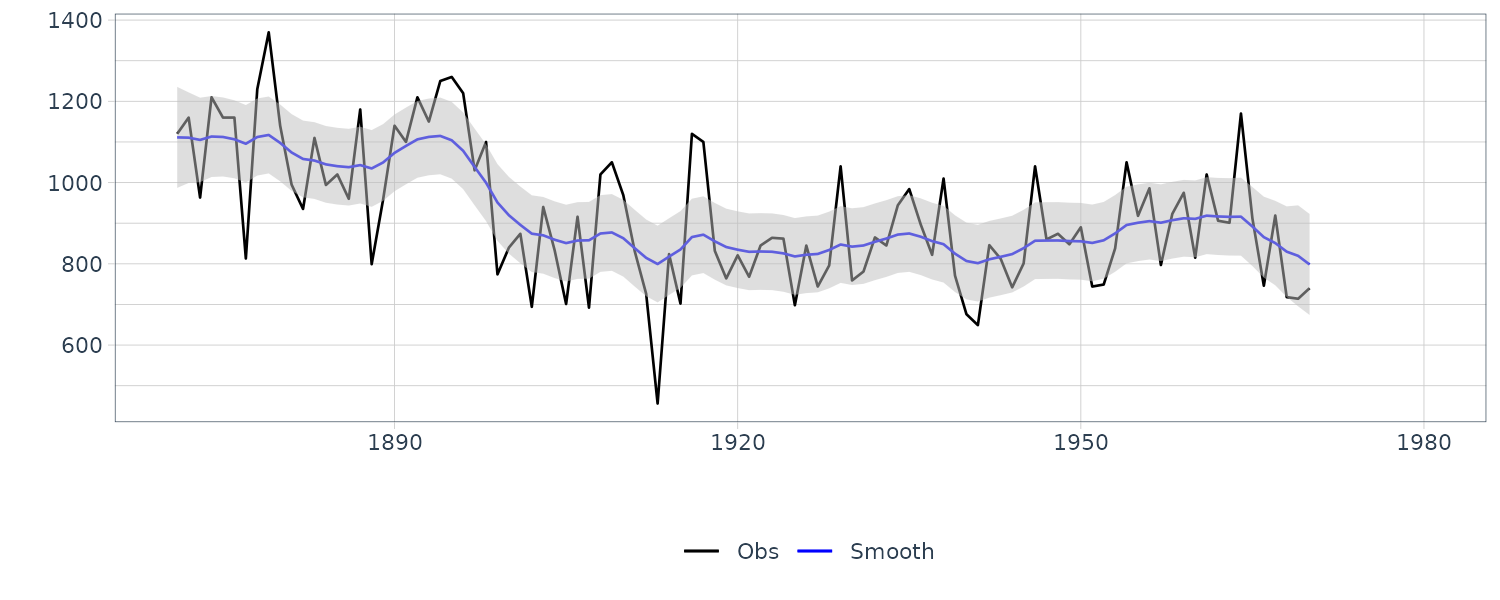

dat |>

autoplot(y, aes(color = "Obs")) +

geom_line(aes(y = s, color = "Smooth")) +

geom_ribbon(

aes(

ymax = smooth_upper,

ymin = smooth_lower

),

fill = "gray",

alpha = 0.5

) +

scale_color_manual(

name = "",

breaks = c("Obs", "Smooth"),

values = c("Obs" = "black", "Smooth" = "blue")

) +

xlab("") +

ylab("") +

theme_tq()

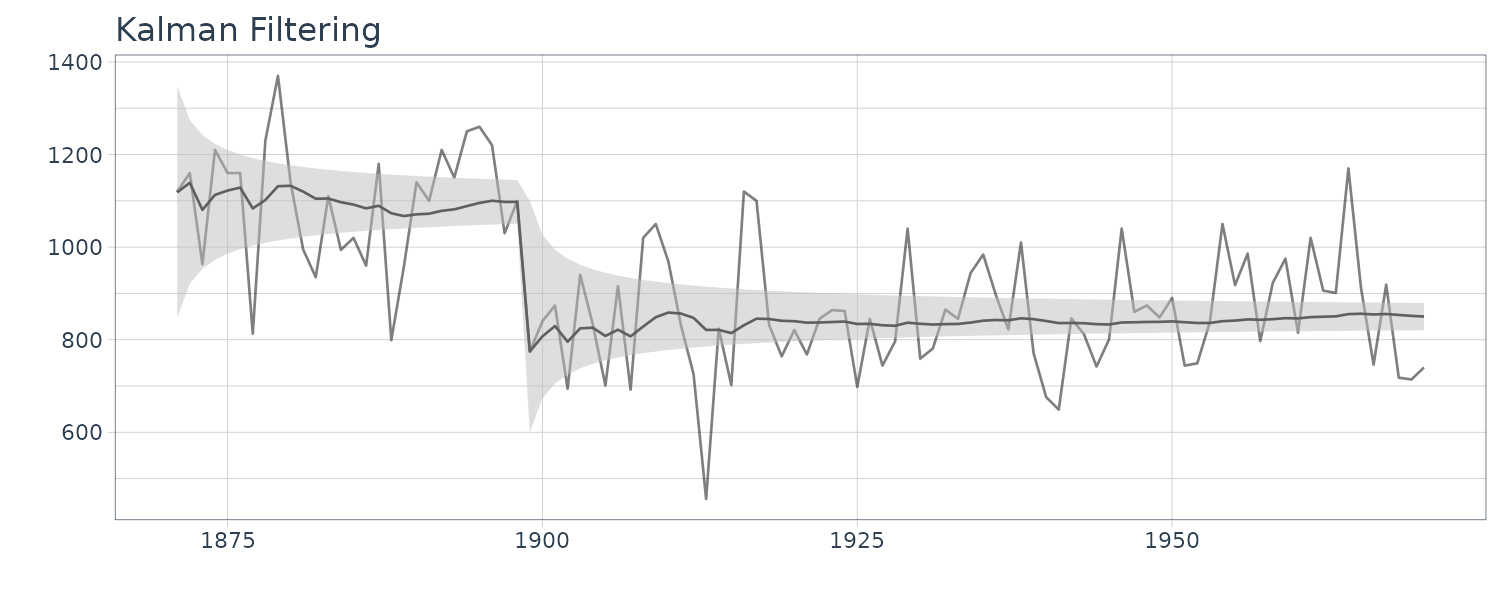

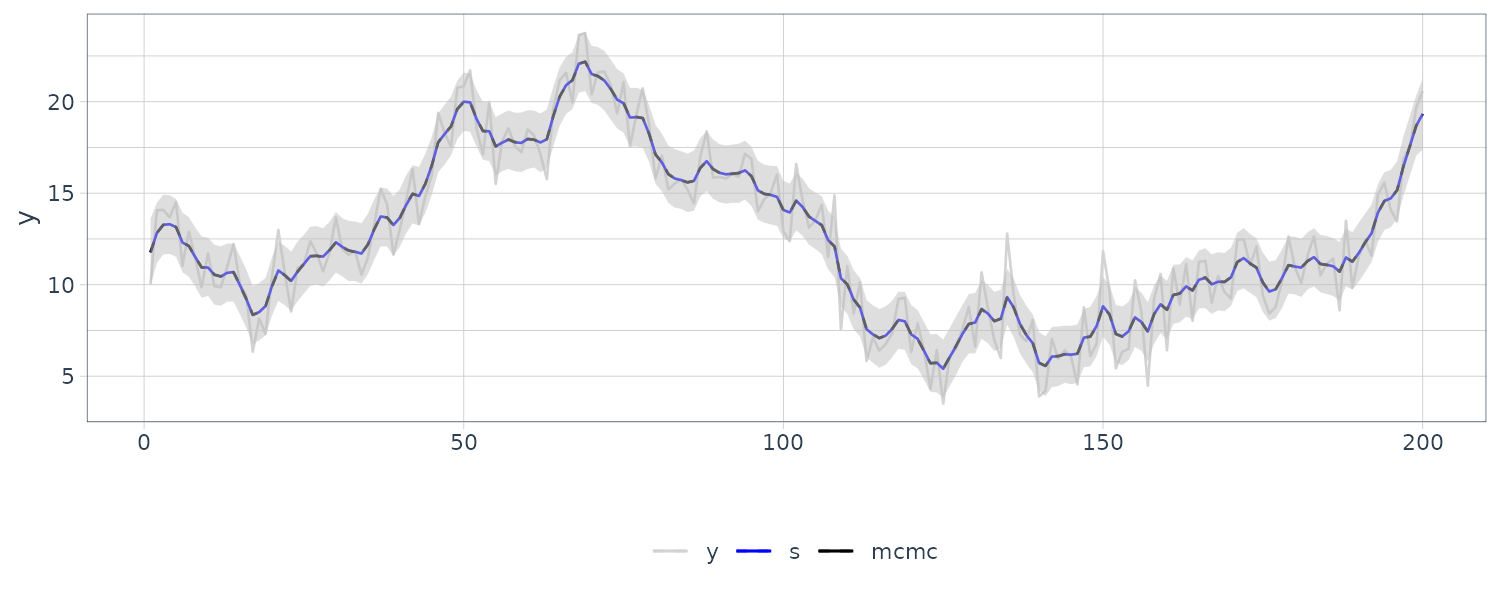

The code assumes that Kalman filtering has been completed. In the above code, we first define the function Kalman_smoothing(), which performs Kalman smoothing for one time point. For smoothing, we then use the function Kalman_smoothing() for every time point to obtain the mean and variance of the smoothing distribution.

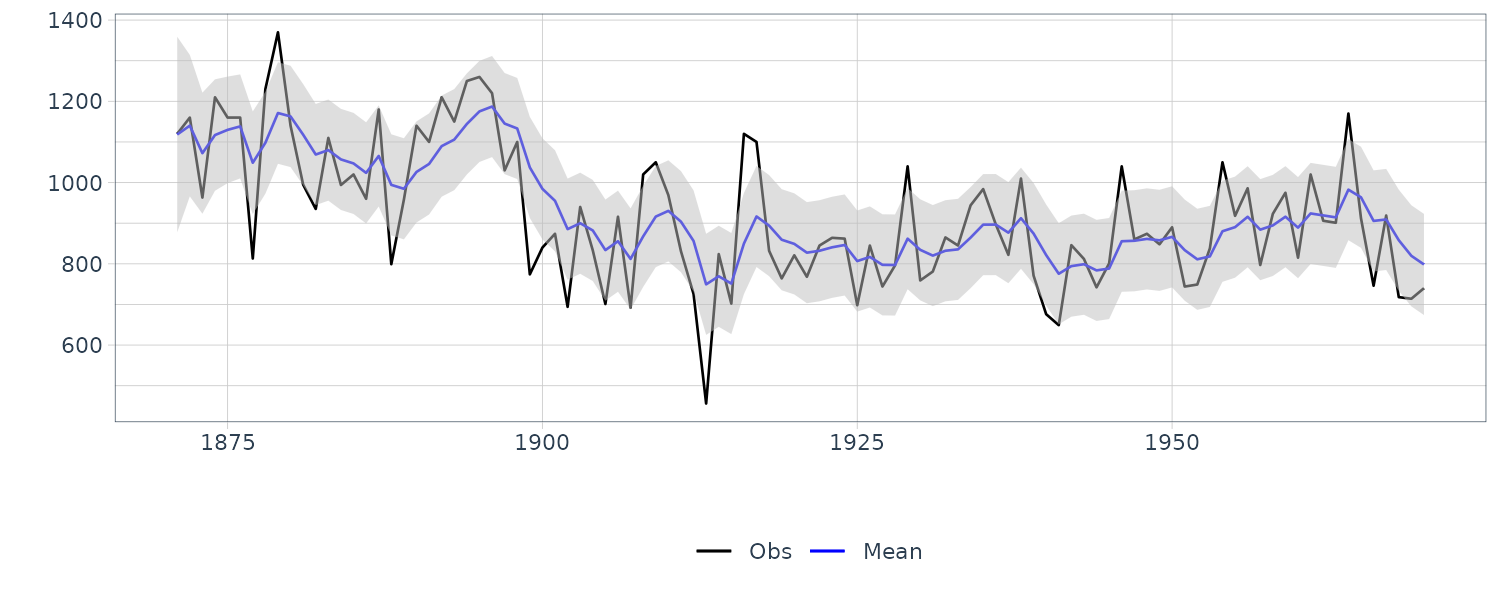

We will now go through examples using the dlm library.

Example: Local-Level Model Case

The local-level model is defined as follows:

\[\begin{aligned} x_{t} &= x_{t-1} + w_{t}\\ y_{t} &= x_{t} + v_{t} \end{aligned}\] \[\begin{aligned} w_{t} &\sim N(0, W)\\ v_{t} &\sim N(0, V) \end{aligned}\]The above model corresponds to the linear Gaussian state-space model that has the settings:

\[\begin{aligned} p &= 1\\ \textbf{G}_{t} &= \begin{bmatrix} 1 \end{bmatrix}\\ \textbf{F}_{t} &= \begin{bmatrix} 1 \end{bmatrix} \end{aligned}\]Furthermore, for simplicity, we assume a time-invariant model:

\[\begin{aligned} W_{t} &= W\\ V_{t} &= V \end{aligned}\]The state equation for the local-level model states that the current observations are roughly the same as the previous ones and that there is no particular time pattern. The local-level model is similar to the AR(1) model with \(\phi = 1\). Such a coefficient leads to the nonstationary time series for the state known as a random walk. For this reason, the local-level model is also called a random walk plus noise model.

If there is no particular knowledge of a prior distribution, mean is set to an arbitrary finite value such as 0 or observations’ average, whereas variance is set to an arbitrary finite value such as a sufficiently large value according to the data. Even such a pragmatic policy does not cause a problem.

Using library dlm in R:

library(dlm)

mod <- dlmModPoly(order = 1)

> str(mod)

List of 10

$ m0 : num 0

$ C0 : num [1, 1] 1e+07

$ FF : num [1, 1] 1

$ V : num [1, 1] 1

$ GG : num [1, 1] 1

$ W : num [1, 1] 1

$ JFF: NULL

$ JV : NULL

$ JGG: NULL

$ JW : NULL

- attr(*, "class")= chr "dlm" We set order = 1 because the local-level model is generally a polynomial model of order one. The arguments dW and dV indicate the variances \(W\) and \(V\) of the state and observation noises, respectively. After the setting of the default value to them, they are updated to appropriate values through the specification of parameter values to be described later. The arguments m0 and C0 indicate the mean \(m_{0}\) and variance \(C_{0}\) of the prior distribution, respectively. While the default values 0 and \(10^{7}\) are set to them, the mean is finite and the variance is sufficiently larger than the data variance var(Nile) = 28,638. Hence, we need not update them.

The return values from dlmModPoly() are m0, C0, FF, V, GG, W, JFF, JV, JGG, and JW. In these elements, those with a name starting from J in the second half are used to specify the time-varying model. Since this example assumes the time-invariant local-level model, we need not change their settings from the default NULL.

The first-half elements m0, C0, FF, V, GG, and W correspond to:

\[\begin{aligned} \text{m}_{0}, \text{C}_{0}, \text{F}_{t}, V_{t}, \text{G}_{t}, \text{W}_{t} \end{aligned}\]When the model contains unknown parameters, we must specify their values in some manner for time series estimation. In this example, the parameters \(W, V\) are unknown and lack any specific clues. Hence we must specify these values. We attempt to use the maximum likelihood method because the data Nile are of a sufficient quantity.

build_dlm <- function(par) {

mod$W[1, 1] <- exp(par[1])

mod$V[1, 1] <- exp(par[2])

return(mod)

}The user-defined function build_dlm() is prepared for defining and building a model. Specifically, this function updates the unknown parameters \(W, V\) in mod by the values in argument par and returns the entire mod. Since both \(W, V\) are variances and do not take negative values, an exponential transformation is applied to par to ensure that the function optim() can avoid an unnecessary search of the negative region.

When the function build_dlm() references an object mod internally, R copies the mod internally and automatically.

fit_dlm <- dlmMLE(

y = Nile,

parm = c(0, 0),

build = build_dlm,

hessian = TRUE

)

> fit_dlm

$par

[1] 7.291951 9.622437

$value

[1] 549.6918

$counts

function gradient

34 34

$convergence

[1] 0

$message

[1] "CONVERGENCE: REL_REDUCTION_OF_F <= FACTR*EPSMCH"

$hessian

[,1] [,2]

[1,] 2.096148 5.35176

[2,] 5.351760 36.70078The argument parm is the initial searching values for the parameters in the maximum likelihood estimation and we can set the arbitrary finite value to those. The argument build is a user-defined function for defining and building a model. Regarding the algorithm performing the maximum likelihood estimation numerically, the L-BFGS method, a kind of quasi-Newton method, is used by default.

We can derive the standard error for each parameter in the maximum likelihood estimation from the Hessian matrix for the log-likelihood function. The Hessian matrix contains the second-order partial derivatives of a function as its elements. In this example, the log-likelihood function \(l()\) contains the parameters \(W, V\). Hence, the Hessian matrix is as follows:

\[\begin{aligned} H &= -\begin{bmatrix} \frac{\partial^{2}l(W, V)}{\partial W \partial W} & \frac{\partial^{2}l(W, V)}{\partial W \partial V}\\[5pt] \frac{\partial^{2}l(W, V)}{\partial V \partial W} & \frac{\partial^{2}l(W, V)}{\partial V \partial V} \end{bmatrix} \end{aligned}\]When we set the additional argument hessian = TRUE to the function dlmMLE(), it is passed through the function optim(), and the Hessian matrix evaluated at the minimum value of the negative log-likelihood is to be included in the return values. We are doing this because we are finding the maximum in MLE and not the minimum.

However, this example cannot use them directly because of the exponentially transformed parameters. Thus, we use the delta method to obtain the standard error of the parameters after exponential transformation:

\[\begin{aligned} \textrm{var}(e^{\theta}) &\approx \textrm{var}\Big(e^{\hat{\theta}} + \frac{e^{\hat{\theta}}}{1!}(\theta - \hat{\theta})\Big)\\ &= e^{2\hat{\theta}}\textrm{var}(\theta - \hat{\theta})\\ &= e^{2\hat{\theta}}\textrm{var}(\theta) \end{aligned}\]The diagonal elements of \(H^{-1}\) have \(\textrm{var}(\theta)\). Hence, we obtain the standard error of a parameter after exponential transformation as:

\[\begin{aligned} e^{\text{MLE Estimate}} \times \sqrt{\text{diag}(H^{-1})} \end{aligned}\]> exp(fit_dlm$par) * sqrt(diag(solve(fit_dlm$hessian)))

[1] 1280.170 3145.999Building the model with estimates from MLE:

mod <- build_dlm(fit_dlm$par)

> mod

$FF

[,1]

[1,] 1

$V

[,1]

[1,] 15099.8

$GG

[,1]

[1,] 1

$W

[,1]

[1,] 1468.432

$m0

[1] 0

$C0

[,1]

[1,] 1e+07Filtering in R:

dlmFiltered_obj <- dlmFilter(

y = Nile,

mod = mod

)

> str(dlmFiltered_obj, max.level = 1)

List of 9

$ y : Time-Series [1:100] from 1871 to 1970: 1120 1160 963 1210 1160 ...

$ mod:List of 10

..- attr(*, "class")= chr "dlm"

$ m : Time-Series [1:101] from 1870 to 1970: 0 1118 1140 1072 1117 ...

$ U.C:List of 101

$ D.C: num [1:101, 1] 3162.3 122.8 88.9 76 70 ...

$ a : Time-Series [1:100] from 1871 to 1970: 0 1118 1140 1072 1117 ...

$ U.R:List of 100

$ D.R: num [1:100, 1] 3162.5 128.6 96.8 85.1 79.8 ...

$ f : Time-Series [1:100] from 1871 to 1970: 0 1118 1140 1072 1117 ...

- attr(*, "class")= chr "dlmFiltered"The return value from dlmFilter() is a list with the dlmFiltered class. The elements of the list are y, mod, m, U.C, D.C, a, U.R, D.R, and f.

The elements y, mod, m, U.C, D.C, a, U.R, D.R, and f correspond to:

- the input observations

- a list for model

- mean of filtering distribution

- two singular value decompositions for the covariance matrix of filtering distribution

- mean of one-step-ahead predictive distribution

- two singular value decompositions for the covariance matrix of one-step-ahead predictive distribution

- mean of one-step-ahead predictive likelihood

Since an object corresponding to the prior distribution is added at the beginning of m, U.C, and D.C, their length is one more than that of the observations.

dat <- as_tsibble(Nile)

dat <- dat |>

rename(

y = value

) |>

mutate(

m = dropFirst(dlmFiltered_obj$m),

m_sdev = sqrt(

dropFirst(

as.numeric(

dlmSvd2var(

dlmFiltered_obj$U.C,

dlmFiltered_obj$D.C

)

)

)

),

lower = m + qnorm(0.025, sd = m_sdev),

upper = m + qnorm(0.975, sd = m_sdev)

)We drop a first element corresponding to the prior distribution in advance using the utility function dropFirst() of dlm. Since the covariance of the filtering distribution is decomposed into the elements U.C and D.C, we retrieve the original covariance matrix using the utility function dlmSvd2var() of dlm.

Predicting in R:

dlmForecasted_obj <- dlmForecast(

mod = dlmFiltered_obj,

nAhead = 10

)

# Confirmation of the results

> str(dlmForecasted_obj, max.level = 1)

List of 4

$ a: Time-Series [1:10, 1] from 1971 to 1980: 798 798 798 798 798 ...

..- attr(*, "dimnames")=List of 2

$ R:List of 10

$ f: Time-Series [1:10, 1] from 1971 to 1980: 798 798 798 798 798 ...

..- attr(*, "dimnames")=List of 2

$ Q:List of 10

dat_pred <- new_data(dat, n = nAhead)

dat_pred <- dat_pred |>

mutate(

a = dlmForecasted_obj$a,

a_sdev = sqrt(

as.numeric(

dlmForecasted_obj$R

)

),

lower = a + qnorm(0.025, sd = a_sdev),

upper = a + qnorm(0.975, sd = a_sdev)

)

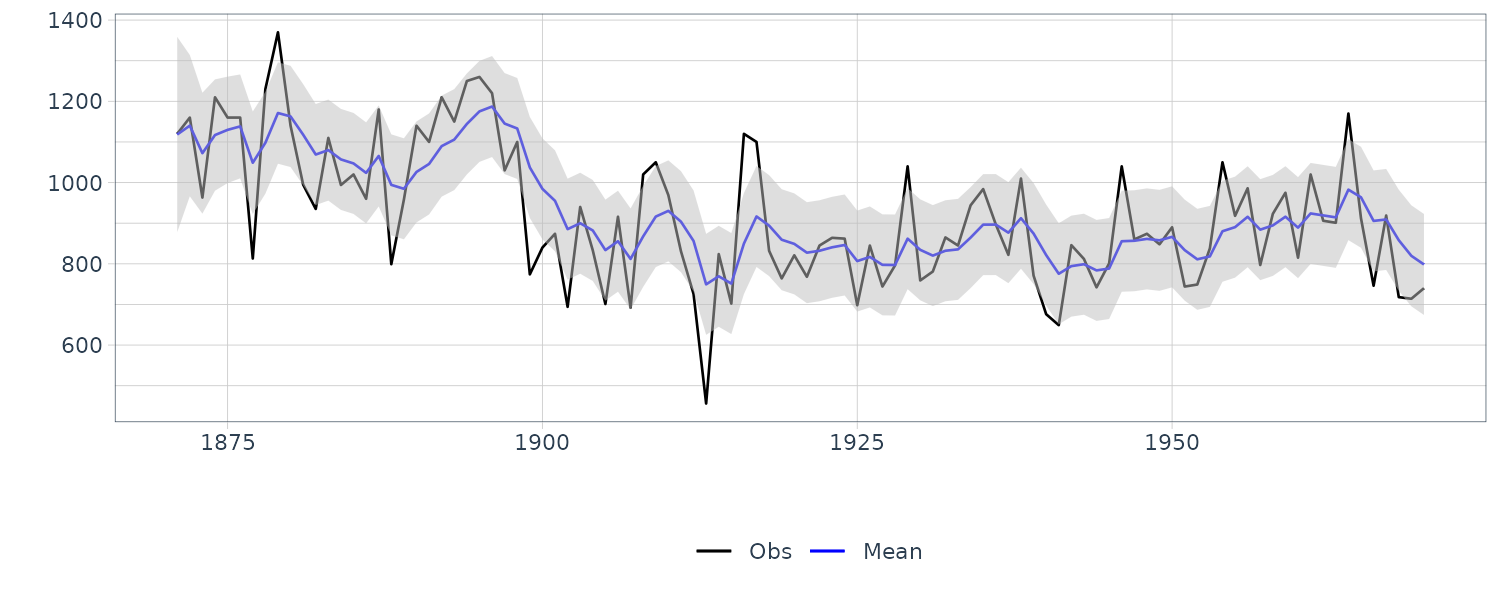

Smoothing in R:

dlmSmoothed_obj <- dlmSmooth(y = Nile, mod = mod)

> str(dlmSmoothed_obj, max.level = 1)

List of 3

$ s : Time-Series [1:101] from 1870 to 1970: 1111 1111 ...

$ U.S:List of 101

$ D.S: num [1:101, 1] 74.1 63.5 56.9 53.1 50.9 ...

dat <- dat |>

mutate(

s = dropFirst(dlmSmoothed_obj$s),

s_sdev = sqrt(

dropFirst(

as.numeric(

dlmSvd2var(

dlmSmoothed_obj$U.S,

dlmSmoothed_obj$D.S

)

)

)

),

s_lower = s + qnorm(0.025, sd = s_sdev),

s_upper = s + qnorm(0.975, sd = s_sdev)

)

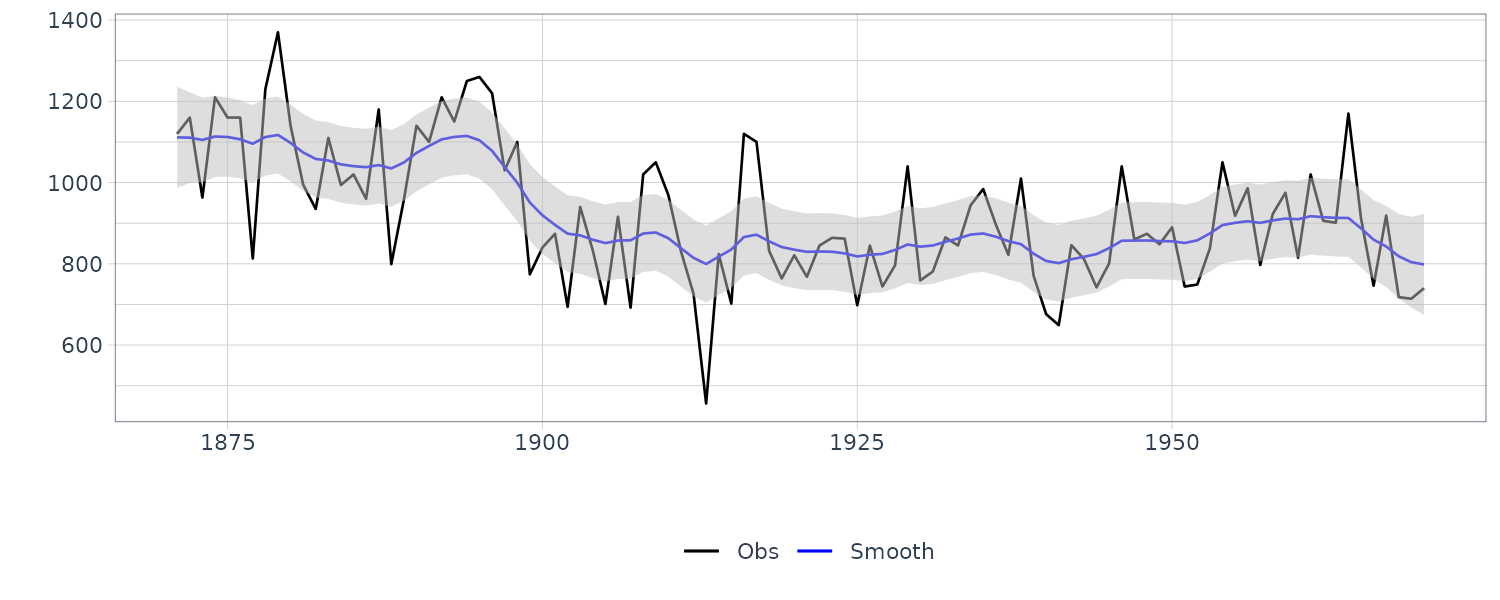

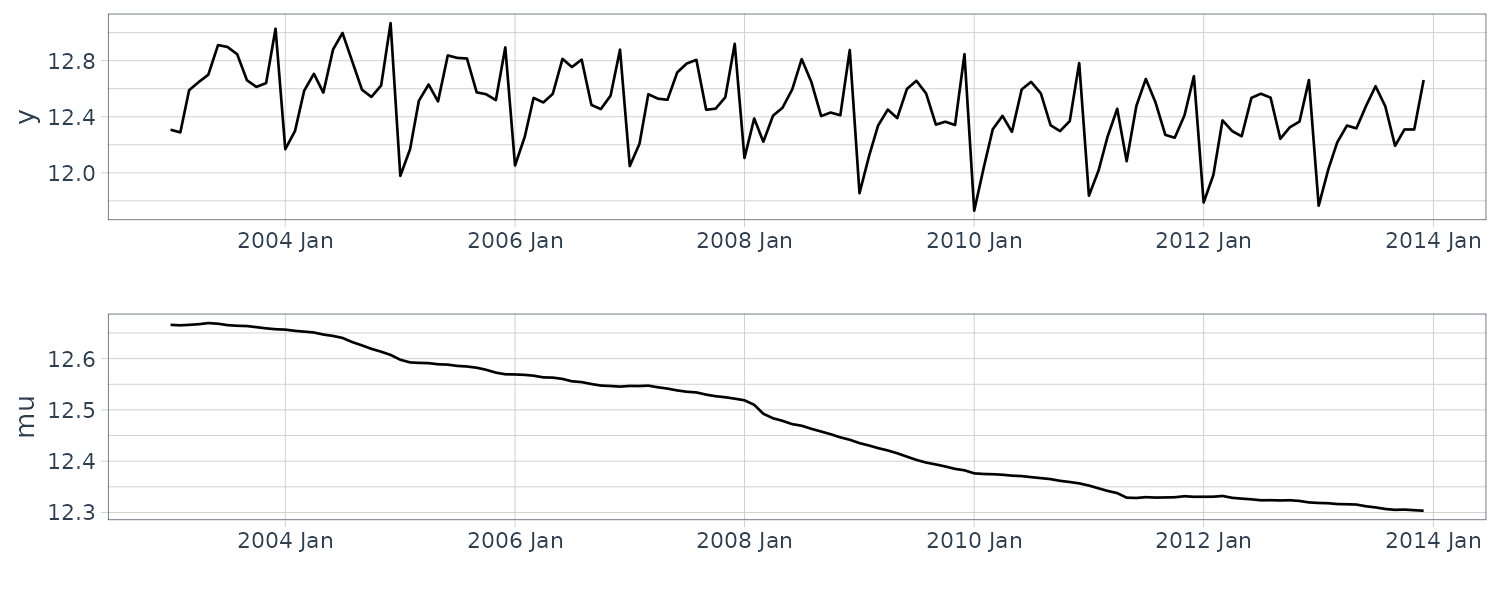

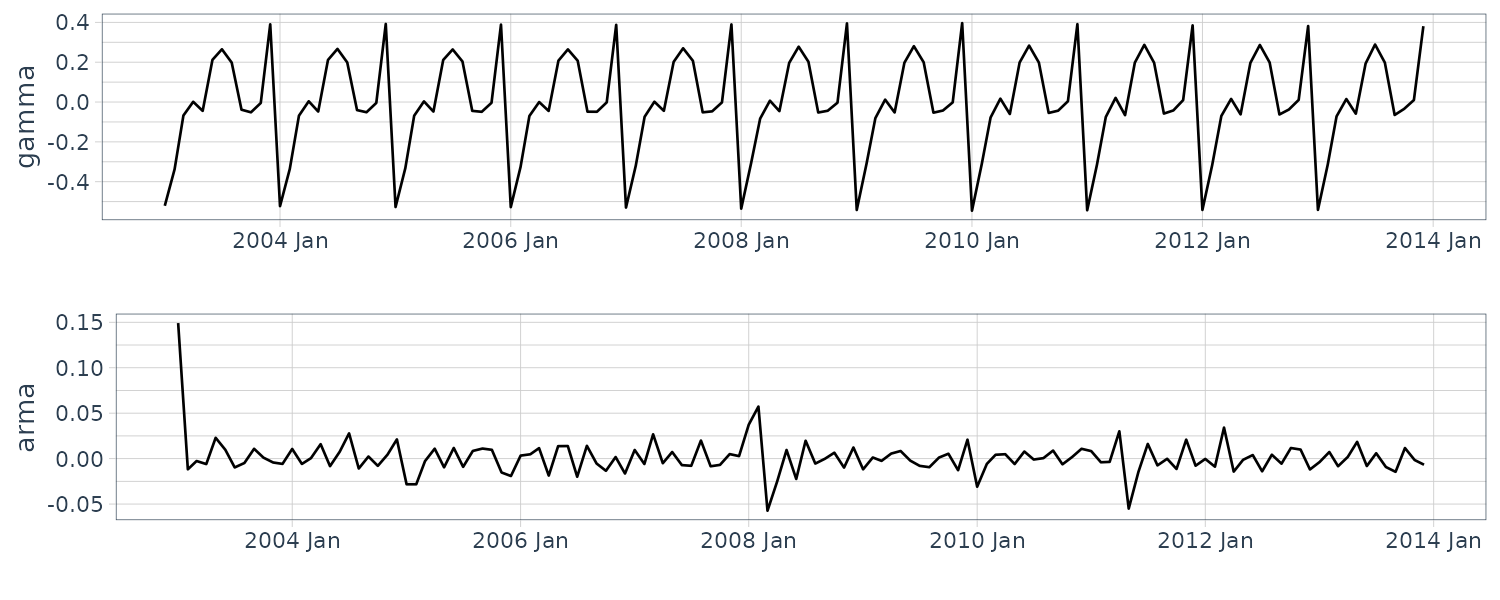

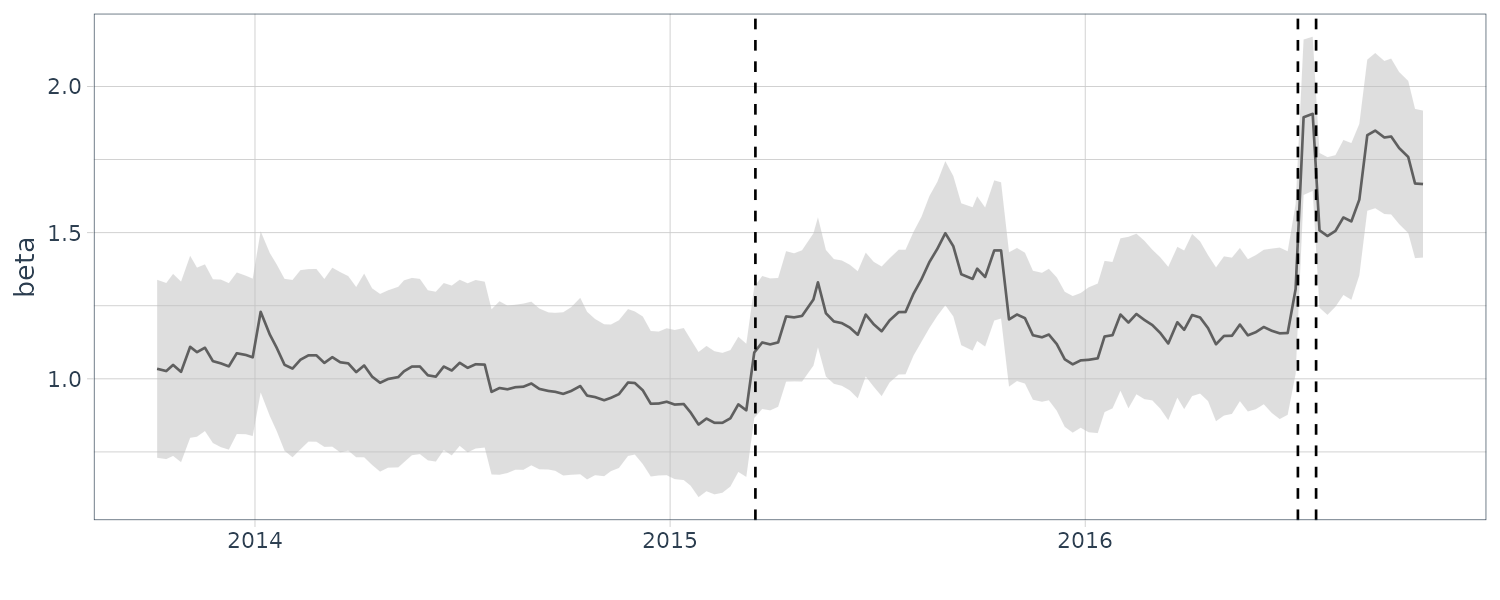

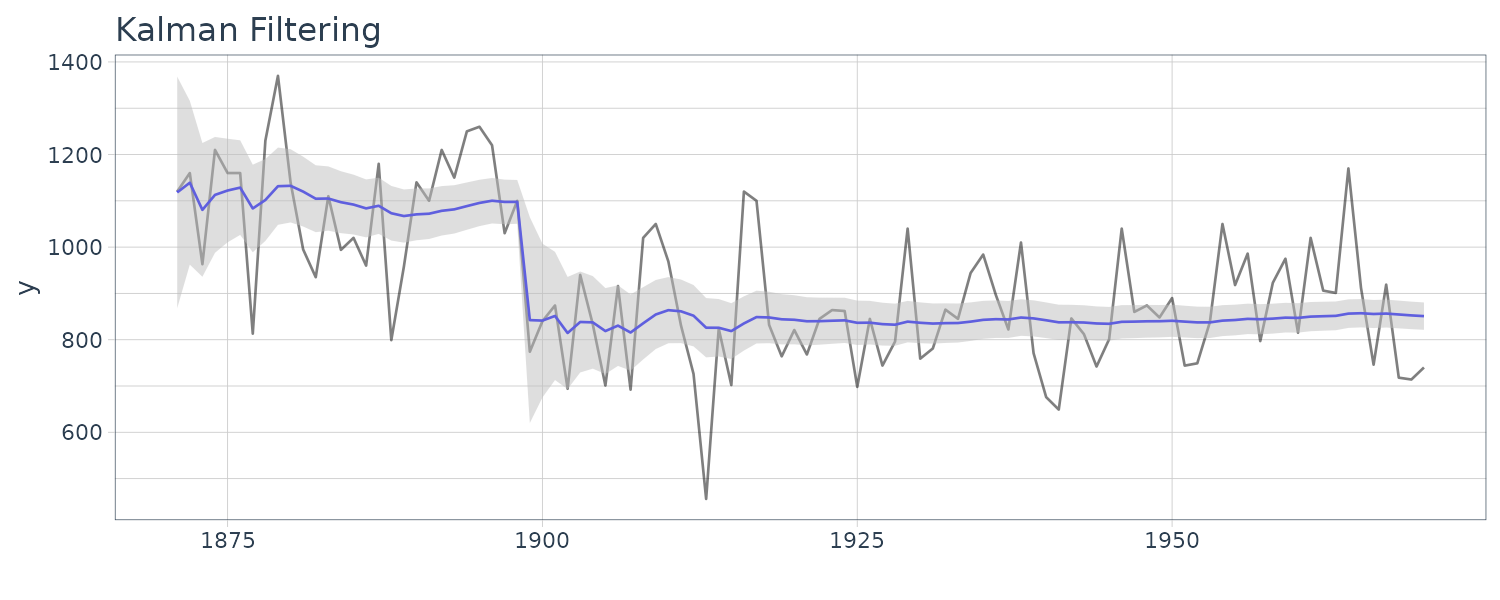

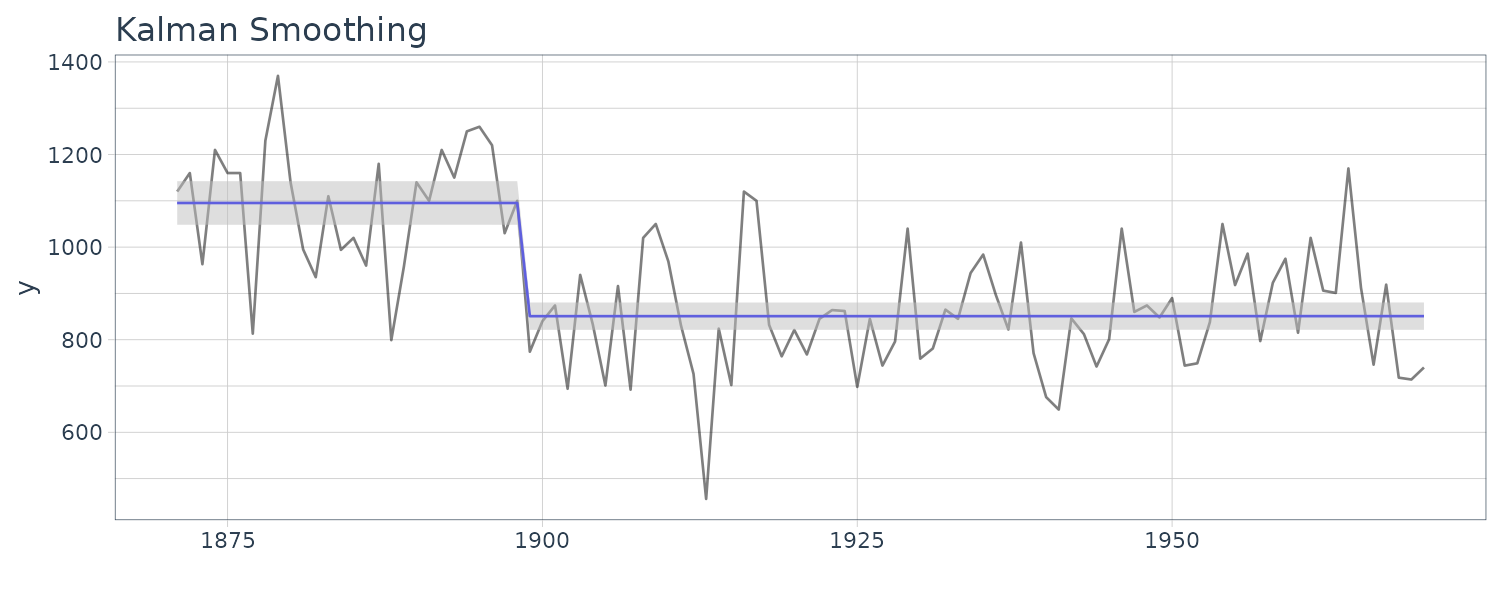

We see that the mean value and the probability intervals become smoother and more narrow, respectively. While the filtering is based on the past and current information, the smoothing can further consider the relative future information; it can generally improve the estimation accuracy. However, even smoothing cannot capture the sudden decrease in 1899. To capture such a situation adequately, we must incorporate particular information into the model we will introduce a method using a regression model later.

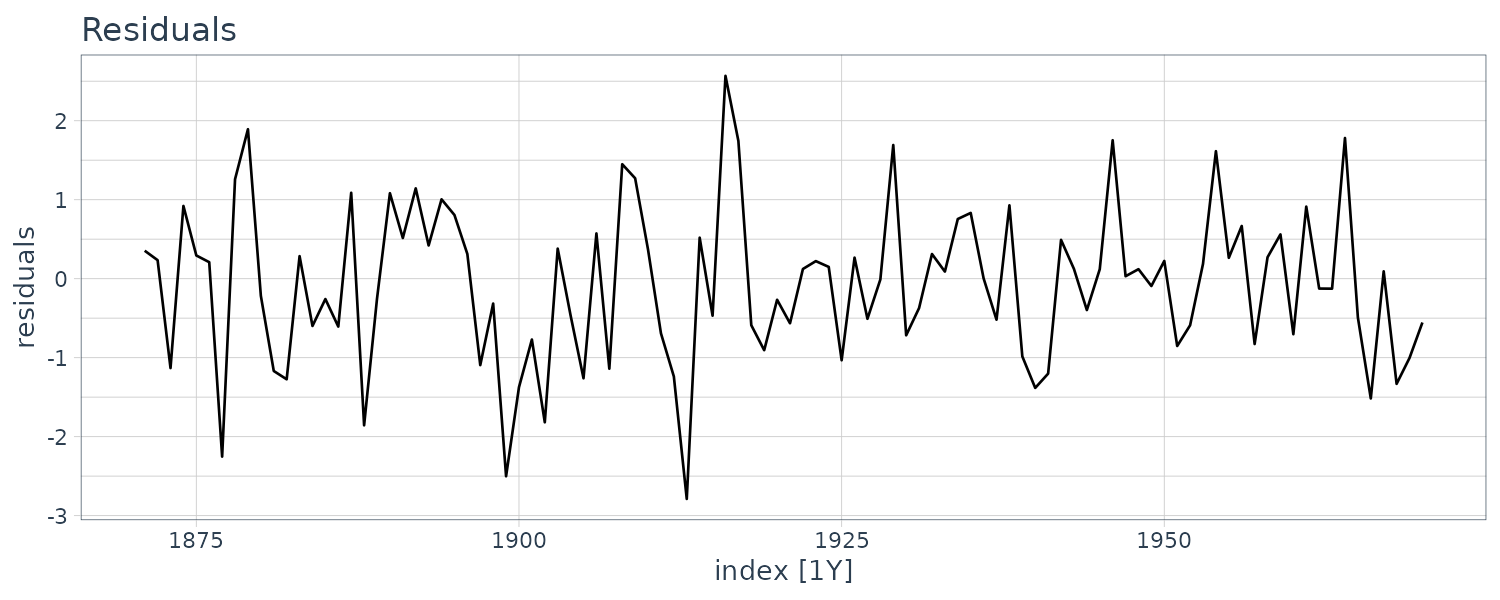

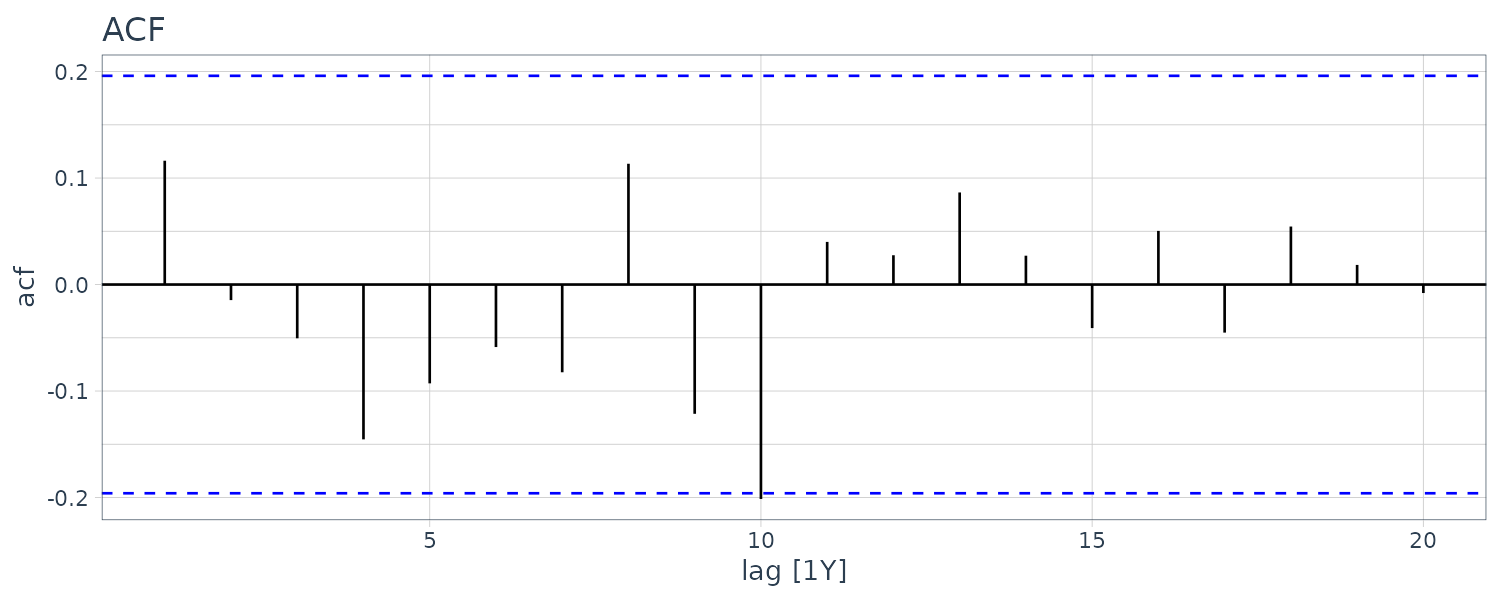

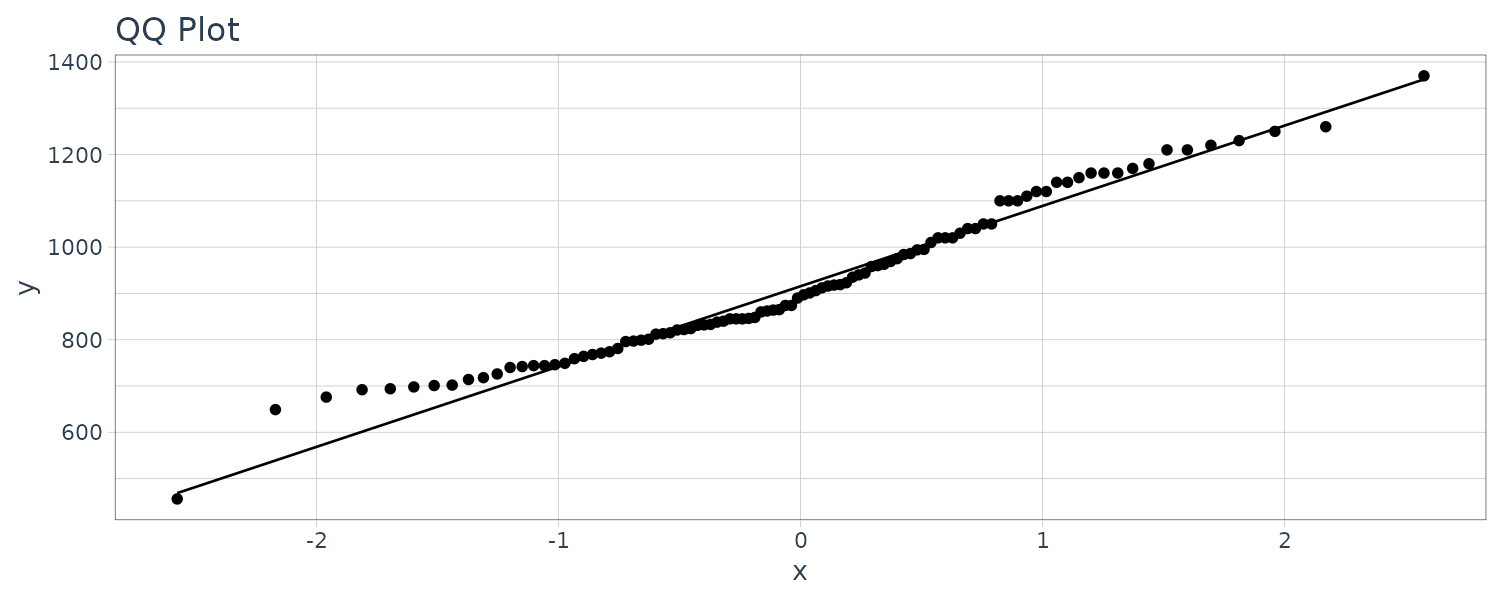

We now diagnose the model with innovations using the library dlm:

dat$residuals <- residuals(

object = dlmFiltered_obj,

sd = FALSE

)

dat |>

autoplot(residuals) +

ggtitle("Residuals") +

theme_tq()

dat |>

ACF(residuals) |>

autoplot() +

ggtitle("ACF") +

theme_tq()

dat |>

ggplot(aes(sample = y)) +

ggtitle("QQ Plot") +

stat_qq() +

stat_qq_line() +

theme_tq()

Local-Trend Model

A local-trend model is also known as a linear growth model and can express a linear slope in the data. Thus, this model is suitable for describing time series data with a trend of rising and falling for a particular term. The local-trend model is generally a polynomial model of order two.

We distinguish each element with a superscript(n) and refer to N as the order of the polynomial model. A state in the polynomial model comprises N elements:

\[\begin{aligned} \begin{bmatrix} x_{t}^{(N)}\\ \vdots\\ x_{t}^{(n)}\\ \vdots\\ x_{t}^{(1)} \end{bmatrix} \end{aligned}\]The state and observation equations for the polynomial model with time-invariant state and observation noises are as follows:

\[\begin{aligned} x_{t}^{(n)} &= x_{t-1}^{(n)} + x_{t-1}^{(n-1)} + w_{t}^{(n)} \end{aligned}\] \[\begin{aligned} w_{t}^{(n)} &\sim N(0, W^{(n)}) \end{aligned}\] \[\begin{aligned} n &= N, \cdots, 2\\[10pt] \end{aligned}\] \[\begin{aligned} x_{t}^{(1)} &= x_{t-1}^{(1)} + w_{t}^{(1)} \end{aligned}\] \[\begin{aligned} w_{t}^{(1)} &\sim N(0, W^{(1)})\\[10pt] \end{aligned}\] \[\begin{aligned} y_{t} &= x_{t}^{(N)} + v_{t} \end{aligned}\] \[\begin{aligned} v_{t} &\sim N(0, V)\\[10pt] \end{aligned}\] \[\begin{aligned} \mathbf{x}_{t} &= \begin{bmatrix} x_{t}^{(N)}\\ \vdots\\ x_{t}^{(2)}\\ x_{t}^{(1)} \end{bmatrix} \end{aligned}\]Setting \(w_{t}^{(n)} = 0\) reveals the relation:

\[\begin{aligned} x_{t}^{(n)} - x_{t-1}^{(n)} &= x_{t-1}^{(n-1)} \end{aligned}\]Based on this property, \(x_{t}^{(N)}\) is interpreted as the level for short term. \(x_{t}^{(N-1)} = x_{t}^{(N)} - x_{t-1}^{(N)}\) is interpreted as the slope for short term. And \(x_{t}^{(N-2)} = x_{t}^{(N-1)} - x_{t-1}^{(N-1)}\) is interpreted as the curvature for short term, etc. Polynomial models of the order N = 3 and more are not generally used because with an increase in N, the estimation result overfits the noise and becomes difficult to interpret.

\[\begin{aligned} \mathbf{G}_{t} &= \begin{bmatrix} 1 & 1 & & &\\ & 1 & 1& &\\ & & \ddots & \ddots &\\ & & & 1&1\\ 0 & 0 & \cdots & 0&1\\ \end{bmatrix} \end{aligned}\] \[\begin{aligned} \mathbf{W}_{t} &= \begin{bmatrix} W^{(N)} & & &\\ &\ddots & &\\ & & W^{(2)}&\\ & & &W^{(1)}\\ \end{bmatrix} \end{aligned}\] \[\begin{aligned} \mathbf{F}_{t} &= \begin{bmatrix} 1 & 0 & \cdots & 0 \end{bmatrix} \end{aligned}\] \[\begin{aligned} V_{t} &= V \end{aligned}\]The polynomial model with the setting \(W^{(N)} = \cdots = W^{(2)} = 0\) is particularly referred to the integrated random walk model. Incidentally, this model is equivalent to taking the N-th difference of the data at preprocessing. We can recognize that the integrated random walk model in the state-space model corresponds to the difference at preprocessing in the ARIMA model.

The example of the local-trend model is illustrated with the seasonal model in the next section.

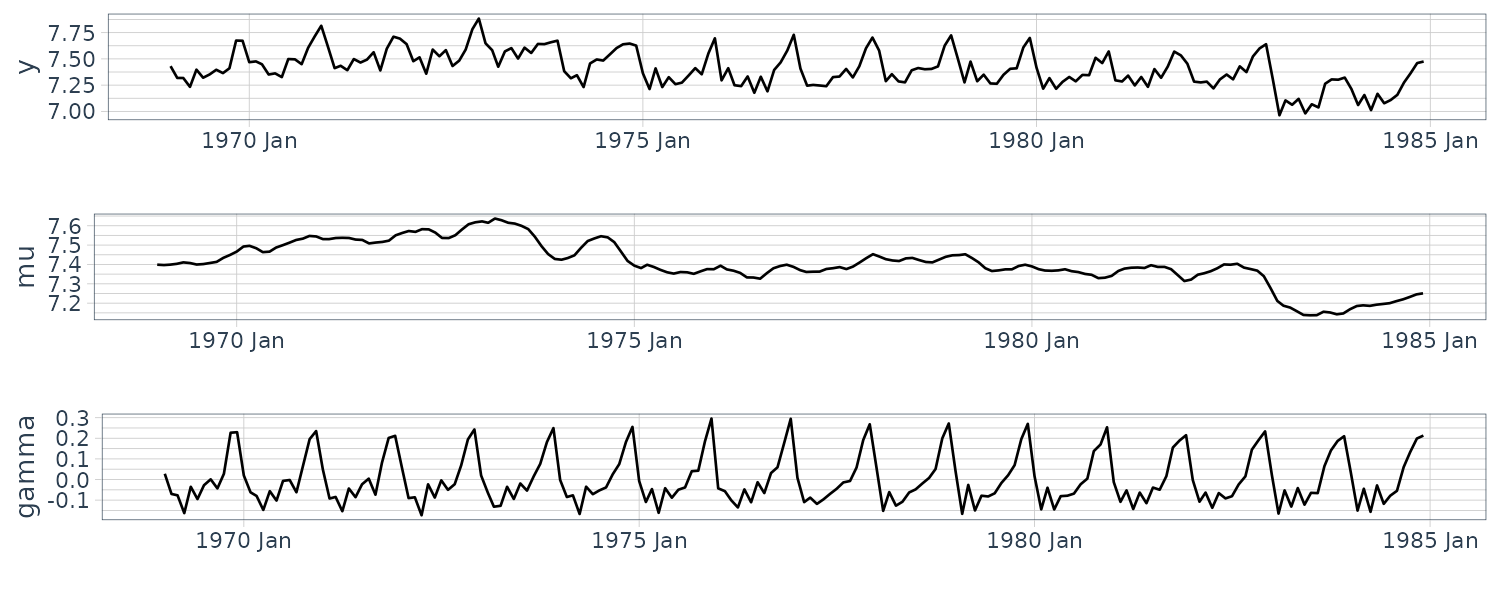

Seasonal Model

The seasonal model is also known as periodical model and is suitable for expressing a model that has a clear periodic pattern in the observations. This section explains two approaches for defining the model: time domain and frequency domain approaches.

Approach from the Time Domain

Let the cycle be s and the seasonal component be \(x_{t}\). Then, such a property is expressed as follows:

\[\begin{aligned} \sum_{t=1}^{s}x_{t} &= \text{any constant that does not differ from the sum in another cycle} \end{aligned}\]The constant can be considered as 0. When we assume that such a fluctuation has a normal distribution with mean 0, the equation becomes as follows:

\[\begin{aligned} \sum_{t=1}^{s}x_{t} &= w_{t} \end{aligned}\] \[\begin{aligned} w_{t} &\sim N(0, W) \end{aligned}\]This equation imposes a constraint on the seasonality.

Next, as a specific explanation, consider the data with quarter seasonality. Let the seasonal component at time point t be:

\[\begin{aligned} \begin{bmatrix} x_{t-4}\\ x_{t-3}\\ x_{t-2}\\ x_{t-1} \end{bmatrix} &= \begin{bmatrix} x_{t-1}^{(4Q)}\\ x_{t-1}^{(1Q)}\\ x_{t-1}^{(2Q)}\\ x_{t-1}^{(3Q)} \end{bmatrix} \end{aligned}\]The time transitions of these elements are expressed as follows:

\[\begin{aligned} x_{t}^{(1Q)} \leftarrow x_{t-1}^{(4Q)}\\ x_{t}^{(2Q)} \leftarrow x_{t-1}^{(1Q)}\\ x_{t}^{(3Q)} \leftarrow x_{t-1}^{(2Q)}\\ x_{t}^{(4Q)} \leftarrow x_{t-1}^{(3Q)} \end{aligned}\]Recalling that the value of 4Q can be defined by those of 1Q, 2Q, and 3Q from the constraint. We can further reduce the above equations. The right-hand side can be rewritten with the values of 1Q, 2Q, and 3Q:

\[\begin{aligned} x_{t}^{(1Q)} &\leftarrow -x_{t-1}^{(1Q)} -x_{t-1}^{(2Q)} -x_{t-1}^{(3Q)} + w_{t}\\ x_{t}^{(2Q)} &\leftarrow x_{t-1}^{(1Q)}\\ x_{t}^{(3Q)} &\leftarrow x_{t-1}^{(2Q)}\\ \end{aligned}\]Finally, we generally define the state and observation equations based on the above descriptions. The state and observation equations for the seasonal model in the time-domain approach, with time-invariant state and observation noises:

\[\begin{aligned} \textbf{x}_{t} &= \begin{bmatrix} x_{t}^{(1)}\\ x_{t}^{(2)}\\ \vdots\\ x_{t}^{(s-1)} \end{bmatrix} \end{aligned}\] \[\begin{aligned} \textbf{G}_{t} &= \begin{bmatrix} -1 & \cdots & -1 & -1\\ 1 & & &\\ & \ddots & &\\ & & 1 & \end{bmatrix} \end{aligned}\] \[\begin{aligned} \textbf{W}_{t} &= \begin{bmatrix} W & & &\\ & 0 & &\\ & & \ddots &\\ & & &0 \end{bmatrix} \end{aligned}\] \[\begin{aligned} \textbf{F}_{t} &= \begin{bmatrix} 1 & 0 & \cdots &0 \end{bmatrix} \end{aligned}\] \[\begin{aligned} V_{t} &= V \end{aligned}\]Approach from the Frequency Domain

This approach is based on Fourier series expansion and can easily adjust the number \(N\) of frequency components to be considered. While there are various kinds of seasonalities, the gradual seasonality can lead to a more natural interpretation than the complicated one in many cases, and the former is further able to suppress the overfitting to the noise. The setting low \(N\) in this model can achieve such a gradual seasonality and even sometimes improve the marginal likelihood as a result.

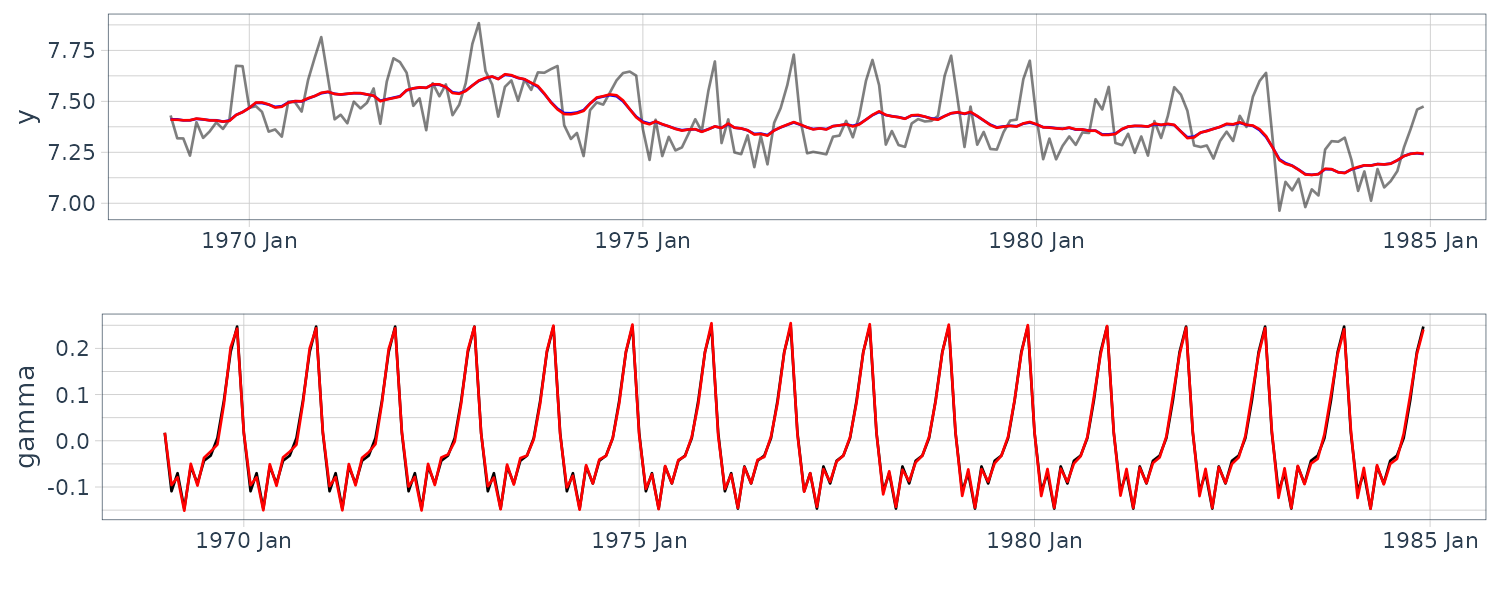

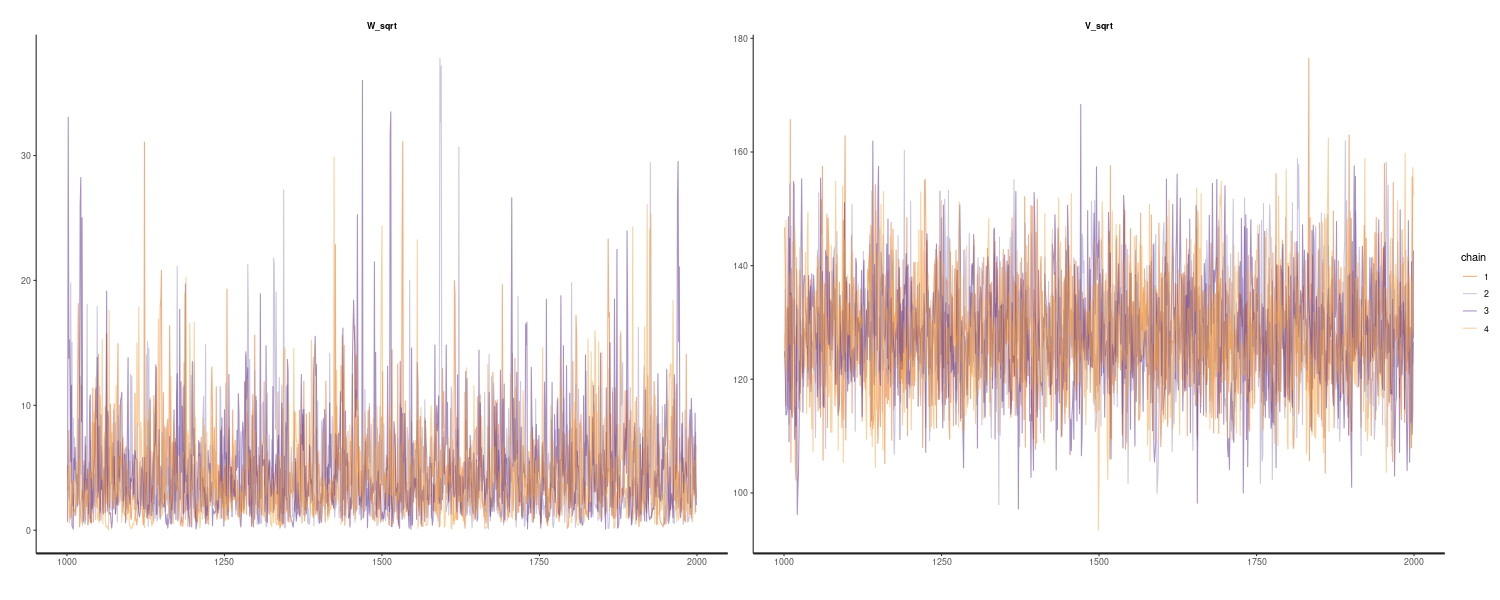

Arbitrary periodic data can generally be represented by the infinite sum of its frequency components.